WebRTC (Web Real-Time Communication) is an industry effort to enhance the web browsing model. It lets browsers securely access input devices such as webcams and microphones and exchange realtime media directly with other browsers, peer to peer.

Traditional web architecture is based on the client-server paradigm, where a client sends an HTTP request to a server and gets a response containing the requested information. In contrast, WebRTC allows data to be exchanged among N peers, which send it directly to each other rather than through a server in the middle.

WebRTC is built into modern browsers, so you don't need third-party software or plug-ins to use it. You access it through the browser's WebRTC JavaScript API.

In this article, you’ll learn how and when to use WebRTC, and how it compares to the capabilities of WebSockets.

WebRTC use cases

As a technology, WebRTC has broad applicability. In this section, we’ll cover different use cases where WebRTC is a good choice.

Peer-to-peer video, audio, and screen sharing

WebRTC was originally created to facilitate peer-to-peer communication over the internet, especially for video and audio calls. It's now used for many other purposes too, including text-based chat, file sharing, and screen sharing. Products such as Microsoft Teams, Skype, Slack, and Google Meet use WebRTC, and it has also found a place in EdTech and healthcare.

File exchange

WebRTC can be used to share files in various formats, even without a video or audio connection. WebTorrent is an example of a file-sharing app built on top of the WebRTC architecture.

Internet of Things

IoT devices are embedded with software and sensors that make it possible to process data and exchange information with other devices on the internet or on a network. WebRTC is helpful when there is a need to send or receive data in real time. For instance, if a drone needs to send video or audio in realtime, WebRTC can make that possible.

Surveillance is another IoT example where WebRTC is a strong choice. Think of baby monitors, nanny cams, doorbells, and home cameras. In these scenarios, WebRTC makes it easy to stream video and audio information to smartphones and laptops.

Entertainment and audience engagement

WebRTC is used for entertainment and audience engagement, including augmented reality, virtual reality, and gaming. For example, Google's cloud gaming platform Stadia (discontinued in January 2023) used WebRTC under the hood.

Realtime language processing

Realtime language processing includes live closed captions, transcriptions, and automatic translations. By combining the Web Speech API with WebRTC's data channels, transcripts can be sent across platforms in realtime. A good example of this is seen in YouTube and Google Meet, where closed captions are generated automatically on demand.

Key features of WebRTC

WebRTC consists of several interrelated APIs. We will now look closely at some of the key ones: MediaStream, RTCPeerConnection, and RTCDataChannel.

More details about all WebRTC interfaces are available here.

MediaStream

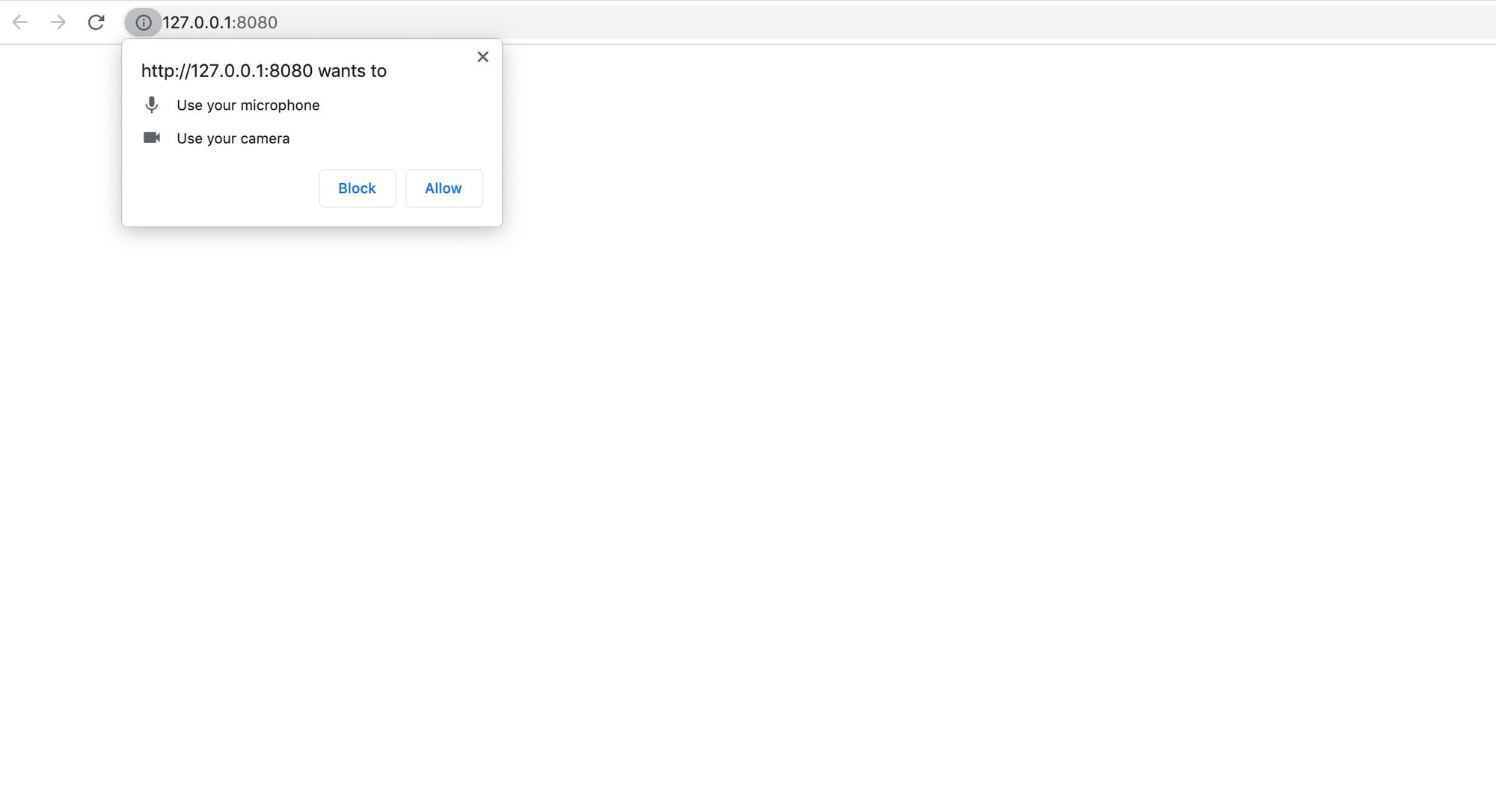

The MediaStream interface is designed to let you access streams of media from local input devices like cameras and microphones. It serves as a way to manage actions on a data stream, such as recording, sending, resizing, and displaying the stream's content. To use a media stream, the application must request access from the user through the navigator.mediaDevices.getUserMedia() method. With this method, you can specify whether you need access to video only, audio only, or both.

To test this on a web page, you need a web server. Browsers only expose getUserMedia() in secure contexts, such as pages served over HTTPS or from localhost, and depending on the browser, opening the HTML file directly from disk may not give the page access to cameras or microphones. A simple local Node.js server solves this.

To get started, download and install Node.js. Then open a terminal or command line interface and run npm install -g node-static. This installs a static command that serves the files in the current directory. Next, navigate to (or create) the directory that will hold the HTML files you want to serve.

In that directory, create a file called index.html, and add the following:

<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="utf-8" />
    <title>MediaStream</title>
  </head>
  <body>
    <video autoplay></video>
    <script src="index.js"></script>
  </body>
</html>

Then create an index.js file in the same directory and add the following:

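// Ask for access to both the camera and the microphone.
const streamOptions = {
  video: true,
  audio: true
};

// Called when the user grants access: show the live stream in the <video> element.
function mediaStreamSuccess(stream) {
  const video = document.querySelector('video');
  video.srcObject = stream;
}

// Called when access fails, e.g. the user denies permission or no device is found.
function mediaStreamError(error) {
  alert('Could not access the camera or microphone: ' + error.name);
  console.log(error);
}

if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
  alert('Sorry, your browser does not support getUserMedia.');
} else {
  navigator.mediaDevices.getUserMedia(streamOptions)
    .then(mediaStreamSuccess)
    .catch(mediaStreamError);
}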

In the terminal, from the same directory, run static. This starts a local server, which listens on port 8080 by default. Then open http://127.0.0.1:8080 in your browser, and you should see a permission prompt for both the camera and the microphone.

Once the permissions are granted, you should see a video feed with audio capabilities.
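The streamOptions object in index.js simply asks for a camera and a microphone. getUserMedia() also accepts per-track constraints, such as a preferred resolution. The helper below is a minimal sketch: the function name buildStreamOptions and its parameters are hypothetical, while the { ideal: ... } syntax is the standard MediaTrackConstraints form, which lets the browser pick the closest resolution the camera supports.

```javascript
// Hypothetical helper: builds a MediaStreamConstraints object for
// navigator.mediaDevices.getUserMedia().
function buildStreamOptions({ video = true, audio = true, width, height } = {}) {
  if (!video && !audio) {
    // getUserMedia() rejects with a TypeError when neither kind of media
    // is requested, so fail early with a clearer message.
    throw new TypeError('Request at least one of video or audio');
  }
  let videoConstraints = video;
  if (video && (width || height)) {
    // "ideal" values are preferences, not hard requirements.
    videoConstraints = {};
    if (width) videoConstraints.width = { ideal: width };
    if (height) videoConstraints.height = { ideal: height };
  }
  return { video: videoConstraints, audio };
}

// Browser usage (replaces streamOptions in index.js):
// navigator.mediaDevices.getUserMedia(buildStreamOptions({ width: 1280, height: 720 }))
//   .then(mediaStreamSuccess)
//   .catch(mediaStreamError);
```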

RTCPeerConnection

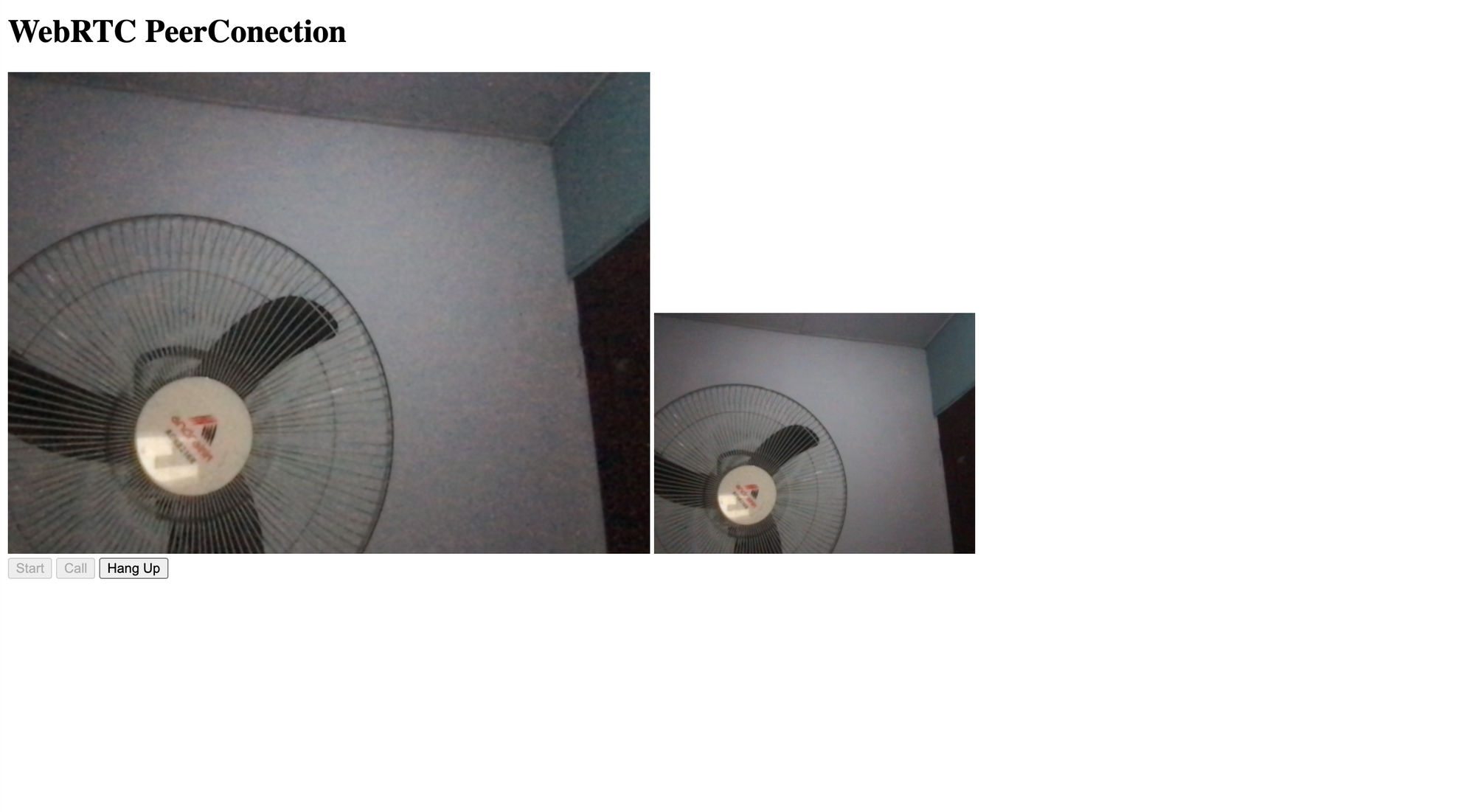

The RTCPeerConnection object is the main entry point to the WebRTC API. It's what allows you to start a connection, connect to peers, and attach media streams.

Typically, connecting to another browser requires finding where that browser is located on the network. This is usually expressed as an IP address and a port number, which act like a street address for your destination. A device's IP address and port let other internet-connected devices send data directly to it, and RTCPeerConnection is built on top of this: it gathers candidate addresses for the local device (ICE candidates) and exchanges them with the remote peer. When devices sit behind NATs or firewalls, STUN or TURN servers may be needed to discover or relay a reachable address.
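To make the connection process more concrete, here is a minimal sketch of the offer/answer exchange between two RTCPeerConnection objects in the same page (a "loopback" call). Assumptions: connectPeers is a hypothetical helper, and in a real application the two peers run in different browsers, so the session descriptions and ICE candidates (descriptions of possible IP address and port pairs for reaching a peer) would travel over a signaling channel instead of being handed across directly.

```javascript
// Hypothetical helper: negotiates a connection between two peer connections
// that live in the same page.
async function connectPeers(caller, callee) {
  // Forward every ICE candidate each side discovers to the other side.
  // A null candidate marks the end of gathering and is skipped here.
  caller.onicecandidate = (event) => {
    if (event.candidate) {
      callee.addIceCandidate(event.candidate)
        .catch((err) => console.error('callee addIceCandidate failed:', err));
    }
  };
  callee.onicecandidate = (event) => {
    if (event.candidate) {
      caller.addIceCandidate(event.candidate)
        .catch((err) => console.error('caller addIceCandidate failed:', err));
    }
  };

  // Caller creates an SDP offer and applies it locally, callee applies it remotely.
  const offer = await caller.createOffer();
  await caller.setLocalDescription(offer);
  await callee.setRemoteDescription(offer);

  // Callee answers, and the caller applies the answer to complete negotiation.
  const answer = await callee.createAnswer();
  await callee.setLocalDescription(answer);
  await caller.setRemoteDescription(answer);
}

// Browser usage (not runnable in Node):
// const caller = new RTCPeerConnection();
// const callee = new RTCPeerConnection();
// caller.createDataChannel('demo'); // an offer needs at least one track or data channel
// connectPeers(caller, callee);
```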

If you'd like to see RTCPeerConnection in action, you can download the code in this GitHub Gist. Then, in your terminal, run static from the directory containing the downloaded files and open peerconnection.html through the local server (for example, http://127.0.0.1:8080/peerconnection.html). You should see something like the image below:

RTCDataChannel

The RTCDataChannel API is designed to provide a transport service that allows web browsers to exchange generic data in a bidirectional, peer-to-peer fashion. It uses the Stream Control Transmission Protocol (SCTP) as a way to send data on top of an existing peer connection.

Data channels are created with the createDataChannel() method of an RTCPeerConnection object. Each call to createDataChannel() creates a new data channel within the same SCTP association:

// Create a peer connection and open a data channel on it.
const peerConnection = new RTCPeerConnection();
const dataChannel = peerConnection.createDataChannel('myLabel', {});

dataChannel.onerror = function (event) {
  // event is an RTCErrorEvent; the details are in event.error.
  console.log('Data Channel error:', event.error);
};

dataChannel.onmessage = function (event) {
  // The received payload is in event.data.
  console.log('Data Channel message:', event.data);
};

dataChannel.onopen = function () {
  // send() only works once the channel is open.
  console.log('Data Channel open for sending messages!');
  dataChannel.send('Hello World');
};

dataChannel.onclose = function () {
  console.log('Data Channel has been closed.');
};

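Data channel events are straightforward to use. You can only send messages after the open event has fired, and the channel opens only once the peer connection has been negotiated with a remote peer through the signaling process described later in this article. On its own, the snippet above never reaches that state. The send() method accepts a string, a Blob, an ArrayBuffer, or an ArrayBufferView such as a Uint8Array. Pass your data to send(), and the browser takes care of the rest.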
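File sharing, one of the use cases mentioned earlier, usually means sending a file as many smaller binary messages rather than in one large send() call. The sketch below splits an ArrayBuffer into chunks and reassembles them on the receiving side. chunkArrayBuffer and joinChunks are hypothetical helpers, and the 16 KiB chunk size is an assumed conservative value, not a limit stated in this article.

```javascript
// Hypothetical helper: split an ArrayBuffer into slices of at most chunkSize
// bytes, each of which can be passed to dataChannel.send().
function chunkArrayBuffer(buffer, chunkSize = 16 * 1024) {
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError('chunkSize must be a positive integer');
  }
  const chunks = [];
  for (let offset = 0; offset < buffer.byteLength; offset += chunkSize) {
    // slice() copies bytes [offset, offset + chunkSize), clamped to the end.
    chunks.push(buffer.slice(offset, offset + chunkSize));
  }
  return chunks;
}

// Hypothetical helper for the receiving side: concatenate received chunks
// (in arrival order) back into a single ArrayBuffer.
function joinChunks(chunks) {
  const total = chunks.reduce((sum, chunk) => sum + chunk.byteLength, 0);
  const result = new Uint8Array(total);
  let offset = 0;
  for (const chunk of chunks) {
    result.set(new Uint8Array(chunk), offset);
    offset += chunk.byteLength;
  }
  return result.buffer;
}

// Browser usage, assuming `file` comes from an <input type="file"> element:
// const buffer = await file.arrayBuffer();
// for (const chunk of chunkArrayBuffer(buffer)) dataChannel.send(chunk);
```

A production version would also tell the receiver how many bytes to expect (for example, in an initial string message) and pause sending while dataChannel.bufferedAmount is high, resuming on the bufferedamountlow event.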

How does WebRTC compare to WebSockets?

WebSocket is a technology that enables bidirectional, full-duplex communication between a web client and a web server over a persistent, single-socket connection. This allows the client and the server to exchange low-latency, event-driven messages at will, without any need for polling. It’s important to note that the WebSocket protocol works on top of TCP, a protocol which is reliable (guaranteed ordering and delivery).

In comparison, WebRTC enables web browsers to communicate in a peer-to-peer fashion. WebRTC can work over TCP, but it's primarily used over UDP, which is faster than TCP but less reliable (best-effort delivery, with no guarantees of ordering or delivery).

If data integrity is crucial for your use case, you should use WebSockets to benefit from TCP’s reliability. On the other hand, if speed is more important and losing some packets is acceptable for your use case (as is often the case with video and audio streaming), WebRTC over UDP is a better choice.
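Within WebRTC itself, this trade-off can also be tuned per data channel: the RTCDataChannelInit options passed to createDataChannel() control whether messages arrive in order and how many times (or for how long) lost messages are retransmitted. Audio and video tracks are carried separately over RTP, so these options apply only to data channels. The dataChannelOptions helper and its mode names are hypothetical; the option keys ordered, maxRetransmits, and maxPacketLifeTime are the standard ones. maxRetransmits and maxPacketLifeTime cannot be combined on the same channel.

```javascript
// Hypothetical helper: map a reliability mode to RTCDataChannelInit options.
function dataChannelOptions(mode) {
  switch (mode) {
    case 'reliable':
      // Default behaviour: ordered delivery with retransmission (TCP-like).
      return { ordered: true };
    case 'unreliable':
      // No ordering and no retransmissions (UDP-like): lost messages are dropped.
      return { ordered: false, maxRetransmits: 0 };
    case 'timed':
      // Retransmit for at most 500 ms (an arbitrary example value), then give up.
      return { ordered: false, maxPacketLifeTime: 500 };
    default:
      throw new Error(`Unknown reliability mode: ${mode}`);
  }
}

// Browser usage (assumes an existing RTCPeerConnection named peerConnection):
// const chat = peerConnection.createDataChannel('chat', dataChannelOptions('reliable'));
// const positions = peerConnection.createDataChannel('positions', dataChannelOptions('unreliable'));
```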

WebSockets and WebRTC actually often complement each other. WebRTC allows for peer-to-peer communication, but it still needs servers so that clients can exchange metadata to coordinate communication through a process called signaling. However, the WebRTC API itself doesn’t offer a signaling mechanism. This is something you have to implement separately, and WebSockets are a great choice for realtime signaling.
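Because the WebRTC API leaves signaling to you, a signaling server mostly relays messages between peers. The sketch below shows that relay logic without tying it to a particular WebSocket library. SignalingRelay, the room IDs, and the message shape ({ type, ... }) are hypothetical, and each peer's send callback stands in for the send() method of that peer's WebSocket connection.

```javascript
// Hypothetical, transport-agnostic relay: peers join a room, and any offer,
// answer, or ICE candidate a peer sends is forwarded to the other peers in it.
class SignalingRelay {
  constructor() {
    this.rooms = new Map(); // roomId -> Map(peerId -> send callback)
  }

  join(roomId, peerId, send) {
    if (!this.rooms.has(roomId)) this.rooms.set(roomId, new Map());
    this.rooms.get(roomId).set(peerId, send);
  }

  leave(roomId, peerId) {
    const room = this.rooms.get(roomId);
    if (!room) return;
    room.delete(peerId);
    if (room.size === 0) this.rooms.delete(roomId);
  }

  // raw is the JSON text received from a peer, for example
  // {"type":"offer","sdp":"..."} or {"type":"candidate","candidate":{...}}.
  // Returns the number of peers the message was forwarded to.
  relay(roomId, fromPeerId, raw) {
    const message = JSON.parse(raw);
    if (!['offer', 'answer', 'candidate'].includes(message.type)) {
      throw new Error(`Unsupported signaling message type: ${message.type}`);
    }
    const room = this.rooms.get(roomId) ?? new Map();
    // Tag the message with its sender so recipients know who to answer.
    const outgoing = JSON.stringify({ ...message, from: fromPeerId });
    let delivered = 0;
    for (const [peerId, send] of room) {
      if (peerId !== fromPeerId) {
        send(outgoing);
        delivered += 1;
      }
    }
    return delivered;
  }
}
```

In a real server, you would call join() when a WebSocket connects, relay() from its message handler, and leave() when it closes. Authentication and error reporting back to the sender are left out of this sketch.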


What organizations use WebRTC?

WebRTC, as a web standard targeted at browsers, was initially released in 2011. Since then, it has been widely adopted and is supported by all major modern browsers. It's also used by major organizations in applications like Google Meet, Zoom, Skype, Facebook (Chat, Messenger, Live), Gatheround, Discord, Bevy, and Snapchat. There are also numerous open-source projects built with WebRTC.

WebRTC and Ably

Ably is a feature-rich, highly dependable solution that provides robust and easy-to-use APIs to power live and collaborative experiences for millions of concurrently connected devices. You can use our WebSocket APIs to quickly implement dependable signaling mechanisms for WebRTC apps. Get started with a free Ably account, and check out the tutorials listed in the Further reading section below for details.