WebSockets increasingly power realtime applications such as chat, live data streaming, and collaborative platforms. As your application grows, scaling your infrastructure to support more connections becomes crucial for maintaining a smooth and reliable user experience, and for realtime applications a globally consistent experience is especially important. One way to achieve this is efficient load balancing.

In this article, we explore when you might need to use a load balancer in WebSocket environments, the possible challenges in implementation, and best practices for balancing WebSocket traffic.

When do you need load balancing for WebSocket infrastructure?

Not every WebSocket implementation requires a load balancer. In fact, for smaller applications with minimal traffic and simple, localized use cases, a load balancer might be overkill. For instance, if you’re operating a low-traffic WebSocket server that only handles a handful of concurrent connections or is serving users within a single region, manually scaling your infrastructure may be sufficient.

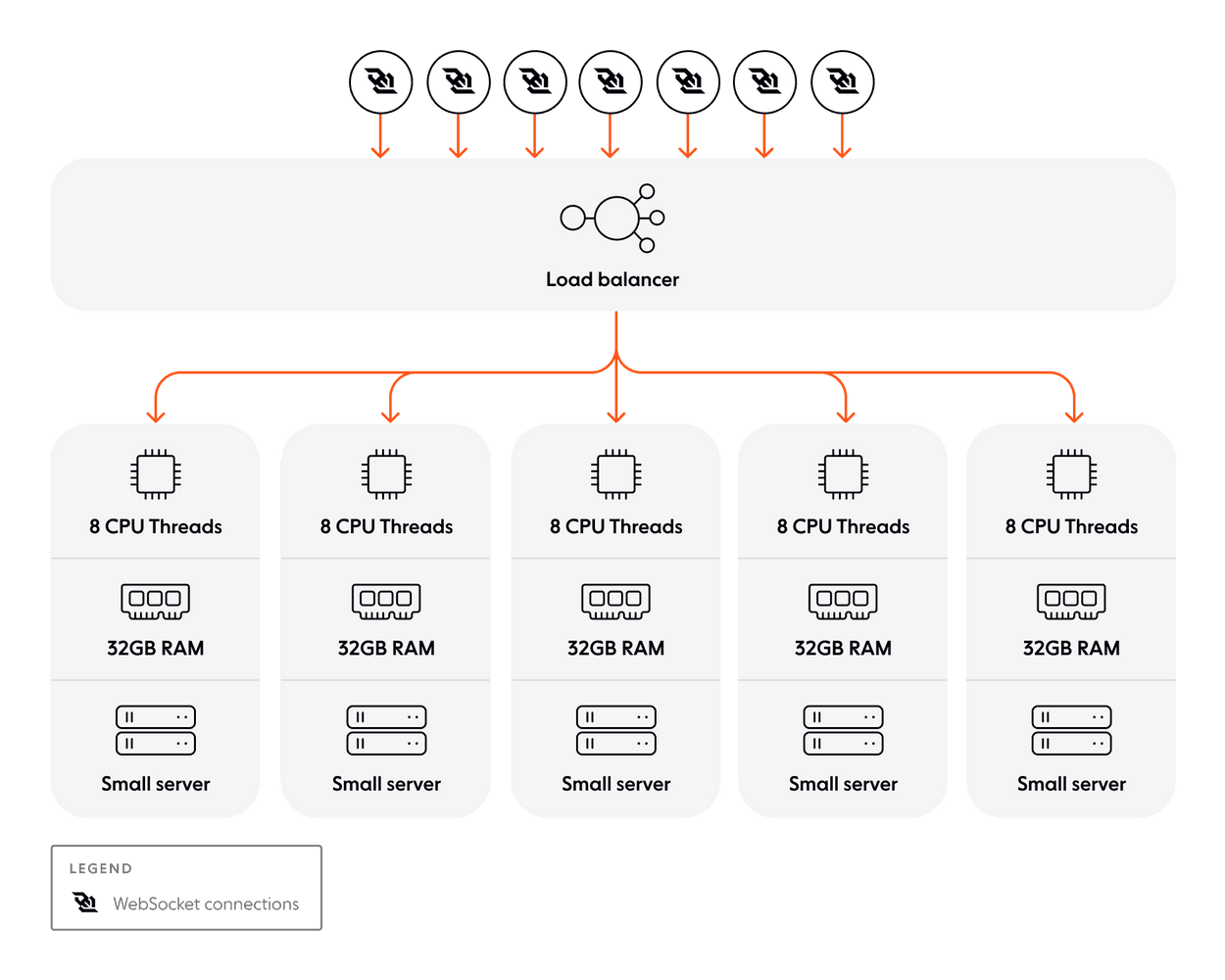

But if you anticipate significant global traffic, load balancing will probably be crucial to your success in scaling your app. Let’s qualify this: there’s more than one way to scale an app - can’t we also scale vertically, and just increase our resources on a single server without introducing a load balancer?

Unfortunately, that’s probably not going to be a viable approach for WebSockets in particular. Some relational databases can fail over to read-only if the primary machine goes offline, but WebSocket is a stateful protocol that depends on a persistent, bi-directional connection to a specific server. So if that one server fails (and it might, especially during a sudden spike in traffic), every open connection drops and there is no backup to reconnect to (you can read more about this in our article about vertical vs horizontal scaling for WebSockets).

With this in mind, here’s when you should consider load balancing WebSockets over multiple servers:

You have a high volume of concurrent connections: When your application begins to handle thousands or millions of simultaneous WebSocket connections, a single server can quickly become overwhelmed. A load balancer is needed to distribute traffic across multiple servers, preventing performance degradation or downtime.

You are serving geographically distributed users: If your application has users spread across different regions, latency can become a major issue. In this case, load balancing helps route users to the closest server or datacenter, reducing response times and improving the overall user experience.

You need redundancy and high availability: If uninterrupted service is critical, load balancing is necessary to provide redundancy and failover. By distributing traffic across multiple servers or regions, your infrastructure becomes more resilient to server or regional failures, ensuring continued service availability.

You are scaling up: As your infrastructure scales horizontally (adding more servers) or globally (expanding across regions), a load balancer is crucial for managing traffic distribution, preventing any single server from becoming a bottleneck.

For example, imagine you're running a live sports streaming platform that broadcasts realtime updates to millions of fans worldwide. During peak events, like the final match of a major tournament, your platform experiences a surge in WebSocket connections from users across multiple regions. Without load balancing, a single server might struggle to handle the increased traffic, resulting in dropped connections and delayed updates for users. By using a load balancer, you can distribute these connections across multiple globally-distributed servers, ensuring low latency and a seamless experience for all of your users, regardless of where they are.

The challenges of load balancing WebSocket connections

Because WebSocket connections are stateful and long-lived, load balancing them presents a few unique challenges that are not shared with traditional HTTP connections. They include:

Connection state and data synchronization: Once a WebSocket connection is established, traffic between the client and server stays on the same server for the life of the connection. That raises a synchronization question: if one user in a chat goes offline, how do we keep online status and messages current across every server in realtime? In the context of load balancing, sticky sessions, which route a returning client back to the same server, are one way of addressing this. But sticky sessions have to be handled carefully: because they tie a client to a specific server, they can lead to uneven load distribution and create hotspots in your infrastructure (algorithms like consistent hashing can help with this).

Handling failover and redundancy: In stateless HTTP-based systems, requests can easily be routed to other servers. With WebSockets, however, the connection has to be re-established with a new server, which can disrupt the communication flow. In the event of a server or regional failure, we want traffic to fail over to a backup server or region with as little disruption to the user experience as possible. As we saw earlier, that failover requires multiple servers, and balancing load across them while keeping latency low and redundancy intact is a complex task. You may need to consider different load balancing algorithms before landing on the best approach for your use case.

Maintaining connection limits: Each WebSocket connection consumes resources on the server, which limits the number of connections a single server can handle. This makes load balancing trickier because it’s not just about evenly distributing traffic but also making sure servers don’t exceed their connection limits. Load balancers need to monitor and intelligently distribute connections while preventing servers from being overloaded.

Keeping global latency low: If you have users across the globe, your load balancer should ensure clients are connected to the server nearest to them, while also managing fallback options when servers or entire regions become unavailable. This becomes increasingly difficult as global traffic grows, potentially leading to high latencies for users far from the datacenter.

Complexity of horizontal scaling: As you continue to scale your WebSocket infrastructure horizontally, each new server increases the complexity of maintaining sticky sessions, managing state, and avoiding connection drops. Without the right balancing strategies, you can quickly run into bottlenecks or imbalances across your infrastructure.
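To make the consistent hashing idea mentioned under connection state more concrete, here is a minimal sketch of a hash ring with virtual nodes. It is an illustration rather than a production balancer: the class name `HashRing`, the server names, and the choice of SHA-256 and 100 virtual nodes per server are all assumptions made for the example.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a stable 64-bit integer (SHA-256 is used only to spread keys)."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Hypothetical consistent-hash ring with virtual nodes."""

    def __init__(self, servers: list[str], vnodes: int = 100) -> None:
        self._vnodes = vnodes
        self._ring: list[tuple[int, str]] = []  # sorted (point, server) pairs
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        # Each server owns many points on the ring, which smooths the distribution.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{server}#{i}"), server))

    def remove(self, server: str) -> None:
        self._ring = [(point, s) for point, s in self._ring if s != server]

    def lookup(self, client_id: str) -> str:
        if not self._ring:
            raise LookupError("no servers in ring")
        # First ring point at or after the client's hash, wrapping around at the end.
        idx = bisect.bisect_left(self._ring, (_hash(client_id), ""))
        return self._ring[idx % len(self._ring)][1]
```

The property that matters for WebSockets is that removing a server only remaps the clients that were assigned to it; everyone else keeps their existing server, so a failure or scale-down does not reshuffle the whole fleet.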

Best practices for load balancing WebSocket traffic

Load balancing best practices are intrinsically tied to horizontal scaling best practices, since load balancers only become an infrastructure consideration when multiple servers are introduced. Between the two, there is bound to be some overlap. That said, here are some load balancer-specific best practices:

Use sticky sessions strategically

As we mentioned earlier, sticky sessions are often essential for WebSocket connections, as they ensure that clients remain connected to the same server throughout their session. However, solely relying on stickiness can lead to uneven load distribution. Consider using a load balancer that prioritizes reconnecting clients to the same server but allows for redistribution if a server becomes overloaded or fails.
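One way to picture "sticky, but not at any cost" is a router that prefers a client's previous server and falls back to the least-utilized server when that one is full or unhealthy. The sketch below is a simplified, in-memory illustration; `Backend`, `StickyBalancer`, and the capacity numbers are hypothetical names and values, not part of any particular load balancer's API.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    max_connections: int
    active: int = 0
    healthy: bool = True

    def has_capacity(self) -> bool:
        return self.healthy and self.active < self.max_connections


class StickyBalancer:
    """Hypothetical router: sticky first, least-utilized as a fallback."""

    def __init__(self, backends: list[Backend]) -> None:
        self.backends = {b.name: b for b in backends}
        self.affinity: dict[str, str] = {}  # client_id -> backend name

    def route(self, client_id: str) -> Backend:
        preferred = self.backends.get(self.affinity.get(client_id, ""))
        if preferred is not None and preferred.has_capacity():
            chosen = preferred  # keep the client on its previous server
        else:
            candidates = [b for b in self.backends.values() if b.has_capacity()]
            if not candidates:
                raise RuntimeError("no backend has spare capacity")
            # Redistribute to the backend with the lowest utilization.
            chosen = min(candidates, key=lambda b: b.active / b.max_connections)
        chosen.active += 1
        self.affinity[client_id] = chosen.name
        return chosen

    def release(self, client_id: str) -> None:
        # Affinity is kept after disconnect so a reconnect can return to the same server.
        name = self.affinity.get(client_id)
        if name in self.backends and self.backends[name].active > 0:
            self.backends[name].active -= 1
```

In a real deployment the affinity would usually be derived from a cookie, a token, or a hash of the client identifier rather than held in one process's memory.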

Use load balancers globally

If you’re operating globally and at scale, consider routing traffic based on proximity to a datacenter or server. Regional load balancers will help to distribute the load while minimizing latency as much as possible. Make sure they have a fallback mechanism to reroute traffic, in case one region fails.
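As a rough sketch of proximity-based routing with a regional fallback, the function below picks the healthy region with the lowest measured latency for a client. The region names and latency figures are made up for illustration, and `pick_region` is a hypothetical helper; real systems typically get this behavior from DNS-based or anycast routing rather than application code.

```python
def pick_region(latency_ms: dict[str, float], healthy: set[str]) -> str:
    """Return the healthy region with the lowest latency for this client."""
    candidates = {region: ms for region, ms in latency_ms.items() if region in healthy}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Illustrative latencies for a client located in Europe (assumed values).
client_latency = {"eu-west": 20.0, "us-east": 90.0, "ap-south": 180.0}

nearest = pick_region(client_latency, healthy={"eu-west", "us-east", "ap-south"})
fallback = pick_region(client_latency, healthy={"us-east", "ap-south"})
```

When `eu-west` drops out of the healthy set, the same client is routed to the next-closest region instead of failing outright.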

Implement automatic reconnection logic

Automatic reconnection logic mitigates disruptions when a stateful WebSocket connection drops due to server failure. The reconnection logic should also detect when a load balancer has rerouted a session to a new server, so that session state can be restored there and data stays consistent.
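A common way to implement reconnection is exponential backoff with jitter, so that thousands of clients dropped by the same failure do not all reconnect at the same instant. The sketch below shows only the delay calculation and the reroute detection, with no networking. It assumes the server announces some identifier when a connection opens and that messages carry an ID the client can resume from; both are assumptions for the example, as are the names `backoff_delay` and `SessionTracker`.

```python
import random
from typing import Callable, Optional


def backoff_delay(
    attempt: int,
    base: float = 0.5,
    cap: float = 30.0,
    rng: Callable[[], float] = random.random,
) -> float:
    """Full-jitter backoff: a random delay below min(cap, base * 2**attempt) seconds."""
    ceiling = min(cap, base * (2 ** attempt))
    return rng() * ceiling


class SessionTracker:
    """Hypothetical client-side state used to resume after a reconnect."""

    def __init__(self) -> None:
        self.server_id: Optional[str] = None
        self.last_message_id: Optional[int] = None

    def on_message(self, message_id: int) -> None:
        self.last_message_id = message_id

    def on_connected(self, server_id: str) -> dict:
        # A different server than last time means the balancer rerouted the session.
        rerouted = self.server_id is not None and server_id != self.server_id
        self.server_id = server_id
        return {"rerouted": rerouted, "resume_from": self.last_message_id}
```

On a rerouted connection, the client can send `resume_from` to the new server so that it can replay anything missed, assuming the backend keeps enough message history to do so.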

Make sure your load balancers have automated failover

If a server goes down, automated failover on your load balancers means that traffic will be re-routed with minimal, if any, downtime. This is crucial to maintaining a seamless experience with WebSocket connections.
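Automated failover usually rests on health checks: the balancer probes each server and takes it out of rotation after repeated failures. Here is a minimal sketch of that bookkeeping, assuming a server is removed after three consecutive failed probes and restored after two consecutive successes. The thresholds and the `HealthTracker` name are illustrative, and the probing itself (for example, an HTTP or WebSocket ping) is left out.

```python
class HealthTracker:
    """Hypothetical health-check bookkeeping for a pool of servers."""

    def __init__(self, servers: list[str], unhealthy_after: int = 3, healthy_after: int = 2) -> None:
        self.unhealthy_after = unhealthy_after
        self.healthy_after = healthy_after
        self._healthy = {s: True for s in servers}
        # Consecutive probe results that disagree with the server's current state.
        self._streak = {s: 0 for s in servers}

    def record(self, server: str, probe_ok: bool) -> None:
        if probe_ok == self._healthy[server]:
            self._streak[server] = 0  # result confirms the current state
            return
        self._streak[server] += 1
        threshold = self.healthy_after if probe_ok else self.unhealthy_after
        if self._streak[server] >= threshold:
            self._healthy[server] = probe_ok  # flip state after enough evidence
            self._streak[server] = 0

    def in_rotation(self) -> list[str]:
        return [s for s, ok in self._healthy.items() if ok]
```

Requiring several consecutive results before changing state avoids flapping when a single probe is lost to a transient network blip.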

Understand your load limits and have a fallback strategy

Run load and stress testing to understand how your system behaves under peak load, and enforce hard limits (for example, maximum number of concurrent WebSocket connections) to have some predictability. And in the event that a WebSocket connection breaks, have a fallback mechanism (for example, Socket.IO has HTTP long polling as a fallback) and reconnection strategy (like the automatic reconnection logic suggested above).
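Enforcing a hard connection limit can be as simple as a guarded counter that every new connection must pass through. The sketch below is one possible shape for that check; `ConnectionLimiter` is a hypothetical helper, and the limit itself should come from your own load testing rather than from this example.

```python
import threading


class ConnectionLimiter:
    """Admit new connections only while the server is under its hard cap."""

    def __init__(self, max_connections: int) -> None:
        self.max_connections = max_connections
        self._active = 0
        self._lock = threading.Lock()

    def try_acquire(self) -> bool:
        """Reserve a slot for a new connection; return False if the cap is reached."""
        with self._lock:
            if self._active >= self.max_connections:
                return False
            self._active += 1
            return True

    def release(self) -> None:
        """Free a slot when a connection closes."""
        with self._lock:
            if self._active > 0:
                self._active -= 1

    @property
    def active(self) -> int:
        with self._lock:
            return self._active
```

A server would call `try_acquire()` during the WebSocket handshake and reject the connection when it returns `False`, leaving the client's reconnection or fallback logic to try again or try elsewhere.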

Have a load shedding strategy

When servers are reaching capacity, a load shedding strategy allows you to gracefully degrade service by limiting or dropping low-priority connections to maintain overall performance and prevent system overload. This approach can help avoid sudden, large-scale disruptions, even under high load.
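As an illustration of priority-based load shedding, the function below selects which connections to drop once a server crosses a high watermark, removing the lowest-priority connections until the server is back at a target utilization. The watermark values, the integer priority scheme (lower number means less important), and the name `select_for_shedding` are all assumptions for this sketch.

```python
def select_for_shedding(
    connections: dict[str, int],
    capacity: int,
    high_watermark: float = 0.9,
    target: float = 0.8,
) -> list[str]:
    """Return connection IDs to drop, lowest priority first.

    connections maps a connection ID to its priority (lower = less important).
    Nothing is shed until the server exceeds high_watermark * capacity.
    """
    if len(connections) <= capacity * high_watermark:
        return []
    excess = len(connections) - int(capacity * target)
    by_priority = sorted(connections, key=lambda conn_id: connections[conn_id])
    return by_priority[:excess]


# Ten connections on a server with capacity 10; priority equals the index.
open_connections = {f"c{i}": i for i in range(10)}
to_drop = select_for_shedding(open_connections, capacity=10)
```

Using a gap between the watermark and the target means the server sheds a batch and then has headroom, rather than dropping one connection at a time while hovering at the limit.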

Solutions for load balancing strategy

WebSockets have successfully powered rich realtime experiences across apps, browsers, and devices. Scaling out WebSocket infrastructure horizontally comes with load balancing, which, as we’ve seen, is a complex and delicate undertaking.

Ultimately, horizontal scaling is the only sustainable way to support WebSocket connections at scale. But the additional workload of maintaining such infrastructure on one’s own can pull focus away from your core product.

If your organization has the resources and expertise, you can attempt a self-build, but we generally don’t recommend this. Managed WebSocket solutions allow you to offload the complexities of load balancing and horizontal scaling, as they often run on globally-distributed networks where scaled infrastructure is already taken into account.

Ably takes care of the load balancing for you by offering a globally-distributed platform designed for scaling realtime applications. With Ably, you can avoid the complexities of managing WebSocket infrastructure in-house and ensure reliable, fault-tolerant communication at scale. Some of our features include:

Predictable performance: A low-latency and high-throughput global edge network, with 6.5ms message delivery latency.

Guaranteed ordering & delivery: Messages are delivered in order and exactly once, with automatic reconnections.

Fault-tolerant infrastructure: Redundancy at regional and global levels with 99.999% uptime SLAs. 99.999999% (8x9s) message availability and survivability, even with datacenter failures.

High scalability & availability: Built and battle-tested to handle millions of concurrent connections at scale.

Optimized build times and costs: Deployments typically see a 21x lower cost and upwards of $1M saved in the first year.

Sign up for a free account to try it for yourself.
