WebSockets enable low-latency, bi-directional communication between clients and servers, making them a fundamental protocol for realtime features like chat, dashboards, live collaboration and more. But building infrastructure that supports hundreds of thousands or even millions of concurrent WebSocket connections is a big engineering challenge.

This guide covers the challenges of scaling WebSockets, along with the architectural patterns, techniques and best practices for designing systems that stay reliable, performant and resilient at scale. It draws on our experience of building production-grade realtime systems.


Are WebSockets scalable?

WebSockets are scalable, but only with the right architecture. Companies like Slack, Netflix and Uber use them to power realtime features for millions of end users. While WebSockets provide persistent, low-latency connections, traditional server models (for example, one thread or process per connection) struggle with high concurrency due to CPU, memory and kernel limits. Throughout this article, we'll look at what makes scaling hard and how to overcome it.

What makes scaling WebSockets hard?

Scaling WebSockets introduces a fundamentally different set of challenges compared to traditional request-response protocols like HTTP. Because WebSocket connections are persistent and bidirectional, servers must maintain connection state, hold system resources for long periods, and manage potentially vast numbers of connections at the same time.

Each WebSocket connection consumes memory, file descriptors, and other server resources. As concurrent connections increase, so does the pressure on the underlying infrastructure — particularly networking, CPU, and memory. At small scales, these demands are manageable. But at higher concurrency levels, resource consumption grows quickly and must be actively managed to prevent performance bottlenecks.

Moreover, WebSocket traffic tends to be bursty and unpredictable. Usage patterns can shift dramatically based on application context, user behavior, or external events. This variability makes it difficult to forecast demand and requires systems that can dynamically adapt to sudden changes in traffic without sacrificing reliability.

Unlike stateless HTTP APIs, scaling WebSockets also demands architectural foresight: connection state must be managed carefully, failure recovery becomes more complex, and operational strategies must account for the nuances of long-lived sessions.

How many WebSocket connections can a server handle?

There is no universal limit. On a well-tuned server with an event-driven architecture, it’s possible to handle tens or even hundreds of thousands of concurrent WebSocket connections.

In one benchmark, a single node supported up to 240,000 concurrent connections while maintaining sub-50ms message latency. This was made possible by:

Using event-driven servers (e.g. Node.js, Go)

Kernel tuning (e.g. increasing file descriptor limits, TCP stack tuning)

Stateless session management
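To make the file descriptor point concrete: each open socket uses one descriptor, so the per-process limit caps how many connections a server process can hold. The following is a minimal Python sketch, assuming a Unix-like system. The function names and the `reserved_fds` allowance (for log files, listening sockets and so on) are illustrative assumptions, not values from the benchmark.

```python
import resource


def current_fd_limits():
    """Return the (soft, hard) open-file limits for this process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def fd_shortfall(target_connections, soft_limit, reserved_fds=1024):
    """Return how many extra descriptors the soft limit needs, or 0 if it is enough.

    Assumes one descriptor per WebSocket connection plus `reserved_fds`
    for everything else the process keeps open (an assumed allowance).
    """
    if soft_limit == resource.RLIM_INFINITY:
        return 0
    needed = target_connections + reserved_fds
    return max(0, needed - soft_limit)


if __name__ == "__main__":
    soft, hard = current_fd_limits()
    print("soft limit:", soft, "hard limit:", hard)
    print("shortfall for 240,000 connections:", fd_shortfall(240_000, soft))
```

If the shortfall is non-zero, the limit would need to be raised (for example with `ulimit -n` or the service manager's configuration) before the process could accept that many connections.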

That said, per-node performance is only part of the story. Production systems scale WebSocket connections by distributing them across many nodes. Horizontal scaling is essential for supporting millions of concurrent clients.

Approaches to scaling WebSockets

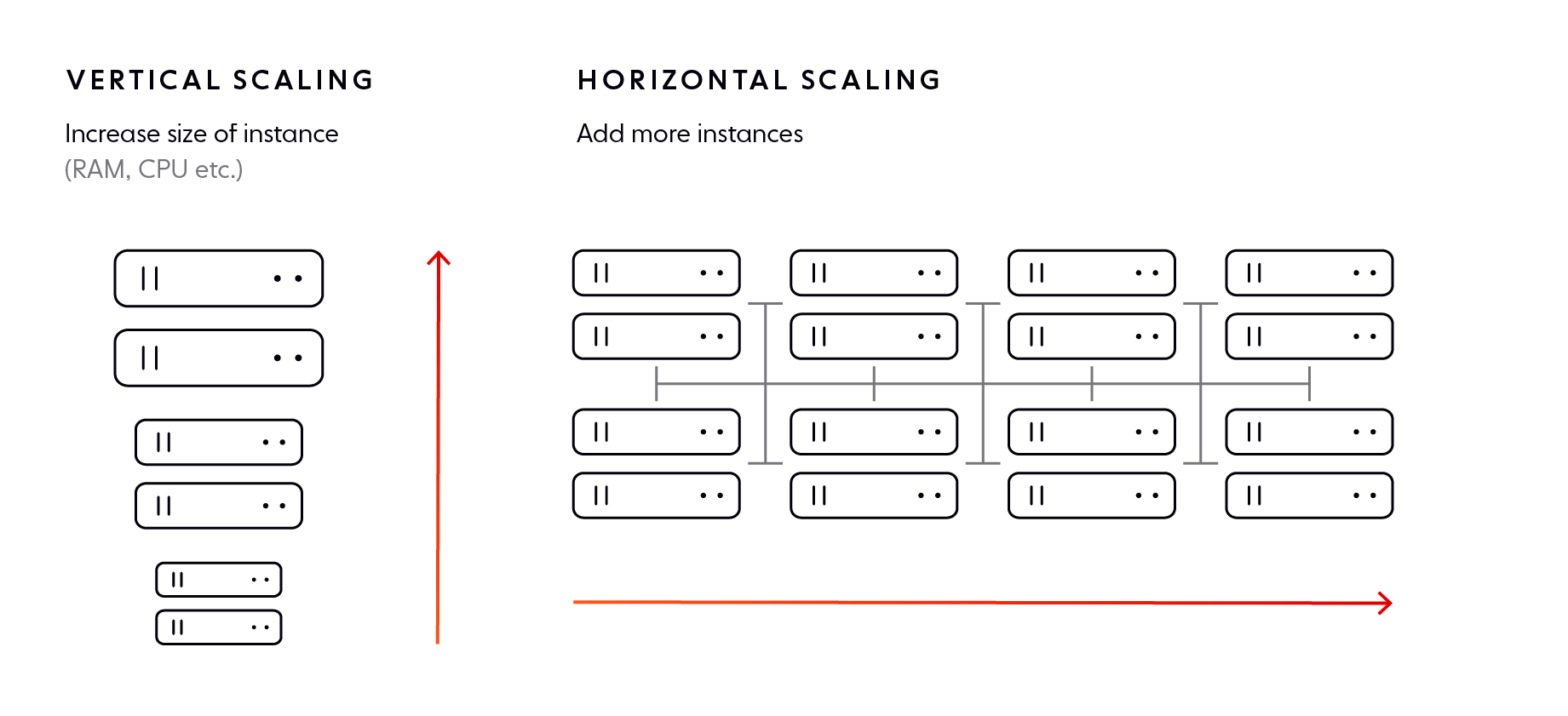

Once the key challenges of scaling WebSockets are understood, it's important to examine the core strategies available to address them. Broadly, there are two architectural approaches: scaling up individual machines (vertical scaling) or scaling out across a distributed network of machines (horizontal scaling). Each method has tradeoffs in terms of complexity, flexibility, and resilience.

Let's look at each in turn:

Vertical Scaling

Vertical scaling increases the resources (CPU, RAM, bandwidth) of individual servers. This works up to a point, but it hits diminishing returns, leaves each server as a single point of failure, and does nothing for geographic reach.

Horizontal Scaling

Horizontal scaling distributes connections across multiple stateless nodes. This approach depends on infrastructure that supports:

Global load balancing

Shared or replicated state

Efficient routing of messages to the right connection across the network

Horizontal scaling can be challenging but is an effective way to scale your WebSocket application.
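The routing requirement is the least obvious of the three, so here is a minimal sketch of one way it could work. It assumes a shared pub/sub layer between nodes. The `Broker` and `Node` classes are hypothetical, and the in-process broker stands in for a networked message bus.

```python
from collections import defaultdict


class Broker:
    """In-process stand-in for a shared pub/sub layer between nodes."""

    def __init__(self):
        self._nodes = []

    def attach(self, node):
        self._nodes.append(node)

    def publish(self, channel, message):
        # Fan the message out to every node; each node decides
        # which of its own connections should receive it.
        for node in self._nodes:
            node.deliver_local(channel, message)


class Node:
    """A WebSocket server node that only knows its own connections."""

    def __init__(self, name, broker):
        self.name = name
        self.broker = broker
        self.subscribers = defaultdict(set)  # channel -> client ids on this node
        self.outbox = defaultdict(list)      # client id -> messages "sent"
        broker.attach(self)

    def connect(self, client_id, channel):
        self.subscribers[channel].add(client_id)

    def publish(self, channel, message):
        # A message arriving on any node goes through the shared layer.
        self.broker.publish(channel, message)

    def deliver_local(self, channel, message):
        for client_id in self.subscribers.get(channel, ()):
            self.outbox[client_id].append(message)
```

In this sketch a message published on one node reaches subscribers connected to any other node, without the publishing node needing to know where those clients are.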

Horizontal vs vertical scaling: Which is best?

The decision between vertical and horizontal scaling depends on your application's size, growth expectations, and operational maturity. Vertical scaling can be effective in early stages or for low-concurrency use cases, offering simpler setup and fewer moving parts. However, it has finite limits and often requires substantial server hardware.

Horizontal scaling is more complex to implement but is the only viable path for systems that anticipate large numbers of concurrent users, global usage, or dynamic workloads. It supports high availability, fault tolerance, and elasticity — key properties for modern realtime applications.

In most production environments, teams eventually adopt horizontal scaling strategies, often layering them with autoscaling and observability tools to manage operational overhead.

You can read more about the merits of these two approaches in our dedicated article on horizontal vs vertical scaling for WebSockets.

Other considerations when scaling WebSockets

Scaling WebSockets is not just a matter of choosing between vertical and horizontal scaling — it requires an in-depth understanding of how connections are managed, how traffic is distributed, and how to build resilience into every layer of your architecture. This includes decisions about where and how to load balance connections, how to handle client limitations and failures, how to prevent servers from becoming overwhelmed, and how to design with operational scale in mind.

The sections that follow cover the critical architectural and operational factors to consider when scaling WebSocket infrastructure, including:

Load balancing strategies and session management

Planning for fallbacks and variable demand

Managing the lifecycle and health of each connection

Applying best practices for scale and resiliency

These topics provide a roadmap for engineering teams building or evolving systems that depend on reliable, scalable WebSocket communication.

Load balancing and session management

When you start scaling horizontally, distributing WebSocket connections efficiently across your infrastructure becomes a non-negotiable part of your architecture. WebSocket connections are long-lived, and once they’re established, they need to consistently reach the same server. That’s where load balancing comes in—it’s your frontline strategy to keep things running smoothly as demand grows.

Done well, load balancing gives your system resilience, elasticity, and responsiveness. It helps you:

Prevent any one server from becoming a performance bottleneck

Keep your system highly available, even when parts of it fail

Scale up or down based on demand, without interrupting client sessions

Let’s break down two areas you’ll need to get right: how to balance connections and how to manage session state.

Load balancing: A good load balancer does more than just distribute requests—it makes smart decisions about where each new connection should land. For WebSockets, this means using TCP-aware (layer 4) load balancers that can track server health and connection counts. If you’re running a global system, geo-routing or DNS-based strategies can direct clients to the nearest healthy region.

Now, how a load balancer makes those decisions depends on the algorithm it follows. Here are some of the most common ones:

Round-robin: Sends each connection to the next server in a rotating sequence. Simple and fair.

Weighted round-robin: Same as above, but gives more traffic to more capable servers.

Least-connected: Sends traffic to the server with the fewest active connections.

Least-loaded: Considers CPU, memory, or other usage metrics.

Least response time: Picks the server that responds fastest to health checks.

Hash-based: Routes based on a hash of client details (e.g. IP, port, hostname).

Random two choices: Picks two servers at random and chooses the one with fewer connections.

Custom load metrics: Uses specific operational data (like CPU or memory) from each server to inform routing.

Which algorithm should you use? That depends on your application. If your traffic is fairly uniform—for instance, live sports scores going to all users—round-robin might be just fine. But if some clients are more demanding than others, you’ll want something smarter like least-loaded or custom metrics. The key is to match the strategy to your usage patterns.
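To show how little code separates these strategies, here is a minimal Python sketch of four of them. The `Server` class and function names are illustrative assumptions. A real load balancer would also track health and update connection counts as clients come and go.

```python
import hashlib
import itertools
import random


class Server:
    def __init__(self, name):
        self.name = name
        self.connections = 0  # active connection count


def round_robin(servers):
    """Return an iterator that cycles through servers in order."""
    return itertools.cycle(servers)


def least_connected(servers):
    """Pick the server with the fewest active connections."""
    return min(servers, key=lambda s: s.connections)


def two_random_choices(servers, rng=random):
    """Sample two distinct servers and keep the less busy one."""
    first, second = rng.sample(servers, 2)
    return first if first.connections <= second.connections else second


def hash_based(servers, client_key):
    """Map a client identifier (e.g. its IP) to a stable server index."""
    digest = hashlib.sha256(client_key.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Note that this simple modulo hashing remaps most clients whenever the server list changes size, which is one reason hash-based routing needs care in an autoscaled pool.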

Sticky sessions: Sticky sessions—also known as session affinity—are a load balancing strategy where a client consistently connects to the same server across a session or multiple reconnects. For example, if a user initially connects to server A, the load balancer will continue to route them to server A, even if another server has less load or better availability.

This approach simplifies session management by keeping all state on a single server. However, it limits the effectiveness of your load balancer. If server A becomes overwhelmed or fails, a sticky client will keep trying to reconnect to it, making it difficult to shed load or recover quickly. The more your system relies on sticky sessions, the harder it becomes to scale dynamically or rebalance traffic effectively.

A more flexible and resilient alternative is to design for statelessness and externalize connection state. With this model, any server can resume a client session, allowing your load balancer to distribute traffic purely based on load, geography, or availability. This enables better elasticity, graceful failover, and smoother scaling.
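A minimal sketch of externalized connection state follows. The `SessionStore` and `StatelessNode` classes are hypothetical, a plain dict stands in for an external store shared by all nodes, and the tracked state (the serial number of the last message delivered) is an assumed example of what a session might need to resume.

```python
from typing import Optional


class SessionStore:
    """Stand-in for an external store shared by every node."""

    def __init__(self):
        self._sessions = {}

    def save(self, session_id: str, state: dict) -> None:
        self._sessions[session_id] = dict(state)

    def load(self, session_id: str) -> Optional[dict]:
        state = self._sessions.get(session_id)
        return dict(state) if state is not None else None


class StatelessNode:
    """A server node that keeps no session state of its own."""

    def __init__(self, name: str, store: SessionStore):
        self.name = name
        self.store = store

    def record_delivery(self, session_id: str, serial: int) -> None:
        # Persist progress externally after each delivered message.
        self.store.save(session_id, {"last_serial": serial, "last_node": self.name})

    def resume(self, session_id: str) -> Optional[int]:
        # Any node can pick up where another left off.
        state = self.store.load(session_id)
        return None if state is None else state["last_serial"]
```

Because the node holds nothing locally, a client that reconnects through the load balancer can land on any node and continue from its last recorded position.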

In short, think of load balancing and session management as a pair: one spreads the work out, the other makes sure users don’t feel the impact when that work moves around.

Planning for fallbacks

Some clients—due to restrictive firewalls, proxies, or legacy environments—won’t be able to establish a WebSocket connection at all. To provide a consistent experience, your system should support fallback transport mechanisms like Server-Sent Events (SSE) or long polling. Clients should also retry connections using an exponential backoff strategy with jitter to avoid overwhelming the server with repeated reconnect attempts. Fallbacks aren’t just about compatibility—they’re a key part of system resilience.
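The retry strategy mentioned above can be sketched in a few lines. This version uses the "full jitter" variant, where each delay is drawn uniformly between zero and an exponentially growing ceiling. The `base` and `cap` values are assumptions chosen for illustration.

```python
import random


def backoff_delay(attempt, base=0.5, cap=30.0, rng=random):
    """Return a reconnect delay in seconds for the given attempt (0-based).

    The ceiling doubles with each attempt up to `cap`; the random draw
    spreads clients out so they do not all retry at the same instant.
    """
    ceiling = min(cap, base * (2 ** attempt))
    return rng.uniform(0, ceiling)


if __name__ == "__main__":
    for attempt in range(6):
        print(f"attempt {attempt}: wait {backoff_delay(attempt):.2f}s")
```

Without the jitter, clients disconnected by the same incident would retry on the same schedule and arrive in synchronized waves.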

In the context of scale, it's essential to consider the impact that fallbacks may have on the availability of your system. Suppose you have many simultaneous users connected to your system, and an incident causes a significant proportion of the WebSocket connections to fall back to long polling. Your servers will experience greater demand, because long polling is more resource-intensive than WebSockets: each client repeatedly opens new HTTP requests, which increases RAM usage.

To ensure your system’s availability and uptime, your server layer needs to be elastic and have enough capacity to deal with the increased load.

Handling unpredictable loads

Sudden traffic spikes can strain even the best-architected systems. These spikes might be caused by real-world events, viral growth, or unexpected client behavior. To handle this safely, you need infrastructure that can scale—but you also need mechanisms for protecting system integrity when demand exceeds capacity.

Here are several strategies to help:

Autoscaling: Use infrastructure that can automatically add or remove compute nodes based on traffic or connection load.

Buffering: Insert queues or pub/sub layers between components to absorb bursts and prevent upstream overload.

Rate limiting and throttling: Temporarily limit message frequency or new connection attempts from overly aggressive clients.
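One common way to implement rate limiting is a token bucket, sketched below in Python. The class name and parameters are illustrative assumptions. The injectable `now` clock is there only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, now=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._now = now
        self._tokens = float(capacity)
        self._last = now()

    def allow(self, cost=1.0):
        """Return True and spend tokens if the action is within the limit."""
        current = self._now()
        elapsed = current - self._last
        self._last = current
        # Refill for the time that has passed, never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

A server might keep one bucket per client for messages and another per source address for new connection attempts, rejecting or delaying whatever `allow()` refuses.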

At a certain point, however, you may need to intentionally reject some traffic to preserve system health. This is called load shedding, and it’s a critical part of operating at scale.

Load Shedding Tips:

Know your thresholds: Run tests to determine your infrastructure’s safe upper limits. Anything beyond those should be a candidate for shedding.

Reject early, gracefully: If a server is approaching overload, it’s better to reject new connections with clear error messages than to risk total failure.

Use backoff logic: Clients should wait before retrying a dropped or rejected connection, or they’ll just contribute to the problem.

Drop idle connections: Even idle clients consume resources due to heartbeats. Consider closing stale or inactive connections first when shedding is necessary.

The goal with all of these strategies is to fail gracefully. In high-concurrency environments, shedding a few connections strategically is far better than letting the entire system collapse under pressure.
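The tips above can be combined into a small admission controller. This is a minimal sketch under stated assumptions: the class name, the `shed_threshold` fraction and the idle cutoff are all hypothetical, and real limits should come from your own load tests.

```python
class AdmissionController:
    """Reject new connections at capacity and shed idle ones under pressure."""

    def __init__(self, max_connections, shed_threshold=0.9):
        self.max_connections = max_connections
        self.shed_threshold = shed_threshold  # fraction of max to shed down to
        self.last_activity = {}               # connection id -> timestamp

    def try_accept(self, conn_id, now):
        # Reject early: the caller would answer the handshake with a clear
        # error (for example an HTTP 503) instead of accepting and failing.
        if len(self.last_activity) >= self.max_connections:
            return False
        self.last_activity[conn_id] = now
        return True

    def touch(self, conn_id, now):
        self.last_activity[conn_id] = now

    def shed_idle(self, now, idle_after):
        """Close the longest-idle connections until back under the threshold."""
        target = int(self.max_connections * self.shed_threshold)
        excess = len(self.last_activity) - target
        if excess <= 0:
            return []
        idle = sorted(
            (cid for cid, ts in self.last_activity.items() if now - ts >= idle_after),
            key=self.last_activity.get,
        )
        victims = idle[:excess]
        for cid in victims:
            del self.last_activity[cid]
        return victims
```

Active connections are never chosen here, so if too few connections are idle the controller sheds less than it wanted to rather than disconnecting users mid-session.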

Managing connection lifecycle and reliability

WebSocket connections aren’t fire-and-forget—they’re living channels that consume server resources and require ongoing care. If left unmanaged, inactive or unhealthy connections can degrade performance and jeopardize system stability. As your system scales, the way you handle the full lifecycle of each connection becomes just as important as how many you can support in total.

This section looks at three key parts of connection lifecycle management: detecting dead connections, reclaiming resources from idle ones, and managing flow control to avoid overloading slow clients.

Heartbeats: Think of heartbeats as periodic "pings" between the client and server to confirm the connection is still alive. These messages help detect dropped or dead connections that haven’t been properly closed. They also serve an important purpose in keeping intermediate network infrastructure—like NATs and firewalls—from silently closing idle connections. Heartbeats should be sent at regular intervals, and systems should disconnect peers that don’t respond within a defined timeout period.

Idle Timeouts: Even if a connection hasn’t been closed, it may no longer be serving an active user. Idle timeouts allow servers to reclaim memory, file descriptors, and other resources by closing connections that have been inactive for a predefined period. This is especially useful during traffic surges, when resource efficiency becomes critical. Pairing idle timeouts with activity tracking ensures that only genuinely unused connections are dropped.
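Heartbeat timeouts and idle timeouts can share one periodic sweep, as in the minimal sketch below. The `ConnectionTracker` class and its timeout values are illustrative assumptions. It tracks heartbeat replies and application messages separately, so a connection that answers pings but carries no traffic still counts as idle.

```python
class ConnectionTracker:
    """Track heartbeat replies and application activity per connection."""

    def __init__(self, heartbeat_timeout, idle_timeout):
        self.heartbeat_timeout = heartbeat_timeout
        self.idle_timeout = idle_timeout
        self.last_pong = {}      # connection id -> time of last heartbeat reply
        self.last_activity = {}  # connection id -> time of last app message

    def opened(self, conn_id, now):
        self.last_pong[conn_id] = now
        self.last_activity[conn_id] = now

    def pong_received(self, conn_id, now):
        self.last_pong[conn_id] = now

    def message_received(self, conn_id, now):
        self.last_activity[conn_id] = now

    def sweep(self, now):
        """Remove and return connections to close, mapped to the reason."""
        to_close = {}
        for conn_id in list(self.last_pong):
            if now - self.last_pong[conn_id] > self.heartbeat_timeout:
                to_close[conn_id] = "dead"   # missed heartbeats
            elif now - self.last_activity[conn_id] > self.idle_timeout:
                to_close[conn_id] = "idle"   # alive but unused
        for conn_id in to_close:
            del self.last_pong[conn_id]
            del self.last_activity[conn_id]
        return to_close
```

A server would call `sweep()` on a timer and close whatever it returns, ideally telling idle clients why so that they do not immediately reconnect.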

Backpressure: In a realtime system, clients don’t always consume data as fast as it’s produced. Backpressure is a way to protect your infrastructure from being overwhelmed when this happens. When a client slows down, the server can apply backpressure by buffering messages up to a safe limit. Beyond that, it may delay delivery or drop non-critical messages. This keeps your system responsive for everyone and avoids memory buildup that could take down entire servers. Designing effective backpressure policies—such as buffer thresholds and priority-based message handling—is crucial for system stability at scale.
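A buffer threshold with priority-based handling might look like the following minimal sketch. The `OutboundBuffer` class is hypothetical, and the two-level `critical` flag is an assumed simplification of a real priority scheme.

```python
from collections import deque


class OutboundBuffer:
    """Per-client bounded buffer that protects critical messages."""

    def __init__(self, max_size):
        self.max_size = max_size
        self._queue = deque()  # entries are (is_critical, message)
        self.dropped = 0

    def enqueue(self, message, critical=False):
        """Queue a message; return False if it had to be dropped."""
        if len(self._queue) < self.max_size:
            self._queue.append((critical, message))
            return True
        if critical:
            # Buffer is full: evict the oldest non-critical message, if any.
            index = next(
                (i for i, (is_crit, _) in enumerate(self._queue) if not is_crit),
                None,
            )
            if index is not None:
                del self._queue[index]
                self._queue.append((True, message))
                self.dropped += 1
                return True
        self.dropped += 1
        return False

    def drain(self, count):
        """Remove and return up to `count` messages once the client can read."""
        out = []
        while self._queue and len(out) < count:
            out.append(self._queue.popleft()[1])
        return out
```

When `enqueue()` returns False for a critical message, the buffer holds nothing but critical messages. At that point the caller has to decide whether to wait or to disconnect the slow client.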

Best practices for production-ready WebSockets

You’ve got your architecture in place, you’ve planned for failure, and your connection management is solid. Now what? It’s time to apply lessons from the real world.

From monitoring to testing to client behavior, these best practices can help keep your system dependable over time:

Use event-driven, non-blocking infrastructure to support high concurrency.

Externalize session and message state to avoid tight coupling to individual servers.

Monitor metrics like connection count, error rates, latency, and reconnect frequency.

Test at scale with production-like traffic patterns.

Build client logic that supports reconnection, fallback, and session recovery.

Offloading complexity with managed WebSocket infrastructure

Scaling WebSockets for production systems comes with substantial engineering overhead—from connection lifecycle management to load balancing, fallbacks, and system resilience. If you're building for global scale, this complexity can quickly consume time, budget, and operational bandwidth.

Before investing heavily in building WebSocket infrastructure from scratch, it's worth considering whether WebSockets are the right tool for your specific use case. For example, if you're only pushing simple string data from server to client and don't need bidirectional communication, alternatives like Server-Sent Events (SSE) may be easier to implement and scale.

However, when bidirectional communication is required—as with collaborative tools, chat systems, or live multiplayer games—WebSockets are often the best fit. That said, choosing WebSockets doesn't mean you have to manage everything in-house.

There are managed services purpose-built to handle WebSocket scale for you. Platforms like Ably provide elastic, global infrastructure that abstracts away much of the operational complexity. These services offer:

Horizontal scalability out of the box

Resilience through automatic failover and load balancing

Built-in support for fallback transports and message buffering

Protocol interoperability, analytics, and QoS guarantees

Using a managed provider can drastically reduce time-to-market and ongoing operational risk—allowing engineering teams to focus on product development rather than infrastructure maintenance.

Ably, the WebSocket platform that works reliably at any scale

Ably is a realtime experience infrastructure provider. Our APIs and SDKs help developers build and deliver realtime experiences without having to worry about maintaining and scaling messy WebSocket infrastructure.

We offer:

Pub/sub messaging over serverless WebSockets, with rich features such as message delta compression, automatic reconnections with continuity, user presence, message history, and message interactions.

A globally-distributed network of datacenters and edge acceleration points-of-presence.

Guaranteed message ordering and delivery.

Global fault tolerance and a 99.999% uptime SLA.

6.5ms message delivery latency.

Dynamic elasticity, so we can quickly scale to handle any demand (billions of WebSocket messages sent to millions of pub/sub channels and WebSocket connections).

Explore our documentation to find out more and get started with a free Ably account.
