As a consequence of the global pandemic that took the world by storm in 2020, interest in virtual events has skyrocketed. In this context, one of the biggest challenges for event companies is to scale their digital offerings to cope with unprecedented demand. This article is a deep-dive into the many aspects that need to be considered when building virtual event platforms and apps with WebSockets and scaling them to support millions of concurrent users.

Virtual events & WebSockets

The year 2020 kick-started impressive growth in demand for virtual events. With people around the world sheltering at home, virtual events have become the norm, and they’re now present in every aspect of our lives, whether we’re talking about school, work, or entertainment. Think of interactions like virtual polls, quizzes, and Q&A sessions, interactive presentations, breakout rooms, or chat, and how often we use them (or benefit from them) in our day-to-day lives.

Nowadays, organizations delivering engaging, virtual events are booming - see, for example, Hopin, who have recently closed their series C funding at a valuation of $5.65bn and 400% growth. While there are plenty of opportunities for virtual events companies, they also need to tackle some serious challenges when building their digital products. One of the biggest challenges is scaling to meet unprecedented demand while at the same time providing dependable & uninterrupted experiences that satisfy attendees & event organizers.

When talking about virtual events, we must consider that participants need to be able to interact in real time. To build realtime functionality, you should consider an asynchronous, event-driven architecture powered by a suitable transport protocol, such as WebSockets.

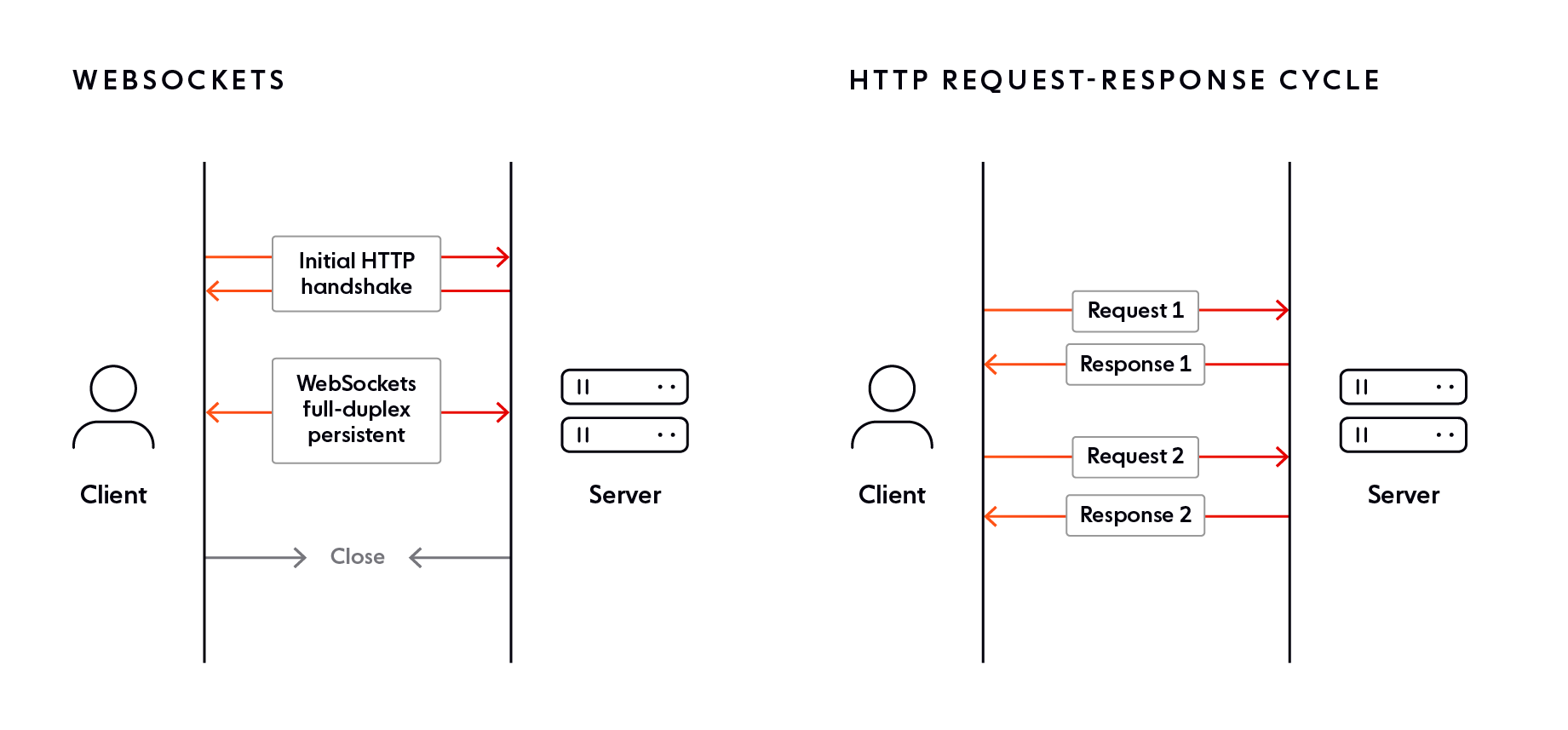

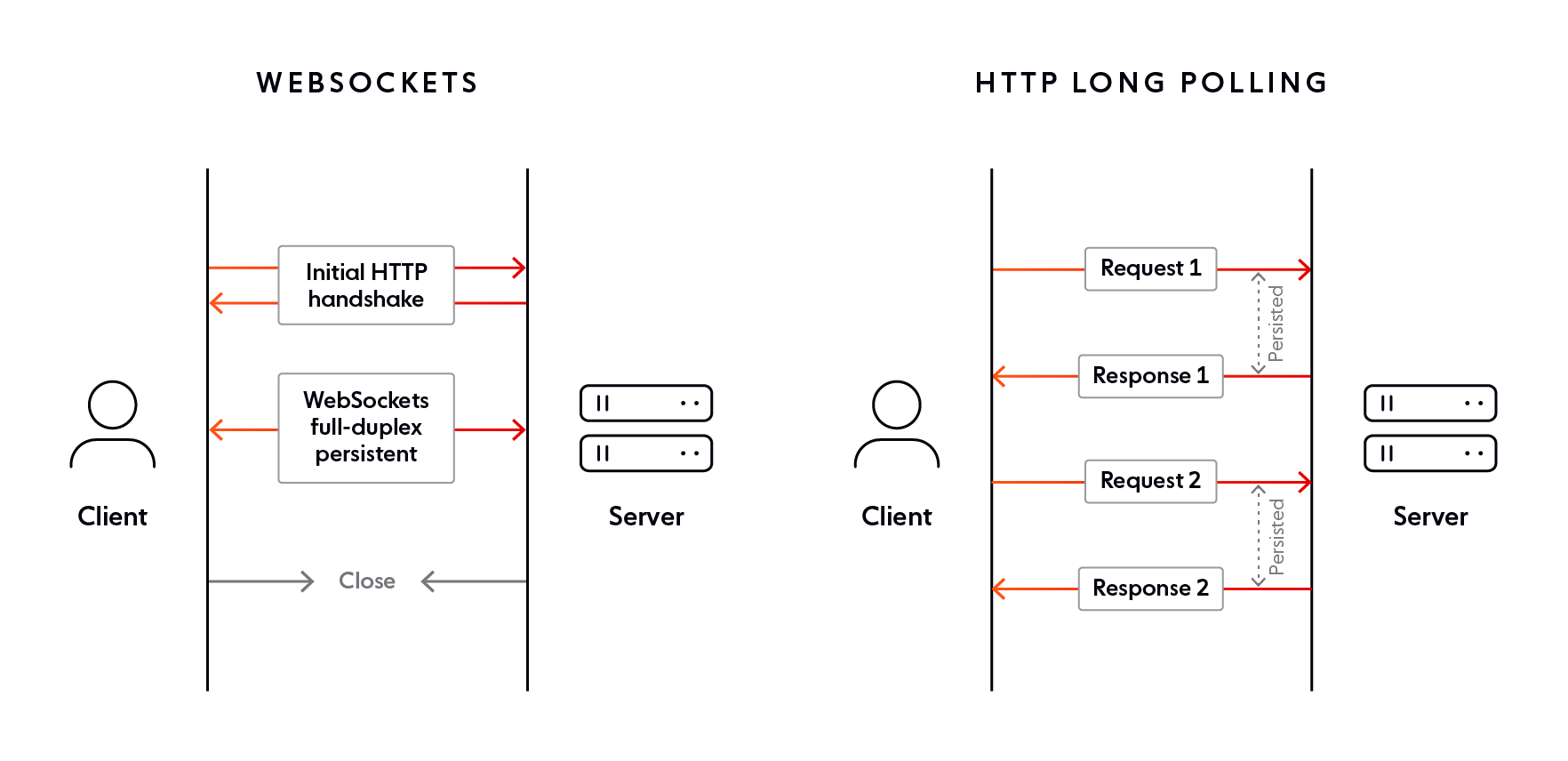

Before WebSockets came along, the realtime web existed, but it was difficult to achieve, typically slower, and was delivered by hacking existing web technologies that were not designed for realtime web applications. The WebSocket protocol paved the way to a truly realtime web.

Standardized in 2011 by RFC 6455, WebSockets are a thin transport layer built on top of a device’s TCP/IP stack. They enable two-way, full-duplex communication over a persistent connection. Compared to REST over HTTP, WebSockets are more efficient for frequent messages because they avoid the HTTP request/response overhead for each message sent and received. Furthermore, the WebSocket protocol is push-based, enabling you to push data to connected clients as soon as events occur. In contrast, with plain HTTP request/response, clients have to poll the server for new information. This is far from ideal in the context of virtual live events, where you want to stream data immediately, as close to real time as possible.
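To put a number on the per-message overhead, here is a minimal sketch that computes the framing bytes RFC 6455 adds to a single unfragmented WebSocket frame. The helper name `frameOverheadBytes` is ours and purely illustrative; the sizes follow the base framing rules in the RFC (a 2-byte header, an extended length field for larger payloads, and a 4-byte masking key on client-to-server frames). Extensions and fragmentation are ignored.

```javascript
// Illustrative sketch: bytes of framing overhead for one unfragmented
// WebSocket frame, following the base framing rules in RFC 6455.
// `frameOverheadBytes` is a hypothetical helper, not part of any library.
function frameOverheadBytes(payloadLength, masked) {
  let overhead = 2; // FIN/opcode byte + mask bit/7-bit length byte
  if (payloadLength > 65535) {
    overhead += 8; // 64-bit extended payload length
  } else if (payloadLength > 125) {
    overhead += 2; // 16-bit extended payload length
  }
  if (masked) {
    overhead += 4; // masking key, required on client-to-server frames
  }
  return overhead;
}

// A short server-to-client message, such as a score update.
console.log(frameOverheadBytes(20, false)); // 2
// The same message sent from a browser to the server.
console.log(frameOverheadBytes(20, true)); // 6
```

By comparison, every HTTP request and response carries its own set of headers, which often amount to several hundred bytes.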

The WebSocket landscape - available options

You can take several different paths to integrate WebSocket capabilities into your tech stack. Let’s quickly review these alternatives.

The first option is to build your very own WebSocket-based messaging solution from scratch and tailor it to your needs by using your chosen technologies. For example, DAZN, a well-known provider of live sports streaming services, has built a custom solution designed for broadcasting messages to millions of users over WebSockets. It uses multiple AWS-managed services combined into a globally-available solution. Of course, building and maintaining such a solution requires dedicated engineering & DevOps resources, and it takes a long time to implement and test. This is not always a viable option, especially in a fast-moving industry like virtual events.

Another option is to use open-source technologies like Socket.IO as the backbone of your WebSocket-based features. You might even use Socket.IO in combination with Redis, which enables you to run multiple Socket.IO instances in different processes or servers, pass events between nodes, and broadcast messages via the Redis Pub/Sub mechanism. Despite its popularity, Socket.IO has its limitations. First of all, it’s a simple solution that consists of a Node.js server and a JavaScript client library for the browser. Socket.IO is essentially just a wrapper around the WebSocket API in browsers. It offers limited additional functionality. Secondly, Socket.IO doesn’t provide strong messaging guarantees (ordering, guaranteed delivery, and exactly-once semantics). If data integrity is important to your use case, you will have to build separate components or mechanisms to ensure it.

Regardless of which of these two options you choose, scaling your system to handle millions of concurrent WebSocket connections dependably is a complex & time-consuming undertaking, which requires significant financial, engineering, and DevOps resources.

Most of the time, you are better off using a fully-managed WebSocket solution that is battle-tested and well-prepared to handle the engineering complexities of WebSockets at scale.

Here at Ably, we’ve built our own protocol on top of WebSockets. It allows you to communicate via WebSockets by using a higher-level set of capabilities - pub/sub messaging. To demonstrate how simple it is, here’s an example of how data is published to Ably:

var ably = new Ably.Realtime('ABLY_API_KEY');

var channel = ably.channels.get('test');

// Publish a message to the test channel

channel.publish('greeting', 'hello');

And here’s how clients connect to Ably to consume data:

var ably = new Ably.Realtime('ABLY_API_KEY');

var channel = ably.channels.get('test');

// Subscribe to messages on channel

channel.subscribe('greeting', function(message) {

alert(message.data);

});

As you can see from the code snippets above, although the communication is done over WebSockets, the underlying WebSocket protocol is “hidden” from developers.

Regardless of what type of solution you decide to use (DIY, open-source solution, or proprietary fully-managed solution), you need to consider all of the challenges you’ll face when it comes to scaling WebSockets.

Scaling WebSockets for virtual events: what you need to consider

Before we get started, I must emphasize that this article focuses on the challenges of scaling virtual events platforms & apps to handle thousands or even millions of concurrent end-user devices connecting to consume and send messages (events) over WebSockets. This article does not cover considerations related to video streaming.

Let's now dive into some of the key things you need to think about, such as fault tolerance, using a scalable architecture pattern, or the availability of your server layer. Your system must efficiently address all these complexities if you are to build scalable and dependable realtime features with WebSockets.

The availability of your server layer

When it comes to scaling WebSockets, you must consider the availability of your server layer. Specifically, you need to make sure it's capable of sustaining an unknown but potentially very high number of concurrent WebSocket connections.

Let's start by looking at the two different paths you can take to scale your server layer:

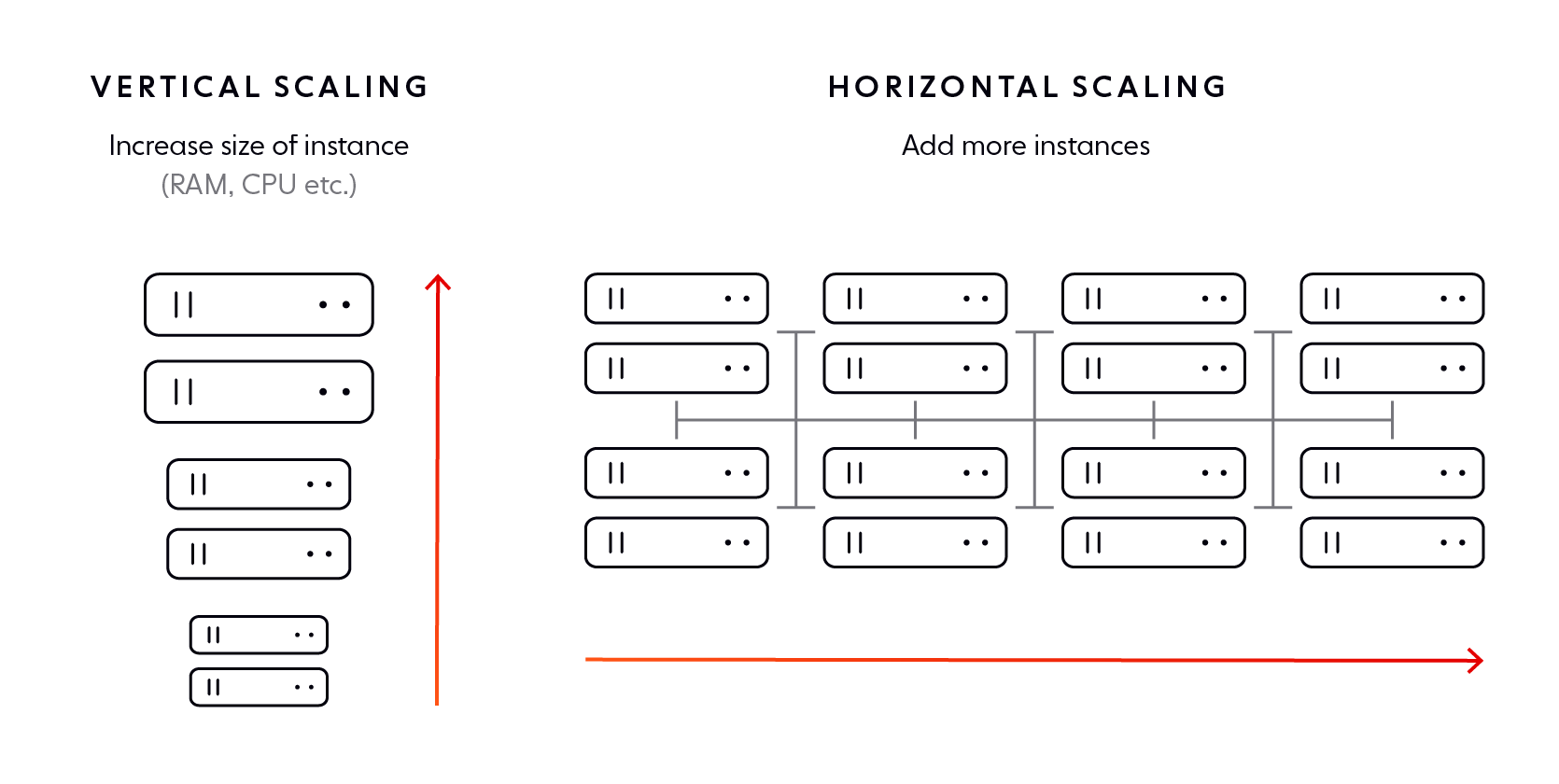

Vertical scaling. Also known as scaling up, it implies adding more power (e.g., CPU, RAM) to an existing machine.

Horizontal scaling. Also known as scaling out, it involves adding more machines to the network, which share the processing workload.

At first glance, vertical scaling seems attractive, as it's easier to implement and maintain than horizontal scaling. You might even ask yourself: how many WebSocket connections can one server handle? However, that's not the right question to ask, and that's because scaling up has severe limitations.

Let's look at a hypothetical example to demonstrate these drawbacks. Imagine you've developed a multiplayer live quiz app with WebSockets that's being used by tens of thousands of users, with more and more joining every day. This translates into an ever-growing number of WebSocket connections your server layer needs to handle. However, since you are only using one machine, there's a finite amount of resources you can add to it, which means you can only scale your server up to a finite capacity. Furthermore, what happens if one day the number of concurrent WebSocket connections proves to be too much for just one machine to handle? Or what happens if you need to upgrade your server? The availability of your system and the uptime of your quiz app would be severely affected.

In contrast, horizontal scaling is a model that's more dependable in the long run. Even if a server needs to be upgraded or crashes, you are in a much better position to protect your quiz app's overall availability since the workload of the machine that failed is distributed to the other nodes in the network.

Of course, horizontal scaling comes with its own complications - it involves a more complex architecture, load balancing & routing, increased infrastructure and maintenance costs, etc. One of the biggest challenges you'll face is automatically syncing message data and connection state across multiple WebSocket servers in milliseconds. Given that these servers need to be connected in a stateful manner with the clients, state synchronization becomes a tough nut to crack. Furthermore, you'll need to ensure that this sync mechanism is itself always available and working reliably.

Despite its increased complexity, horizontal scaling is worth pursuing, as it allows you to scale limitlessly in theory. This makes it a superior alternative to vertical scaling. So, instead of asking how many connections can a server handle, a better question would be: how many servers can I distribute the workload to?

In addition to horizontal scaling, you should also consider the elasticity of your server layer. System-breaking complications can arise when you expose an inelastic server layer to the Internet, a volatile and unpredictable source of traffic. To successfully handle WebSockets at scale, you need to be able to quickly (automatically) add more servers into the mix so that your system is highly available and has enough capacity to deal with potential usage spikes at all times.
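As a rough illustration of the question "how many servers can I distribute the workload to?", the sketch below estimates how many servers an autoscaler might aim for. The function name, the per-server capacity, and the target utilization are all assumptions made up for this example; real figures depend on your hardware, message rates, and payload sizes.

```javascript
// Illustrative capacity estimate for an elastic server layer.
// All names and numbers here are assumptions, not measured values.
function desiredServerCount(expectedConnections, connectionsPerServer, targetUtilization, minServers) {
  // Leave headroom on each server so that usage spikes and failed nodes
  // can be absorbed by the remaining machines.
  const usablePerServer = connectionsPerServer * targetUtilization;
  const needed = Math.ceil(expectedConnections / usablePerServer);
  // Never drop below a minimum that keeps the system redundant.
  return Math.max(needed, minServers);
}

// One million connections, 50,000 per server, servers kept half full.
console.log(desiredServerCount(1000000, 50000, 0.5, 2)); // 40
// A quiet period still keeps two servers running for redundancy.
console.log(desiredServerCount(10000, 50000, 0.5, 2)); // 2
```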

Using an architecture pattern designed for scale

When you provide virtual events and interactive, realtime digital experiences at scale over the Internet, you often won't know in advance how many participants will be involved. Therefore, your system should be designed to handle an unknown but potentially very high number of concurrent users.

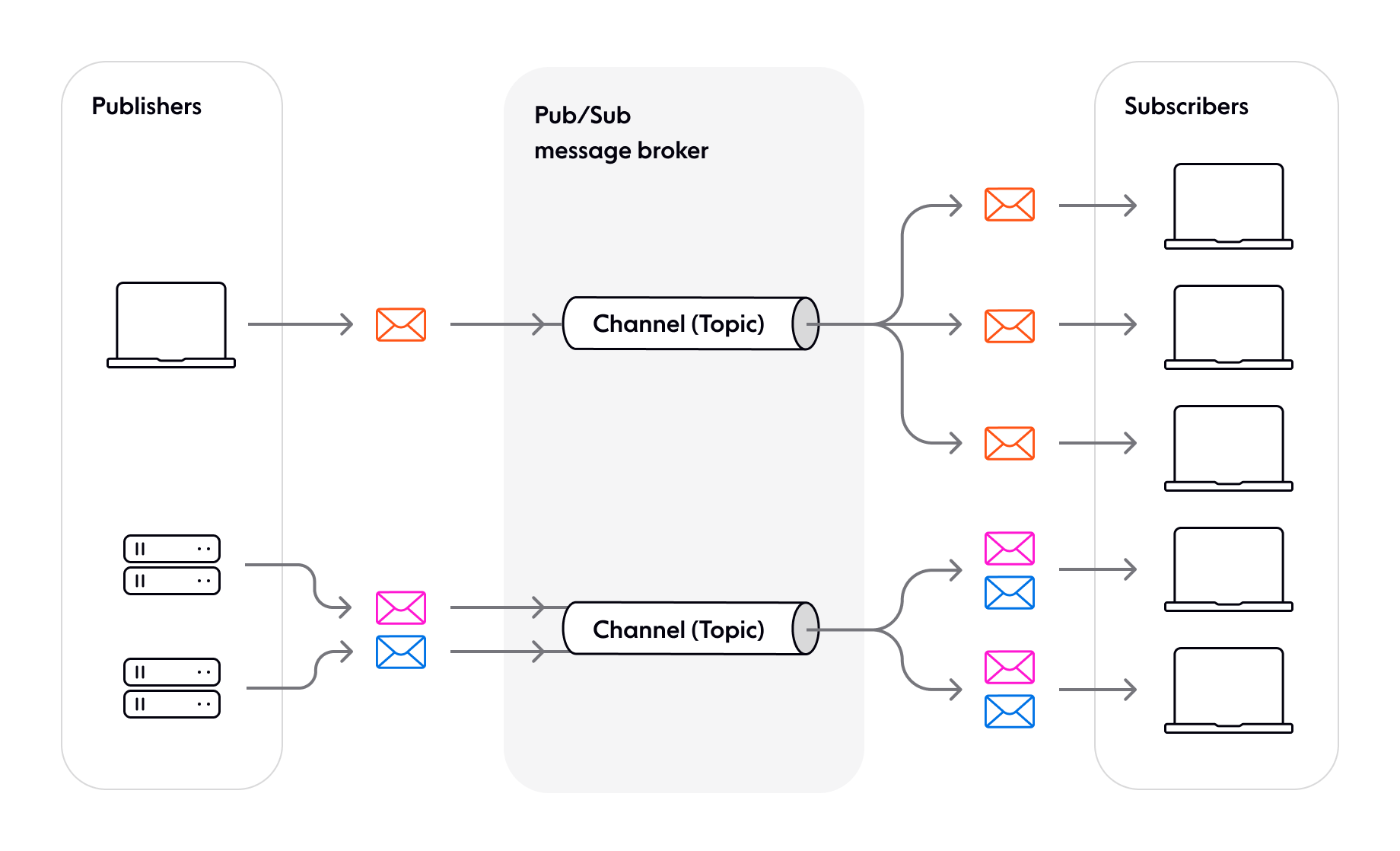

To deal with this unpredictability, you should architect your system based on a pattern designed for huge scalability. One of the most popular and dependable choices is the pub/sub pattern.

In a nutshell, pub/sub provides a framework for exchanging messages between publishers (typically your server) and subscribers (end-user devices in our context). Publishers and subscribers are unaware of each other, as they are decoupled by a message broker, which usually groups messages into channels (or topics). Publishers send messages to channels, while subscribers receive messages by subscribing to relevant channels.

The pub/sub system's decoupled nature helps ensure your apps can theoretically scale to limitless subscribers. A significant advantage of adopting the pub/sub pattern is that you often have only one component that has to deal with scaling WebSocket connections - the message broker. As long as your message broker can scale predictably, it's unlikely you'll have to add additional components or make any other changes to your system to deal with the unpredictable number of concurrent users connecting over WebSockets.
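To make the decoupling concrete, here is a minimal in-memory sketch of a broker with channels. The `Broker` class and its method names are hypothetical and exist only for illustration; a production broker would deliver messages over WebSocket connections rather than local callbacks, and would run across many machines.

```javascript
// Minimal in-memory pub/sub broker sketch. Names are hypothetical.
class Broker {
  constructor() {
    this.channels = new Map(); // channel name -> Set of subscriber callbacks
  }

  subscribe(channelName, callback) {
    if (!this.channels.has(channelName)) {
      this.channels.set(channelName, new Set());
    }
    this.channels.get(channelName).add(callback);
    // Return a function that removes this subscription.
    return () => this.channels.get(channelName).delete(callback);
  }

  publish(channelName, message) {
    const subscribers = this.channels.get(channelName);
    if (!subscribers) return 0; // nobody has ever subscribed to this channel
    for (const callback of subscribers) {
      callback(message);
    }
    return subscribers.size; // number of subscribers the message reached
  }
}

const broker = new Broker();
const received = [];
const unsubscribe = broker.subscribe('scores', (msg) => received.push(msg));
broker.subscribe('chat', (msg) => received.push('chat: ' + msg));

broker.publish('scores', 'Game point'); // reaches the 'scores' subscriber only
unsubscribe();
broker.publish('scores', 'Set point'); // no subscribers left on 'scores'
console.log(received); // [ 'Game point' ]
```

Note that the publisher never holds a reference to any subscriber. It only knows the channel name, which is what allows the broker to be scaled independently of both sides.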

Let's now look at a real-life example to see the advantages of using the pub/sub pattern. Tennis Australia wanted a solution that would allow them to provide realtime scores, updates, and commentary to tennis fans browsing the Australian Open website. Tennis Australia had no way of knowing how many client devices could subscribe to updates at any given moment.

To keep engineering complexity to a minimum, Tennis Australia decided to use Ably as an Internet-facing pub/sub message broker. All they have to do is publish a message to an Ably channel whenever an event takes place (such as a rally being won). Publishing a message might look something like this:

var ably = new Ably.Realtime('API_KEY');

var channel = ably.channels.get('tennis-score-updates');

// Publish a message to the tennis-score-updates channel

channel.publish('score', 'Game Point!');

Ably then broadcasts the message to all clients that are subscribed to the channel over WebSockets; in Tennis Australia's case, the number of concurrent subscribers can be in excess of one million.

Note that Ably channels provide message filtering and allow you to shard data into smaller, more manageable streams. For example, you might have a channel containing updates for each player or each game.

While pub/sub is an excellent choice from a scalability perspective, it's worth mentioning that most pub/sub solutions come with challenges and limitations of their own, such as message delivery issues (guaranteed delivery, ordering, exactly-once semantics). Such shortcomings usually stem from the decoupled nature of pub/sub, and you will need to find ways or build mechanisms to mitigate them.

Making your system fault-tolerant

When you're building virtual event platforms and interactive, engaging apps with WebSockets, providing reliable, uninterrupted experiences that match and exceed user expectations is crucial. To satisfy this stringent requirement, you must think about engineering dependability and fault tolerance in your system.

As a quick definition, fault tolerance is the ability of a system to continue to be dependable (both available and reliable) in the presence of certain component or subsystem failures.

Availability can be loosely thought of as the assurance of uptime; reliability can be thought of as the quality of that uptime — that is, assurance that functionality and user experience are preserved as effectively as possible in spite of adversity.

Fault-tolerant systems tolerate faults. They're designed to treat failures as routine and to mitigate the impact of adverse circumstances, ensuring the system remains dependable. When building systems designed for scale, such as virtual event platforms, you must start from the assumption that component failures will happen sooner or later. It's vital that when these failures occur, your system has enough redundancy to continue operating.

To make your system fault-tolerant, you need to ensure it's redundant against instance or even datacenter failures. This implies distributing your infrastructure across multiple availability zones in the same region. You might want to take it even further and have your infrastructure distributed across multiple regions. This way, even in the event of a region failure, your system would still be operational.

Of course, building a distributed, fault-tolerant system that can dependably scale to handle thousands or even millions of WebSocket connections is a hard challenge, which involves significant engineering and DevOps efforts and infrastructure-related costs. Most of the time, it's more practical to offload at least parts of this complex burden to a fully-managed battle-tested solution.

Fallback transports

Despite widespread platform support, WebSockets suffer from some networking issues. Here are some of the problems you may come across:

Some proxies don't support the WebSocket protocol or terminate persistent connections.

Some corporate firewalls block specific ports, such as 443 (the standard HTTPS port, which is also used for secure WebSocket connections).

WebSockets are still not entirely supported across all browsers.

Imagine you've developed an interactive platform with WebSockets that delivers features such as interactive presentations, Q&As, virtual quizzes & polls, and breakout rooms & chat. Your product attracts interest from businesses and organizations of all types and sizes, as it's ideal for workshops, training sessions, and daily meetings. So what do you do to ensure you can deliver your features to customers, knowing that you may not be able to use WebSockets in all situations?

If you foresee clients connecting from within corporate firewalls or otherwise tricky sources, you most likely need to consider supporting fallback transports, such as XHR streaming, XHR polling, or long polling. Developing your own fallback capability is a complex process that takes a lot of time and resources. In most cases, to keep engineering complexity to a minimum, you are better off using an existing WebSocket-based solution that includes fallback options.

However, it's not enough to only have fallback capabilities. In the context of scale, another essential thing to consider is the impact fallbacks have on the availability of your system. Let's assume you have tens of thousands of concurrent users using your interactive platform, and there's an incident that causes a significant proportion of the WebSocket connections to fall back to long polling.

Not only is handling tens of thousands of concurrent WebSocket connections a challenge in itself, but it's further amplified by falling back to long polling. There are some notable differences between WebSockets and long polling. The latter is much more resource-intensive on servers and comes with additional complexity in its implementation.

When you have tens of thousands of WebSocket connections simultaneously falling back to long polling (or any other similar transport), your scalability problem can increase by a further order of magnitude. To ensure your system's availability and uptime, your server layer needs to be elastic and have enough capacity to deal with the increased load.

Handling reconnections with continuity

It’s common for devices to experience changing network conditions. Devices might switch from a mobile data network to a Wi-Fi network, go through a tunnel, or experience intermittent network issues. Scenarios like these may lead to WebSocket connections being dropped, and they will have to be re-established.

For some use cases, data integrity is crucial, and once a WebSocket connection is re-established, the stream of data must resume precisely where it left off. Think, for example, of features like live chat, where missing messages due to a disconnection or receiving them out of order leads to a poor user experience and causes confusion and frustration.

If resuming a stream exactly where it left off after brief disconnections is important to your use case, your system needs to be stateful, and you need a resilient storage strategy. Here are some things you’ll need to consider:

Caching messages in front-end memory. How many messages do you store, and for how long?

Moving data to persistent storage. Do you need to transfer data to disk? If so, where do you store it, and for how long? How will clients access that data when they reconnect?

How does the stream resume? When a client reconnects, how do you know which stream to resume and where exactly to resume it from? Do you need to use a connection ID to establish where a connection broke off? Who needs to keep track of the connection breaking down - the client or the server?
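One possible way to answer these questions is sketched below: the server assigns each message an increasing serial number and keeps a bounded buffer of recent messages, while the client remembers the last serial it received and presents it when reconnecting. The `ResumableChannel` class, its method names, and the buffer size are assumptions made for this example; it keeps everything in memory and does not cover persistent storage.

```javascript
// Sketch of server-side stream resumption. Names are hypothetical.
// Each message gets an increasing serial number; a bounded buffer keeps the
// most recent messages so a reconnecting client can catch up.
class ResumableChannel {
  constructor(maxBuffered) {
    this.maxBuffered = maxBuffered;
    this.nextSerial = 1;
    this.buffer = []; // oldest first: { serial, data }
  }

  publish(data) {
    const message = { serial: this.nextSerial++, data };
    this.buffer.push(message);
    if (this.buffer.length > this.maxBuffered) {
      this.buffer.shift(); // drop the oldest message
    }
    return message;
  }

  // Returns the messages the client missed, or signals that continuity was
  // lost because some of the missed messages are no longer buffered.
  resume(lastSeenSerial) {
    const oldest = this.buffer.length > 0 ? this.buffer[0].serial : this.nextSerial;
    if (lastSeenSerial + 1 < oldest) {
      return { continuous: false, messages: [] };
    }
    const messages = this.buffer.filter((m) => m.serial > lastSeenSerial);
    return { continuous: true, messages };
  }
}

const channel = new ResumableChannel(3);
['a', 'b', 'c', 'd', 'e'].forEach((data) => channel.publish(data));

// The client saw serial 3 before a brief disconnection.
console.log(channel.resume(3).messages.map((m) => m.data)); // [ 'd', 'e' ]
// The client was away too long; serial 2 has already been dropped.
console.log(channel.resume(1).continuous); // false
```

In this sketch the client tracks its own position and the server only has to keep recent history, which keeps per-connection state on the server small. When continuity is lost, the client would need another route to catch up, such as fetching history from persistent storage.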

Other challenges you need to think about

Many aspects need to be considered when scaling WebSockets to power apps that deliver virtual events and interactive realtime experiences to millions of users. In addition to the topics we've covered so far in this article, here are some other things you will need to think about:

Load balancing strategy. If you scale beyond a single server, you need to use load balancers to distribute WebSocket connections across multiple machines. Effective load balancing itself is a challenge and raises many questions you will have to answer. Should you use sticky sessions or non-sticky load balancing? What allocation method (e.g., round-robin, least connections) is the best for your specific use case? How do you make your load balancers elastic? How do you route traffic away from unhealthy data centres?

Managing backpressure. You must consider the volume of data going through your system, as this has broad ramifications and impacts its dependability significantly. One of the related challenges you'll have to face is backpressure - scenarios where your server is sending data over WebSockets faster than it can be processed on the client-side. There are several mechanisms you can use to manage backpressure, such as controlling the rate at which you're sending data to consumers, buffering, or conflation/delta compression.

Terminating connections. Occasionally, you might find that some WebSocket connections are hogging all of a server's resources. Or you might have idle connections that could be dropped to decrease the load. What load shedding mechanism are you going to use, and how will you progressively allow new WebSocket connections to be established?

Connection detection. By its very nature, a WebSocket connection is persistent and always-on. You periodically need to check if it's "alive" by using heartbeat mechanisms such as TCP keepalives or ping/pong control frames. It's important to note that sending heartbeats has a direct impact on the scalability and dependability of your system, especially when you're handling millions of concurrent WebSocket connections. The more frequently you send heartbeats, the more load they create, and your system must have the capacity to deal with it.

System abuse. You need to take the necessary precautions and ensure your system can deal with system abuse, such as DoS attacks. These incidents don't necessarily have to be the result of malicious actions. They could just as well be legitimate operations but at unsustainable rates. Regardless, a massive number of new connections being made in a very short space of time can seriously hinder your system's performance and availability, so you need to think about implementing exponential backoff & rate-limiting mechanisms.
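As an example of the last point, here is a minimal sketch of client-side reconnect delays using exponential backoff with random jitter. The function names and the default values (a 500 ms base and a 30 second cap) are illustrative assumptions, not recommendations from any particular library.

```javascript
// Sketch of reconnect delays with exponential backoff and jitter.
// Names and default values are illustrative assumptions.
function backoffCeilingMs(attempt, baseMs = 500, maxMs = 30000) {
  // Double the ceiling with each failed attempt, up to maxMs.
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

function reconnectDelayMs(attempt, random = Math.random) {
  // Pick a random delay below the ceiling so that clients disconnected at
  // the same moment do not all try to reconnect at the same instant.
  return Math.floor(random() * backoffCeilingMs(attempt));
}

console.log([0, 1, 2, 3, 10].map((n) => backoffCeilingMs(n)));
// [ 500, 1000, 2000, 4000, 30000 ]
```

Spreading reconnection attempts out in this way helps prevent a brief outage from turning into a surge of simultaneous new connections once service is restored.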

Without a doubt, scaling WebSockets while also providing dependable services to your customers is far from a walk in the park. In some scenarios, if your use case only needs subscribe-only messaging rather than bidirectional messaging (e.g., streaming live score updates), a realtime protocol like Server-Sent Events (SSE) might be a more suitable solution. To weigh up the two options, have a read of our blog post comparing WebSockets and SSE.

Final thoughts

Hopefully, this article will help you focus on the right things you need to consider when building virtual event platforms & apps with WebSockets that scale to support millions of concurrent users. As you have seen, there are many challenges you will need to overcome in order to meet unprecedented demand while at the same time providing dependable and uninterrupted experiences that satisfy attendees & event organizers.

Here at Ably, we’ve developed an enterprise-grade pub/sub messaging platform that makes it easy to efficiently design, quickly ship, and seamlessly scale critical realtime functionality with WebSockets across every major web and mobile platform. Every day, we deliver billions of realtime messages to millions of users for thousands of companies at consistently low latencies over a secure, reliable, and highly available global edge network.

As a fully-managed platform, Ably enables event companies building digital offerings to simplify engineering, minimize DevOps overhead, reduce infrastructure costs, increase development velocity, and scale to meet any demand. Organizations such as Hopin, Mentimeter, Tennis Australia, Vitac, or Onedio trust us with their realtime needs and leverage Ably to deliver virtual events & interactive functionality at scale - without managing infrastructure.

If you want to talk more about WebSockets or if you’d like to find out more about Ably and how we can help you build scalable virtual event platforms & apps, get in touch or sign up for a free account.
