When it comes to WebSockets vs HTTP, deciding which one to use isn’t always clear-cut. Which one is better? Which one should you use for your app?

But the answer isn’t necessarily one or the other: developers often use both WebSockets and HTTP in the same app, depending on the scenario. The more important question to ask is: how can I decide whether WebSockets or HTTP is the right communication protocol for a specific type of communication?

Here’s everything you need to know.

WebSockets vs HTTP at a glance

On this page, we explore how these technologies work and how they fare when it comes to implementing realtime communication, and we offer specific guidance on which to use in which scenarios.

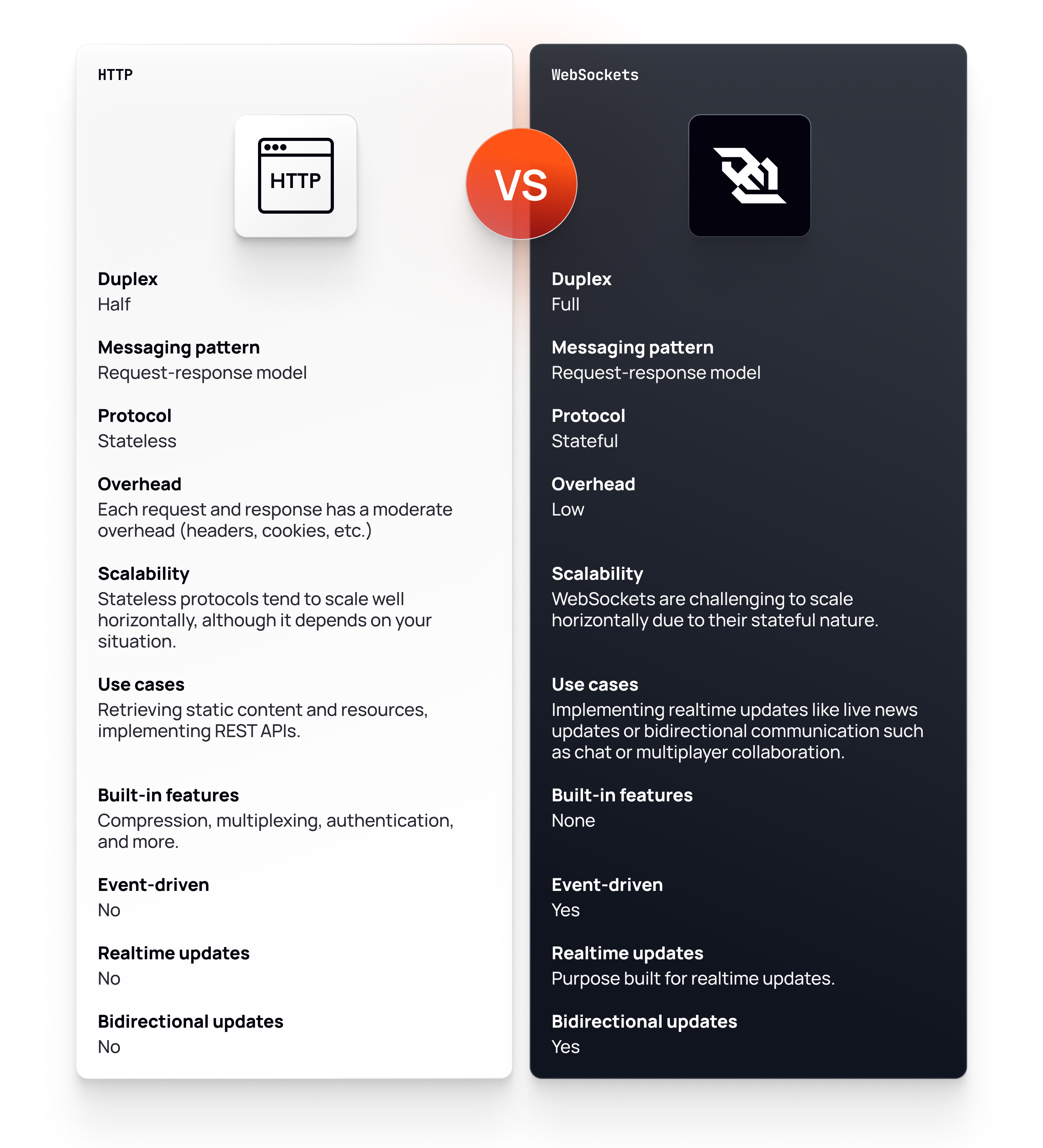

In case you're looking for a high-level comparison, here are the key differences at a glance:

WebSockets vs HTTP table

Read on to learn more about how these two popular protocols compare in detail.

What is HTTP?

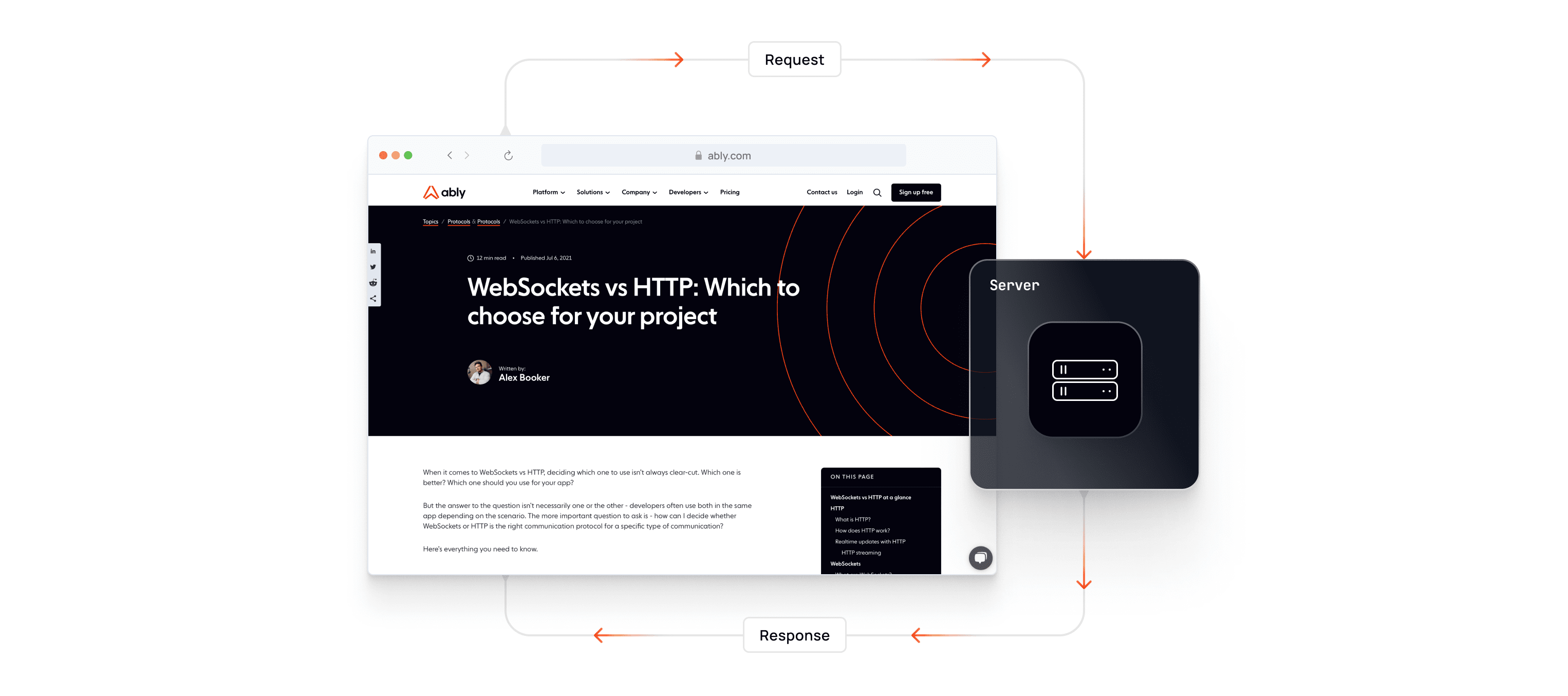

Fundamentally, HTTP is a communication protocol that enables clients (such as a web browser) and servers to share information, for example HTML documents, images, application data (JSON), and more.

It’s hard to think of a better example of HTTP in action than this page you’re reading right now.

When you loaded this page, your browser made an HTTP request, to which the server responded with the HTML document you’re currently reading.

How does HTTP work?

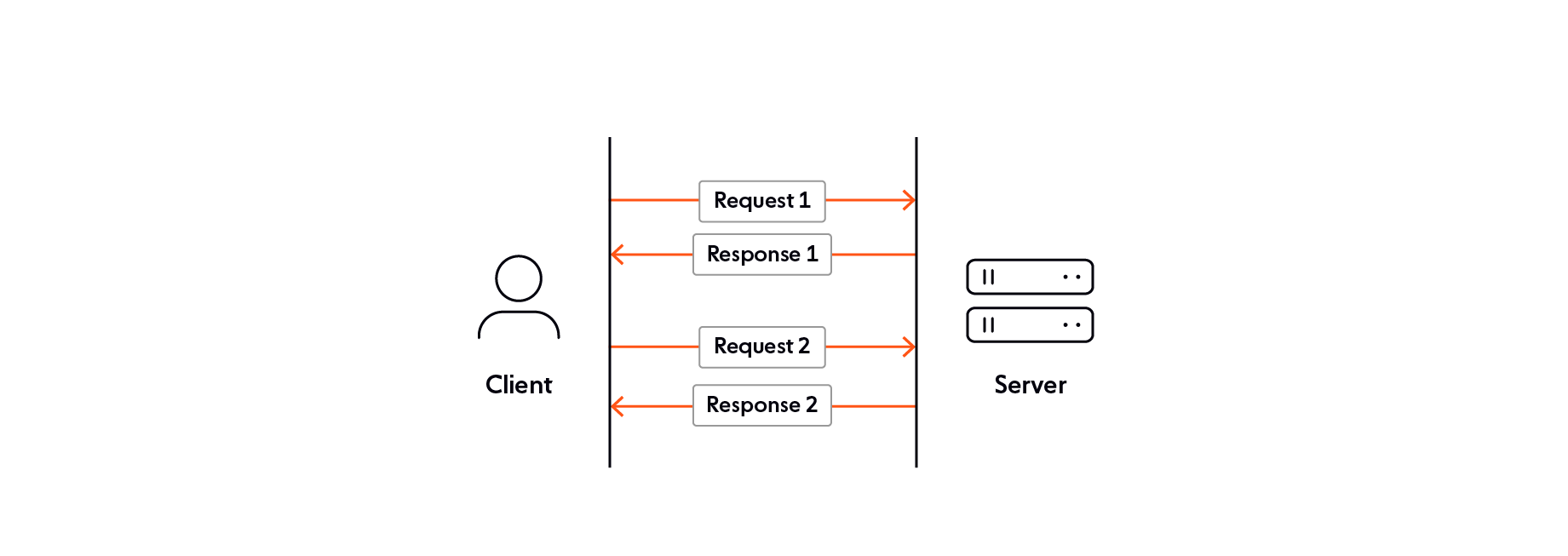

HTTP follows the request-response messaging pattern: the client makes a request, and the web server sends a response that includes not only the requested content but also metadata about the response, such as a status code and headers.

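In the simplest model, a short-lived connection to the server is opened for each request and then closed. In practice, HTTP/1.1 keep-alive and HTTP/2 let a browser reuse one connection for many requests, but each request is still processed independently.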
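The request itself is just structured text. As a minimal sketch, assuming HTTP/1.1 (HTTP/2 uses a binary framing layer instead) and a hypothetical helper called formatRequest, this is roughly what a browser sends when it asks for a page:

```javascript
// Build the raw text of an HTTP/1.1 request as it travels over the wire.
// `formatRequest` is a hypothetical helper for illustration only.
function formatRequest(method, path, host, headers = {}) {
  // Request line first, then the mandatory Host header
  const lines = [`${method} ${path} HTTP/1.1`, `Host: ${host}`];
  // Every request carries its own metadata in headers
  for (const [name, value] of Object.entries(headers)) {
    lines.push(`${name}: ${value}`);
  }
  // Lines end with CRLF; an empty line marks the end of the headers
  return lines.join("\r\n") + "\r\n\r\n";
}

const request = formatRequest("GET", "/news", "example.com", { Accept: "text/html" });
```

The server answers in the same text format, starting with a status line such as HTTP/1.1 200 OK, followed by its own headers and then the body.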

HTTP examples

Web browsing.

Downloading images, videos, or binary files like desktop applications.

Making an asynchronous request to an API using the fetch function in JavaScript.
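As a sketch of that last example, here is a small wrapper around fetch. The getJson name, the fetchImpl parameter (added only so the function can be exercised without a network), and the endpoint in the usage comment are all hypothetical:

```javascript
// Fetch JSON from an API and fail loudly on non-2xx responses.
// `fetchImpl` defaults to the built-in fetch (browsers, Node 18+).
async function getJson(url, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url, { headers: { Accept: "application/json" } });
  if (!response.ok) {
    // Every HTTP response carries a status code describing the outcome
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.json();
}

// Usage (hypothetical endpoint):
// getJson("https://api.example.com/headlines").then((data) => console.log(data));
```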

Because each HTTP request contains all the information necessary to process it, there is no need for the server to keep track of connections and requests.

This stateless design is advantageous because it makes it possible to deploy additional servers to handle requests without needing to synchronize session state between them.
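To illustrate why statelessness helps, here is a sketch of the simplest load-balancing strategy, round-robin. It only works because any server can handle any request; the server names are hypothetical:

```javascript
// Because each HTTP request is self-contained, a load balancer can send it
// to any server. Round-robin simply rotates through the list.
function createRoundRobin(servers) {
  let next = 0;
  return function pickServer() {
    const server = servers[next];
    next = (next + 1) % servers.length; // wrap around at the end
    return server;
  };
}

const pick = createRoundRobin(["app-1", "app-2", "app-3"]);
const assignments = [pick(), pick(), pick(), pick()];
// assignments → ["app-1", "app-2", "app-3", "app-1"]
```

A long-lived WebSocket connection, by contrast, stays pinned to whichever server accepted it, so rotation like this only applies when a new connection is opened.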

Additionally, because every request is self-contained, it becomes straightforward to route messages through proxies to perform value-added functions such as caching, encryption, and compression.
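Caching is a good example of what this enables. The sketch below, with a hypothetical respond function, resource, and max-age, shows HTTP revalidation: the response carries ETag and Cache-Control headers, and a client or proxy that already holds a copy sends If-None-Match to get a bodiless 304 Not Modified instead of the full resource:

```javascript
// Simplified server-side revalidation. Header names are lower-cased on the
// request side, as Node's http module does.
function respond(requestHeaders, resource) {
  const headers = {
    ETag: resource.etag,
    // Browsers and shared proxies may reuse this response for 60 seconds
    "Cache-Control": "public, max-age=60",
  };
  if (requestHeaders["if-none-match"] === resource.etag) {
    return { status: 304, headers, body: null }; // client's copy is still valid
  }
  return { status: 200, headers, body: resource.body };
}
```

This is deliberately simplified: real If-None-Match headers can list several ETags or use weak validators, which a production server would also need to handle.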

The downside of this stateless approach is that every request carries its own metadata (headers) and may require a new connection, incurring a small overhead.

When loading a web page or downloading a file, this overhead is negligible. However, it could have a noticeable impact on your app’s performance if you’re sending high-frequency requests with small payloads.
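To put rough numbers on that overhead, the sketch below compares the bytes of request headers with the bytes of a tiny JSON payload. The header values and payload are hypothetical, and real browsers often send more headers (cookies, user agent, and so on), so treat the ratio as illustrative:

```javascript
// Bytes of metadata sent per byte of useful payload (UTF-8 encoded).
function overheadRatio(headerText, payload) {
  const encoder = new TextEncoder();
  return encoder.encode(headerText).length / encoder.encode(payload).length;
}

// Hypothetical request headers for posting a tiny price update
const exampleHeaders =
  "POST /api/price HTTP/1.1\r\n" +
  "Host: api.example.com\r\n" +
  "Content-Type: application/json\r\n" +
  "Content-Length: 15\r\n" +
  "Accept: application/json\r\n" +
  "Authorization: Bearer <token>\r\n\r\n";
const examplePayload = '{"price":101.5}';

const ratio = overheadRatio(exampleHeaders, examplePayload); // ≈ 10.7
```

Here 160 bytes of headers carry a 15-byte payload, before counting the response headers coming back.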

Realtime updates with HTTP

This pattern, where the client makes a request and the server issues a response, works well for static resources like web pages, files, or application data.

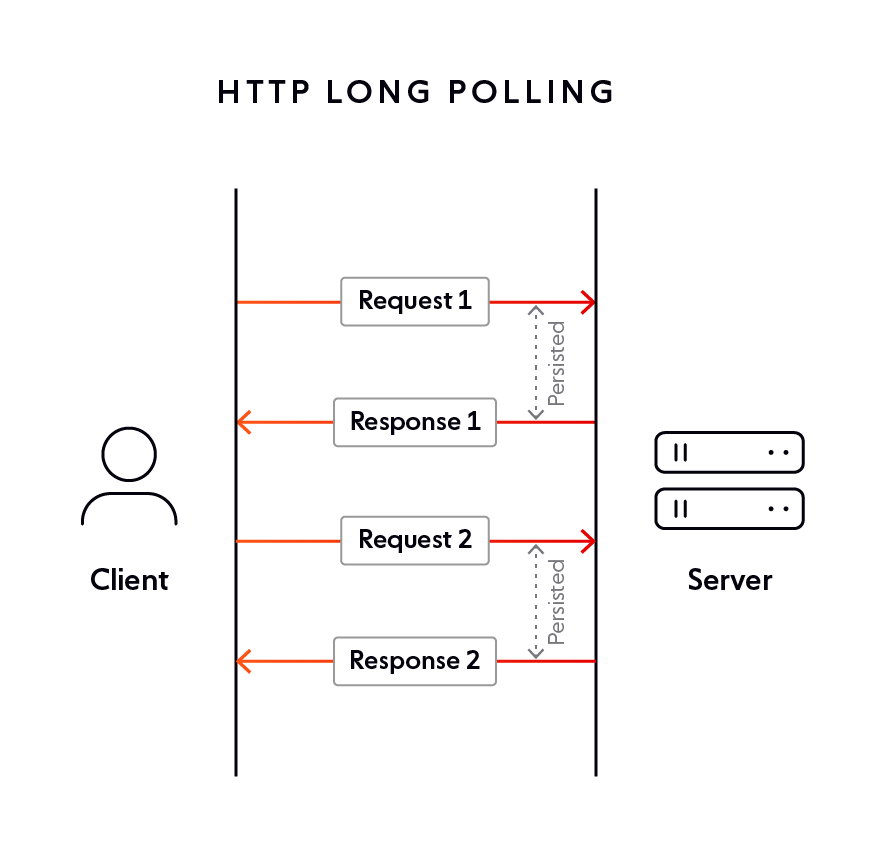

However, consider a scenario where the client doesn’t know when new information will become available.

Imagine you’re implementing a breaking news feature for the BBC, for example.

In this case, the client has no idea when the next update in the story is going to break.

Now, you could code the client to make HTTP requests at a frequent interval just in case something happens and, for a handful of clients, that might work well enough.

But suppose you have hundreds or thousands of clients (hundreds of thousands, in the case of the BBC) hammering the server with requests that yield nothing new between updates.

Not only is this a waste of bandwidth and server resources, but if the update breaks moments after the most recent request finished, it could be several seconds before the next request is sent and the user gets an update. Broadly, this approach is called HTTP polling, and it’s neither efficient nor realtime!
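A polling client like this can be sketched in a few lines. The URL, the { id, headline } response shape, and the helper names below are hypothetical; the point is that most requests return nothing new, and that server load grows linearly with the number of clients:

```javascript
// Minimal HTTP polling client (sketch). `fetchImpl` defaults to built-in fetch.
function startPolling(url, intervalMs, onUpdate, fetchImpl = globalThis.fetch) {
  let lastSeenId = null;

  // One poll: fetch the latest item and report it only if it is new
  async function pollOnce() {
    const response = await fetchImpl(url);
    if (!response.ok) return;
    const latest = await response.json();
    if (latest.id !== lastSeenId) {
      lastSeenId = latest.id;
      onUpdate(latest);
    }
  }

  // Most polls find nothing new, but each one still costs a full request
  const timer = setInterval(() => {
    pollOnce().catch((err) => console.error("poll failed", err));
  }, intervalMs);

  return { pollOnce, stop: () => clearInterval(timer) };
}

// Back-of-the-envelope load: every client sends one request per interval
function pollingRequestsPerSecond(clients, intervalMs) {
  return (clients * 1000) / intervalMs;
}
```

With a 5-second interval, 100,000 clients generate 20,000 requests per second, and an update that lands just after a poll can still wait almost the full 5 seconds before anyone sees it.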

Instead, it would be better if the server could push data to the client when new information becomes available, but this fundamentally goes against the grain of the request-response pattern.

Or does it?

HTTP streaming

Although HTTP fundamentally follows the request-response pattern, there is a workaround available to implement realtime updates.

Instead of sending a complete response, the server issues a partial HTTP response and keeps the underlying connection open.

Building on the breaking news example from the previous section, with HTTP streaming, the server can append partial responses (chunks, if you like) to the response stream every time a news update breaks - the connection remains open indefinitely, enabling the server to push fresh information to the client when it becomes available with minimal latency.
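On the wire, an HTTP/1.1 streaming response is typically sent with chunked transfer encoding (HTTP/2 and HTTP/3 use their own framing instead). As a minimal sketch with a hypothetical encodeChunk helper, each chunk is its size in bytes written in hexadecimal, a CRLF, the data, and another CRLF:

```javascript
// Encode one HTTP/1.1 chunk: hex byte length, CRLF, data, CRLF.
function encodeChunk(text) {
  const size = new TextEncoder().encode(text).length; // bytes, not characters
  return `${size.toString(16)}\r\n${text}\r\n`;
}

// A zero-length chunk ends the response; it is only sent when the stream closes
const END_OF_STREAM = "0\r\n\r\n";

const firstUpdate = encodeChunk("Breaking: update 1\n");
```

In Node.js you rarely write this by hand: calling res.write() on an http.ServerResponse without setting Content-Length makes Node use chunked encoding automatically.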

We’ve written about HTTP streaming and Server-Sent Events (a standard implementation of HTTP streaming) elsewhere on the Ably website if you’re interested in learning more about how this pattern works in practice.
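Server-Sent Events standardize the content of those chunks. A sketch of a serializer for the text/event-stream format follows; formatSseEvent, plus the "/news" URL and "breaking" event name in the commented browser code, are hypothetical:

```javascript
// Serialize one Server-Sent Events message (text/event-stream format).
// Multi-line data becomes several "data:" lines; a blank line ends the event.
function formatSseEvent({ data, event, id }) {
  let out = "";
  if (event) out += `event: ${event}\n`;
  if (id !== undefined) out += `id: ${id}\n`;
  for (const line of String(data).split("\n")) {
    out += `data: ${line}\n`;
  }
  return out + "\n";
}

// Browser side (not run here): EventSource reconnects automatically and
// dispatches named events to matching listeners.
// const source = new EventSource("/news");
// source.addEventListener("breaking", (e) => console.log(e.data));
```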

With HTTP streaming, the server has to maintain the state of numerous long-lived connections, so it can no longer be considered stateless. This makes HTTP streaming harder to scale, and because each open connection is tied to the server holding it, that server becomes a single point of failure for its clients.

HTTP streaming drawbacks

HTTP streaming is a viable way to implement realtime updates; however, we can’t consider it a comprehensive realtime solution.

The main drawback of HTTP streaming compared to other realtime solutions like WebSockets is that HTTP is a half-duplex protocol. This means that, like a walkie-talkie, information can only flow over a connection in one direction at a time.

For realtime updates like breaking news or realtime graphs where updates predominantly flow one-way from the server to client, the fact that HTTP is half-duplex is unlikely to present an immediate limitation (although you may want to future-proof your messaging layer by starting with something inherently full-duplex).

However, for situations where information needs to flow in both directions simultaneously over the same connection, such as a multiplayer game, chat, or a collaborative app like Figma, HTTP is simply inappropriate, even with the advent of streaming.

For situations like these, we’ll need to look at WebSockets, an all-in-one solution for realtime updates as well as bi-directional communication.

WebSockets

What are WebSockets?

Like HTTP, WebSockets is a communication protocol that enables clients (usually web browsers, hence the name) and servers to communicate with one another.

Unlike HTTP with its request-response model, WebSockets are specifically designed to enable realtime bi-directional communication between the server and client.

This means the server can push realtime updates (like breaking news) as soon as they become available without waiting for the client to issue a request.

What’s more, WebSockets is a full-duplex protocol.

In simple terms, this means data can flow in both directions over the same connection simultaneously, making WebSockets the go-to choice for applications where the client and server are equally “chatty” and require high throughput. We’re talking about things like chat, collaborative editing, and more.

How do WebSockets work?

Even though we are comparing the two, it’s important to note that HTTP and WebSockets aren't mutually exclusive.

Generally, your application will use HTTP by default and WebSockets for realtime communication.

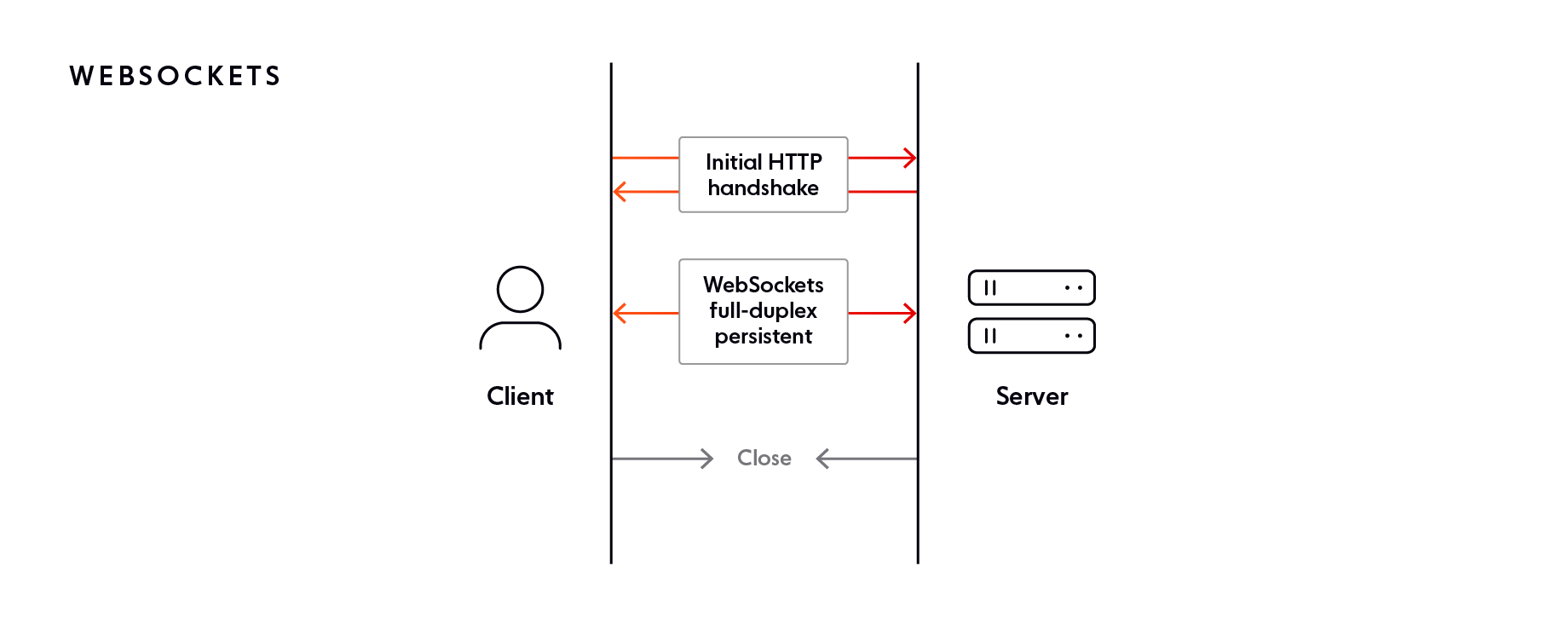

Interestingly, the way WebSockets work internally is by upgrading the HTTP connection to a WebSocket connection.

When a client opens a WebSocket connection, it first sends an HTTP request to the WebSocket server (with an "Upgrade: websocket" header) asking, in effect, “Hey! Do you support WebSockets?”

If the server responds “Yes” (with the status code 101 Switching Protocols), the HTTP connection is upgraded to a WebSocket connection. This exchange is called the opening handshake.

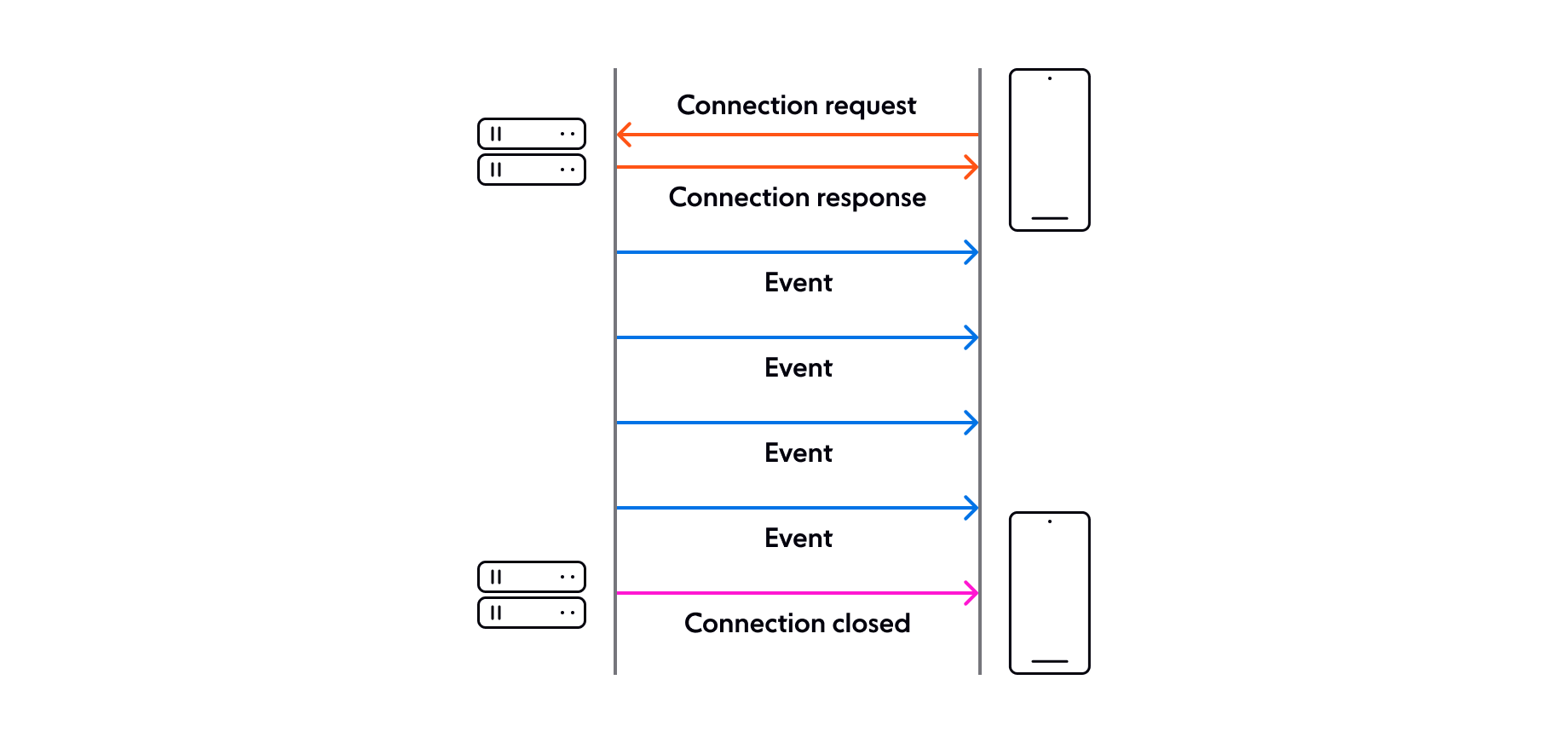

Sequence for a WebSocket connection/disconnection

It’s not something you ordinarily need to think about because most WebSocket APIs take care of this for you. Still, it illustrates the relationship between HTTP and WebSockets.

Once the connection is established, both the client and server can transmit and receive data in realtime with minimal latency until either party decides to close the connection.

Realtime updates with WebSockets

Whereas HTTP is usually handled seamlessly by the web browser (for example, when you load this page), WebSockets always require you to write custom code.

To bring the idea to life, here’s an example of WebSockets in action with JavaScript and the built-in WebSocket Web API:

```javascript
// Create WebSocket connection.
const socket = new WebSocket("ws://localhost:8080");

// Connection opened
socket.addEventListener("open", (event) => {
  socket.send("Hello Server!");
});

// Listen for messages
socket.addEventListener("message", (event) => {
  console.log("Message from server ", event.data);
});
```

Above, we call the WebSocket constructor with the URL of a WebSocket server (not shown in this post).

Note how the URL is prefixed with ws:// for “WebSocket” instead of http:// for “HTTP”. Similarly, you would use wss:// instead of https:// for encrypted (TLS) connections.
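When a page connects back to the server it was loaded from, the scheme can be derived rather than hard-coded. A minimal sketch, using a hypothetical toWebSocketUrl helper built on the standard URL class:

```javascript
// Map http: → ws: and https: → wss:, keeping host, port, and path.
function toWebSocketUrl(httpUrl) {
  const url = new URL(httpUrl);
  if (url.protocol === "https:") url.protocol = "wss:";
  else if (url.protocol === "http:") url.protocol = "ws:";
  else throw new Error(`Unsupported scheme: ${url.protocol}`);
  return url.href;
}

// In a browser, for example:
// const socket = new WebSocket(toWebSocketUrl(new URL("/live", location.href).href));
```

Deriving the scheme this way means a page served over HTTPS automatically gets an encrypted wss:// connection.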

As far as protocols go, WebSockets aren’t exactly low level, but they are flexible.

The flexibility afforded can be advantageous in specialized situations where you need fine-grained control over your WebSockets code. However, for many developers, the fact that WebSockets are quite barebones is actually a burden because it creates a lot of extra work.

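The browser WebSocket API doesn’t reliably detect dropped connections or reconnect on its own. The protocol defines ping and pong control frames, but browsers don’t let JavaScript send them, so you need to implement something called a heartbeat yourself, along with your own reconnection logic.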
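As a sketch of what that involves, assuming a JSON {"type":"ping"}/{"type":"pong"} message format that your server would also have to implement (it is not part of the WebSocket protocol), a client-side heartbeat plus a capped exponential backoff for reconnecting might look like this. The class, function names, and timing defaults are hypothetical:

```javascript
// Application-level heartbeat driven by a caller-supplied clock.
class Heartbeat {
  constructor(send, { intervalMs = 15000, timeoutMs = 5000 } = {}) {
    this.send = send;
    this.intervalMs = intervalMs;
    this.timeoutMs = timeoutMs;
    this.lastPingAt = -Infinity;
    this.awaitingPong = false;
  }

  // Call on a timer. Returns false once a ping has gone unanswered for
  // timeoutMs, meaning the connection should be treated as dead.
  check(now) {
    if (this.awaitingPong) {
      return now - this.lastPingAt < this.timeoutMs;
    }
    if (now - this.lastPingAt >= this.intervalMs) {
      this.send(JSON.stringify({ type: "ping" }));
      this.lastPingAt = now;
      this.awaitingPong = true;
    }
    return true;
  }

  // Call when a {"type":"pong"} message arrives from the server
  receivedPong() {
    this.awaitingPong = false;
  }
}

// Exponential backoff between reconnection attempts, capped at maxMs
function reconnectDelayMs(attempt, baseMs = 1000, maxMs = 30000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}
```

In a browser you would call check(Date.now()) from setInterval, close the socket when it returns false, and open a new one after reconnectDelayMs(attempt). Many libraries also add random jitter so that thousands of clients don't reconnect in lockstep.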

Because WebSocket traffic stops being HTTP once the handshake completes, you can’t benefit from the value-added features HTTP proxies provide, such as caching or compression.

You need to decide your own way to implement authentication and error codes, whereas with HTTP both are fairly standardized (for example, the Authorization header and status codes such as 401 Unauthorized).
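To show the kind of convention you end up inventing, here is a server-side sketch under one common but hypothetical scheme: the client's first message must be {"type":"auth","token":"..."}, and otherwise the server closes the connection with an application-defined close code. RFC 6455 reserves codes 4000-4999 for private use, so the specific codes, names, and message shape below are our own assumptions:

```javascript
// Application-defined close codes (private-use range 4000-4999)
const CloseCodes = {
  AUTH_REQUIRED: 4001,
  AUTH_FAILED: 4003,
};

// Decide what the server should do with the first message on a connection.
// `isValidToken` stands in for whatever token check your backend uses.
function handleFirstMessage(raw, isValidToken) {
  let message;
  try {
    message = JSON.parse(raw);
  } catch {
    message = null; // not JSON at all
  }
  if (message === null || typeof message !== "object" ||
      message.type !== "auth" || typeof message.token !== "string") {
    return { action: "close", code: CloseCodes.AUTH_REQUIRED, reason: "Auth message required" };
  }
  if (!isValidToken(message.token)) {
    return { action: "close", code: CloseCodes.AUTH_FAILED, reason: "Invalid token" };
  }
  return { action: "accept" };
}
```

The server would pass the code and reason to whatever close method its WebSocket library provides, and the browser client can read them from the code and reason properties of the CloseEvent it receives.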

In many ways, you end up reinventing the wheel as you build out a comprehensive WebSocket messaging layer and that takes time - time you would probably prefer to spend building something unique to your app.

Although the WebSocket Web API used in the code example above gives us a handy way to illustrate how WebSockets work, generally, developers sidestep it in favor of open source libraries that implement all those things like authentication, error handling, compression, etc., for you, as well as patterns like pub/sub or “rooms” to give you a simple way to route messages.
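To show what a "rooms" abstraction boils down to, here is a single-process sketch. The Rooms class and the { room, message } envelope are hypothetical, and clients are assumed to be any object with a send(string) method, such as a server-side socket:

```javascript
// Minimal in-memory pub/sub "rooms" router (single process only).
class Rooms {
  constructor() {
    this.rooms = new Map(); // room name -> Set of clients
  }

  join(room, client) {
    if (!this.rooms.has(room)) this.rooms.set(room, new Set());
    this.rooms.get(room).add(client);
  }

  leave(room, client) {
    const members = this.rooms.get(room);
    if (!members) return;
    members.delete(client);
    if (members.size === 0) this.rooms.delete(room); // free empty rooms
  }

  // Send a message to every client in the room; returns how many received it
  publish(room, message) {
    const members = this.rooms.get(room) ?? new Set();
    const payload = JSON.stringify({ room, message });
    for (const client of members) client.send(payload);
    return members.size;
  }
}
```

Because the membership map lives in one process's memory, this stops working as soon as clients are spread across several servers, which is the backend problem described below.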

These WebSocket libraries tend to focus on the frontend. On the backend, you’re still working with a stateful protocol, which makes it tricky to spread work across servers to isolate your app from failures that might lead to congestion (high latency) or even outages.

While open source libraries provide a comprehensive frontend solution, there’s usually more work to do on the server if you want to ensure your realtime code is robust and reliable with low latency.

Increasingly, developers rely on realtime experience platforms like Ably that solve all the annoying data and infrastructure headaches so you can focus on building a fantastic realtime experience for your users.

Comparing HTTP vs WebSockets

A helpful way to think about the difference between HTTP and WebSockets is mail vs a phone call.

HTTP: When Tim Berners-Lee invented the World Wide Web and HTTP in 1989, the big idea was to exchange documents between computers over a network. In that sense, HTTP is a lot like mail: a client (typically a web browser) requests an HTML document from the server, and the server sends it back to the client’s address. If the client wants more information, it makes another request, and the server sends something else.

WebSockets: Imagine a web app like chat, a collaborative editor, or a multiplayer game where information really needs to flow simultaneously in both directions. To draw on the previous analogy, mail is dependable, but it’s slow and one-way. When you require information to flow in both directions, a phone call is the superior choice, and that’s what WebSockets provide: a continuous, two-way dialogue between the server and client so that information may flow in realtime with high throughput in either direction.

Which to choose: WebSockets or HTTP?

When HTTP is better

Requesting resources: When the client needs the current state of a resource and does not require realtime updates, HTTP would be a sound choice.

Requesting cacheable resources: Resources that are frequently accessed yet change infrequently can benefit from HTTP caching. WebSocket messages can’t be cached by browsers or HTTP proxies.

Implementing a REST API: HTTP methods such as GET, POST, PUT, and DELETE align perfectly with the principles of REST.

Synchronizing events: The request-response pattern is well-suited to operations that require synchronization or need to execute in a specific order. This is because HTTP requests are always accompanied by a response that tells you the result of the operation (be that “200 OK” or not). By comparison, WebSockets offer no guarantee that a message will be acknowledged in any form out of the box.

Maximizing compatibility: HTTP is ubiquitous and widely supported. In increasingly rare situations, misconfigured or outdated enterprise firewalls can interfere with the WebSocket upgrade handshake, preventing a connection from being established. In such cases, a fallback to HTTP streaming or long polling is required.
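When you do need HTTP-style confirmation over a WebSocket, you have to build it yourself. A minimal sketch, assuming a message convention of our own ({ id, payload } out, { ackFor: id } back) that the server must also follow, with hypothetical function names and a default timeout:

```javascript
// Request/acknowledgement layer on top of any send(string) function.
function createAckChannel(send, timeoutMs = 5000) {
  let nextId = 1;
  const pending = new Map(); // id -> { resolve, timer }

  // Send a payload; the promise settles when the peer acks or time runs out
  function request(payload) {
    const id = nextId++;
    return new Promise((resolve, reject) => {
      const timer = setTimeout(() => {
        pending.delete(id);
        reject(new Error(`No acknowledgement for message ${id}`));
      }, timeoutMs);
      pending.set(id, { resolve, timer });
      send(JSON.stringify({ id, payload }));
    });
  }

  // Call from the socket's "message" handler; returns true if it was an ack
  function handleMessage(raw) {
    const message = JSON.parse(raw);
    const entry = pending.get(message.ackFor);
    if (!entry) return false;
    clearTimeout(entry.timer);
    pending.delete(message.ackFor);
    entry.resolve(message);
    return true;
  }

  return { request, handleMessage };
}

// Browser wiring (not run here):
// const channel = createAckChannel((m) => socket.send(m));
// socket.addEventListener("message", (e) => channel.handleMessage(e.data));
```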

When WebSockets are better

Realtime updates: When a client needs to be notified when new information becomes available, WebSockets are generally a good fit.

Bi-directional communication: WebSockets provide low-latency bi-directional communication, allowing instant data transfer between the client and server. Unlike HTTP, which requires a new request for every server response, WebSockets maintain a persistent connection, making them ideal for realtime applications like chat, gaming, and live updates.

High performance and low latency: WebSockets have less overhead compared to HTTP because, once the connection is open, each message carries only a few bytes of framing instead of a full set of HTTP headers, and no new handshake is needed per message. This efficiency leads to lower data transfer costs and improved performance for realtime situations like chat and live data updates.
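The framing overhead is small and easy to calculate. Per RFC 6455, a frame header is 2 bytes, plus 2 or 8 bytes of extended length for larger payloads, plus a 4-byte masking key on frames sent by the client. A sketch (hypothetical function name; extensions such as compression, and TCP/TLS overhead, are ignored):

```javascript
// Header size of a single WebSocket frame (RFC 6455, section 5.2).
function frameHeaderBytes(payloadLength, fromClient) {
  let bytes = 2; // FIN/opcode byte + mask bit/7-bit length byte
  if (payloadLength > 65535) bytes += 8; // 64-bit extended length
  else if (payloadLength > 125) bytes += 2; // 16-bit extended length
  if (fromClient) bytes += 4; // client-to-server frames must be masked
  return bytes;
}
```

So a small chat message costs 6 bytes of framing from the browser and 2 bytes back from the server, whereas HTTP request headers can easily run to hundreds of bytes.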

WebSocket vs HTTP FAQ

Is WebSockets a replacement for HTTP?

No, WebSockets do not replace HTTP. While both communication protocols are used in web development, they each serve a different purpose. HTTP follows the request-response model and is mainly used to retrieve static resources like web pages or make stateless API requests. WebSockets, on the other hand, enable realtime bi-directional communication between client and server - they are ideal for realtime updates or applications that require continuous data exchange.

Do people still use WebSockets?

WebSockets are still widely used and remain a popular choice for implementing realtime experiences in web development. Popular applications like Slack and Uber leverage WebSockets to enable realtime data exchange.

While new technologies and protocols like WebTransport continue to emerge, WebSockets have proven to be reliable and effective for realtime scenarios, and developers often choose them for their simplicity and widespread browser support.

HTTP/2 vs WebSockets?

HTTP/2 introduced several features such as multiplexing many concurrent requests and responses over a single connection, and header compression. Because multiplexing removes the tight per-domain connection limit browsers apply to HTTP/1.1, HTTP/2 makes HTTP streaming and SSE (a standardized implementation of HTTP streaming) a viable alternative to WebSockets when you need realtime updates. With that said, WebSockets remain the most widely supported way to achieve full-duplex bi-directional communication in the browser.

Are WebSockets better than HTTP?

WebSockets are not inherently better than HTTP - rather, they serve different purposes. HTTP is a request-response protocol and is best-suited for traditional web applications where clients make occasional requests to servers for data and resources. On the other hand, WebSockets excel in realtime, bi-directional communication, making them ideal for applications that require constant data streaming and instant updates, such as live chat, online gaming, and collaborative tools.

Why not use WebSockets for everything?

HTTP is the standard protocol used by web browsers to request data and resources. When it comes to fetching a web page, for example, WebSockets would be unsuitable.

In theory, you could use WebSockets for API communication (instead of an HTTP REST API, for example). In practice, there aren’t any compelling reasons to do so, and the stateless nature of HTTP is better suited to these types of short-lived requests. Generally, you should assume your API is traditional HTTP by default, then upgrade to WebSockets for realtime features.
