How to stream Kafka messages to Internet-facing clients over WebSockets

Apache Kafka is one of the most powerful asynchronous messaging technologies out there. Designed in 2010 at LinkedIn by a team that included Jay Kreps, Jun Rao, and Neha Narkhede, Kafka was open-sourced in early 2011. Nowadays, the tool is used by a plethora of companies (including tech giants, such as Slack, Airbnb, or Netflix) to power their realtime data streaming pipelines.

Since Kafka is so popular, I was curious to see if you can use it to stream realtime data directly to end-users, over the Internet, and via WebSockets. After all, Kafka has a series of characteristics that seem to make it a noteworthy choice, such as:

- High throughput
- Low latency
- High concurrency
- Fault tolerance
- Durability (persistence)

Existing solutions for streaming Kafka messages to Internet-facing clients

I started researching to see what the realtime development community has to say about this use case. I soon discovered that Kafka was originally designed to be used inside a secure network for machine to machine communication. This made me think that you probably need to use some sort of middleware if you want to stream data from Kafka to Internet-facing users over WebSockets.

I continued researching, hoping to find open-source solutions that could act as the middleware. I discovered several that can, in theory, serve as an intermediary between Kafka and clients that connect to a stream of data over the Internet.

Unfortunately, all of the solutions I found are proofs of concept and nothing more. They have a limited feature set and aren’t production-ready (especially at scale).

I then looked to see how established tech companies are solving this Kafka use case; it seems that they are indeed using some kind of middleware. For example, Trello has developed a simplified version of the WebSocket protocol that only supports subscribe and unsubscribe commands. Another example is provided by Slack. The company has built a broker called Flannel, which is essentially an application-level caching service deployed to edge points of presence.

Of course, companies like Trello or Slack can afford to invest the required resources to build such solutions. However, developing your own middleware is not always a viable option — it’s a very complex undertaking that requires a lot of resources and time. Another option — the most convenient and common one — is to use established third-party solutions.

As we’ve seen, the general consensus seems to be that Kafka is not suitable for last mile delivery over the Internet by itself; you need to use it in combination with another component: an Internet-facing realtime messaging service.

Here at Ably, many of our customers are streaming Kafka messages via our pub/sub Internet-facing realtime messaging service. To demonstrate how simple it is, here’s an example of how data is consumed from Kafka and published to Ably:

```javascript
const kafka = require('kafka-node');
const Ably = require('ably');

// Replace the {{...}} placeholders with your own values.
const ably = new Ably.Realtime('{{ABLY_API_KEY}}');
const ablyChannel = ably.channels.get('kafka-example');

const Consumer = kafka.Consumer;
const client = new kafka.KafkaClient({ kafkaHost: '{{KAFKA_SERVER_URL}}' });
const consumer = new Consumer(
  client,
  [
    { topic: '{{KAFKA_TOPIC}}', partition: 0 }
  ]
);

// Forward every message consumed from Kafka to the Ably channel.
consumer.on('message', function (message) {
  ablyChannel.publish('kafka-event', message.value);
});
```

And here’s how clients connect to Ably to consume data:

```javascript
const Ably = require('ably');

// Replace the {{ABLY_KEY}} placeholder with your own key.
const ably = new Ably.Realtime('{{ABLY_KEY}}');
const channel = ably.channels.get('kafka-example');

// Log every message published to the channel.
channel.subscribe(function (message) {
  console.log('kafka message data: ', message.data);
});
```

You can decide to use any Internet-facing realtime messaging service between Kafka and client devices. However, regardless of your choice, you need to consider the entire spectrum of challenges this messaging service layer must be equipped to deal with.

Introducing the Ably Kafka Connector: we've made it easy for developers to extend Kafka to the edge reliably and safely.

Using Kafka and a messaging middleware: engineering and operational challenges

Before I get started, I must emphasize that the design pattern that is covered in this article involves using a WebSocket-based realtime messaging middleware between Kafka and your Internet-facing users.

It’s also worth mentioning that I’ve written this article based on the extensive collective knowledge that the Ably team possesses about the challenges of realtime data streaming at scale.

I’m now going to dive into the key things you need to think about, such as message fan-out, service availability, security, architecture, or managing back-pressure. The Internet-facing messaging service you choose to use between Kafka and end-users must be equipped to efficiently handle all these complexities if you are to build a scalable and reliable system.

Message routing

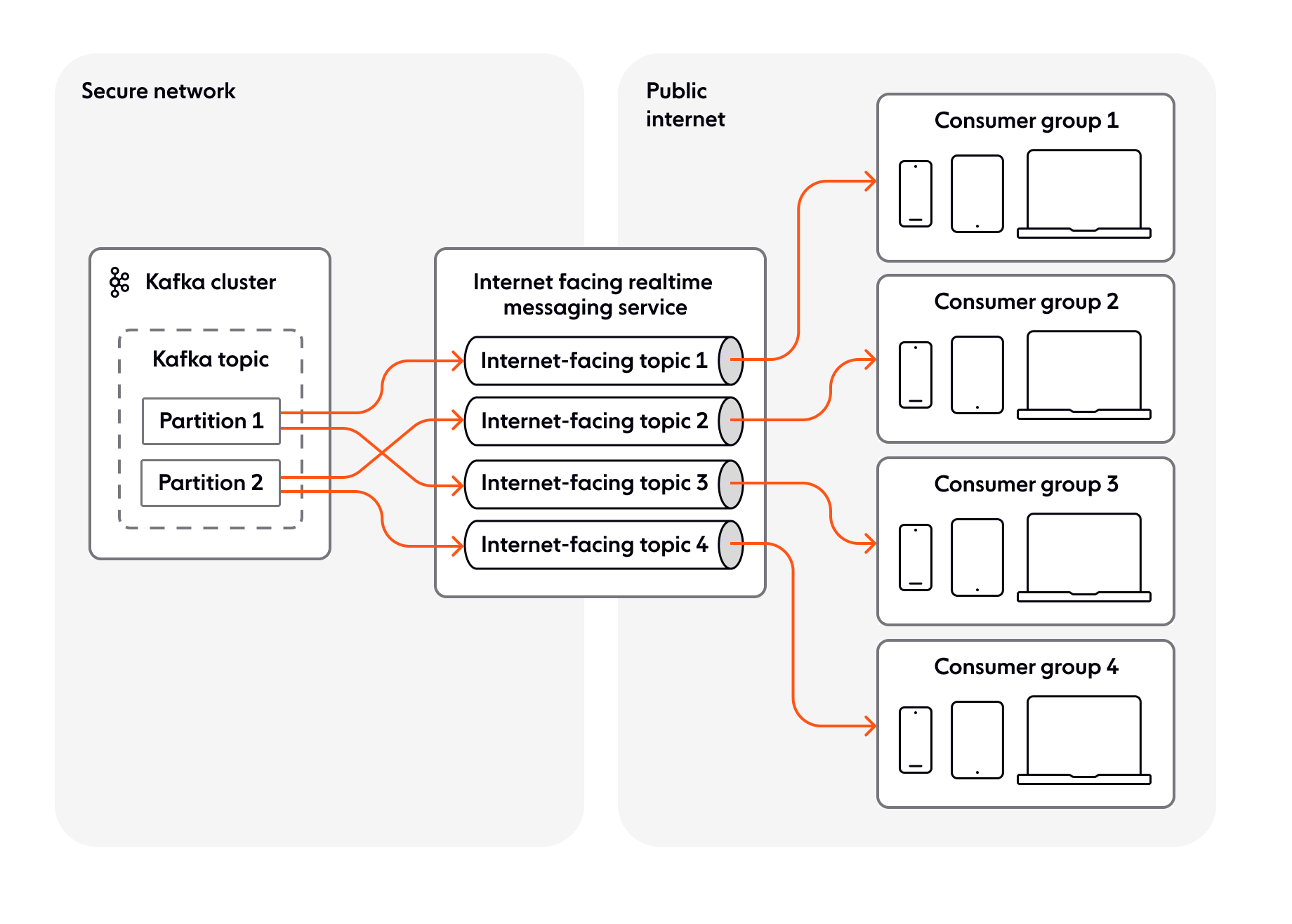

One of the key things you will have to consider is how to ensure that client devices only receive relevant messages. Most of the time, it’s not scalable to have a 1:1 mapping between clients and Kafka topics, so you will have topics that are shared across multiple devices.

For example, let’s say we have a credit card company that wants to stream a high volume of transaction information to its clients. The company uses a topic that is split (sharded) into multiple partitions to increase the total throughput of messages. In this scenario, Kafka provides ordering guarantees — transactions are ordered by partition.

However, when a client device connects via a browser to receive transaction information, it only wants and should only be allowed to receive transactions that are relevant for that user/device. But the client doesn’t know the exact partition it needs to receive information from, and Kafka doesn’t have a mechanism that can help with this.

To solve the problem, you need to use an Internet-facing realtime messaging service in the middle, between your Kafka layer and your end-users, as illustrated below.

The benefits of using this model:

- Flexible routing of messages from Kafka to Internet-facing topics.
- Ensures clients connecting over the Internet only subscribe to relevant topics.
- Enhanced security, because clients don’t have access to the secure network where your Kafka cluster is deployed; data is pushed from Kafka to the Internet-facing realtime messaging service; client devices interact with the latter, rather than pulling data directly from Kafka.
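To make the routing idea concrete, here’s a minimal sketch of the step the middleware performs. It assumes each record consumed from Kafka carries a `userId` field and that Internet-facing channels follow a `transactions:<userId>` naming scheme. Both the field and the naming scheme are hypothetical, chosen only for illustration.

```javascript
// Hypothetical routing helper: maps a record consumed from a shared Kafka
// topic to the per-user channel that the client is allowed to subscribe to.
function channelForTransaction(transaction) {
  if (!transaction || typeof transaction.userId !== 'string' || transaction.userId === '') {
    throw new Error('transaction is missing a userId');
  }
  return `transactions:${transaction.userId}`;
}

// Groups a batch of records by destination channel, so each channel
// receives only its own transactions, in the order they were consumed.
function routeBatch(transactions) {
  const byChannel = new Map();
  for (const tx of transactions) {
    const channel = channelForTransaction(tx);
    if (!byChannel.has(channel)) byChannel.set(channel, []);
    byChannel.get(channel).push(tx);
  }
  return byChannel;
}
```

With something like this in place, the client never needs to know which Kafka partition its data lives in; it only subscribes to its own channel.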

System security

One of the main reasons Kafka isn’t used for last-mile delivery relates to security and availability. To put it simply, you don’t want your data-processing component to be accessible directly over the Internet.

To protect the integrity of your data and the availability of your system, you need an Internet-facing realtime messaging service that can act as a security barrier between Kafka and the client devices it streams messages to. Since this messaging service is exposed to the Internet, it should sit outside the security perimeter of your network.

You should consider pushing data to the Internet-facing realtime messaging service instead of letting the service pull it from your Kafka layer. This way, in the event that the messaging service is compromised, the data in Kafka will still be secure. An Internet-facing realtime messaging service also helps ensure that you never mistakenly allow a client device to connect to your Kafka deployment or subscribe to a topic they shouldn’t have access to.

You would expect your Internet-facing realtime messaging service to have mechanisms in place that allow it to deal with system abuse, such as denial of service (DoS) attacks — even unintentional ones, which can be just as damaging as malicious attacks.

Let’s now look at a real-life situation of a DoS attack the team at Ably has had to deal with. Although it wasn’t malicious, it was a DoS attack nonetheless. One of our customers had an issue where a fault in the network led to tens of thousands of connections being dropped simultaneously. Due to a bug in the code, whenever there was a connection failure, the system tried to re-establish the WebSocket connection immediately, regardless of network conditions. This, in turn, led to thousands of client-side connection attempts every few seconds, which didn’t stop until the clients were able to reconnect to the Internet-facing realtime messaging service. Since Ably was the messaging service in the middle, it absorbed the spike in connections, while the underlying Kafka layer remained completely unaffected.
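A common client-side mitigation for this kind of reconnection storm is exponential backoff with jitter. The sketch below is illustrative only: the function name, the default delays, and the injectable `random` parameter are my own assumptions, not the customer’s code or the behavior of any particular SDK.

```javascript
// Returns how long a client should wait before reconnect attempt `attempt`
// (0-based). The delay ceiling doubles with every attempt, is capped at
// maxMs, and the actual delay is picked uniformly from [0, ceiling)
// so that thousands of clients don't all retry at the same instant.
function reconnectDelayMs(attempt, { baseMs = 500, maxMs = 30000, random = Math.random } = {}) {
  const exponential = baseMs * 2 ** attempt;
  const ceiling = Math.min(maxMs, exponential);
  return Math.floor(random() * ceiling);
}
```

A client would call `reconnectDelayMs(attempt)` after each failed connection attempt and reset `attempt` to 0 once it reconnects successfully.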

Discover how Ably enforces security

Data transformation

Often, the data you use internally in your streaming pipeline isn’t suitable for end-users. Depending on your use case, this can lead to performance or bandwidth-related issues for your customers, because you might end up streaming additional and redundant information to them with each message.

I’ll use an example to better demonstrate what I mean. At Ably, we enable our customers to connect to various data streams. One of these streams is called CTtransit GTFS-realtime (note that CTtransit is a Connecticut Department of Transportation bus service). It’s a free stream that uses publicly available bus data.

Now imagine you want to connect directly to CTtransit GTFS-realtime to stream data to an app that provides live bus updates to end-users, such as vehicle position or route changes. Each time there is an update (even if it’s for only one bus), the message sent by CTtransit might look something like this:

```json
[
  {
    "id": "1430",
    "vehicle": {
      "trip": {
        "tripId": "1278992",
        "startDate": "20200612",
        "routeId": "11417"
      },
      "position": {
        "latitude": 41.75381851196289,
        "longitude": -72.6827621459961
      },
      "timestamp": "1591959482",
      "vehicle": {
        "id": "2430",
        "label": "1430"
      }
    }
  },
  {
    "id": "1431",
    "vehicle": {
      "trip": {
        "tripId": "1278402",
        "startDate": "20200612",
        "routeId": "11413"
      },
      "position": {
        "latitude": 41.69612121582031,
        "longitude": -72.75396728515625
      },
      "timestamp": "1591960295",
      "vehicle": {
        "id": "2431",
        "label": "1431"
      }
    }
  },
  // Payload contains information for an additional 150+ buses
]
```

As you can see, the payload is huge and it covers multiple buses. However, most of the time, an end-user is interested in receiving updates for only one of those buses. Therefore, a relevant message for them would look like this:

```json
{
  "id": "1430",
  "vehicle": {
    "trip": {
      "tripId": "1278992",
      "startDate": "20200612",
      "routeId": "11417"
    },
    "position": {
      "latitude": 41.75381851196289,
      "longitude": -72.6827621459961
    },
    "timestamp": "1591959482",
    "vehicle": {
      "id": "2430",
      "label": "1430"
    }
  }
}
```

Let’s take it even further: a client may wish to only receive the new latitude and longitude values for a vehicle, and as such the payload above would be transformed to the following before it’s sent to the client device:

```json
{
  "id": "1430",
  "lat": 41.75381851196289,
  "long": -72.6827621459961
}
```

The point of this example is to demonstrate that if you wish to create optimal and low-latency experiences for end-users, you need to have a strategy around transforming data, so you can break it down (shard it) into smaller, faster, and more relevant sub-streams that are more suitable for last mile delivery.
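As a rough sketch, the transformation described above could look like the function below. It assumes the feed has already been parsed into an array shaped like the CTtransit example; the function name `toPositionUpdate` is hypothetical.

```javascript
// Extracts one bus from a feed shaped like the CTtransit example and
// reduces it to the fields the client needs.
// Returns null when the bus is not present in the feed.
function toPositionUpdate(feed, busId) {
  const entity = feed.find((e) => e.id === busId);
  if (!entity) return null;
  const { latitude, longitude } = entity.vehicle.position;
  return { id: entity.id, lat: latitude, long: longitude };
}
```

The middleware would run this once per subscribed bus and publish each result to its own sub-stream, so a client following a single bus never receives the other 150+ entries.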

On top of data transformation, and if it’s relevant for your use case, you could consider using message delta compression, a mechanism that enables you to send payloads containing only the difference (the delta) between the present message and the previous one you’ve sent. This reduction in size decreases bandwidth costs, improves latencies for end-users, and enables greater message throughput.
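To illustrate the idea, here’s a deliberately simplified, field-level sketch of a delta. Production delta compression typically diffs the encoded payload rather than individual fields, so treat this as an illustration of the principle, not of any real implementation. It only handles flat objects and does not represent removed fields; both function names are hypothetical.

```javascript
// Returns an object holding only the top-level fields of `next` whose
// values differ from `previous`.
function computeDelta(previous, next) {
  const delta = {};
  for (const [key, value] of Object.entries(next)) {
    if (previous[key] !== value) delta[key] = value;
  }
  return delta;
}

// Rebuilds the full message on the receiving side from the previous
// message and the delta.
function applyDelta(previous, delta) {
  return { ...previous, ...delta };
}
```

If a bus moves north but stays on the same longitude, only the `lat` field needs to travel over the wire; the client reconstructs the rest from the message it already has.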

Check out the Ably realtime deltas comparison demo to see just how much of a difference this makes in terms of message size. Note that the demo uses the American CTtransit data source, so if you happen to look at it at a time when there are no buses running (midnight to 6 am EST or early morning in Europe), you won’t see any data.

You’ve seen just how important it is to transform data and use delta compression from a low-latency perspective — payloads can become dozens of times smaller. Kafka offers some functionality around splitting data streams into smaller sub-streams, and it also allows you to compress messages for the purposes of more efficient storage and faster delivery. However, don’t forget that, as a whole, Kafka was not designed for last mile delivery over the Internet. You’re better off passing the operational complexities of data transformation and delta compression to an Internet-facing realtime messaging service that sits between Kafka and your clients.

Discover Ably’s message delta compression feature

Transport protocol interoperability

The landscape of transport protocols that you can use for your streaming pipeline is quite diverse. Your system will most likely need to support several protocols: aside from your primary one, you also need to have fallback options, such as XHR streaming, XHR polling, or JSONP polling. Let’s have a quick look at some of the most popular protocols:

- WebSocket. Provides full-duplex communication channels over a single TCP connection. Much lower overhead than half-duplex alternatives such as HTTP polling. Great choice for financial tickers, location-based apps, and chat solutions. Most portable realtime protocol, with widespread support. (For more info on scaling Kafka with WebSockets, check out our engineering blog piece.)
- MQTT. The go-to protocol for streaming data between devices with limited CPU power and/or battery life, and for networks with expensive/low bandwidth, unpredictable stability, or high latency. Great for IoT.
- SSE. Open, lightweight, subscribe-only protocol for event-driven data streams. Ideal for subscribing to data feeds, such as live sport updates.

On top of the raw protocols listed above, you can add application-level protocols. For example, in the case of WebSockets, you can choose to use solutions like Socket.IO or SockJS. Of course, you can also build your own custom protocol, but the scenarios where you actually have to are very rare. Designing a custom protocol is a complex process that takes a lot of time and effort. In most cases, you are better off using an existing and well-established solution.

Kafka’s binary protocol over TCP isn’t suitable for communication over the Internet and isn’t supported by browsers. Additionally, Kafka doesn’t have native support for other protocols. As a consequence, you need to use an Internet-facing realtime messaging service that can take data from Kafka, transform it, and push it to subscribers via your desired protocol(s).

Ably and protocol interoperability

Message fan-out

Regardless of the tech stack that you are using to build your data streaming pipeline, one thing you will have to consider is how to manage message fan-out (to be more specific, publishing a message that is received by a high number of users, a one-to-many relationship). Designing for scale dictates that you should use a model where the publisher pushes data to a component that any number of users can subscribe to. The most obvious choice available is the pub/sub pattern.

When you think about high fan-out, you should consider the elasticity of your system: both the number of client devices that can connect to it and the number of topics it can sustain. This is often where issues arise. Kafka was designed chiefly for machine-to-machine communication inside a network, where it streams data to a low number of subscribers. As a consequence, it’s not optimized to fan out messages to a high number of clients over the Internet.

However, with an Internet-facing realtime messaging service in the middle, the situation is entirely different. You can use the messaging service layer to offload the fanning out of messages to clients. If this layer has the capacity to deliver the fan-out messages, then it can deliver them with very low latency, and without you having to add capacity to your Kafka cluster.
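The pub/sub pattern behind fan-out can be sketched in a few lines. The in-memory `FanOutHub` class below is a hypothetical, single-process illustration of the idea; a real messaging service distributes this work across many servers.

```javascript
// Minimal in-memory pub/sub hub: one publish call is delivered to every
// subscriber of the channel, so the publisher's work does not grow with
// the size of the audience.
class FanOutHub {
  constructor() {
    this.subscribers = new Map(); // channel name -> Set of callbacks
  }

  subscribe(channel, callback) {
    if (!this.subscribers.has(channel)) this.subscribers.set(channel, new Set());
    this.subscribers.get(channel).add(callback);
    // Return a function the caller can use to unsubscribe.
    return () => this.subscribers.get(channel).delete(callback);
  }

  publish(channel, message) {
    const listeners = this.subscribers.get(channel) || new Set();
    for (const listener of listeners) listener(message);
    return listeners.size; // number of deliveries made
  }
}
```

The Kafka consumer calls `publish` once per message; how many clients receive it is entirely the hub’s concern.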

Using Ably at scale

Server elasticity

You need to consider the elasticity of your Kafka layer. System-breaking complications can arise when you expose an inelastic streaming server to the Internet, which is a volatile and unpredictable source of traffic.

Your Kafka layer needs to have the capacity to deal with the volume of Internet traffic at all times. For example, if you’re developing a multiplayer game, and you have a spike in usage that is triggered by actions from tens of thousands of concurrent users, the increased load can propagate to your Kafka cluster, which needs to have the resources to handle it.

It’s true that most streaming servers are elastic, but not dynamically elastic. This is not ideal, as there is no way you can boost Kafka server capacity quickly (in minutes as opposed to hours). What you can do is plan and provision capacity ahead of time, and hope it’s enough to deal with traffic spikes. But there are no guarantees your Kafka layer won’t get overloaded.

Internet-facing realtime messaging services are often better equipped to provide dynamic elasticity. They don’t come without challenges of their own, but you can offload the elasticity problem to the messaging service, protecting your Kafka cluster when there’s a spike of Internet traffic.

Let’s look at a real-life example. A while back, Ably had the pleasure of helping Tennis Australia stream realtime score updates to fans who were browsing the Australian Open website. We had initially load tested the system for 1 million connections per minute. Once we went into production, we discovered that the connections were churning every 15 seconds or so. As a consequence, we actually had to deal with 4 million new connections per minute (each connection was being re-established roughly four times a minute), an entirely different problem in terms of magnitude. If Tennis Australia hadn’t used Ably as an elastic Internet-facing realtime messaging service in the middle, their underlying server layer (Kafka or otherwise) would have been adversely affected. Ably absorbed the load entirely, while the amount of work Tennis Australia had to do stayed the same: they only had to publish one message whenever a rally was completed.

Another thing you’ll have to consider is how to handle connection re-establishment. When a client reconnects, the stream of data must resume where it left off. But which component is responsible for keeping track of this? Is it the Internet-facing realtime messaging service, Kafka, or the client? There’s no right or wrong answer — any of the three can be assigned that responsibility. However, you need to carefully analyze your requirements and consider that if every stream requires data to be stored, you will need to scale storage proportionally to the number of connections.
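Here’s a minimal sketch of what resuming a stream can look like when the messaging layer is given that responsibility. The `ResumableStream` class, its serial numbering, and the fixed-size buffer are assumptions made for illustration; they also show why storage grows with the number of streams you retain.

```javascript
// Keeps the most recent `capacity` messages of a stream, each tagged with a
// monotonically increasing serial, so a reconnecting client can ask for
// everything after the last serial it saw.
class ResumableStream {
  constructor(capacity = 100) {
    this.capacity = capacity;
    this.nextSerial = 1;
    this.buffer = []; // [{ serial, data }], oldest first
  }

  append(data) {
    const entry = { serial: this.nextSerial++, data };
    this.buffer.push(entry);
    if (this.buffer.length > this.capacity) this.buffer.shift();
    return entry.serial;
  }

  // Returns { complete, messages }. `complete` is false when some missed
  // messages have already been evicted, so the client needs a full refresh.
  resumeFrom(lastSeenSerial) {
    const oldest = this.buffer.length ? this.buffer[0].serial : this.nextSerial;
    const complete = lastSeenSerial + 1 >= oldest;
    const messages = this.buffer.filter((m) => m.serial > lastSeenSerial);
    return { complete, messages };
  }
}
```

On reconnection, the client sends the last serial it processed and receives only what it missed, or a signal that too much time has passed and it should reload its state.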

The Ably network provides dynamic elasticity

Globally-distributed architecture

To obtain a low-latency data streaming system, the Internet-facing realtime messaging service you are using must be geographically located in the same region as your Kafka deployment. But that’s not enough. The client devices you send messages to should also be in the same region. For example, you don’t want to stream data from Australia to end-users in Australia via a system that is deployed on the other side of the world.

If you want to provide low latency from source through to end-users when the sender and receivers are in different parts of the world, you need to think about a globally-distributed architecture. Edge delivery enables you to bring computational processing of messages close to clients.

Another benefit of having a globally-distributed Internet-facing realtime messaging layer is that if your Kafka server fails due to a restart or an incompatible upgrade, the realtime messaging service will keep the client connections alive, so streaming can quickly resume once the Kafka layer is operational again. In other words, an isolated Kafka failure would have no direct impact on the clients that are subscribed to the data stream. This is one of the main advantages of distributed systems: components fail independently and don’t cause cascading failures.

On the other hand, if you don’t use an Internet-facing realtime edge messaging layer, a Kafka failure would be much harder to manage. It would cause all connections to terminate. When that happens, clients would try to reconnect immediately, which would add more load to any other existing Kafka nodes in the system. The nodes could become overloaded, which would cause cascading failures.

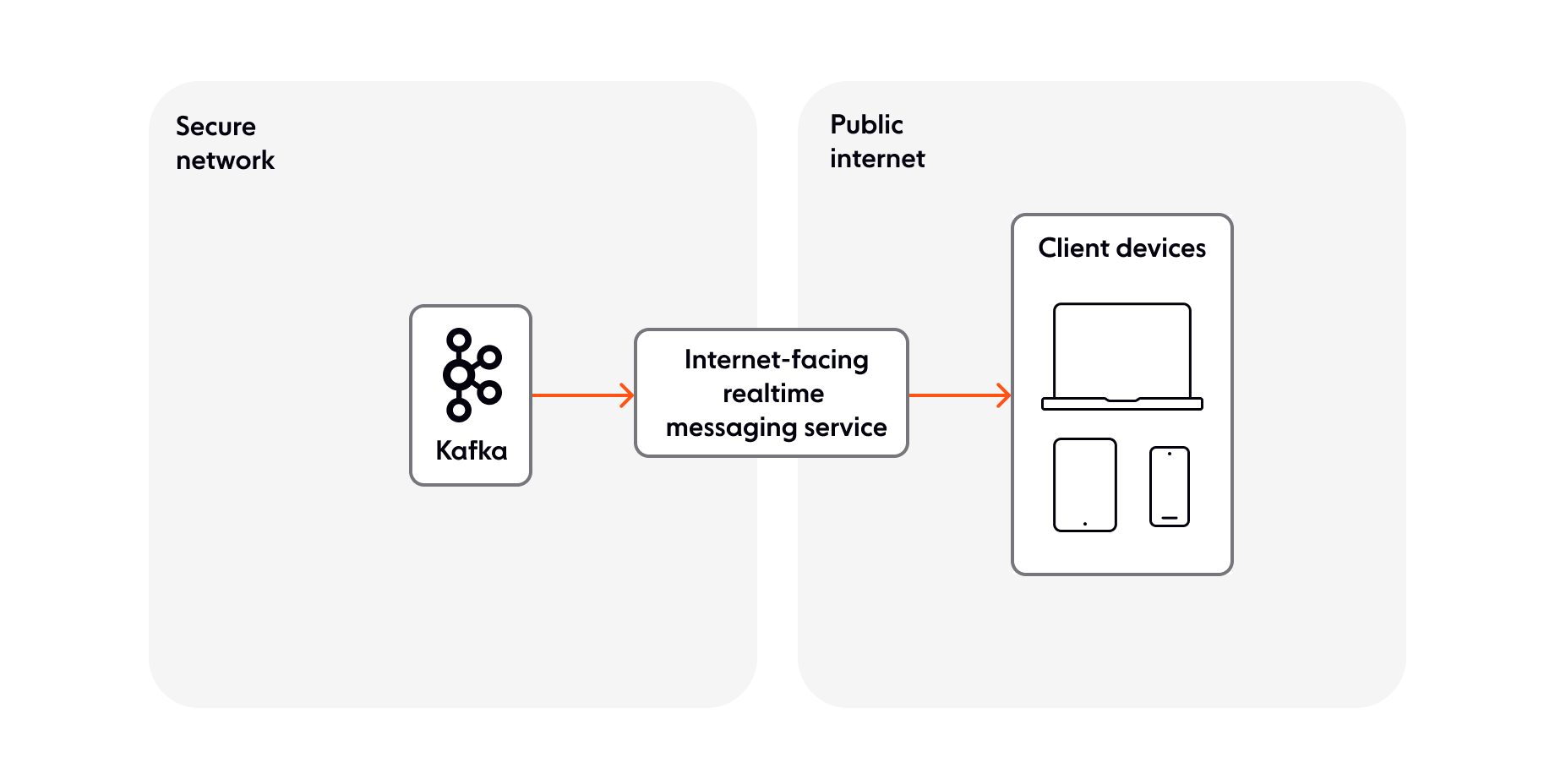

Let’s look at some common globally-distributed architecture models you can use. In the first model, Kafka is deployed inside a secure network and pushes data to the Internet-facing realtime messaging service. The messaging service sits on the edge of the secure network, being exposed to the Internet.

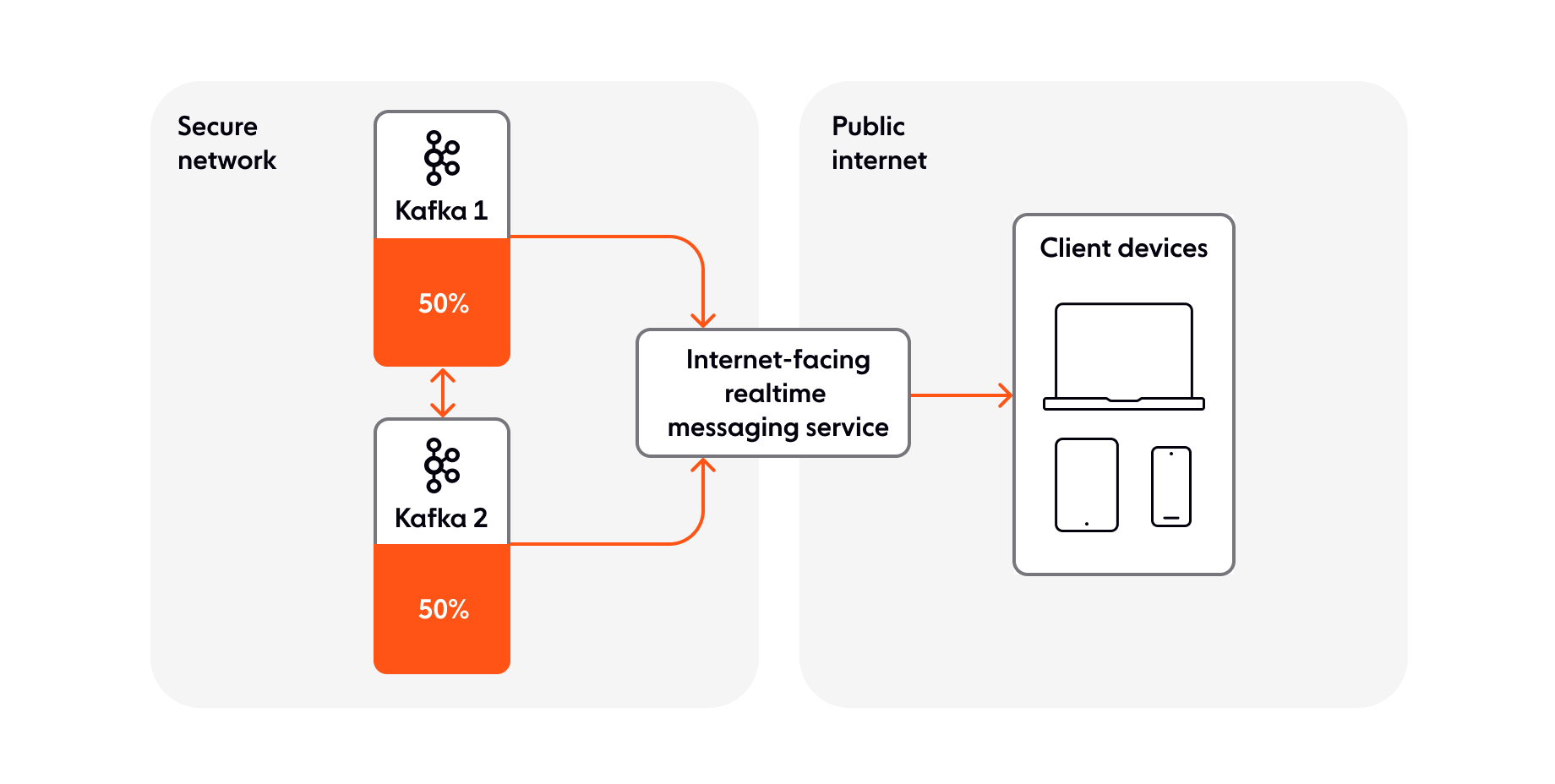

A second model you can use involves one Internet-facing realtime messaging service and two instances of Kafka, primary and backup/fallback. Since the messaging service is decoupled, it doesn’t know (nor does it care) which of the two Kafka instances is feeding it data. This model is a failover design that adds a layer of reliability to your Kafka setup: if one of the Kafka instances fails, the second one will take its place.

A third model, which is quite popular with Ably customers, is an active-active approach. It requires two Kafka clusters running at the same time, independently, to share the load. Both clusters operate at 50% capacity and use the same Internet-facing realtime messaging service. This model is especially useful in scenarios where you need to stream messages to a high number of client devices. Should one of the Kafka clusters fail, the other one can pick up the failed cluster’s 50% share of the load, to keep your system running.

Managing back-pressure

When streaming data to client devices over the Internet, back-pressure is one of the key issues you will have to deal with. For example, let’s assume you are streaming 25 messages per second, but a client can only handle 10 messages per second. What do you do with the remaining 15 messages per second that the client is unable to consume?

Since Kafka was designed for machine to machine communication, it doesn’t provide you with a good mechanism to manage back-pressure over the Internet. However, if you use an Internet-facing realtime messaging service between Kafka and your clients, you may be better equipped to deal with this issue.

Even with a messaging service in the middle, you still need to decide what is more important for your streaming pipeline: low latency or data integrity? They are not mutually exclusive, but choosing one will affect the other to a certain degree.

For example, let’s assume you have a trading app that is used by brokers and traders. In our first use case, the end-users are interested in receiving currency updates as quickly as possible. In this context, low latency should be your focus, while data integrity is of lower importance.

To achieve low latency, you can use back-pressure control, which monitors the buffers building up on the sockets used to stream data to client devices. This packet-level mechanism ensures that buffers don’t grow beyond what the downstream connection can sustain. You can also bake in conflation, which essentially allows you to aggregate multiple messages into a single one. This way, you can control downstream message rates. Additionally, conflation can be successfully used in unreliable network conditions to ensure upon reconnection that the latest state is an aggregate of recent messages.
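Conflation can be sketched as “keep only the latest message per key within a time window”. In the example below, the function name `conflateByKey` and the currency-pair message shape are hypothetical.

```javascript
// Conflates a window of messages: for each key, only the most recent
// message is kept. Results are returned in the order in which each key
// first appeared in the window.
function conflateByKey(messages, keyOf) {
  const latest = new Map();
  for (const message of messages) latest.set(keyOf(message), message);
  return [...latest.values()];
}
```

A slow client that can’t keep up with every tick would then receive one up-to-date message per currency pair for each window, instead of the full backlog.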

If you’d rather deal with back-pressure at application level, you can rely on ACKs from clients that are subscribed to your data stream. With this approach, your system would hold off sending additional batches of messages until it has received acknowledgement codes.
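As a sketch of ACK-based flow control, the hypothetical `AckWindowSender` class below never allows more than `windowSize` unacknowledged messages in flight. The class name, the message ids, and the `send` callback are assumptions made for illustration.

```javascript
// Sends queued messages through `send`, but never has more than
// `windowSize` messages awaiting acknowledgement at once.
class AckWindowSender {
  constructor(send, windowSize = 2) {
    this.send = send;
    this.windowSize = windowSize;
    this.queue = []; // messages waiting to be sent
    this.inFlight = new Set(); // ids sent but not yet acknowledged
    this.nextId = 1;
  }

  enqueue(payload) {
    this.queue.push({ id: this.nextId++, payload });
    this.flush();
  }

  // Called when the client acknowledges message `id`.
  // Unknown or repeated ids are ignored.
  ack(id) {
    if (this.inFlight.delete(id)) this.flush();
  }

  // Sends as many queued messages as the window currently allows.
  flush() {
    while (this.inFlight.size < this.windowSize && this.queue.length > 0) {
      const message = this.queue.shift();
      this.inFlight.add(message.id);
      this.send(message);
    }
  }
}
```

A slow client simply acknowledges more slowly, and the sender’s output rate drops to match it.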

Now let’s go back to our trading app. In our second use case, the end-users are interested in viewing their transaction histories. In this scenario, data integrity trumps latency, because users need to see their complete transaction records. To manage back-pressure, you can resort to ACKs, which we have already mentioned.

To ensure integrity, you may need to consider how to deal with exactly-once delivery. For example, you may want to use idempotent publishing over persistent connections. In a nutshell, idempotent publishing means that published messages are only processed once, even if client or connectivity failures cause a publish to be reattempted. So how does it work in practice? Well, if a client device makes a request to buy shares, and the request is successful, but the client times out, the client could try the same request again. Idempotency prevents the client from getting charged twice.
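One way to picture idempotent publishing is a receiver that remembers the ids of messages it has already processed. The `IdempotentReceiver` class below is a hypothetical, in-memory sketch; it assumes the publisher attaches a unique `id` to each message and reuses that id on retries, and a real system would also need to expire old ids.

```javascript
// Processes each message at most once per id, even if the publisher retries.
class IdempotentReceiver {
  constructor(handler) {
    this.handler = handler;
    this.results = new Map(); // message id -> result of the first attempt
  }

  receive(message) {
    if (this.results.has(message.id)) {
      // Retry of a message we already processed: return the stored result
      // instead of running the handler again.
      return { duplicate: true, result: this.results.get(message.id) };
    }
    const result = this.handler(message);
    this.results.set(message.id, result);
    return { duplicate: false, result };
  }
}
```

In the share-buying scenario, the retried request carries the same id as the original, so the handler that charges the client runs only once.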

Find out how Ably achieves idempotency

Conclusion: Should you use Kafka to stream data directly to client devices over the Internet?

Kafka is a great tool for what it was designed to do — machine to machine communication. You can and should use it as a component of your data streaming pipeline. But it is not meant for streaming data directly to client devices over the Internet, and intermediary Internet-facing realtime messaging solutions are designed and optimized precisely to take on that responsibility.

Hopefully, this article will help you focus on the right things you need to consider when building a streaming pipeline that uses Kafka and an Internet-facing realtime messaging service that supports WebSockets. It doesn’t really matter if you plan on developing such a service yourself, or if you wish to use existing tech — the scalability and operational challenges you’ll face are the same.

But let’s not stop here. If you want to talk more about this topic or if you'd like to find out more about Ably and how we can help you in your Kafka and WebSockets journey, get in touch or sign up for a free account.

We’ve written a lot over the years about realtime messaging and building effective data streaming pipelines. Here are some useful links, for further exploration:

Confluent Blog: Building a Dependable Real-Time Betting App with Confluent Cloud and Ably

The WebSocket Handbook: learn about the technology behind the realtime web

Ably Kafka Connector: extend Kafka to the edge reliably and safely

Building a realtime ticket booking solution with Kafka, FastAPI, and Ably
