Socket.IO is a powerful library for enabling realtime bidirectional communication in web applications. But what happens when your app goes from 100 to 100,000 users — or more?

If you’re running a self-hosted Socket.IO deployment, you’ve likely hit one or more of these pain points:

Dropped or slow connections at high user volumes

Challenges with Socket.IO sticky sessions and state management

Difficulty scaling beyond a single server instance

Complicated infrastructure for Socket.IO horizontal scaling

This guide walks through these scaling challenges, explains how to mitigate them, and looks at when it might be time to consider an alternative.

What makes it difficult to scale Socket.IO?

There are many hard engineering challenges to overcome and aspects to consider if you plan to build a scalable, production-ready system with Socket.IO. Here are some key things you need to be aware of and address:

Complex architecture and infrastructure. Things are much simpler when you have only one Socket.IO server to deal with. However, when you scale beyond a single server, you have an entire cluster to manage and optimize, plus a load balancing layer, plus Redis (or an equivalent sync mechanism). You need to ensure all these components are working and interacting in a dependable way.

High availability and elasticity. Suppose you are streaming live sports updates, and it’s the World Cup final or the Super Bowl: how do you ensure your system can handle tens of thousands (or even millions!) of users simultaneously connecting and consuming messages at a high frequency? You need to be able to quickly (and automatically) add more servers so your system stays highly available and has enough capacity to deal with usage spikes and fluctuating demand.

Fault tolerance. What can you do to ensure fault tolerance and redundancy? Socket.IO is single-region by design, so that’s a limitation from the start.

Managing connections and messages. There are many things to consider and decide: how are you going to terminate (shed) and restore connections? How will you manage backpressure? What’s the best load balancing strategy for your specific use case? How frequently should you send heartbeats? How are you going to monitor connections and traffic?

Successfully dealing with all of the above and maintaining a scalable, production-ready system built with Socket.IO is far from trivial.
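To make one of the questions above more concrete, here is a minimal sketch of one possible backpressure policy: a bounded per-client outbound buffer that evicts the oldest message when a slow client falls behind. The `BoundedOutbox` class and its capacity are hypothetical names chosen for illustration; this is not part of the Socket.IO API, and dropping the oldest message is only one of several reasonable policies.

```typescript
// Hypothetical bounded outbound buffer for a single client connection.
// When the client cannot keep up, the oldest pending messages are evicted
// so that memory use per connection stays capped.
class BoundedOutbox<T> {
  private readonly queue: T[] = [];
  private readonly capacity: number;
  private droppedCount = 0;

  constructor(capacity: number) {
    if (!Number.isInteger(capacity) || capacity <= 0) {
      throw new RangeError("capacity must be a positive integer");
    }
    this.capacity = capacity;
  }

  // Enqueue a message; evict the oldest one if the buffer is already full.
  push(message: T): void {
    if (this.queue.length >= this.capacity) {
      this.queue.shift();
      this.droppedCount += 1;
    }
    this.queue.push(message);
  }

  // Remove and return up to `max` messages, oldest first.
  drain(max: number): T[] {
    return this.queue.splice(0, max);
  }

  get pending(): number {
    return this.queue.length;
  }

  get dropped(): number {
    return this.droppedCount;
  }
}

// Usage: a client that can only hold 3 pending score updates.
const outbox = new BoundedOutbox<string>(3);
for (const score of ["1-0", "1-1", "2-1", "3-1"]) {
  outbox.push(score);
}
const flushed = outbox.drain(2); // "1-0" was evicted before this drain
```

Dropping the oldest update suits feeds where only the latest state matters, such as live scores. For chat or transactional messages you would more likely disconnect the slow client or persist the backlog instead.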

Understanding the scaling limits of Socket.IO

As mentioned, scaling Socket.IO isn’t just about adding more servers. The architecture imposes key limitations that teams must confront head-on: connection bottlenecks, complex load balancing with sticky sessions, and coordination overhead between nodes. These challenges can degrade performance, increase operational complexity, and introduce reliability risks — all at a time when your application needs to perform flawlessly.

In the sections below, we’ll walk through the specific technical ceilings you’ll encounter, why they arise, and how teams typically attempt to overcome them.

Socket.IO max connections

Even with a performant WebSocket-based stack, Socket.IO servers hit scaling ceilings quickly. In most Node.js environments, practical limits are often in the range of 10,000–30,000 concurrent connections per instance. Beyond that, the combination of open file descriptors, event loop contention, memory pressure, and garbage collection starts to degrade performance — often in unpredictable ways.

Teams typically respond by:

Tuning OS-level connection limits (ulimit, TCP backlog, etc.)

Offloading CPU-bound work to background services

Splitting workloads across multiple nodes behind a load balancer

While these steps can help, they merely postpone the inevitable: at scale, you must design for horizontal distribution.
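As a rough planning aid, the arithmetic behind horizontal distribution can be sketched as follows. The function name, the 20,000-connection ceiling, and the 25% headroom are assumptions for illustration only; measure the real per-instance limit for your own workload before relying on any such number.

```typescript
// Estimate how many instances are needed for a target number of connections.
// `perInstanceLimit` is the measured ceiling for one instance (assumed here);
// `headroom` is the fraction of that ceiling kept free for spikes and failover.
function instancesNeeded(
  targetConnections: number,
  perInstanceLimit: number,
  headroom: number
): number {
  if (targetConnections < 0 || perInstanceLimit <= 0 || headroom < 0 || headroom >= 1) {
    throw new RangeError("invalid capacity parameters");
  }
  const usablePerInstance = Math.floor(perInstanceLimit * (1 - headroom));
  if (usablePerInstance < 1) {
    throw new RangeError("headroom leaves no usable capacity");
  }
  return Math.ceil(targetConnections / usablePerInstance);
}

// 100,000 users, 20,000 connections per instance, 25% headroom:
// each instance is planned for 15,000 connections.
const planned = instancesNeeded(100000, 20000, 0.25);
```

With these assumed inputs the estimate is 7 instances, before accounting for the load balancer and the sync layer that a multi-node deployment also needs.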

Sticky sessions in Socket.IO

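Sticky sessions are a direct byproduct of Socket.IO’s architecture. A Socket.IO session lives in the memory of the server that created it, and the HTTP long-polling transport sends several requests over the lifetime of that session, so every request must reach the same server instance. Connection state (like subscriptions or user context) is usually stored in memory as well, so a client that is routed to a different server loses that context.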

To enforce this, teams configure session affinity at the load balancer layer (IP-based or cookie-based). But this adds architectural rigidity:

It reduces the benefits of elastic autoscaling

It increases blast radius — if a server crashes, all connected clients lose state

It couples clients to infrastructure, making resilience and flexibility harder

The bigger your deployment, the more fragile this becomes. As traffic scales, many teams are forced to implement external state storage (e.g. Redis), introducing further latency and failure points.
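The rigidity described above can be illustrated with a minimal sketch of IP-based affinity. The hash-and-modulo scheme, the server names, and the example IP addresses are assumptions for illustration; real load balancers implement their own affinity algorithms, which may differ from this one.

```typescript
// FNV-1a 32-bit hash: a small, deterministic string hash.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Pick a backend for a client by hashing its IP address (IP-based affinity).
function pickServer(clientIp: string, servers: readonly string[]): string {
  if (servers.length === 0) {
    throw new Error("no servers available");
  }
  return servers[fnv1a(clientIp) % servers.length];
}

const servers = ["socket-1", "socket-2", "socket-3"];
const clients = ["203.0.113.7", "198.51.100.23", "192.0.2.41", "203.0.113.99"];

// While the pool is unchanged, each client always lands on the same server.
const before = clients.map((ip) => pickServer(ip, servers));

// Adding an instance changes the modulus, so some clients may be routed to a
// server that holds none of their in-memory state.
const after = clients.map((ip) => pickServer(ip, [...servers, "socket-4"]));
const moved = clients.filter((_, i) => before[i] !== after[i]);
```

Any client that ends up in `moved` would have to reconnect and rebuild its state on the new server, which is why autoscaling and naive affinity work against each other.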

Horizontal scaling Socket.IO

For small applications or internal tools, a single Socket.IO server may suffice. But as your user base grows — particularly if you're supporting thousands of concurrent users, multiple geographic regions, or realtime collaboration at scale — a single server quickly becomes a bottleneck.

Horizontal scaling, on the other hand, enables you to:

Increase capacity: More instances = more simultaneous connections served.

Improve reliability: Isolates failure domains — one crashed server doesn’t bring down the whole system.

Support elastic scaling: Dynamically add or remove instances based on traffic load.

Serve global traffic: Deploy instances closer to users to reduce latency.

How Socket.IO scales horizontally

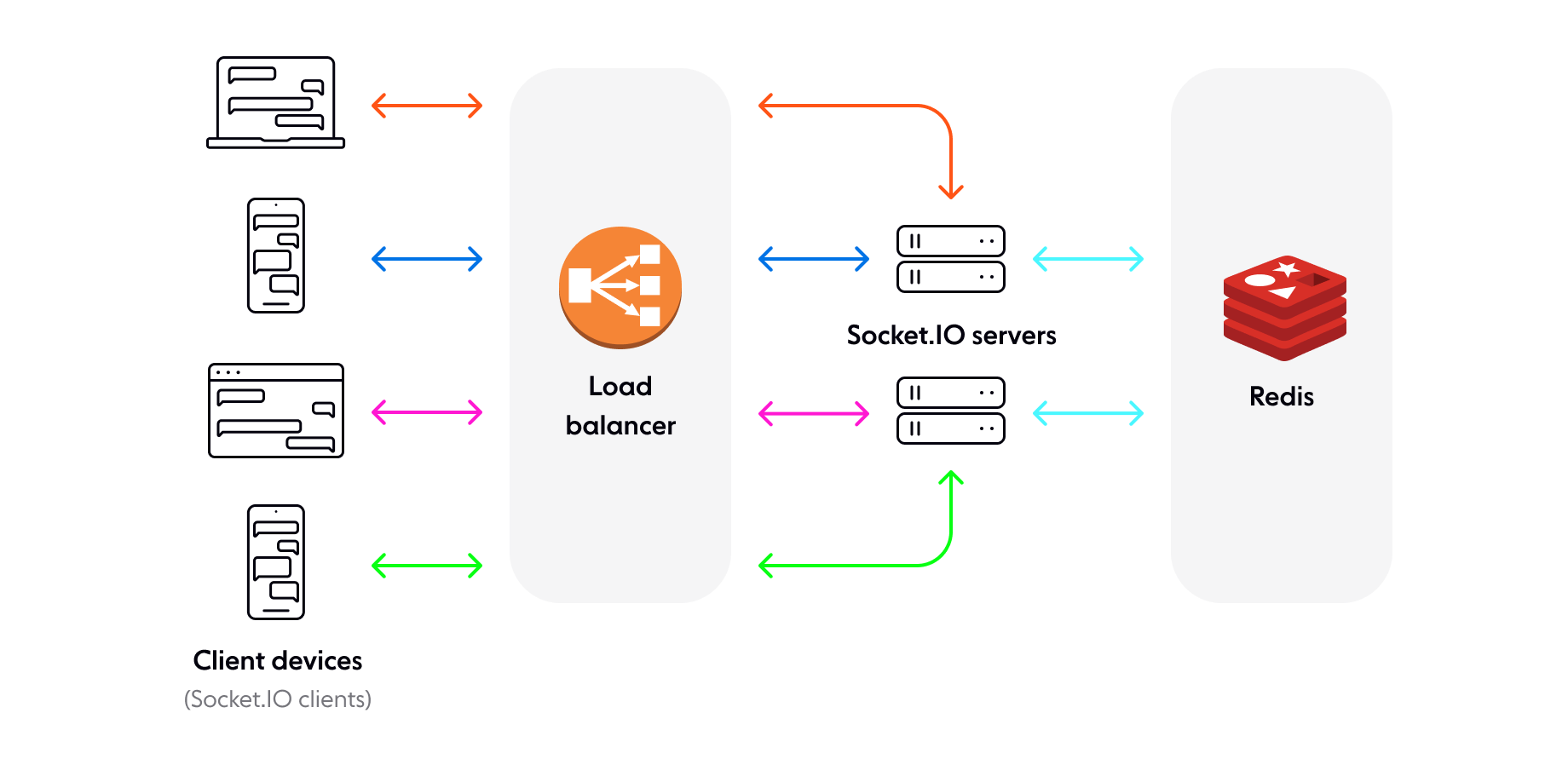

Scaling Socket.IO horizontally means you will need to add a few new components to your system architecture:

A load balancing layer. Fortunately, popular load-balancing solutions such as HAProxy, Traefik, and NginX all support Socket.IO.

A mechanism to pass events between your servers. Socket.IO servers don’t communicate between them, so you need a way to route events to all clients, even if they are connected to different servers. This is made possible by using adapters, of which the Redis adapter seems to be the most popular choice.

Below is a basic example of a potential architecture:

Scaling Socket.IO horizontally with Redis

The load balancer handles incoming Socket.IO connections and distributes the load across multiple nodes. By making use of the Redis adapter, which relies on the Redis Pub/Sub mechanism, Socket.IO servers can send messages to a Redis channel. All other Socket.IO nodes are subscribed to the respective channel to receive published messages and forward them to relevant clients.

For example, let’s say we have chat users Bob and Alice. They are connected to different Socket.IO servers (Bob to server 1, and Alice to server 2). Without Redis as a sync mechanism, if Bob were to send Alice a message, that message would never reach Alice, because server 2 would be unaware that Bob has sent a message to server 1.

This setup allows room-level messaging across servers, but it doesn’t unify connection state or eliminate the need for sticky sessions.
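The Bob and Alice scenario can be modelled with a small in-memory sketch. The `Channel` and `ServerNode` classes below are hypothetical stand-ins for a Redis Pub/Sub channel and a Socket.IO server; they are not the Redis adapter’s API, and they simplify its behaviour (for example, the sender also receives its own room broadcast here).

```typescript
type Handler = (room: string, message: string) => void;

// In-memory stand-in for a Redis Pub/Sub channel (hypothetical, for illustration).
class Channel {
  private readonly handlers: Handler[] = [];

  subscribe(handler: Handler): void {
    this.handlers.push(handler);
  }

  publish(room: string, message: string): void {
    this.handlers.forEach((handler) => handler(room, message));
  }
}

// A toy server node that only knows about its own locally connected clients.
class ServerNode {
  // room name -> client name -> that client's inbox
  private readonly rooms = new Map<string, Map<string, string[]>>();
  private readonly channel?: Channel;

  constructor(channel?: Channel) {
    this.channel = channel;
    if (channel) {
      channel.subscribe((room, message) => this.deliverLocally(room, message));
    }
  }

  // Register a client in a room and return its inbox.
  join(room: string, client: string): string[] {
    const inbox: string[] = [];
    let members = this.rooms.get(room);
    if (!members) {
      members = new Map<string, string[]>();
      this.rooms.set(room, members);
    }
    members.set(client, inbox);
    return inbox;
  }

  // With a shared channel, publish so that every subscribed node delivers to
  // its own clients. Without one, only this node's clients get the message.
  broadcast(room: string, message: string): void {
    if (this.channel) {
      this.channel.publish(room, message);
    } else {
      this.deliverLocally(room, message);
    }
  }

  private deliverLocally(room: string, message: string): void {
    const members = this.rooms.get(room);
    if (!members) return;
    members.forEach((inbox) => inbox.push(message));
  }
}

// With a shared channel: Bob is on server 1, Alice is on server 2.
const channel = new Channel();
const server1 = new ServerNode(channel);
const server2 = new ServerNode(channel);
server1.join("chat", "bob");
const aliceInbox = server2.join("chat", "alice");
server1.broadcast("chat", "hi Alice");

// Without a sync mechanism: server 2 never hears about Bob's message.
const isolated1 = new ServerNode();
const isolated2 = new ServerNode();
isolated1.join("chat", "bob");
const isolatedAliceInbox = isolated2.join("chat", "alice");
isolated1.broadcast("chat", "hi Alice");
```

In the first case Alice’s inbox receives the message because server 2 is subscribed to the shared channel; in the second it stays empty. Note that each node’s `rooms` map is still local, which is the part of the state that the pub/sub layer does not unify.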

Challenges and trade-offs of horizontal scaling with Socket.IO

Even with horizontal scaling in place:

Redis adds latency and becomes a single point of failure

Connection state remains local to each instance

You still need sticky sessions to preserve client-server affinity

Failure recovery and state synchronization become your responsibility

In other words, Socket.IO supports horizontal scaling in principle, but not natively or seamlessly. You’ll need to invest in building out the infrastructure and coordination logic yourself. That can be justified if your use case is tightly specialized, but it becomes increasingly inefficient at larger scale.

Socket.IO scaling strategies and trade-offs

There’s no single path to scaling Socket.IO — each option comes with trade-offs in complexity, cost, and operational risk. Here’s a deeper look at the main strategies, and how they stack up in real-world deployments:

| Strategy | Overview | Strengths | Limitations |
|---|---|---|---|
| Vertical scaling | Scale up a single server to handle more connections | Simple setup; no distributed coordination | Hard performance ceiling; single point of failure |
| Sticky sessions + load balancer | Use IP or cookie affinity to tie clients to the same server instance | Maintains in-memory state; familiar approach | Fragile under failure; limits autoscaling flexibility |
| Redis pub/sub adapter | Use the Redis adapter (@socket.io/redis-adapter, formerly socket.io-redis) to broadcast events and room messages across instances | Enables horizontal scale; widely supported | Redis bottleneck; session affinity still required |
| External state store (e.g. Redis) | Offload session/presence data outside the app layer | Resilient to node failure; enables stateless scaling | Adds latency; requires consistency logic |
| Custom broker adapter | Use Kafka, NATS, or RabbitMQ for scalable pub/sub messaging | High throughput; durable messaging options | Integration complexity; no first-party support in Socket.IO |
| Kubernetes + sticky sessions | Deploy in pods with sessionAffinity to preserve client-server linkage | Scalable with infra-as-code; familiar to DevOps teams | Doesn’t eliminate stickiness issues; harder to test at scale |
| Serverless WebSocket (e.g. API GW) | Use cloud-native services to manage socket connections | Low maintenance; “infinite” scale potential | Limited connection state; cold starts; vendor lock-in |

Who uses Socket.IO at scale?

There are plenty of small-scale and demo projects built with Socket.IO covering use cases such as chat, multi-user collaboration (e.g., whiteboards), multiplayer games, fitness & health apps, planning apps, and edTech/e-learning.

However, there is limited evidence that organizations are building large-scale, production-ready systems with Socket.IO.

Trello used Socket.IO in the early days. To be more exact, Trello had to use a modified version of the Socket.IO client and server libraries, because they came across some issues when using the official implementation:

"The Socket.IO server currently has some problems with scaling up to more than 10K simultaneous client connections when using multiple processes and the Redis store, and the client has some issues that can cause it to open multiple connections to the same server, or not know that its connection has been severed."

Even using the modified version of Socket.IO had some hiccups:

"We hit a problem right after launch. Our WebSocket server implementation started behaving very strangely under the sudden and heavy real-world usage of launching at TechCrunch disrupt, and we were glad to be able to revert to plain polling..."

After a while, Trello replaced Socket.IO with another custom implementation that allowed them to use WebSockets at scale:

"At peak, we’ll have nearly 400,000 of them open. The bulk of the work is done by the super-fast ws node module. [...] We have a (small) message protocol built on JSON for talking with our clients - they tell us which messages they want to hear about, and we send ‘em on down."

In addition to Trello, Disney+ Hotstar considered using Socket.IO to build a social feed for their mobile app. Socket.IO and NATS were assessed as options, but disqualified in favor of an MQTT broker.

Beyond the examples above, we couldn’t find proof of any other organization currently building scalable, production-ready systems with Socket.IO. Perhaps they’re trying to avoid the complexity of having to scale Socket.IO themselves (or maybe they’re just not writing about their experiences).

When scaling Socket.IO becomes too costly or complex

At some point, your team’s effort to scale Socket.IO may start to outweigh the benefits of maintaining control. Common signs it’s time to rethink:

Uptime incidents due to scale

Constant firefighting to maintain sticky session logic

High infrastructure or DevOps costs

Lack of time to focus on your product

This is where managed realtime platforms like Ably come in.

Ably is a globally distributed realtime platform built for elastic scale — with built-in support for connection state, message delivery guarantees, and edge acceleration.

Key advantages:

Global scalability out of the box

No sticky sessions required

Built-in pub/sub, presence, message ordering

99.999% uptime SLA

SDKs that support WebSocket, MQTT, and even Socket.IO protocols

How to scale with confidence

The reality is that although Socket.IO provides a great starting point, scaling it requires heavy investment.

If you decide to include Socket.IO in your tech stack and plan to use it at scale, you just have to be aware that it won't be a walk in the park. One of the biggest challenges you’ll have to face is to dependably scale and manage complex system architecture and messy infrastructure. Sometimes it’s easier and more cost-effective to offload this complexity to a managed realtime solution that offers similar features to Socket.IO.

If you’re already constantly patching scaling issues, it might be time to reconsider your architecture. Why not take a look at our list of Socket.IO alternatives, or sign up to Ably to try our platform for free?
