Introduction

Databases are one of the most useful and foundational constructs in computing. They allow us to take our exponentially growing volumes of information and organize them according to efficient, logical patterns of information architecture. Any time information needs to be held in place for any length of time, it is held in a database of some kind, ranging from a simple grocery list on a piece of paper to billions of convoluted, interrelated records in a system that must remain highly responsive to its users, whether one or millions.

A database’s state is a snapshot of it at any arbitrary instant in time. State can be changed by events: the snapshot morphs into a new state every time an event occurs to change the database. For example, if you add an item to your shopping list, that is an event. Similarly, if you put a grocery item in your basket and cross it off your list, you have altered the list's state (along with the state of your mind about that item, in the sense of not having to think about it).
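The shopping-list example can be sketched in a few lines of Python. This is only an illustration of "state plus event gives new state": the `Event` class, the event names `add` and `cross_off`, and the `apply` function are hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str  # "add" or "cross_off" (hypothetical event names)
    item: str


def apply(state: frozenset, event: Event) -> frozenset:
    """Return the new snapshot produced by applying one event to the old one."""
    if event.kind == "add":
        return state | {event.item}
    if event.kind == "cross_off":
        return state - {event.item}
    raise ValueError(f"unknown event kind: {event.kind}")


state = frozenset()  # the empty list is the initial snapshot
events = [Event("add", "milk"), Event("add", "eggs"), Event("cross_off", "milk")]
for event in events:
    state = apply(state, event)  # each event morphs the snapshot into a new one

print(sorted(state))  # ['eggs']
```

Each intermediate value of `state` is a complete snapshot, and the list of events is the full history of how the final snapshot came to be.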

Event-driven architecture is a computing paradigm concerned with how events affect information. It's a way of acting on the information we possess: specifically, of taking actions in response to whatever state that information is in. For example, if the light turns green, we react to this information as soon as possible and, assuming cross traffic has cleared the intersection completely, we proceed. The green light event triggers an action that changes our state from stationary to mobile.

Realtime is the capacity not just to respond to events, but to do so instantaneously, or practically so in terms of human perception. Consumers increasingly demand experiences that are (perceptibly) instantaneous, and SLAs of "as quick as possible, but not quicker" no longer cut it. To satisfy these requirements, realtime deadlines are much stricter.

Some developers use databases because their use case requires them to write, store, and retrieve data. Other developers use realtime because their users have interactive, live demands, where the data loses all or most of its business value once a very short deadline passes. Still others have a strong need for both and, to get a minimum viable product off the ground as quickly as possible, go for the low-hanging fruit of all-in-one solutions that happen to include some realtime functionality.

No problem is simple at scale

If you separate your problem into a series of blocks, then early in the development cycle a single massive "it fits all boxes" solution is great: you can get started fast and you know where you're going. Say you choose a BaaS (Backend as a Service) because it has so many features, one of which is advertised as realtime.

Early in the dev cycle, generalist solutions that do some database things and also some realtime things are great. If you never get to a stage where you outgrow your platform offerings, then a close coupling of your application with your service will probably be sufficient.

Some examples of software that combines database and realtime offerings include Firestore, Firebase, and Supabase. They have a lot to offer in terms of value at the prototyping stages.

As soon as you step outside the basic paradigm of a BaaS for simple mobile and web applications, this is no longer a simple thing. The emphasis is on simple, because most applications do not require a very large amount of state, complex databases, or joins of some data in a place that's easy to get to and from. Beyond that paradigm, the tight coupling and lack of modularity strain and break down at serious enterprise scale.

When your app starts going very fast or hits very large numbers, the simple bit goes away because no problem is simple at scale. Even serious at-scale database solutions like Postgres — able to handle thousands of concurrent writes per second — can struggle when attempts are made to use them in a realtime capacity.

On modularity

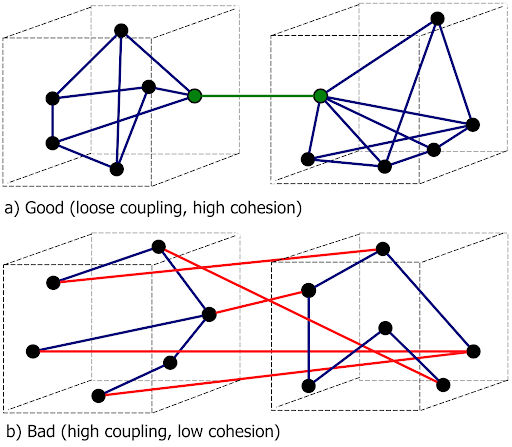

The main problem is lack of modularity: each tool should do its own job. Databases are bases of data. They should do one thing and one thing well, which is to be the one base source of truth and data. They should never be saddled with realtime concerns — that is not their strength by design. They should do storage, and a different dedicated piece should do realtime comms. All the other problems stem from this.

At scale, especially at the enterprise level, the tight coupling of the everything-in-one solution balloons in complexity and starts to break down, exposing how weakly its parts were connected in the early stages. For example, if you can accept that not all your reads necessarily return the latest state, then it's easier to scale up because you don't have to check for the latest state every time some data is read. The more instances you have, the more costly the check. But if you want to guarantee that the state is in sync everywhere, then that will break at scale.
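To make the cost argument concrete, here is a deliberately simplified toy model. It is not how any particular database implements consistency; the `Replica` class, the two read functions, and the "cost" counter (number of instances consulted) are all assumptions made for illustration.

```python
class Replica:
    """A toy database instance holding one versioned value."""

    def __init__(self):
        self.version = 0
        self.value = None

    def write(self, version, value):
        self.version, self.value = version, value


def relaxed_read(replicas):
    """Read from a single instance. May be stale. Cost: 1 instance consulted."""
    return replicas[0].value, 1


def latest_read(replicas):
    """Consult every instance and keep the newest version.
    Cost grows linearly with the number of instances."""
    newest = max(replicas, key=lambda r: r.version)
    return newest.value, len(replicas)


replicas = [Replica() for _ in range(5)]
for r in replicas:
    r.write(1, "odds=2.0")
# A new write has so far reached only the last instance.
replicas[-1].write(2, "odds=1.8")

stale_value, stale_cost = relaxed_read(replicas)
fresh_value, fresh_cost = latest_read(replicas)
print(stale_value, stale_cost)  # odds=2.0 1
print(fresh_value, fresh_cost)  # odds=1.8 5
```

The relaxed read stays at a cost of one no matter how many instances exist, while the "always latest" read costs one check per instance on every read.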

The right tool for the right job

It makes perfect sense to choose an all-in-one solution if that’s what suits your business needs. You want to minimize operational costs and maximize competitive advantage by choosing the right tool for the right job and at the right time.

However, some of those features are frequently either tacked on or not best in breed. There is a familiar trap of trying to squeeze everything out of every available feature. The most pragmatic approach is to choose a key subset of features (authentication, storage, hosting, realtime features, cloud functions, etc.), evaluate them against your business needs, and, for the missing pieces, add best-in-breed components in a modular fashion.

Questions to ask when evaluating realtime databases

By definition, at scale you have to think modular — no tool can do everything; each tool should do one thing well independently — and you should work as hard as possible to decouple your realtime concerns from your storage concerns.

There is a significant number of questions that should be raised during the evaluation process of any solution where the requirements call for both storage and realtime.

What follows is a non-exhaustive list of caveats to consider when evaluating realtime databases.

Performance

Does your platform autoscale your database? How does it stand up to a serious load or a fast ramp-up of said load? Can the bandwidth, CPU, memory, and disk usage stand up to a spike, especially a very sudden one, while maintaining acceptable latency? Do you rely on cloud functions for instantaneous results or do you just deploy them for longer-running tasks? At what number of concurrent connections does the performance go sideways? Do you need to synchronize your tables to small devices that may or may not have the memory? Is your platform as available as advertised? Does it scale elastically with your usage? Do you need to artificially spool up ahead of an anticipated rush of traffic?

Operations

What are the platform’s limitations? Are the integrations best-in-class? Is this an architecture you can succeed with? Is it an acceptable risk to have all components tied to a single platform simultaneously? What kind of safeguards are in place to prevent accidental overages? Can you pay your way out of a bottleneck?

Database features

Do you require complex database constructs? Is the database ACID-compliant (Atomicity, Consistency, Isolation, and Durability)? Does it include a full-featured query language, triggers, foreign keys, or stored procedures? Which of those do you need now? (The DB probably should generally have most of these, while separate realtime systems should be dedicated to realtime concerns.) Is there a modular way to add functionality? Will your data architecture scale? Do you need to think about local stores and the impact of local processing?

Realtime features

Do your requirements include realtime features like guaranteed, practically-instantaneous, ordered delivery? How about idempotency, or presence at scale? How are they defined and are they bounded? What is your potential platform's proposed solution? Is it a simple implementation? Is it modular? Is your architecture event-driven? Can it elastically scale out with topics for each type of event? Are you forced to design your own load balancing for massive fanouts beyond the stated limits? Can you make realtime updates without a database transaction? Are you using HTTP or WebSockets? What kind of delivery promises can you give your users? Do you need a BaaS with some realtime capabilities, or a performant database solution with a scalable realtime component loosely coupled with it?
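To give a feel for what ordered delivery and idempotency mean on the receiving side, here is a minimal sketch. It assumes each message carries a sequence number starting at 1; the `OrderedConsumer` class is hypothetical and is not the API of any particular realtime platform.

```python
class OrderedConsumer:
    """Delivers payloads in sequence order and ignores duplicates."""

    def __init__(self):
        self.next_seq = 1    # sequence number we are waiting for
        self.pending = {}    # messages that arrived ahead of their turn
        self.delivered = []  # payloads handed to the application, in order

    def receive(self, seq, payload):
        # Idempotency: a message seen before (delivered or buffered) is dropped.
        if seq < self.next_seq or seq in self.pending:
            return
        self.pending[seq] = payload
        # Ordering: release buffered messages only once the gap is filled.
        while self.next_seq in self.pending:
            self.delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1


consumer = OrderedConsumer()
# Messages arrive out of order and with duplicates.
for seq, payload in [(2, "b"), (1, "a"), (2, "b"), (3, "c"), (1, "a")]:
    consumer.receive(seq, payload)

print(consumer.delivered)  # ['a', 'b', 'c']
```

Note that the `pending` buffer is unbounded in this sketch, which is exactly the kind of detail the "are they bounded?" question is meant to surface.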

State

State synchronization is difficult to achieve and a lot of the answers are "it depends". Is the latest state all that you care about? Do you have to synchronize large amounts of data between devices or are you just sharing state with the server? Would event-based triggers suffice? Do you have the ability to adjust how and when your state is synchronized? Has the platform figured out the hard deterministic convergence problems of synchronization via events? Are there primitives for distributed state/events?

Ultimately, all these questions boil down to just one:

“What is the right tool for the right job at the right time?”

Typical development paths when massively scaling database-centric applications

There are several development paths you can take when your app scales on a serious level, but the end result is invariably a solution characterized by high cohesion and loose coupling.

For example, your app starts out with a BaaS because you need the full package of back-end features such as authentication, storage, and cloud compute. You keep adding realtime features until the application becomes difficult to scale, and making realtime updates in a way that feels dynamic to a user becomes too complicated to do with the database directly.

This is the point where it's best to abstract out the realtime aspect to a specialist provider to do triggers and other events. You still use the original database for data and maybe for other bonus things that come with the BaaS.

Or, alternatively, you start with a database, but find that your needs increase toward more complex operations on data. You move on to more powerful structured solutions from the same provider, and/or move to Cassandra, Postgres, DynamoDB or equivalent. The realtime components remain lacking as the scale goes up, at which point it makes sense to add a realtime updating system that can pair well with any of the others beyond the constraints of a database.

Real world example

One way to picture this bottleneck is using the example of a sports betting app built on top of a common realtime database such as Firebase. You set up Firebase to handle all your logins, user management, and realtime updates. People can then place bets in the app in realtime.

This gets you up and running, but you start to run into problems when your high-speed order book has to put its data into the database in order to broadcast it. That starts to take too long, causing the app either to reject bets or to expose you to unbalanced risk by letting users bet on potentially out-of-date odds.

Now with a million users, you're genuinely running into difficulties because you're sending out new odds that have to be replicated to multiple databases before they can be fanned out to the users. This obviously creates conditions of unacceptable risk and unacceptable user experiences.

In such a case, the database can still be the storage of record where the user data lives and where transactions go. However, you can now call a cloud function on a state change from your now much simpler database and publish into your realtime solution whatever you would have put into your database, because calling a cloud function on publish is easy. You thus have a single arrow out of your database. You still use the database, but you trigger an action from the storage layer rather than having it try to fire its own broadcaster.

You can do any of the following:

- Have everyone write to the database directly, with each update triggering a cloud function that tells your realtime broadcaster to send the change to everyone else.

- Publish messages through your realtime broadcaster and store them directly in the database using an integration.

- Call a cloud function on every single inbound message.
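The first option can be sketched with in-memory stand-ins. Everything here is hypothetical and simplified: `Database`, `Broadcaster`, and `on_odds_changed` merely play the roles of the store of record, the dedicated realtime service, and the cloud function. None of them corresponds to a real vendor API.

```python
from collections import defaultdict


class Broadcaster:
    """Stand-in for a dedicated realtime service: fans messages out per topic."""

    def __init__(self):
        self.subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self.subscribers[topic].append(callback)

    def publish(self, topic, message):
        for callback in self.subscribers[topic]:
            callback(message)


class Database:
    """Stand-in for the store of record: persists data, then fires one hook."""

    def __init__(self, on_write):
        self.rows = {}
        self.on_write = on_write

    def write(self, key, value):
        self.rows[key] = value
        self.on_write(key, value)  # the single arrow out of the database


broadcaster = Broadcaster()


def on_odds_changed(key, value):
    """Plays the role of the cloud function triggered by a state change."""
    broadcaster.publish("odds", {"market": key, "odds": value})


db = Database(on_write=on_odds_changed)

alice_inbox, bob_inbox = [], []
broadcaster.subscribe("odds", alice_inbox.append)
broadcaster.subscribe("odds", bob_inbox.append)

db.write("match-42", 1.8)
print(alice_inbox)  # [{'market': 'match-42', 'odds': 1.8}]
print(bob_inbox)    # [{'market': 'match-42', 'odds': 1.8}]
```

The database only knows about a callback, and the broadcaster knows nothing about the database, so either side could be swapped out or scaled independently.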

If you remove the realtime part of your database — the part where you used to read and write a million times to it — you can use it as a store. Or, in the case of a BaaS like Firestore, which is a big system that does everything, you can use it as a store, authentication layer, REST API, everything. Like a lot of other serverless solutions, it’s a full back end with all the benefits, like a perfectly fine NoSQL database. The realtime functionalities that work so well on a small scale might not necessarily hold up under hard scrutiny. However, this scaling conundrum can be easily resolved with a robust realtime module that plugs well into your existing solution.

Conclusion

In general engineering terms, modularity is the gold standard of design patterns. By definition, generalist all-in-one solutions are rarely a good idea if you want to do anything specific, especially on a large scale where heretofore small or immaterial problems start posing material risks to the business.

If you're thinking of your architecture in terms of databases with integrated realtime features, then you're not really thinking in terms of the actual realtime that your large user base demands. The class of products called realtime databases most certainly has its place. Firebase is great for what it does, and so is Firestore. They are databases with an entire back end as a service, and they also happen to offer some realtime(-ish) features. It's a highly attractive value proposition, at least for rapid development purposes at non-enterprise scale. The trade-offs might be entirely worth it.

However, specialist providers exist for a very good reason, and that's operational modularity. Realtime databases are not a contradiction in terms, at least not at a small scale, but for strategic purposes they do not offer scalable primitives that are low-level enough to build upon. Instead, they are services you must build with, or rather around, which forces considerable complexity into your architecture down the line. When that time arrives, you must be ready to shift your perspective quickly from the quick and dirty to the bigger picture.

When you choose a database and have realtime requirements, you generally scale up with it until it stops meeting your needs. We love Firebase and Firestore and several other realtime databases. They're great at what they do, and they will get you off the ground faster than a lot of robust solutions.

The overarching idea is not to force yourself into patterns just because they're the only thing you know at the time. Decoupling systems means you can scale them at any time, now or later. If you can afford the time to think about it earlier, it will pay dividends later. If you must pump out an MVP now (yesterday!), then design your system to be portable for later by giving some thought to the considerations listed above.

When the realtime part eventually stops scaling, the crystal ball says it's because it was never going to scale. The idea all along was to choose the best dedicated tools for their individual jobs, ideally in such a way that they can work well together while knowing almost nothing about each other. True modularity. High cohesion within each module with loose coupling among modules with dedicated tasks. The principles of robust distributed realtime computing that scales. Instead of trying to foist unnatural functionality onto your database, use it for its dedicated purpose, and find a realtime tool that fulfills all your serious at-scale business requirements. Your massive user base will thank you later.

About Ably

Ably is a fully managed Platform as a Service (PaaS) that offers fast, efficient message exchange and delivery and state synchronization. We solve hard engineering problems in the realtime sphere every day and revel in it. If you are running into problems trying to massively, predictably, securely scale your realtime messaging system, get in touch and we will help you deliver seamless realtime experiences to your customers.
