We recently announced Ably's Control API, a REST API that enables you to manage Ably's configuration programmatically. You can now use the Control API to configure anything previously only configurable via the Ably dashboard.

The Ably platform is designed around four pillars of dependability: Performance, Integrity, Reliability, and Availability. The Control API is no exception; it should adhere to those too, and our development process tested it to ensure reliable support for multiple simultaneous requests. Load testing determined the number of concurrent users the Control API could support at any given time and how it behaved under heavy load. We used the results of the load tests to provision our server resources adequately.

What is load testing?

Load testing measures how a system performs under stress by simulating a large number of concurrent users. The test scenario must mirror real user behavior as closely as possible.

We could have just hammered the Control API with requests and counted how many concurrent users it took to make it fall over. That is a useful metric, but it reveals only the breaking point at which the Control API could no longer cope with the load. It wouldn't tell us much about why it fell over or what we could do to prevent it.

We set out to measure more than just the number of concurrent users that the Control API could support. We wanted to understand how the Control API performs under specific scenarios.

For example, if it is under attack and dealing with multiple malicious requests, how well does it serve valid requests? What effect does this have on response latency? How are our supporting cache and database clusters affected? Are all other external resources and services working as they should? Is this adversely impacting other parts of the Ably system?

Internally, we had a few hypotheses about how the Control API would perform under heavy load, based on educated guesses from past usage. However, we wanted to remove the guesswork and use these tests to get actual data to back up our predictions.

Tools for load-testing

We have previously used Apache JMeter for load testing and, despite its learning curve, it was our first choice for this project. However, it wasn't flexible enough for our needs: it relies heavily on verbose XML configuration, and it is thread-bound, creating a new thread for each user it simulates, which can be very resource-intensive.

Also, as our Control API evolves, we would like our performance test scripts to evolve along with it. One of the best ways to do this is to version the scripts in Git. Although you can check JMeter configurations into Git, they are verbose and not very readable.

We instead turned to Locust, an open-source, scalable, distributed load-testing framework for web services, which accepts straightforward Python scripts. Unlike JMeter, Locust uses green threads and needs fewer resources to simulate users. We wrote a Python script to set up our load testing, and we have added it to our codebase so we can expand upon it as we continue to extend what we offer through the Control API.

How to identify realistic usage patterns when load testing

An effective load-testing strategy simulates real user behavior as closely as possible. We identified the following types of users that we would expect to access Ably's Control API.

- Typical users - These are users with valid access and good intentions who are using the API responsibly.

- Power users - These users are pushing our system to the limit but don't have malicious intent.

- Bad users and bots - Users with valid access but bad intentions who are not using the API responsibly. We also include bots (and other users with no valid access that keep the Control API busy serving requests) in this category.

The main aim is to continue to serve the typical users and power users without any impact from bad users or bots. The first thing we did was determine the expected behaviors for the user types we identified.

Typical/Power users

- Get valid authorization tokens

- Make different types of requests to manage resources

- Throttled to one request every few seconds

Bad users

- Same steps as a good user but not throttled

Bots

- Some authenticate with a valid-looking token, while others use an invalid or gibberish token

- Attempt to make requests to manage resources

How to configure Locust

A single machine wasn't capable of simulating the number of users that we needed, so, to make sure we could run the test with the desired number of users, we configured Locust to run the collection of users (known as the Swarm) in distributed mode.

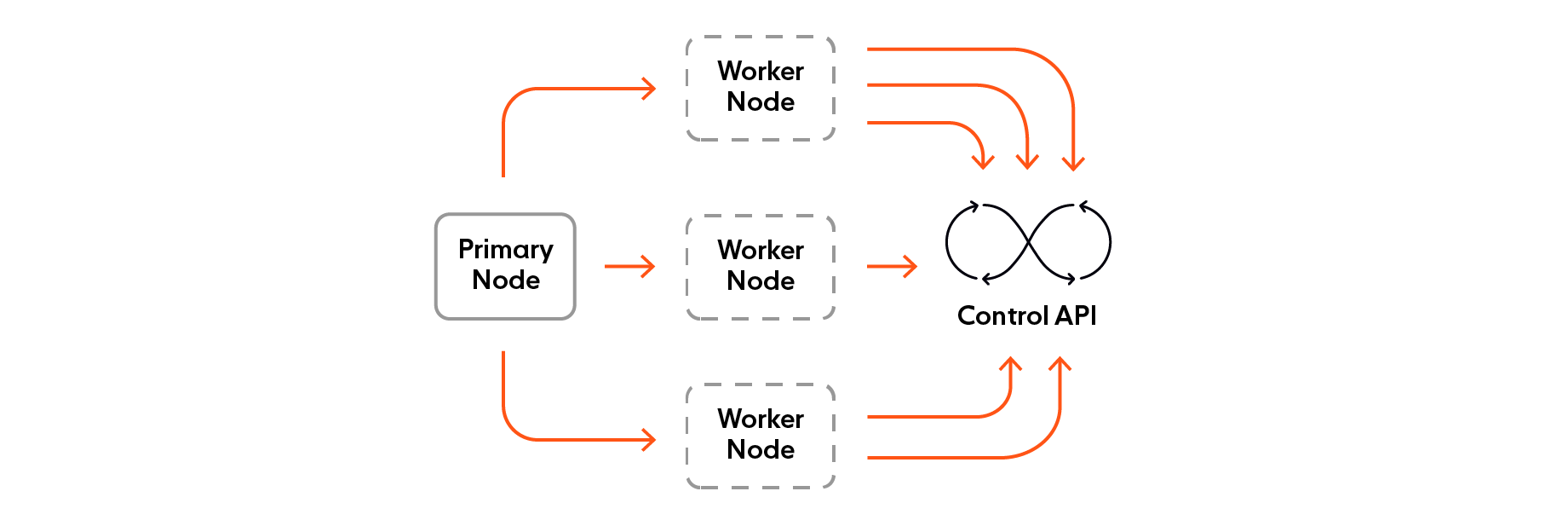

Primary node: This node does not simulate any users itself. It runs Locust's web interface, where we can start the test and see live statistics. We used Locust's --master flag to make an instance behave as this node when running the command.

Worker nodes: These nodes simulate our users. To make an instance run in worker mode, we used the --worker flag. As you can have multiple worker nodes when testing, we used a few to represent the different types of users we have.
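As a rough illustration of the two roles, the sketch below builds the command line for each node type. The helper name, the locustfile name, and the example IP address are assumptions; `-f`, `--master`, `--worker`, and `--master-host` are standard Locust options.

```python
import shlex


def locust_command(role, locustfile="locustfile.py", master_host=None):
    """Return the argument list that starts Locust in the given role."""
    cmd = ["locust", "-f", locustfile]
    if role == "master":
        # The primary node serves the web UI and aggregates statistics.
        cmd.append("--master")
    elif role == "worker":
        # Worker nodes simulate users and report back to the primary node.
        cmd.append("--worker")
        if master_host is not None:
            cmd += ["--master-host", master_host]
    else:
        raise ValueError(f"unknown role: {role!r}")
    return cmd


if __name__ == "__main__":
    print(shlex.join(locust_command("master")))
    # 192.0.2.10 is a documentation-only example address.
    print(shlex.join(locust_command("worker", master_host="192.0.2.10")))
```

Each worker would be started on its own machine (or process) and pointed at the primary node's address.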

How to deal with rate-limiting when load testing

One of the main problems we ran into as we started load testing was a rate-limiting issue. To slow down or stop attacks, we limit requests made by a specific user / IP. Our initial test setup sent all the requests from the same IP (the test machine), and when it hit the limit, it was throttled/blocked by the server. While that confirms that we can limit unauthorized and bad users who make too many requests, the testing failed because we also blocked the simulated good users on the same IP.
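To see why a shared IP skews the results, consider a toy fixed-window limiter keyed by source IP. This is only an illustrative sketch, not the rate limiter the Control API uses; the limit, window, and addresses are made-up values.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` requests per IP in each fixed time window."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window = window_seconds
        self.counts = defaultdict(int)

    def allow(self, ip, now):
        """Record a request from `ip` at time `now`; return True if it is allowed."""
        key = (ip, int(now // self.window))
        self.counts[key] += 1
        return self.counts[key] <= self.limit


limiter = FixedWindowLimiter(limit=5, window_seconds=60)

# A simulated bad user floods the API from the shared test machine.
for second in range(10):
    limiter.allow("10.0.0.1", now=second)

# A simulated good user on the same IP is now blocked as well...
good_user_same_ip = limiter.allow("10.0.0.1", now=10)
# ...while the same request from a different IP goes through.
good_user_other_ip = limiter.allow("10.0.0.2", now=10)

print(good_user_same_ip, good_user_other_ip)
```

The limiter cannot tell the good user from the bad one because both share a key, which is exactly what happened when every simulated user ran from one machine.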

We needed to simulate traffic from different IPs to measure resilience, so we used Squid, a caching and forwarding HTTP web proxy, to forward requests. Routing traffic through a network of proxies let us send many concurrent requests without a single source IP hitting the rate limit.

We set up a few EC2 instances on an AWS account that each ran an instance of Squid proxy. We then forwarded requests made from the local test machine to the proxy on AWS to ensure that requests originated from different IPs, as they would in the real world.
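One way to spread simulated users across the proxies is a simple round-robin. The sketch below is an assumption about how this could be wired up, not a record of our setup: the host names are placeholders, and 3128 is Squid's default port.

```python
from itertools import cycle

SQUID_PORT = 3128  # Squid's default listening port


def proxy_settings(proxy_hosts, port=SQUID_PORT):
    """Yield an endless round-robin of requests-style proxy mappings."""
    for host in cycle(proxy_hosts):
        url = f"http://{host}:{port}"
        yield {"http": url, "https": url}


# Placeholder host names standing in for the EC2 instances running Squid.
pool = proxy_settings(["proxy-a.example.test", "proxy-b.example.test"])

first = next(pool)
second = next(pool)
third = next(pool)  # wraps around to the first proxy again

print(first["https"], second["https"], third["https"])
```

Because Locust's `HttpUser.client` is a `requests` session, each simulated user could take the next mapping from the pool in its `on_start` method and assign it to `self.client.proxies`.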

How to manage resources when load testing

By its very nature, load testing in a production-like environment needs a lot of resources to be provisioned for the system. We ran into trouble because our database was initially under-provisioned for handling all the concurrent requests. We scaled it up, then kept a close eye on our Amazon EC2 usage to monitor our consumption.

We were mindful that we could rack up a huge bill if the load testing system went rogue. After provisioning our testing servers to mimic production closely, we put some alerting in place to warn us if our usage got too high, together with some metrics to help us better measure and predict real-world usage of the Control API.

Conclusion

We were able to run the tests for our desired number of concurrent users. This gave us confidence in the Control API and led us to discover breaking points for both our web server and our database for the dashboard system. The tests also confirmed that we made the right choice in selecting Puma as the application web server.

We now have a better idea of how the Control API will perform when we release it in general availability (GA). Because we now have threshold metrics, we can rerun the load tests as regression tests to ensure that large new features don't slow things down unexpectedly. We can also use the information gathered to optimize our web architecture further.
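A regression check against threshold metrics could look something like the sketch below. The metric names and threshold values are hypothetical; they only illustrate comparing a run's results against agreed limits.

```python
import math


def percentile(samples, pct):
    """Return the nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def threshold_breaches(latencies_ms, failures, thresholds):
    """Return the metrics from one test run that exceed their thresholds."""
    observed = {
        "p95_ms": percentile(latencies_ms, 95),
        "failure_rate": failures / len(latencies_ms),
    }
    return {name: value for name, value in observed.items() if value > thresholds[name]}


# Hypothetical limits for illustration only.
THRESHOLDS = {"p95_ms": 500, "failure_rate": 0.01}

healthy_run = threshold_breaches(list(range(1, 101)), failures=0, thresholds=THRESHOLDS)
slow_run = threshold_breaches([100] * 90 + [900] * 10, failures=5, thresholds=THRESHOLDS)

print(healthy_run)
print(slow_run)
```

An empty result means the run stayed within its limits; anything else names the metric that regressed and the value observed.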

We found Locust and Squid to be amazing tools, right out of the box. Locust, in particular, was convenient to use and extensible, and it makes it easy to configure exactly what you want to test. Purely for the flexibility to write your own tests based on your use case, we recommend it for web application testing.

Useful resources

- Locust documentation

- Information on how to set up Squid proxy

- Infrastructure as Code: Manage apps using the Ably Control API GitHub Action

- Find out more about the Ably platform

About Ably

Ably is a fully managed Platform as a Service (PaaS) that offers fast, efficient message exchange and delivery, and state synchronization. We solve hard engineering problems in the realtime sphere every day and revel in it. If you are running into problems trying to massively, predictably, securely scale your realtime messaging system, get in touch, and we will help you deliver seamless realtime experiences to your customers.