What is long polling?

Long polling is a technique for achieving realtime communication between a client and a server over HTTP.

It works by keeping a connection open until the server has new data to send, so the client gets updates as soon as they’re available - without needing a constant connection like WebSockets.

This method emerged as a practical solution before WebSockets were standardized and remains relevant in certain scenarios today, especially in constrained or legacy environments.

If you are evaluating realtime communication strategies, understanding how long polling works - and where it fits in a modern architecture - is key to making scalable, maintainable choices.

How HTTP long polling works

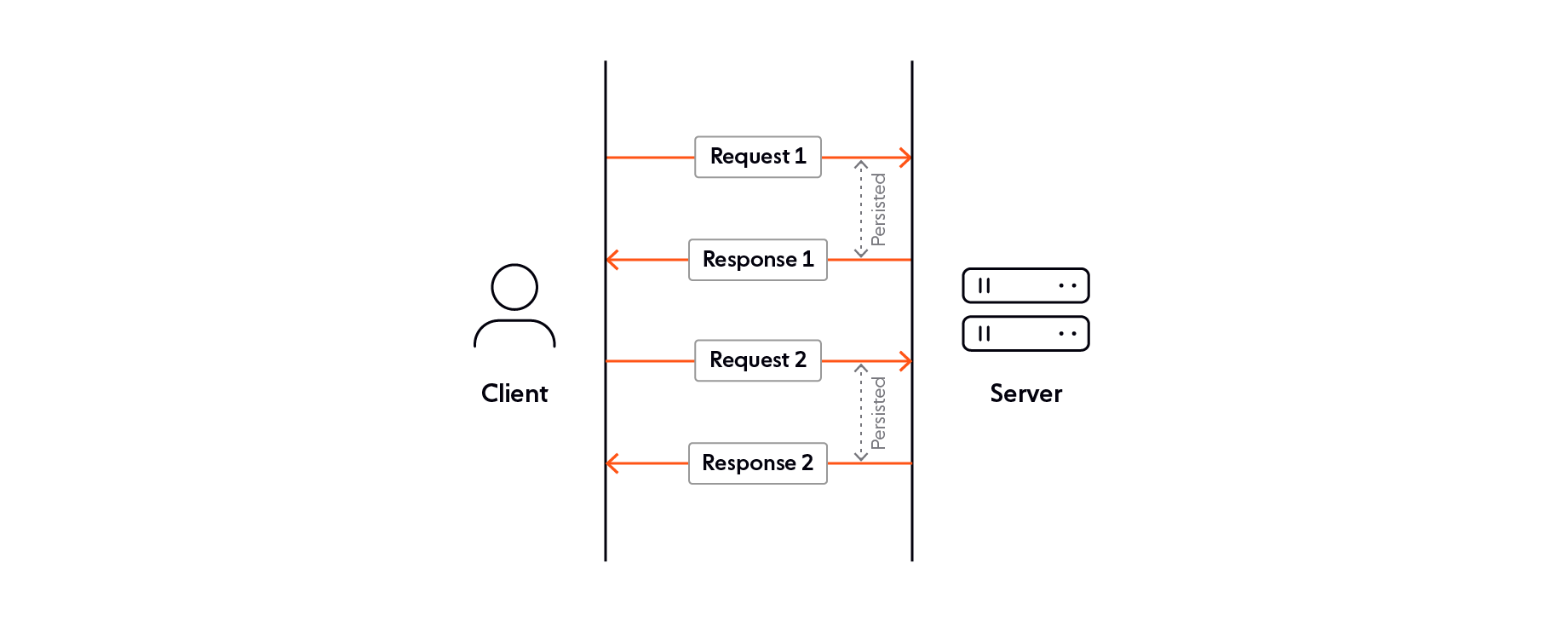

HTTP long polling solves the problem of building bidirectional applications on the web - a platform designed for a one-way world where clients make requests and servers respond. This is achieved by turning the request-response model on its head:

Client sends a GET request to the server: Unlike a traditional HTTP request, you can think of this as open-ended. It isn’t asking for a particular response, but for any response when it is ready.

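Time passes: The server holds the request open instead of answering straight away. For this to work, the client, the server, and any proxies in between must tolerate a long wait for the response, so long polling sets a very long or indefinite request timeout. (The Keep-Alive header is related but different: it controls how long an idle persistent connection is kept for reuse, not how long a response may take.)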

The server responds: When the server has something to send, it completes the held request with a response. That could be a new chat message, an updated sports score, or a breaking news alert.

Client sends a new GET request and the cycle begins again.
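The cycle above can be sketched on the client side in a few lines of Python. This is a minimal illustration rather than a production client: the function names, the JSON response body, and the use of a 204 status to mean "nothing new yet" are all assumptions, not part of any standard.

```python
import json
import urllib.request
from typing import Callable, Iterator, Optional


def fetch_once(url: str, timeout_s: float = 60.0) -> Optional[dict]:
    """Send one long-poll GET and wait up to timeout_s for the server to answer."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            if resp.status == 204:  # assumed convention: no new data, ask again
                return None
            return json.loads(resp.read().decode("utf-8"))
    except OSError:
        # Covers timeouts, URLError, and HTTPError; treated here as "no data".
        return None


def long_poll(fetch: Callable[[], Optional[dict]], max_requests: int) -> Iterator[dict]:
    """Repeat the cycle: each response (or timeout) is followed by a new request."""
    for _ in range(max_requests):
        message = fetch()
        if message is not None:
            yield message
```

A caller might write `for msg in long_poll(lambda: fetch_once("https://example.com/poll"), max_requests=100): ...`, where the URL is a placeholder. Passing the fetch function in as a parameter keeps the loop itself independent of the HTTP library.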

Key to the success of HTTP long polling is that it does nothing to change the fundamentals, particularly on the client. Working within the web’s existing model, and using essentially the same infrastructure as the static web, long polling’s superpower is that it works almost everywhere. It’s also less wasteful than regular short polling. Although clients still make multiple HTTP requests, almost all of them result in data returned from the server.

However, it’s on the server that most of the work needs to happen. In particular, managing the state of potentially hundreds of thousands of connections is resource intensive.
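To make that server-side work concrete, here is a minimal in-memory sketch of how a server might park requests until data arrives. The `Channel` class and its method names are hypothetical, and the sketch assumes a thread-per-request server; a request handler would call `wait_for_next` and write whatever it returns as the response.

```python
import threading
from typing import List


class Channel:
    """Parks long-poll requests until a message is published or a timeout passes."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._messages: List[str] = []

    def publish(self, message: str) -> None:
        with self._cond:
            self._messages.append(message)
            self._cond.notify_all()  # wake every request currently parked below

    def wait_for_next(self, timeout_s: float) -> List[str]:
        """Block until something new is published; return [] if the timeout expires."""
        with self._cond:
            start = len(self._messages)
            self._cond.wait_for(lambda: len(self._messages) > start, timeout=timeout_s)
            return self._messages[start:]
```

In this sketch every waiting client occupies a thread for the whole wait, which is exactly the kind of per-connection cost described above. An asynchronous server lowers the cost per connection but still has to track each open request. Note also that anything published between two calls to `wait_for_next` is never seen by that client.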

Scalability considerations

From a scalability standpoint, long polling introduces notable limitations:

Each open request ties up server resources: Even in asynchronous environments, managing thousands or millions of concurrent long poll connections increases memory and compute load.

Timeouts and retries become bottlenecks: When under heavy load, delayed responses and retry storms can overwhelm backend systems.

Infrastructure becomes harder to scale predictably: Unlike protocols designed for persistent connections (like WebSockets), long polling pays for a full HTTP request and response, headers included, on every update, leading to inefficient utilization.

These factors make long polling suboptimal for modern, business-critical realtime applications where scalability, latency, and reliability are core requirements.
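One common way to soften the retry storms mentioned above is for clients to wait a randomized, exponentially growing delay before reconnecting after a failure. The sketch below shows one variant, often called "full jitter"; the function name and the default base and cap values are illustrative assumptions.

```python
import random
from typing import Callable


def backoff_delay(
    attempt: int,
    base_s: float = 1.0,
    cap_s: float = 30.0,
    rng: Callable[[], float] = random.random,
) -> float:
    """Return a random delay between 0 and min(cap_s, base_s * 2**attempt) seconds."""
    ceiling = min(cap_s, base_s * (2 ** attempt))
    return rng() * ceiling
```

A client would call `time.sleep(backoff_delay(attempt))` after each consecutive failure and reset `attempt` to zero after a successful response. The randomness spreads reconnects out so that clients dropped at the same moment do not all return at the same moment.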

So, is HTTP long polling a good solution?

When it comes to enabling servers to push data to web clients, HTTP long polling is a workaround. It bends HTTP slightly out of shape to give us a widely available way to let web servers push data to web clients. But does it still make sense in a world where multiple, dedicated realtime protocols are available?

The short answer is that, yes, HTTP long polling still has a role to play - but more as a fallback in most scenarios. To understand where long polling can be useful, we should look at what it does well and where it falls short.

What long polling does well:

Broad compatibility: Works with all modern browsers, HTTP clients, and proxy setups.

Firewall-friendly: Bypasses many restrictions that block WebSocket connections.

Simple to implement: Uses standard HTTP semantics and request/response flow.

Reliable fallback: Especially useful in environments where bidirectional protocols are unsupported.

Where long polling falls short:

Inefficient under load: Each client holds an open connection, which consumes server resources (threads, memory).

Higher latency than WebSockets: Especially when server response or timeout intervals are not finely tuned.

Complex client logic: Requires retry handling, exponential backoff, and timeout management.

Poor scalability: Difficult to horizontally scale long polling infrastructure for large user bases without significant operational overhead.

No guarantee of message delivery or order: Long polling doesn’t guarantee that messages will arrive in the right order - or even at all.
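Applications that need ordering and delivery guarantees on top of long polling usually have to add them themselves. One possible approach, sketched below, assumes the server stamps every message with a sequence number starting at 1 and that the client reports the last number it delivered on each new request (for example through a hypothetical `since` query parameter). The `OrderedInbox` class is illustrative only.

```python
from typing import Dict, List, Tuple


class OrderedInbox:
    """Delivers messages in sequence order, dropping duplicates and buffering early arrivals."""

    def __init__(self) -> None:
        self.last_seq = 0                    # highest sequence number delivered so far
        self._pending: Dict[int, str] = {}   # messages that arrived ahead of a gap

    def receive(self, batch: List[Tuple[int, str]]) -> List[str]:
        for seq, body in batch:
            if seq > self.last_seq:          # anything at or below last_seq is a duplicate
                self._pending[seq] = body
        delivered: List[str] = []
        while self.last_seq + 1 in self._pending:
            self.last_seq += 1
            delivered.append(self._pending.pop(self.last_seq))
        return delivered
```

Sending `last_seq` with the next poll lets the server replay anything the client missed while it was between requests, which covers the "or even at all" case as long as the server retains recent messages.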

When should you use HTTP long polling?

Long polling remains a viable option in specific use cases. But its limitations become more pronounced as scale and latency requirements increase.

Use long polling when ...

WebSocket connections are blocked by proxies or firewalls - especially in enterprise or corporate networks.

You're working with older browsers or legacy client SDKs.

Your app sends infrequent or bursty realtime updates.

You need a quick, simple realtime layer for a prototype or MVP.

Avoid long polling when ...

You need to support thousands of concurrent users or more.

Your application demands low-latency, high-frequency updates (e.g. collaborative editing, gaming, IoT telemetry).

You aim for cost-efficient scaling in a cloud-native environment.

You want simpler client and server logic, especially for mobile devices.

What are the alternatives to HTTP long polling?

Depending on your product’s scale, latency sensitivity, and client environments, alternative realtime protocols may offer better performance, simpler integration, and lower operational overhead.

Here’s a high-level overview of alternatives you might want to consider:

| Protocol | Characteristics | Use cases | Caveats |
| --- | --- | --- | --- |
| WebSocket | Bidirectional, persistent, low latency communication | Chat, multiplayer collaboration, realtime data updates | WebSocket is stateful, meaning you’ll have to think about how to manage state if you scale horizontally |
| Server Sent Events | One-way message push from server to client | Data updates, such as sports scores | Not suitable for two-way communication |
| MQTT | Lightweight protocol for two-way communication | Streaming updates from IoT devices with constrained bandwidth | MQTT requires a central broker, which could make scaling harder |

Building scalable, resilient realtime infrastructure

HTTP long polling has its place - but more modern approaches that overcome its downsides (primarily latency and difficult scaling) are available. So, we should think of HTTP long polling as one tool amongst many.

But realtime communication isn’t only about choosing the right protocol. You also need the infrastructure to back it up.

Although you can build your own, there are significant risks. Designing, building, and maintaining realtime infrastructure will redirect your engineering capacity away from working on your product’s core features. Our research shows that 41% of in-house realtime infrastructure projects miss deadlines. That can mean delays in getting to market, as well as the need to hone specialized engineering skills. Instead, you might want to consider a realtime platform as a service, such as Ably.

Our reliable, low latency, realtime platform as a service (PaaS) lets you choose the right method for your use case, whether that’s HTTP long polling, WebSocket, MQTT, Server Sent Events, or something else. Just as importantly, Ably’s global infrastructure takes that particular DevOps burden off your hands. You can focus on building your application and take advantage of:

A global edge network: Wherever your app’s users are in the world, Ably edge locations ensure <50 ms latencies right across our network.

Not only WebSocket: Ably selects the right protocol for your application’s needs and network conditions, choosing from options such as WebSocket, Server Sent Events, and MQTT.

Resilient delivery: Ably guarantees that messages arrive in order and on time.

99.999% uptime: Ably’s network routes around failure to ensure your application keeps on delivering.

Scale to meet demand: As demand grows, Ably scales from thousands to billions of messages.

Great developer experience whatever your tech stack: With SDKs for more than 25 languages and frameworks, integrations with common tooling, and industry leading documentation, Ably gives you the tools to become productive quickly.

Sign up for your free Ably account and start building today.
