What is Socket.IO?

Socket.IO is a JavaScript library for building realtime, event-driven web applications. It enables bidirectional communication between clients and servers using WebSockets and fallback technologies.

If you’ve ever built chat apps, live feeds, or collaborative tools, you’ve likely encountered Socket.IO. But despite its popularity, many teams still wonder: how does it actually work, and when is it the right tool for the job?

How does Socket.IO work?

Socket.IO operates on top of HTTP and WebSocket protocols to provide:

Realtime communication: Low-latency messaging between client and server.

Event-based architecture: You emit and listen for events on both ends.

Automatic reconnection: Built-in logic to recover from temporary disconnects.

Fallback mechanisms: Falls back to long polling if WebSockets aren’t available.

For this two-way channel to work, the client must load the Socket.IO client library (typically in the browser) and the server must integrate the Socket.IO server package. Data can be sent in a number of forms, but JSON is the simplest.
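As a minimal sketch of the emit/listen pattern, the handler below listens for one event and replies with a JSON-serializable object. The event names chat:message and chat:echo and the function registerChatHandlers are illustrative assumptions, not part of Socket.IO. The code relies only on the on and emit methods that Socket.IO sockets expose.

```javascript
// Registers handlers on a socket-like object (anything exposing `on` and `emit`).
// With a real server this would typically be called from io.on("connection", ...).
function registerChatHandlers(socket) {
  socket.on("chat:message", (payload) => {
    // Payloads are plain objects; Socket.IO serializes them for transport.
    const reply = {
      user: payload.user,
      text: String(payload.text).trim(),
      receivedAt: Date.now(),
    };
    // Reply to the sender only; the event name is an assumption for this sketch.
    socket.emit("chat:echo", reply);
  });
}
```

Because the function takes the socket as a parameter, the same handler can be exercised in tests with a stub object before it is wired to a live connection.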

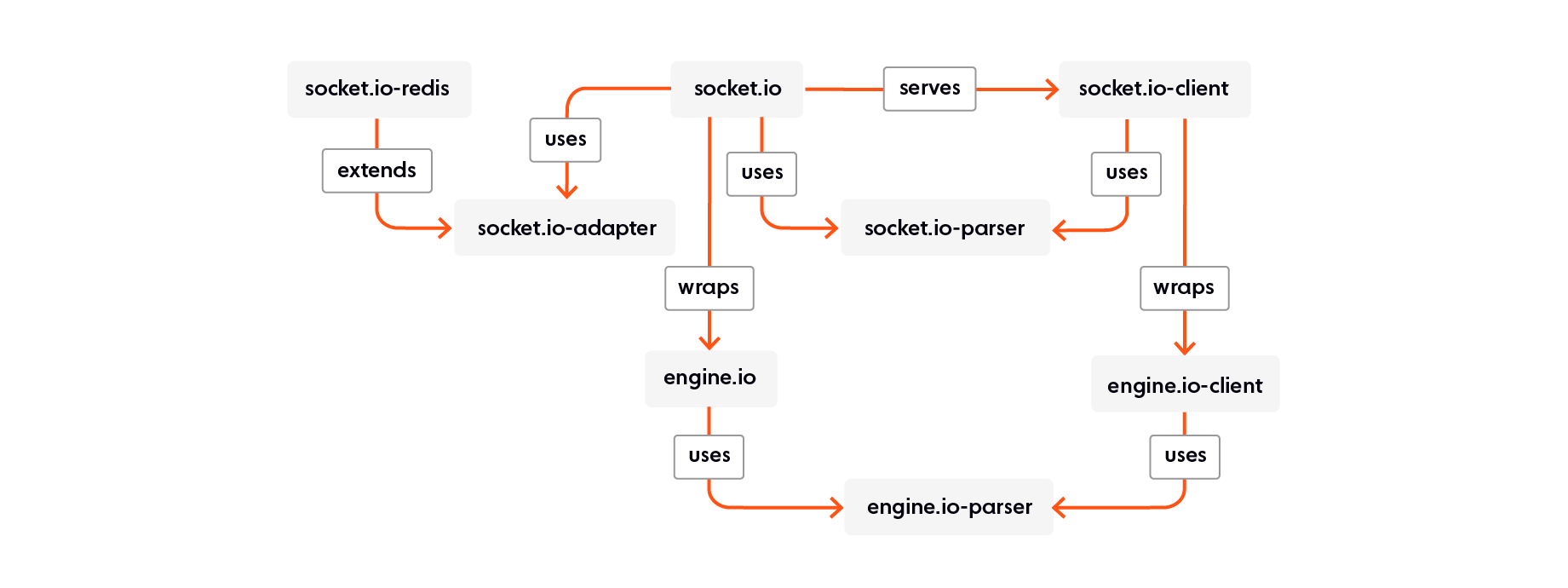

To establish the connection, and to exchange data between client and server, Socket.IO uses Engine.IO. This is a lower-level implementation used under the hood. Engine.IO is used for the server implementation and Engine.IO-client is used for the client.


Socket.IO advantages

Socket.IO is often a first choice for developers who need realtime capabilities quickly, and for good reason. Here are its primary advantages:

1. Event-driven architecture that mirrors business logic

Socket.IO’s event-based model enables clean, modular messaging patterns between clients and servers. This makes it easy to express domain-specific behaviors (e.g. orderPlaced, userTyping, matchFound) without coupling them to lower-level protocols.

Why it matters: Encourages separation of concerns and better domain modeling — critical for large, maintainable systems.

2. Built-in support for multiplexing and broadcasts

With support for namespaces and rooms, Socket.IO allows multiple logical channels over a single connection, and efficient broadcast to groups of clients.

Why it matters: Reduces connection overhead and improves routing efficiency — particularly valuable for multi-tenant apps, collaborative tools, and live-data platforms.
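To illustrate rooms, here is a rough sketch that places a socket in a per-document room and broadcasts to it. The room naming scheme (doc:<id>), the event names, and both function names are assumptions made for this example. The calls socket.join, socket.to(...).emit and io.to(...).emit are the Socket.IO server methods the sketch depends on.

```javascript
// Adds a server-side socket to a per-document room and notifies the other members.
function joinDocumentRoom(socket, documentId) {
  const room = `doc:${documentId}`; // naming scheme is an assumption
  socket.join(room);
  // socket.to(room) targets everyone in the room except the sender.
  socket.to(room).emit("doc:userJoined", { socketId: socket.id });
  return room;
}

// Broadcasts an update to every socket currently in the document's room.
// `io` is the Socket.IO server instance.
function broadcastDocumentUpdate(io, documentId, patch) {
  io.to(`doc:${documentId}`).emit("doc:update", patch);
}
```

Namespaces work at a coarser level: io.of("/admin") returns a separate logical channel with its own rooms and event handlers, multiplexed over the same underlying connection.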

3. Disconnection detection and automatic reconnection

Out of the box, Socket.IO handles common connection issues by detecting disconnects and attempting reconnections with exponential backoff. Lifecycle events like connect_error and disconnect on the socket, and reconnect on the underlying Manager (exposed as socket.io on the client in v3 and later), provide hooks for recovery logic.

Why it matters: Improves reliability for high-churn or mobile-heavy user bases, and reduces the need for custom reconnection logic in client code.
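To make the backoff behavior concrete, the function below is a simplified model of exponential backoff with jitter. It is not the library's exact algorithm. Its default values follow the client options reconnectionDelay (1000 ms), reconnectionDelayMax (5000 ms) and randomizationFactor (0.5), while the function name and its parameters are assumptions for this sketch.

```javascript
// Computes the wait before reconnection attempt `attempt` (0-based).
// `random` is injectable so the schedule can be tested deterministically.
function reconnectDelay(attempt, opts = {}, random = Math.random) {
  const { baseMs = 1000, maxMs = 5000, jitter = 0.5 } = opts;
  const exponential = baseMs * 2 ** attempt;
  // Spread the delay by up to +/- (jitter * 100)% so that many clients
  // do not all reconnect at the same instant after an outage.
  const spread = exponential * jitter * (random() * 2 - 1);
  return Math.min(Math.round(exponential + spread), maxMs);
}
```

With jitter set to 0, successive attempts wait 1000, 2000, 4000 and then 5000 ms, since the cap applies from the fourth attempt onward. On a real client the equivalent knobs are passed as connection options rather than computed by hand.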

4. Horizontal scaling with adapter support

Socket.IO can be horizontally scaled using adapters such as Redis, NATS, or MongoDB to share state (e.g. rooms and message broadcasts) between nodes in a cluster.

Why it matters: Enables scale-out architecture, allowing teams to build load-balanced and fault-tolerant systems with commodity infrastructure — as long as they’re willing to manage state synchronization and adapter reliability.
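The role an adapter plays can be pictured with a toy in-memory model: each node delivers to its own local clients and relays the broadcast through a shared channel so other nodes can do the same. This is only a conceptual sketch. The classes Bus and ServerNode are invented for illustration and do not reflect how any real adapter is implemented.

```javascript
// A toy stand-in for the external pub/sub channel (the role Redis plays for an adapter).
class Bus {
  constructor() {
    this.subscribers = [];
    this.up = true;
  }
  subscribe(fn) {
    this.subscribers.push(fn);
  }
  publish(message) {
    if (!this.up) return false; // an outage drops cross-node traffic
    this.subscribers.forEach((fn) => fn(message));
    return true;
  }
}

// A toy server node that tracks which of its local clients are in which room.
class ServerNode {
  constructor(name, bus) {
    this.name = name;
    this.bus = bus;
    this.rooms = new Map(); // room name -> array of local client inboxes
    bus.subscribe((msg) => {
      if (msg.from !== this.name) this.deliverLocal(msg.room, msg.data);
    });
  }
  join(room, inbox) {
    if (!this.rooms.has(room)) this.rooms.set(room, []);
    this.rooms.get(room).push(inbox);
  }
  deliverLocal(room, data) {
    (this.rooms.get(room) || []).forEach((inbox) => inbox.push(data));
  }
  broadcast(room, data) {
    this.deliverLocal(room, data); // local clients are served directly
    return this.bus.publish({ from: this.name, room, data }); // remote ones via the bus
  }
}
```

Setting bus.up to false in this model shows the failure mode: clients on the broadcasting node still receive the message, while clients attached to other nodes silently miss it.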

5. Protocol fallback for broad device and network support

By default, the Socket.IO client first connects over HTTP long polling and then tries to upgrade to WebSocket. If the upgrade fails, the connection simply stays on long polling, which is particularly useful behind proxies, firewalls, or in legacy browsers.

Why it matters: Improves coverage across corporate networks, mobile carriers, and international regions where WebSockets are unreliable or blocked.

6. Fast time to market with JavaScript-first ecosystem

With native support for Node.js and seamless integration with the broader JavaScript ecosystem, Socket.IO allows full-stack teams to prototype and deploy features rapidly.

Why it matters: Ideal for lean teams shipping fast, or for projects where realtime is one of many features, not the whole product.

7. Mature community and ecosystem

With over a decade of usage, a large install base, and a wide range of community-driven plugins and examples, Socket.IO offers a stable foundation for realtime development.

Why it matters: Reduces the risk of platform lock-in or knowledge silos — especially for teams looking to avoid adopting niche or overly complex realtime stacks.

Limitations of Socket.IO (especially at scale)

While Socket.IO is a powerful and flexible library for adding realtime capabilities to applications, it presents several limitations — particularly as systems grow more complex, distributed, and mission-critical. Below is a breakdown of the most important trade-offs to consider for senior technical teams.

1. Memory leak risks and compression trade-offs

Socket.IO has a known history of memory leaks, especially under high-throughput conditions. One recommended mitigation is disabling perMessageDeflate, the WebSocket compression extension (already off by default since Socket.IO v3), but doing so increases bandwidth consumption for large or repetitive payloads.

Disabling long-polling can also reduce memory overhead, but this breaks fallback compatibility for users in restricted or legacy network environments.

Why it matters: You’re forced to trade memory stability against bandwidth efficiency, or against reach across restricted networks. Neither trade-off is ideal.

2. Weak message delivery guarantees

Out of the box, Socket.IO offers at-most-once delivery. While message ordering is preserved, there’s no guarantee that all messages will arrive — particularly during disconnects or poor connectivity. Implementing at-least-once semantics requires manual setup (acknowledgments, timeouts, DB persistence, offset tracking), and exactly-once delivery isn’t natively supported at all.

Why it matters: If your app demands data integrity — think trading platforms, telemetry, collaborative editing — Socket.IO's delivery guarantees require non-trivial engineering work and still fall short of ideal.
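The persistence and offset-tracking part of that manual setup might look roughly like the sketch below. The MessageLog class, the replayMissed function and the message event name are assumptions for illustration. The log is kept in memory only to keep the example short, where a real system would use a datastore.

```javascript
// Append-only log that assigns increasing offsets so clients can ask for
// whatever they missed while disconnected.
class MessageLog {
  constructor() {
    this.entries = [];
  }
  append(payload) {
    const offset = this.entries.length + 1;
    this.entries.push({ offset, payload });
    return offset;
  }
  // Returns every entry the client has not yet seen.
  since(lastSeenOffset) {
    return this.entries.filter((entry) => entry.offset > lastSeenOffset);
  }
}

// On reconnect, the client reports its last offset and the server replays the gap.
function replayMissed(socket, log, lastSeenOffset) {
  const missed = log.since(lastSeenOffset);
  missed.forEach((entry) => socket.emit("message", entry));
  return missed.length;
}
```

The client side of this contract is to store the highest offset it has processed and send it when it reconnects, for example in the connection's auth payload or in a dedicated event.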

3. Limited platform and language support

Socket.IO is tightly coupled to Node.js and its own wire protocol, meaning it cannot interoperate with standard WebSocket clients or servers. While official clients exist for Java, Swift, and C++, many community-supported implementations (e.g., for Go, Python, .NET) are outdated, limited in functionality, or unmaintained.

Why it matters: For polyglot teams, or projects needing consistent cross-language support, this creates fragility. You may face vendor lock-in, reduced features, or have to build and maintain your own client/server implementations.

4. Limited native security features

Socket.IO lacks several core security capabilities out of the box:

No end-to-end encryption support — this must be implemented manually.

No native token management — teams must build a parallel authentication service for token generation, validation, and refresh.

Why it matters: Security-sensitive use cases (e.g. fintech, healthtech) will require additional infrastructure to meet compliance or risk standards.

5. Single-region by design

Socket.IO is built for single-region deployments. Building a multi-region, globally available system using Socket.IO is technically possible, but not natively supported — and there's little public precedent for anyone achieving this in production at scale.

Challenges include:

Increased latency for geographically distant users

Downtime if your region experiences an outage

No built-in global data replication or routing

Why it matters: If sub-100ms latency, multi-region resilience, or uptime guarantees are non-negotiable, Socket.IO requires extensive custom architecture to deliver.

6. Sticky load balancing limits dynamic scaling

Socket.IO relies on sticky sessions (session affinity) when fallback transports like HTTP long-polling are in use. This ties each client to a specific server instance.

Implications:

Clients reconnect to the same (potentially overloaded) server

Dynamic auto-scaling becomes more difficult

Load cannot be easily redistributed during traffic spikes

To avoid this, some teams disable fallbacks and force WebSocket-only connections — but this reduces client compatibility.

Why it matters: Scaling Socket.IO in cloud-native environments requires careful trade-offs between reliability, performance, and reach.
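Session affinity itself is simple to picture: the load balancer derives a stable key for each client and always maps it to the same backend. The function below is a toy illustration of that idea with an invented name and a basic string hash. Production load balancers provide this through configuration (for example NGINX's ip_hash directive) rather than application code.

```javascript
// Maps a client key (for example an IP address or session cookie value) to
// one backend, deterministically, so that the several HTTP requests making
// up a long-polling session all land on the same node.
function pickBackend(clientKey, backends) {
  let hash = 0;
  for (const ch of clientKey) {
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0; // keep within unsigned 32 bits
  }
  return backends[hash % backends.length];
}
```

The sketch also hints at the scaling problem: with this naive modulo scheme, changing the number of backends remaps many existing clients, and a busy key cannot be moved without breaking affinity.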

7. External adapter dependencies introduce fragility

To scale horizontally, Socket.IO depends on external state-sync adapters like Redis. These become critical infrastructure components that can themselves become points of failure or performance bottlenecks.

Example: If your Redis server is down or under stress, room and namespace messages won’t propagate — and clients won’t receive expected updates.

Why it matters: Without multi-region failover or fault-tolerant architecture around these components, your system inherits their fragility.

When Socket.IO is (and isn't) a fit

Socket.IO is a strong choice when you need:

Speed of development

A familiar JavaScript-first environment

Control over event design and connection handling

However, if your use case involves:

High throughput across multiple regions

Strict latency or delivery guarantees

Complex multi-language server ecosystems

…then a more scalable, fault-tolerant platform may be better suited.

Best practices for using Socket.IO

If you’ve evaluated the trade-offs and decided that Socket.IO is the right fit for your application, there are several best practices that can help mitigate its limitations — particularly around scalability, reliability, and security.

These recommendations are essential for teams deploying Socket.IO in production environments where uptime, data integrity, and user experience matter.

1. Implement reliable messaging semantics

Out of the box, Socket.IO provides at-most-once delivery. To improve reliability:

Use acknowledgments (socket.emit('event', data, callback)) to confirm delivery.

Implement client-side retries with timeout logic for critical messages.

Assign unique IDs to events so you can deduplicate on receipt.

Persist events in a datastore for replay in the event of disconnection.

Track last-received offsets on the client to request missed messages on reconnect.

🔁 Aim for at-least-once semantics where loss is unacceptable. Exactly-once requires substantial additional infrastructure and isn’t natively supported.
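A rough sketch of the acknowledgment, retry and deduplication steps follows. The functions emitWithRetry and dedupe, the option names, and the shape of the acknowledgment payload are all assumptions made for this example. It uses only the acknowledgment-callback form of socket.emit mentioned above.

```javascript
// Sender side: emits `event` and resolves with the acknowledgment payload.
// If no acknowledgment arrives within `timeoutMs`, the same message (same ID)
// is sent again, up to `retries` extra attempts.
function emitWithRetry(socket, event, message, { retries = 3, timeoutMs = 2000 } = {}) {
  return new Promise((resolve, reject) => {
    let attempt = 0;
    let settled = false;
    let timer;
    const send = () => {
      attempt += 1;
      timer = setTimeout(() => {
        if (attempt > retries) {
          settled = true;
          reject(new Error(`no ack for ${message.id} after ${attempt} attempts`));
        } else {
          send();
        }
      }, timeoutMs);
      socket.emit(event, message, (response) => {
        if (settled) return; // ignore late or duplicate acknowledgments
        settled = true;
        clearTimeout(timer);
        resolve(response);
      });
    };
    send();
  });
}

// Receiver side: wraps a handler so a retried message with an already-seen ID
// is acknowledged again but not processed twice. The Set grows without bound
// here; a real system would expire old IDs.
function dedupe(handler, seen = new Set()) {
  return (message, ack) => {
    if (!seen.has(message.id)) {
      seen.add(message.id);
      handler(message);
    }
    if (typeof ack === "function") ack({ ok: true, id: message.id });
  };
}
```

Retrying with a stable ID is what makes deduplication possible on the receiving side. Recent 4.x releases also offer a built-in socket.timeout(ms).emit(...) helper for the timeout part, which may replace the hand-rolled timer if your version supports it.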

2. Design for scale using horizontal adapters

Socket.IO can scale horizontally using Redis, NATS, or other adapters. To make this viable:

Use Redis with persistence and high availability (e.g. Redis Sentinel or Redis Cluster).

Monitor adapter latency and connection churn — they can become bottlenecks.

Consider message volume and room churn when tuning Redis memory settings.

⚙️ Your adapter infrastructure becomes a core dependency — treat it like a production-grade service, not a plug-and-play feature.

3. Optimize connection management

Socket.IO offers automatic reconnections and fallback support. To ensure stability:

Set appropriate heartbeat intervals and ping timeouts to detect stale connections.

Log and monitor disconnect and reconnect_attempt events for visibility into connection health.

Use room-level segmentation to scope broadcasts efficiently and avoid message overload.

📶 Be especially cautious in mobile-heavy environments where connectivity is intermittent.
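The heartbeat and logging points above could be sketched as follows. The names heartbeatOptions and logConnectionHealth are assumptions, as is the log callback. The numeric values match what the v4 documentation lists as defaults for the server options pingInterval and pingTimeout, so verify them against your version before relying on them.

```javascript
// Server options controlling the heartbeat, intended to be passed to the
// Socket.IO server constructor. Values are assumed v4 defaults, in milliseconds.
const heartbeatOptions = { pingInterval: 25000, pingTimeout: 20000 };

// Attaches lifecycle logging to a client-side socket.
// `log` is any function accepting (eventName, details).
function logConnectionHealth(socket, log) {
  socket.on("connect", () => log("connect", { id: socket.id }));
  socket.on("disconnect", (reason) => log("disconnect", { reason }));
  socket.on("connect_error", (err) => log("connect_error", { message: err.message }));
  // Since v3, reconnection events are emitted by the Manager, exposed as socket.io.
  socket.io.on("reconnect_attempt", (attempt) => log("reconnect_attempt", { attempt }));
}
```

Shorter ping values detect dead connections sooner at the cost of more background traffic and more false positives on slow mobile links, so they are worth tuning against real network conditions.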

4. Balance compression vs. memory stability

Socket.IO’s perMessageDeflate setting (used to compress WebSocket messages) can cause memory leaks under heavy load. Mitigation strategies include:

Disable perMessageDeflate in high-throughput environments if memory usage becomes unstable.

Benchmark the impact of compression vs. CPU/memory trade-offs in your load profile.

Monitor heap usage and GC behavior during large broadcast events or spikes in concurrent clients.

🧠 There is no universal setting — load test different configurations before deploying.
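As a starting point for such a comparison, the sketch below pairs a server options object that turns compression off with a small helper for sampling heap usage around a piece of work. The names serverOptions, heapUsedMb and measureHeap are assumptions. Only the perMessageDeflate option and Node's process.memoryUsage() come from the platform.

```javascript
// Server options with WebSocket compression switched off, intended to be
// passed as the options argument when constructing the Socket.IO server.
const serverOptions = { perMessageDeflate: false };

// Returns current V8 heap usage in megabytes, rounded to one decimal place.
function heapUsedMb() {
  return Math.round((process.memoryUsage().heapUsed / 1024 / 1024) * 10) / 10;
}

// Samples heap usage before and after running `work`, for rough comparisons
// between configurations during a load test.
function measureHeap(work) {
  const before = heapUsedMb();
  work();
  return { before, after: heapUsedMb() };
}
```

Single samples are noisy because garbage collection can run at any point, so trends over a sustained load test are more informative than any one reading.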

5. Understand fallback transport constraints

Socket.IO will fall back to HTTP long-polling when WebSockets aren’t available — which improves reach but introduces scaling friction:

Sticky sessions are required for fallback support — ensure your load balancer is configured properly (e.g. NGINX, ELB with session affinity).

Disable fallback only if you're confident that all your clients support WebSockets and you're prepared to lose coverage in strict networks.

🔄 Fallbacks increase compatibility but complicate autoscaling and introduce reconnection risks.

6. Secure your connection lifecycle

Socket.IO does not include native authentication or encryption beyond TLS. For secure deployments:

Use middleware to verify tokens (e.g. JWT) on connection.

Handle token expiration and renewal through custom logic or external auth providers.

Consider implementing end-to-end encryption at the application layer if required by compliance or trust models.

🔐 Security must be layered on top — it is not a built-in feature of the protocol.
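A connection-time token check might be sketched like this. The factory createAuthMiddleware and the verifyToken function it receives are hypothetical. In practice verifyToken would wrap whatever JWT or session library the application uses. The sketch assumes the client sends its token in the auth option of the handshake and that socket.data is available (v4).

```javascript
// Builds a Socket.IO middleware that rejects connections lacking a valid token.
// `verifyToken` is supplied by the application: it returns the user for a
// valid token and throws otherwise.
function createAuthMiddleware(verifyToken) {
  return (socket, next) => {
    const token = socket.handshake.auth && socket.handshake.auth.token;
    if (!token) return next(new Error("missing token"));
    let user;
    try {
      user = verifyToken(token);
    } catch (err) {
      return next(new Error("invalid token"));
    }
    socket.data.user = user; // make the identity available to later handlers
    next();
  };
}
```

The middleware would be registered with io.use(createAuthMiddleware(verifyToken)). Passing an Error to next refuses the connection, and the client receives it as a connect_error event. Token expiry during a long-lived connection is not covered here and needs its own handling.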

7. Monitor and observe system behavior

Observability is essential for any realtime system. With Socket.IO:

Track event volume, message latency, connection churn, and room growth over time.

Log all connection lifecycle events (connect, disconnect, reconnect, etc.).

Use distributed tracing and metrics collection (e.g. Prometheus + Grafana) across your adapter, app servers, and clients.

📈 Socket.IO doesn’t ship with observability tools — instrument it like any other critical production service.
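As one way to start instrumenting, the sketch below keeps simple in-process counters for connections, disconnect reasons and event volume. The createMetrics function and its counter layout are assumptions for this example. It relies on the server-side socket methods on and onAny (the latter is available in v3 and later).

```javascript
// Minimal in-process counters. A real deployment would export these to a
// metrics system instead of keeping them in memory.
function createMetrics() {
  const counters = { connections: 0, disconnects: {}, events: {} };
  return {
    counters,
    // Call once for each connected server-side socket.
    track(socket) {
      counters.connections += 1;
      // onAny fires for every incoming application event on this socket.
      socket.onAny((event) => {
        counters.events[event] = (counters.events[event] || 0) + 1;
      });
      socket.on("disconnect", (reason) => {
        counters.disconnects[reason] = (counters.disconnects[reason] || 0) + 1;
      });
    },
  };
}
```

Grouping disconnects by reason is useful because it separates deliberate client disconnects from transport failures and ping timeouts, which point to very different problems.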

Final thoughts: Should you use Socket.IO?

Socket.IO remains a powerful and pragmatic choice for adding realtime functionality to web applications — especially when speed to market, JavaScript-native workflows, and developer control are key priorities. Its familiar event-driven model, automatic reconnection logic, and broad community support make it a favorite for teams building internal tools, chat systems, collaborative features, and prototypes.

But as your application scales — across users, regions, and complexity — so too do the architectural and operational demands. Memory management, delivery guarantees, multi-language support, and global availability become more than just nice-to-haves; they become business-critical.

If you choose to build with Socket.IO, go in with your eyes open: implement the best practices outlined above, plan for scale from day one, and be prepared to invest in the infrastructure that makes realtime work reliably.

That said, if you're looking for a platform that abstracts the hard parts — like global routing, message guarantees, and elastic scaling — and just works out of the box, there are modern alternatives worth exploring.
