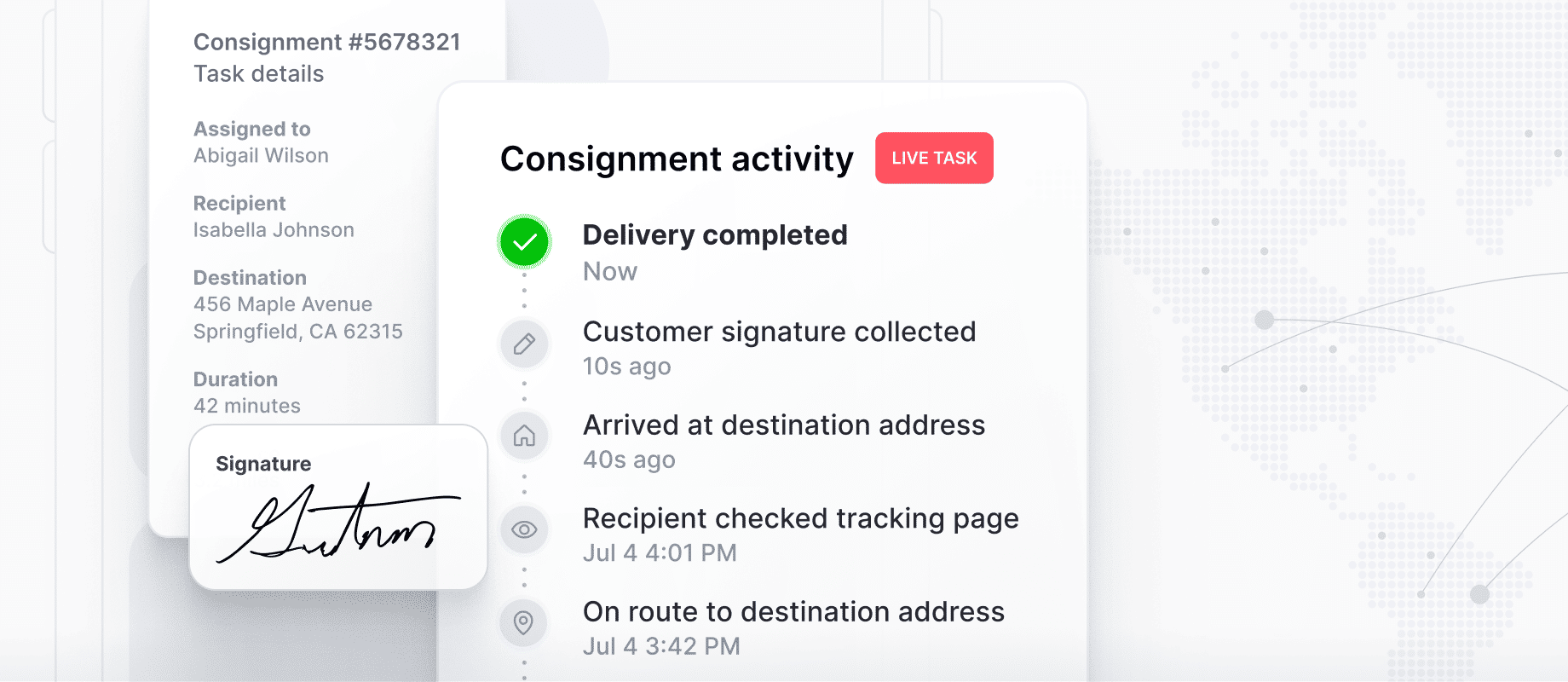

Amazon was founded in 1994, went public in 1997, and reached a market cap of $1.5 trillion in 2020. As a result of Amazon’s successes and a long tail of rapidly modernizing ecommerce businesses, consumers and businesses alike have transformed their expectations around transportation and logistics. Consumers, for example, expect up-to-the-minute updates on package delivery, and businesses require, among other features, realtime asset and vehicle monitoring.

Expectations for updates have risen along with the number of assets in transit. 37% of all U.S. purchases are now made online, and people expect updates that are both more frequent and more granular. Realtime updates have become an essential feature for any company offering a transportation or logistics app.

The problem is that while most apps can likely handle updates, many cannot handle realtime updates. Latency, data integrity, and elasticity are all issues that companies and developers are familiar with, but a realtime context demands a new approach to meeting these challenges and, by meeting them, delivering a compelling customer experience.

The challenges themselves are harder, and the standards a realtime updates feature has to meet are much higher. If you’re offering a dashboard to help businesses track last-mile fulfillment, for example, even small moments of latency can compromise the experience.

In this article, we’ll explore why realtime updates matter and explain the technology necessary to provide an instantaneous experience. We’ll then explain what effective realtime updates look like, and walk through what it takes to build an infrastructure that makes them possible.

What are realtime updates and why do they matter?

Realtime updates are, at the simplest, data broadcasts that send updates from a backend to many different users all at once. The technical challenge comes from reliably and consistently delivering these updates at scale without error or delay.
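To make the "one backend, many users" idea concrete, here is a minimal in-memory sketch of a broadcast. The `Broadcaster` class, the `truck-42` channel name, and the subscriber lists are all hypothetical names chosen for illustration; a production system would deliver over the network and is not shown here.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Update = Dict[str, str]
Subscriber = Callable[[Update], None]


class Broadcaster:
    """Toy in-memory hub: one publish fans out to every subscriber."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Subscriber]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Subscriber) -> None:
        self._subscribers[channel].append(callback)

    def publish(self, channel: str, update: Update) -> int:
        """Deliver the update to every subscriber; return how many received it."""
        callbacks = self._subscribers.get(channel, [])
        for callback in callbacks:
            callback(update)
        return len(callbacks)


hub = Broadcaster()
dispatcher_screen: List[Update] = []  # stands in for a logistics dashboard
customer_app: List[Update] = []       # stands in for a consumer tracking app
hub.subscribe("truck-42", dispatcher_screen.append)
hub.subscribe("truck-42", customer_app.append)

delivered = hub.publish("truck-42", {"status": "Out for delivery"})
```

The backend publishes once and both subscribers receive the same update. The hard part described in this article is everything this sketch leaves out: doing the same thing across networks, for very many subscribers, without delay or error.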

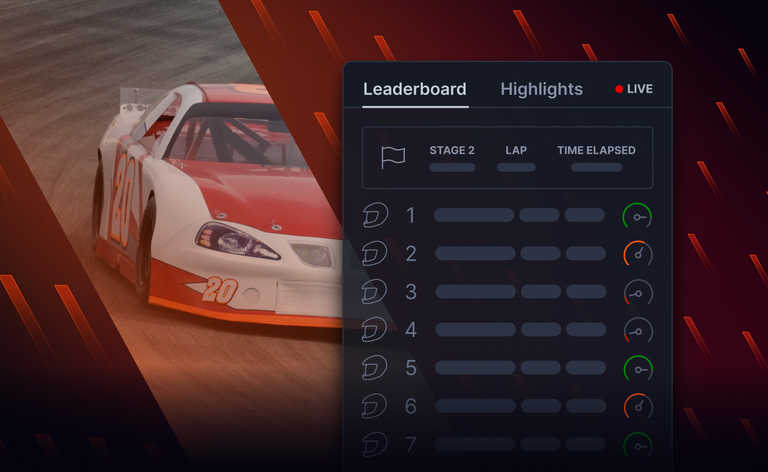

Realtime updates matter the most in situations where an update’s recency is essential to its value. And there’s no better example of companies in need of rapid updates than companies working in transportation and logistics.

Companies like UPS, FedEx, and DHL, for example, manage massive fleets of drivers and vehicles (which in turn handle massive volumes of packages and parcels). UPS alone delivers roughly 25 million packages per day, or more than 6 billion per year.

But while these companies are some of the biggest players in the transportation and logistics world, there are many more companies involved in the transportation of assets and the monitoring of vehicle health, driver behavior, and fuel consumption.

In any of these contexts, realtime updates are extremely valuable, but even slightly delayed or inaccurate updates become almost meaningless, or even misleading. Monitoring a driver in realtime makes a logistics dashboard useful, for example, but if updates lag behind the driver’s actual progress, then the usefulness is compromised.

The result is a difficult tension: People want realtime updates but their expectations of “realtime” tend to be so strict that delivering on the promise of this feature is often more difficult than even experienced engineers can predict.

What do successful realtime updates look like?

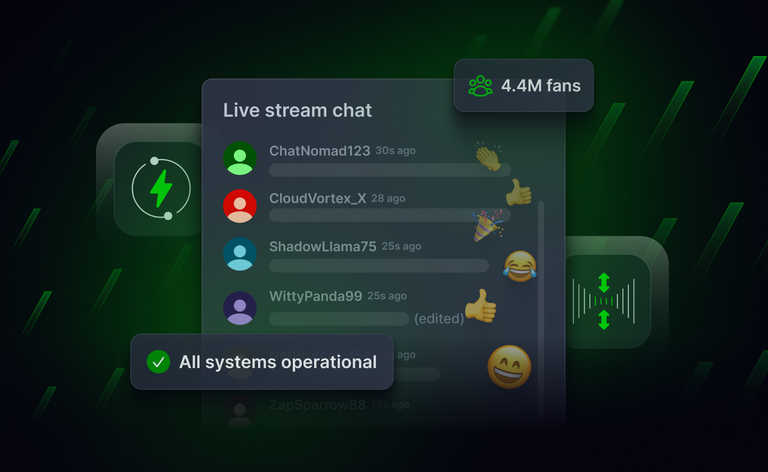

Realtime updates are delivered at speeds that feel instantaneous to users – which means not only that they’re delivered rapidly but also that they maintain that speed despite issues that would otherwise slow them down.

Reliability, scalability, and integrity are where realtime update features succeed or fail. The most basic element of realtime updates is a solved problem in the sense that even the first computers offered the ability to send information across a distance. But decades after the first email was sent from one side of a room to the other, it remains difficult for companies to meet the technical challenge of delivering realtime updates at scale.

Success requires meeting all these challenges and coordinating how you handle them. Even if the data stays the same – “The package has arrived” or “Driver needs to refuel before next destination” – the value of any one data point disappears rapidly.

If you’ve built the feature, minor delays can seem forgivable. For users, though, the gap between an update that arrives when expected and one that lags even slightly can be the difference between a functional app and an effectively broken one.

Realtime updates depend on three pillars

Sending data from one point to another isn’t difficult but doing so instantly, at scale, and without error is a challenge.

There are three primary pillars to this challenge:

- Maintaining low latency levels,

- Ensuring data integrity,

- And meeting scalability expectations.

The root problem, however, isn’t supporting any individual pillar. The root problem is meeting latency, data integrity, and scalability expectations all at the same time, and doing so reliably.

The challenge doesn’t scale linearly because problems compound and solutions in one area might create problems in another. For example, reliability will become more and more difficult as a company adds users. Every new user will want to rely on new updates arriving instantly even as the user base grows.

You might address that problem by adding servers to support greater demand but if your system is replicating data across different data centers, how do you guarantee low latency? How do you synchronize message delivery? How can you ensure updates don’t repeat?

Low latency

If a truck driver runs into unexpected bad weather and an entire eighteen-wheeler of cargo is going to be delayed, a dashboard that promises realtime updates needs to show that delay right away, which means keeping latency low.

In other contexts, latency isn’t as make-or-break and companies can take the time to assemble more servers to handle greater demand. But if a company is providing realtime updates at scale, that typical approach doesn’t work. For companies moving fleets of vehicles and assets, updates aren’t a nice-to-have.

From the perspective of a user expecting realtime updates, latencies need to be in the low hundreds of milliseconds for the experience to feel “instantaneous” or “live.” If an update takes any longer to arrive, the feature won’t feel realtime and the experience will be compromised.
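One way to check whether a feed stays inside that window is to look at the slow tail of delivery times rather than the average. The sketch below is illustrative only: the 300 ms budget is an assumed stand-in for "low hundreds of milliseconds", and the sample latencies are made up.

```python
from typing import List

# Assumption: the article only says "low hundreds of milliseconds";
# 300 ms is a placeholder budget chosen for this example.
LATENCY_BUDGET_MS = 300


def percentile(samples: List[int], pct: int) -> int:
    """Nearest-rank percentile: the smallest sample with pct% of values at or below it."""
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # integer ceiling of pct% of n
    return ordered[max(rank, 1) - 1]


def feels_realtime(samples: List[int], budget_ms: int = LATENCY_BUDGET_MS) -> bool:
    """True when 95% of updates arrive within the budget."""
    return percentile(samples, 95) <= budget_ms


# Hypothetical end-to-end delivery times in milliseconds for ten updates.
latencies_ms = [80, 95, 110, 120, 90, 105, 130, 85, 100, 900]

median_ms = percentile(latencies_ms, 50)
tail_ms = percentile(latencies_ms, 95)
within_budget = feels_realtime(latencies_ms)
```

In this made-up sample the median looks healthy, but a single slow update pushes the 95th percentile past the budget. That is why tail latency, not the average, is usually the number to watch for a feature that has to feel live.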

Maintaining data integrity

Even if a logistics company, for example, can offer a realtime updates feature that works at scale and consistently feels instant, the feature will still fail without data integrity.

The term “data integrity” captures all the problems associated with transmitting updates that are accurate to their source, including ensuring each update is only sent once and that each series of updates is in the correct order.

Like latency, data integrity is essential for transportation and logistics companies. If a user is managing a fleet of vehicles, realtime updates that reflect the fleet’s minute-by-minute movement make users feel in control. But if one update repeats the information from another update, then those same users can become confused. And if a contradictory update arrives then the whole experience feels chaotic and the user might as well close the app.
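The two guarantees named above, each update shown once and every series shown in order, can be sketched on the receiving side with sequence numbers. This is a simplified illustration that assumes the publisher stamps each update with an increasing sequence number; `OrderedDeduplicator` is a hypothetical name and this is not a description of how any particular platform implements integrity.

```python
from typing import Dict, List


class OrderedDeduplicator:
    """Releases each update exactly once and in sequence order."""

    def __init__(self, first_seq: int = 1) -> None:
        self._next_seq = first_seq          # next sequence number to show
        self._pending: Dict[int, str] = {}  # early arrivals waiting for a gap to fill

    def receive(self, seq: int, payload: str) -> List[str]:
        """Accept one update; return the updates that are now ready, in order."""
        if seq < self._next_seq or seq in self._pending:
            return []  # duplicate: already shown or already buffered
        self._pending[seq] = payload
        ready: List[str] = []
        while self._next_seq in self._pending:
            ready.append(self._pending.pop(self._next_seq))
            self._next_seq += 1
        return ready


feed = OrderedDeduplicator()
shown: List[str] = []
# Hypothetical arrivals: update 3 arrives early, updates 1 and 3 are repeated.
arrivals = [
    (1, "Left depot"),
    (3, "Arrived"),
    (1, "Left depot"),
    (2, "Refuelling"),
    (3, "Arrived"),
]
for seq, payload in arrivals:
    shown.extend(feed.receive(seq, payload))
```

The user sees "Left depot", "Refuelling", "Arrived" once each, even though the network delivered them out of order and with repeats. A real system also has to decide how long to wait for a missing update, which this sketch does not address.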

Operating at scale

For many companies, scalability is important but, for the most part, only requires adding resources to support user growth.

But in transportation and logistics, the demand for realtime updates isn’t always predictable – even if the standards for update delivery are always high. If a company opens up a new office, for example, and the office equipment supplier they use offers realtime shipping updates, that company will expect realtime updates even though the demand is sudden and significantly higher than usual.

Realtime is realtime and the expectations of users persist as circumstances change. Updates need to feel instant even as traffic spikes and user demand surges.

How transportation and logistics companies can deliver realtime updates at scale

Companies trying to provide realtime updates often have more trouble than they anticipate. They’re familiar with problems like scalability, reliability, and integrity, but in a realtime context, new strategies are necessary for success.

If a social media app crashes, users will be annoyed but most won’t be upset. Even if a banking app has unexpected downtime, users likely won’t switch apps or banks unless the problem is extended or frequent. But if an app promises realtime capabilities, similar scalability and reliability issues can be much more frustrating.

All this makes building an in-house realtime feature difficult and expensive. In our State of Serverless WebSocket Infrastructure report, for example, we found that it typically takes companies 10.2 person-months to build a realtime infrastructure project. Even then, the investment is hard to predict: 41% of respondents reported missing deadlines and 46% said that costs grew so much that the project’s success was at risk.

Businesses like Toyota, HubSpot, and BlueJeans turn to Ably because we make it easy to deliver and support realtime experiences – all without having to build the infrastructure themselves.

To learn more, read about how you can use Ably’s APIs and SDKs to broadcast realtime data with low latency and high integrity.

This is the first in a series of four blog posts about the three pillars for realtime updates in transportation and logistics apps. In the other posts, we look at why low latency, data integrity, and elasticity are so important when you're trying to deliver realtime updates to end users at scale.