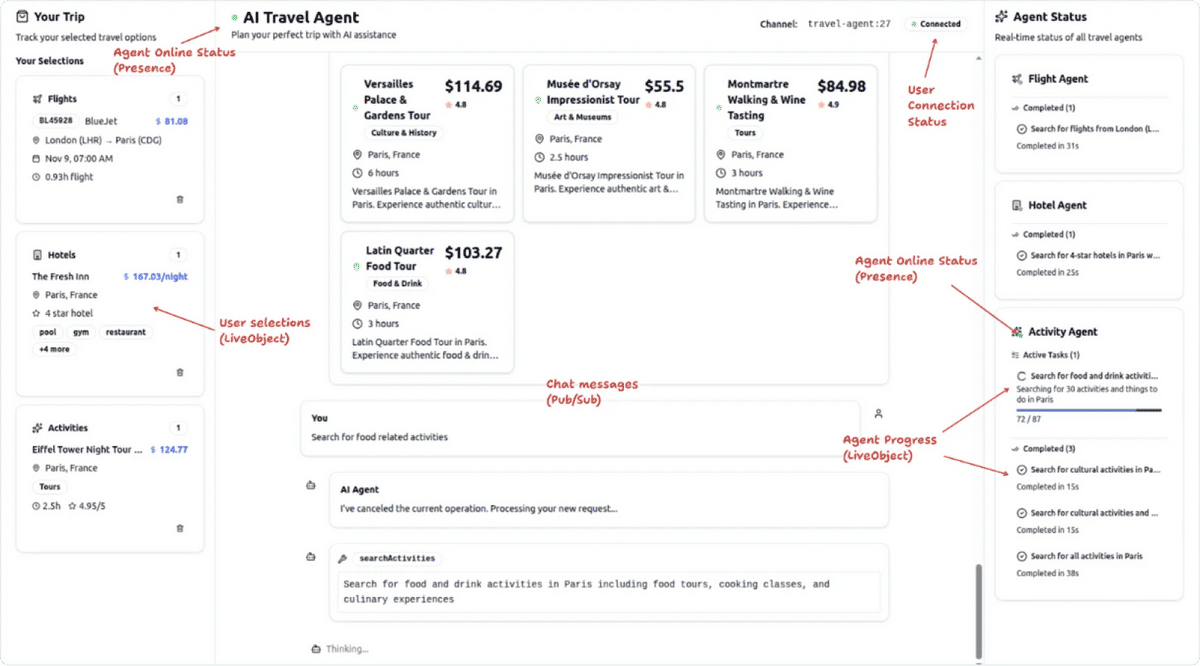

An Ably AI Transport demo

When you're building agentic AI applications with multiple agents working together, the infrastructure challenges show up fast. Agents need to coordinate, users need visibility into what's happening, and the whole system needs to stay responsive even as tasks branch out across specialised workers.

We built a multi-agent travel planning system to understand these problems better. What we learned applies well beyond holiday booking.

The coordination problem

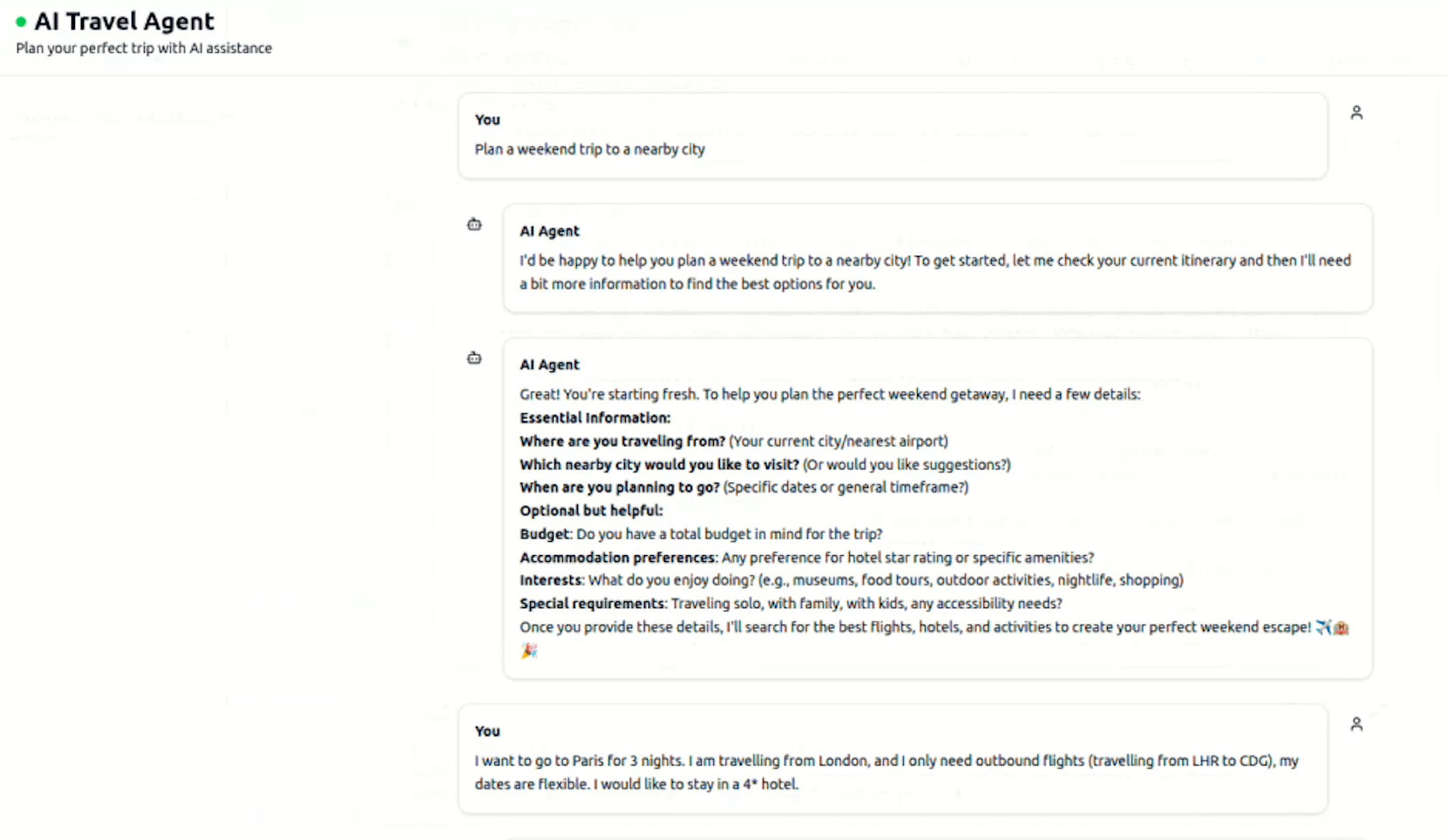

The demo uses four agents: one orchestrator and three specialists (flights, hotels, activities). When a user asks to plan a trip, the orchestrator delegates sub-tasks to the specialists. Each specialist queries data sources, evaluates options, and reports back. The orchestrator synthesises everything and presents choices to the user.

This mirrors how most teams are actually building agentic systems. You don't build one massive agent that tries to do everything. You build focused agents, give them specific tools, and coordinate between them.

The infrastructure question is: how do you keep everyone (the agents and the user) synchronized as work happens?

Why streaming alone isn't enough

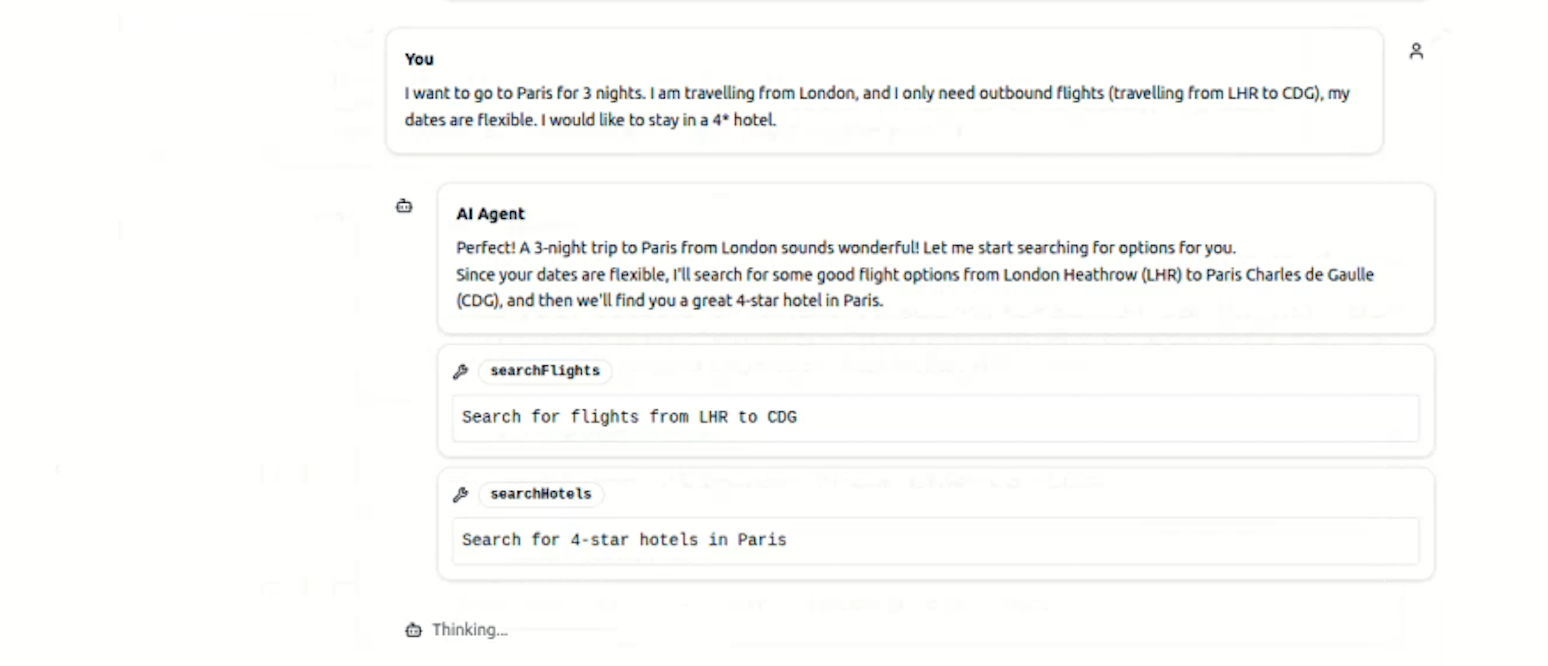

Token streaming solves part of this. The orchestrator can stream its responses back to the user so they're not waiting for complete answers. That's table stakes now for any AI interface.

But streaming tokens from the orchestrator is only part of the solution. Users want visibility into the behaviour of each specialised agent – through their own token streams, structured updates like pagination progress, or the current reasoning of an agent working through a task.

In our AI Transport demo, we also use Ably LiveObjects to publish progress updates from each specialist agent. The user sees which agent is active (tracked via presence), what it's querying, and how much data it's processing. These aren't logs or debug output. They're structured state updates that drive the UI. The agent even decides how to represent its progress to the user, taking raw database query parameters and turning them into natural language descriptions through a separate model call.

This requires infrastructure that can handle multiple publishers updating different parts of the shared state concurrently. The flight agent publishes its progress. The hotel agent publishes its progress. The orchestrator streams tokens (and it doesn't need to care about intermediate progress updates from the specialized agents). All on the same channel, all staying in sync.
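As a rough sketch of the multi-publisher pattern described above, the snippet below uses an in-memory stand-in for shared state. It is not the Ably LiveObjects API: `SharedProgress`, `publish`, `subscribe`, and the sample progress descriptions are all hypothetical names and values chosen for illustration. The point it shows is that each agent writes under its own key, so interleaved updates never overwrite one another, while a single subscriber (the UI) sees all of them.

```typescript
// In-memory stand-in for a shared state object. This is NOT the Ably
// LiveObjects API; every name here is hypothetical.
type Progress = { description: string; itemsProcessed: number };
type Listener = (agentId: string, progress: Progress) => void;

class SharedProgress {
  private entries = new Map<string, Progress>();
  private listeners: Listener[] = [];

  // Each publisher writes only under its own key, so concurrent writers
  // never overwrite each other's progress.
  publish(agentId: string, progress: Progress): void {
    this.entries.set(agentId, progress);
    this.listeners.forEach((listener) => listener(agentId, progress));
  }

  // The UI subscribes once and receives updates from every agent.
  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  get(agentId: string): Progress | undefined {
    return this.entries.get(agentId);
  }
}

const progress = new SharedProgress();
const rendered: string[] = [];
progress.subscribe((agentId, p) => rendered.push(`${agentId}: ${p.description}`));

// Interleaved updates from two specialists, as they might arrive in practice.
progress.publish("flights", { description: "Searching flights to Lisbon", itemsProcessed: 40 });
progress.publish("hotels", { description: "Comparing hotels near the centre", itemsProcessed: 12 });
progress.publish("flights", { description: "Ranking direct flights", itemsProcessed: 85 });
```

After these three calls the UI has received three updates, and each agent's slot holds only its latest progress. The orchestrator never appears in this path.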

State that reflects reality, not just conversation

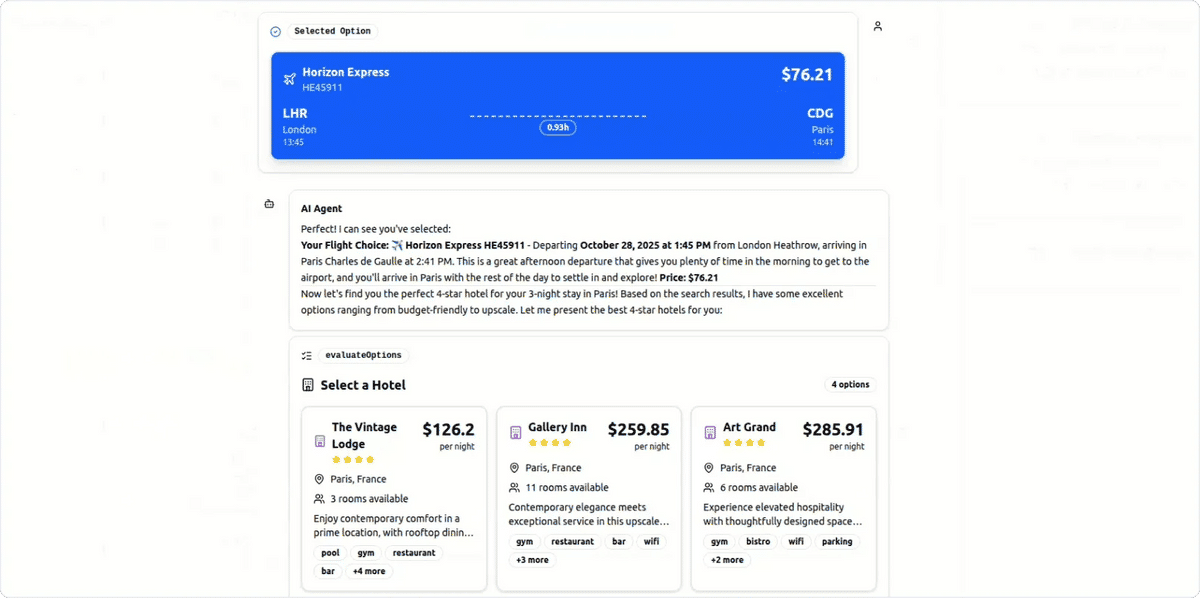

Chat history creates a limited view of what's actually happening. If a user changes their mind, deletes a selection, or modifies something outside the conversation thread, the agent needs to know about it.

We use Ably LiveObjects to maintain the user's current selections (flights, hotels, activities) and agent status. This creates a source of truth that exists independently of the conversation. The orchestrator can query this state directly through a tool call, even if nothing in the chat history explains the change.

The interesting bit: agents can subscribe to changes in this data, so they see updates live. While you could store this in a database and have agents query it via tool calls, the ability to subscribe means agents can react to user context in real time (what the user is doing in the app, data they're manipulating, configuration changes they're making).

When the user asks "what's my current itinerary?", the agent doesn't rely on conversation history. It checks the actual state. If the user deleted their flight selection, the agent sees that immediately.
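To make the difference between conversation history and actual state concrete, here is a minimal sketch. It assumes a hypothetical `Selections` store held in memory (not the demo's code and not the LiveObjects API), with an `itinerary()` method standing in for the tool call an orchestrator might make, and an `onChange` hook standing in for a subscription.

```typescript
// Hypothetical shapes for illustration; not taken from the demo's source.
type Category = "flight" | "hotel" | "activity";
type ChatMessage = { role: "user" | "agent"; text: string };
type Watcher = (category: Category, value: string | undefined) => void;

class Selections {
  private current = new Map<Category, string>();
  private watchers: Watcher[] = [];

  select(category: Category, value: string): void {
    this.current.set(category, value);
    this.notify(category, value);
  }

  remove(category: Category): void {
    this.current.delete(category);
    this.notify(category, undefined);
  }

  // Agents that subscribe hear about changes made outside the conversation.
  onChange(watcher: Watcher): void {
    this.watchers.push(watcher);
  }

  // What a "get itinerary" tool call would return: the state as it is now.
  itinerary(): Partial<Record<Category, string>> {
    const result: Partial<Record<Category, string>> = {};
    this.current.forEach((value, category) => {
      result[category] = value;
    });
    return result;
  }

  private notify(category: Category, value: string | undefined): void {
    this.watchers.forEach((watcher) => watcher(category, value));
  }
}

const selections = new Selections();
const history: ChatMessage[] = [];
const seenByAgent: string[] = [];
selections.onChange((category, value) => seenByAgent.push(`${category}=${value ?? "removed"}`));

// The conversation records a flight being picked...
selections.select("flight", "LHR-LIS 09:15");
history.push({ role: "agent", text: "Selected the 09:15 to Lisbon." });

// ...then the user deletes it in the UI, which adds nothing to the chat.
selections.remove("flight");
```

The chat history still contains a single message saying a flight was selected, but `selections.itinerary()` now returns an empty object, and the subscribed agent has already been notified of the removal.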

This separation matters more as systems get complex. The conversation is one interface to the system. The actual state (what's selected, what's in progress, what's completed) needs to exist independently. Agents, users, and other parts of your system all need reliable access to current state, not a reconstruction from message history.

Synchronising different types of state

Not all state is created equal, and your infrastructure needs to handle different patterns:

Structured, bounded state works well with LiveObjects. Progress indicators (percentage complete, items processed), agent status (online, processing, completed), user selections, and configuration settings all have predictable size limits. Clients can subscribe to changes and re-render UI efficiently. Agents can read current state without parsing through message history.

Unbounded state like full conversation history, audit trails, or complete reasoning chains still belongs in messages on a channel. You're appending to a growing log rather than updating bounded data.

Bidirectional state synchronization enables richer interactions. You can sync agent state to users (progress updates, ETAs, task lists), let users configure controls for agents (settings, preferences, constraints), and give agents visibility into user context (where they are in the app, what they're doing, what data they're viewing). Each of these can use structured data patterns for efficient synchronization.

The key is knowing which pattern fits which data, and having infrastructure that supports both.
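The two patterns can be contrasted in a few lines. This is only an in-memory illustration with hypothetical names (`statusByAgent`, `conversationLog`); in a real system the first would be backed by a state object and the second by messages on a channel.

```typescript
// Hypothetical in-memory shapes used purely to contrast the two patterns.
type AgentStatus = "online" | "processing" | "completed";

// Bounded: one slot per agent, overwritten in place. Size depends on the
// number of agents, not on how many updates were sent.
const statusByAgent = new Map<string, AgentStatus>();

// Unbounded: every entry is kept, so size grows with activity.
const conversationLog: string[] = [];

function reportStatus(agentId: string, status: AgentStatus): void {
  statusByAgent.set(agentId, status);
}

function appendMessage(text: string): void {
  conversationLog.push(text);
}

// One agent sends 100 status updates and 100 reasoning steps.
for (let i = 0; i < 100; i++) {
  reportStatus("flights", i < 99 ? "processing" : "completed");
  appendMessage(`reasoning step ${i}`);
}
```

After the loop, the status map still holds a single entry for the flight agent, while the log holds all 100 entries. A client rendering the status reads one value; a client replaying reasoning reads the whole log.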

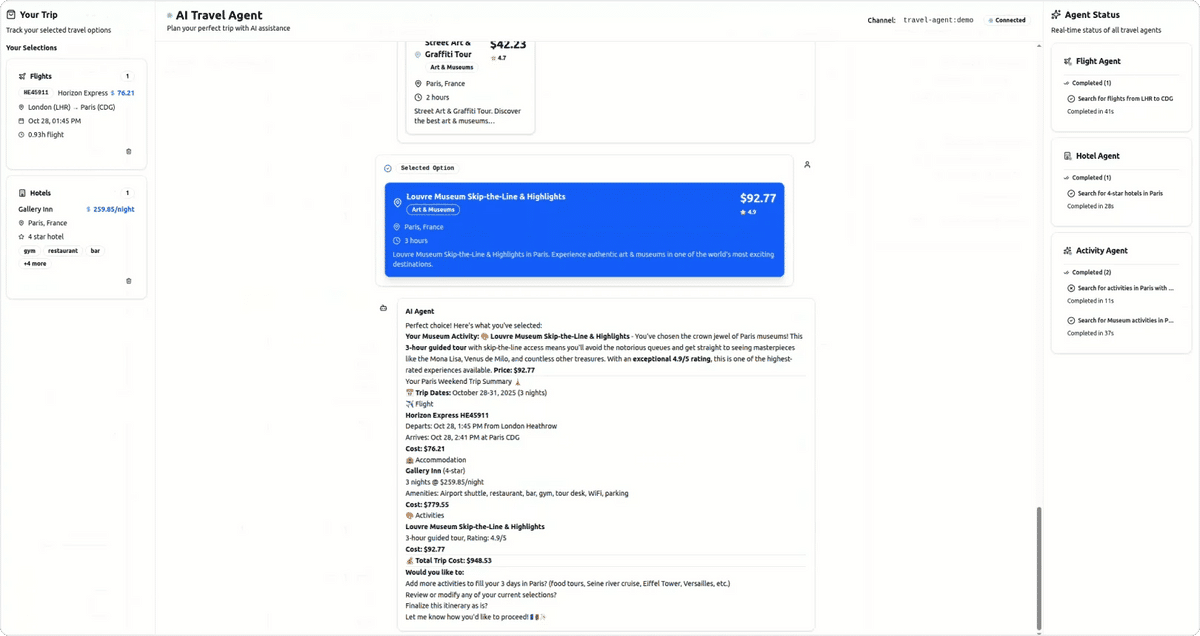

Decoupling internal coordination from user-facing updates

The agents in our demo communicate with each other over HTTP using agent-to-agent protocols. That's appropriate for internal coordination. It's synchronous, it's request-response, it follows established patterns.

The user-facing updates go over Ably AI Transport. That's where you need state synchronization and the ability for multiple publishers to update different parts of the UI concurrently.

This decoupling matters. Each agent can independently decide how to surface its progress updates and state to the user, while the user maintains a single shared view over updates from all agents.

We also let specialist agents write directly to LiveObjects, bypassing the orchestrator. When the flight agent has progress to report, it writes it. The user sees it. The orchestrator never touches that data (it only needs the final result). This avoids additional coordination and keeps the architecture simpler.

Handling interruptions

Users change their minds. They interrupt. They refine requests mid-task. Your infrastructure needs to support this without rebuilding everything from scratch.

In the demo, you can barge in and interrupt the agent while it's working. The system detects the new input, cancels the in-flight task, updates the state, and kicks off a new search. The UI shows the cancellation, the new request, and the new progress, all without breaking the conversation.

This works because state updates are events on a channel. The agents listen for new user input even while they're processing. When they see it, they can decide whether to cancel current work, adapt it, or complete it first. The infrastructure doesn't dictate this logic; it enables it.
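A minimal sketch of the cancel-and-restart path, using the standard `AbortController`. The `SearchCoordinator` class, its `events` array (standing in for state updates the UI would render), and the sample queries are hypothetical. This sketch always cancels the in-flight task; an agent could just as well choose to adapt or finish it first.

```typescript
// A minimal sketch; the names below are hypothetical, not the demo's code.
type TaskStatus = "running" | "cancelled";
type Task = { query: string; controller: AbortController; status: TaskStatus };

class SearchCoordinator {
  private current: Task | undefined;
  readonly events: string[] = []; // stands in for state updates the UI renders

  // Called for every user message, including ones that arrive mid-task.
  handleUserInput(query: string): Task {
    if (this.current && this.current.status === "running") {
      this.current.controller.abort(); // signal in-flight work to stop
      this.current.status = "cancelled";
      this.events.push(`cancelled: ${this.current.query}`);
    }
    const task: Task = { query, controller: new AbortController(), status: "running" };
    this.current = task;
    this.events.push(`started: ${query}`);
    return task;
  }
}

// Long-running work checks the signal between steps and stops early.
function processPages(task: Task, pages: number): number {
  let processed = 0;
  for (let page = 0; page < pages; page++) {
    if (task.controller.signal.aborted) break;
    processed++;
  }
  return processed;
}

const coordinator = new SearchCoordinator();
const first = coordinator.handleUserInput("flights to Lisbon in May");
const second = coordinator.handleUserInput("actually, make that Porto");
```

The second input aborts the first task's signal, so any work still checking that signal stops, while the new task runs to completion. The `events` array records the cancellation and the new request in order, which is what the UI would display.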

What presence actually tells you

Before any interaction starts, the UI shows which agents are online. This comes from Presence. Each agent enters presence when it starts up and updates it as its status changes.

Presence serves multiple purposes. Agents can see the online status of users and take action if a user goes offline (canceling tasks or queuing notifications – essential from a cost optimization perspective). In multi-user applications, users can see who else is online in the conversation. For your operations team, it's observability built into the architecture. And for users, an agent's presence answers a basic question: is this system actually working right now?
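The enter, update, and leave lifecycle can be sketched with an in-memory presence set. This is a stand-in with hypothetical names (`PresenceSet`, `Member`, `pausedFor`), not Ably's Presence API; it only illustrates how an agent-side listener might react when a user goes offline.

```typescript
// In-memory stand-in for presence; hypothetical names, not Ably's API.
type Member = { clientId: string; kind: "agent" | "user"; status: string };
type PresenceEvent = { action: "enter" | "update" | "leave"; member: Member };

class PresenceSet {
  private members = new Map<string, Member>();
  private listeners: Array<(event: PresenceEvent) => void> = [];

  subscribe(listener: (event: PresenceEvent) => void): void {
    this.listeners.push(listener);
  }

  enter(member: Member): void {
    this.members.set(member.clientId, member);
    this.emit({ action: "enter", member });
  }

  update(clientId: string, status: string): void {
    const existing = this.members.get(clientId);
    if (!existing) return; // ignore updates from clients that never entered
    const member: Member = { ...existing, status };
    this.members.set(clientId, member);
    this.emit({ action: "update", member });
  }

  leave(clientId: string): void {
    const member = this.members.get(clientId);
    if (!member) return;
    this.members.delete(clientId);
    this.emit({ action: "leave", member });
  }

  online(kind: Member["kind"]): string[] {
    const ids: string[] = [];
    this.members.forEach((m) => {
      if (m.kind === kind) ids.push(m.clientId);
    });
    return ids;
  }

  private emit(event: PresenceEvent): void {
    this.listeners.forEach((listener) => listener(event));
  }
}

const presence = new PresenceSet();
const pausedFor: string[] = [];

// An agent-side listener: stop spending on work nobody is watching.
presence.subscribe((event) => {
  if (event.action === "leave" && event.member.kind === "user") {
    pausedFor.push(event.member.clientId);
  }
});

presence.enter({ clientId: "flights-agent", kind: "agent", status: "online" });
presence.enter({ clientId: "user-1", kind: "user", status: "online" });
presence.update("flights-agent", "processing");
presence.leave("user-1");
```

Once `user-1` leaves, the listener records that work for that user can be paused, and `presence.online("agent")` still lists the flight agent for anyone rendering the "which agents are online" view.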

The enterprise patterns that emerge

This travel demo is deliberately simple, but the patterns map directly to enterprise use cases:

Research workflows where multiple agents pull from different data sources (financial databases, customer records, market data) and coordinate findings. Users need to see progress across all of them, not wait for a final answer.

Document generation where one agent structures the outline, others fill in sections, another handles compliance checks. The state (which sections are complete, which are being reviewed, what's been approved) needs to stay synchronized as different agents work in parallel.

Customer support routing where classification agents determine issue type, specialist agents handle resolution, and orchestration agents manage escalation. Status updates need to flow to support reps, customers, and dashboards in real time.

The common thread: multiple agents, concurrent work, shared state, and humans who need visibility and control. The infrastructure that makes a travel planner responsive and reliable is the same infrastructure that makes these systems work.

What this requires from infrastructure

You need a reliable transport layer that allows concurrent agents and clients to communicate in realtime. This isn't just about pub/sub – it's about robust infrastructure, high availability, and guaranteed delivery.

You need state synchronisation that works for both structured data and message logs. Having access to both patterns depending on your needs is critical: bounded state objects for UI updates and configuration, unbounded message streams for conversation history and audit trails.

You need presence so you know what's actually online and available. You need connection recovery so users don't lose context when networks flicker.

Most importantly, you need this to work at the edge – in browsers and mobile apps, not just between backend services. That's where your users are. That's where responsiveness matters. The transport layer needs to be robust enough to handle the reality of client connectivity: spotty networks, mobile handoffs, browser tabs backgrounded and resumed.

The hard part of building multi-agent systems isn't the LLMs. The models are getting better every month. The hard part is the coordination, the state management, the visibility, and the reliability as these systems get more complex.

This is why we built AI Transport. We saw teams struggling with these exact problems: cobbling together WebSocket libraries, building their own state synchronization, dealing with reconnection logic, and watching their systems break under the messiness of real client connectivity. AI Transport gives you the infrastructure layer these systems need, built on Ably's proven reliability at scale, so you can focus on your agents instead of your transport layer.

Building agentic AI experiences? You can ship them now

This demo was built with Ably AI Transport. It's achievable today. You don't need to rebuild your stack to make it happen.

Ably AI Transport provides all you need to support persistent, identity-aware, streaming AI experiences across multiple clients. If you're working on agentic products and want to get this right and improve the AI UX, we'd love to talk.