Complex user tasks often need multiple AI agents working together, not just a single assistant. That’s what agent collaboration enables. Each agent has its own specialism - planning, fetching, checking, summarising - and they work in tandem to get the job done. The experience feels intelligent and joined-up, not monolithic or linear. But making that work means more than prompt chaining or orchestration logic. It requires shared state, reliable coordination, and user-visible progress as agents branch out and converge again. This post explores what users now expect, why traditional infrastructure falls short, and how to support truly collaborative AI systems.

What’s changing?

The shift from simple question-response to collaborative AI experiences goes beyond continuity or conversation. It’s about delegation. Users are starting to expect AI systems that can take a complex request and break it down behind the scenes. That means not one big model doing everything, but a network of agents, each focused on one part of the task, coordinating to deliver a coherent outcome. We’ve seen this in tools like travel planners, research assistants, and document generators. You don’t just want answers; you want progress, structure, and coordination you can see. The AI system shouldn’t feel like just a chat thread; it should feel like a team quietly getting on with things while keeping you informed.

What users want, and why this enhances the experience

When users interact with a system powered by multiple agents, they want to feel the benefits of parallelism without the overhead of managing complexity. If one agent is fetching flight data, another handling hotel options, and a third reviewing visa requirements, the user doesn’t care about the internal plumbing. They care that their travel plan is evolving visibly and coherently. They want to see that agents are working, understand what’s happening in real time, and be able to intervene or revise things if needed.
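To make this concrete, here is a minimal sketch of the client-side view, assuming each agent publishes small status updates independently. `ProgressBoard`, `ProgressUpdate` and the status values are hypothetical names for illustration, not part of any particular SDK. The board keeps the latest update per agent, so the flight, hotel and visa agents can report in any order without replacing each other’s rows.

```typescript
// Hypothetical status values; not taken from any specific framework.
type AgentStatus = "queued" | "working" | "done" | "failed";

interface ProgressUpdate {
  agent: string; // e.g. "flights", "hotels", "visas"
  status: AgentStatus;
  detail?: string; // short human-readable note for the UI
}

// Merges independent per-agent updates into one view the UI can render.
// Updates may arrive in any order, from any agent, at any time.
class ProgressBoard {
  private latest = new Map<string, ProgressUpdate>();

  apply(update: ProgressUpdate): void {
    // Latest update per agent wins; one agent never replaces another's row.
    this.latest.set(update.agent, update);
  }

  // The task is complete only when every agent seen so far reports "done".
  isComplete(): boolean {
    const all = Array.from(this.latest.values());
    return all.length > 0 && all.every((u) => u.status === "done");
  }

  // Agents whose failure the UI should surface to the user.
  failed(): string[] {
    return Array.from(this.latest.values())
      .filter((u) => u.status === "failed")
      .map((u) => u.agent);
  }

  // One line per agent, in the order agents first reported.
  render(): string[] {
    return Array.from(this.latest.values()).map(
      (u) => `${u.agent}: ${u.status}${u.detail ? ` (${u.detail})` : ""}`
    );
  }
}
```

Keying updates by agent name is the design choice that matters here: the UI never waits for a single final answer, and a failed agent shows up as soon as it reports rather than when the whole task ends.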

Crucially, users expect the state of their task to reflect reality, not just the conversation. If they change a hotel selection manually, the system should adapt. If an agent crashes or stalls, the UI should show it. The value isn’t just in faster results; it’s in reliability, transparency, and the sense that multiple agents are genuinely collaborating, with each other and with the user, toward a shared goal.

To deliver this, agent systems need to stay in sync. State needs to be shared across agents and user sessions. Progress needs to be surfaced incrementally, not hidden behind a final answer. And context must be preserved so agents don’t overwrite or duplicate each other’s work. That’s what turns a bunch of isolated model calls into a coordinated assistant.
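Avoiding duplicated work usually needs some notion of ownership. The sketch below assumes a simple lease-based claim: an agent claims a sub-task for a fixed period, other agents back off while the claim is live, and an expired claim can be taken over if its owner stalls. `TaskClaims` and its methods are hypothetical; in practice the claims would sit in shared session state rather than in one process’s memory. Time is passed in explicitly to keep the logic easy to test.

```typescript
// A claim records which agent owns a sub-task and until when.
interface Claim {
  owner: string;
  expiresAt: number; // ms timestamp; after this the claim can be taken over
}

// Hypothetical in-memory claim registry. In a real system the claims would
// live in shared session state so every agent sees the same view.
class TaskClaims {
  private claims = new Map<string, Claim>();
  private readonly leaseMs: number;

  constructor(leaseMs: number) {
    this.leaseMs = leaseMs;
  }

  // Returns true if `agent` now owns `taskId`. Fails while another agent holds
  // an unexpired claim, so two agents do not start the same work.
  // Re-claiming by the current owner renews the lease.
  tryClaim(taskId: string, agent: string, now: number): boolean {
    const existing = this.claims.get(taskId);
    if (existing && existing.owner !== agent && existing.expiresAt > now) {
      return false;
    }
    this.claims.set(taskId, { owner: agent, expiresAt: now + this.leaseMs });
    return true;
  }

  // Only the current owner may release a claim.
  release(taskId: string, agent: string): boolean {
    const existing = this.claims.get(taskId);
    if (!existing || existing.owner !== agent) return false;
    this.claims.delete(taskId);
    return true;
  }

  // The live owner of a task, or undefined if unclaimed or expired.
  ownerOf(taskId: string, now: number): string | undefined {
    const claim = this.claims.get(taskId);
    return claim && claim.expiresAt > now ? claim.owner : undefined;
  }
}
```

The lease is what keeps a crashed agent from blocking a sub-task forever: once its claim expires, another agent can pick the work up.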

Why this is proving challenging

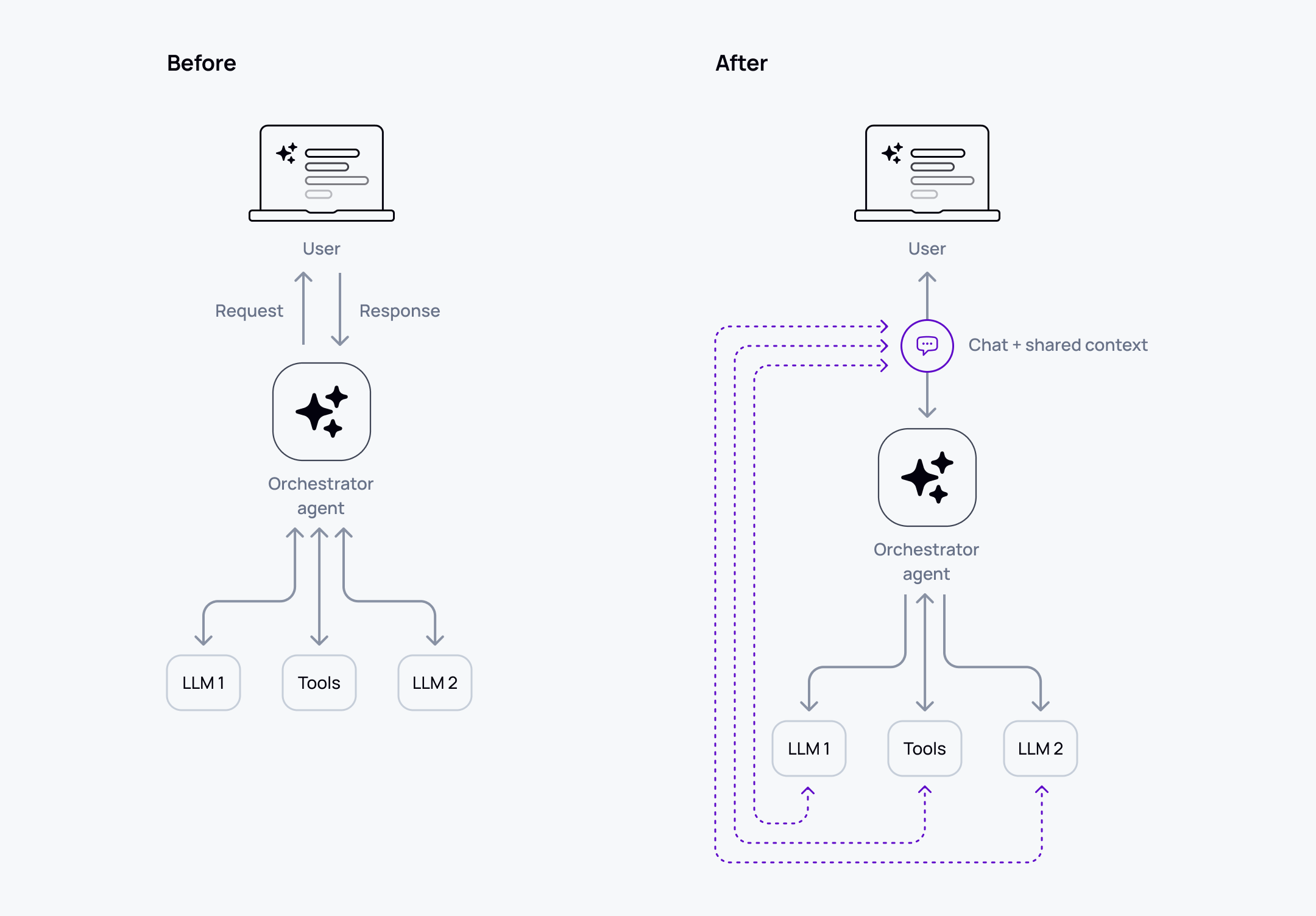

Multi-agent systems can work today, but the default pattern most tools push you toward is an orchestration-first user experience. Even when multiple agents are running behind the scenes, their activity is typically funnelled through a single orchestrator that becomes the only “voice” the user can see. That hides useful progress, creates a single bottleneck for updates, and limits how fluid the experience can feel.

That’s because traditional LLM interfaces assume a single stream of input and a single stream of output. Orchestration frameworks may invoke multiple agents in parallel, but the UI still tends to expose a linear, synchronous workflow: the orchestrator collects results, then reports back. If the user changes direction mid-process, or if an agent needs to react immediately to something in shared state, you’re often forced back into “wait for the orchestrator” loops.

The underlying infrastructure assumptions reinforce this. HTTP request/response cycles work well when one component is responsible for coordinating everything, but they make it awkward for multiple agents to maintain an ongoing, direct connection to the user and to shared context. Token streaming helps, but it usually represents one agent’s output to one user - not concurrent updates from a group of agents reacting in real time to a changing state.

Ultimately, the challenge isn’t that orchestration fails. It’s that it constrains app developers. Most systems don’t give you fine-grained control over which agent communicates what, when, and how, or an easy way to reflect multi-agent activity directly in the user experience. To build confidence and responsiveness, clients need to know which agents are active, what they’re doing, and how that activity relates to the shared, realtime session context - without everything having to be mediated by a heavyweight orchestrator.

Why you need a drop-in AI transport layer

To make multi-agent collaboration work in practice, you need infrastructure that handles concurrency, coordination, and visibility - not just messaging.

The transport layer must support persistent, multiplexed communication where multiple agents can publish updates independently while still participating in the same user session. That gives app developers fine-grained control over the user experience: which agents speak to the user, when they speak, and how progress is presented. Orchestrators can still exist, but they don’t have to mediate every user-facing update.
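As an illustration of the multiplexing idea, the sketch below models one session channel that several agents publish to directly, with each message tagged by the publishing agent. `SessionChannel` and its methods are a hypothetical in-memory stand-in, not Ably’s channel API; a real transport would add persistence, delivery across network connections, and reconnection handling.

```typescript
// One message on the shared session channel. Each message carries the name
// of the agent that published it, so clients can route or filter it.
interface SessionMessage {
  agent: string;
  kind: "progress" | "result" | "question";
  text: string;
}

type SessionListener = (msg: SessionMessage) => void;
type MessageFilter = (msg: SessionMessage) => boolean;

// Hypothetical in-memory stand-in for a realtime channel: many agents publish,
// many clients subscribe, and everyone sees one ordered stream per session.
class SessionChannel {
  private listeners: Array<{ fn: SessionListener; filter?: MessageFilter }> = [];
  private history: SessionMessage[] = [];

  publish(msg: SessionMessage): void {
    this.history.push(msg);
    for (const l of this.listeners) {
      if (!l.filter || l.filter(msg)) l.fn(msg);
    }
  }

  // Subscribe to every message, or only to those matching `filter`.
  // Returns a function that removes the subscription.
  subscribe(fn: SessionListener, filter?: MessageFilter): () => void {
    const entry = { fn, filter };
    this.listeners.push(entry);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== entry);
    };
  }

  // A client that joins late (e.g. on a second device) can catch up.
  replay(): SessionMessage[] {
    return this.history.slice();
  }
}
```

Because every message carries its agent name, the app decides the presentation: a UI might give the hotel agent its own panel by subscribing with a filter, while an activity log subscribes to everything. No orchestrator has to relay each update.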

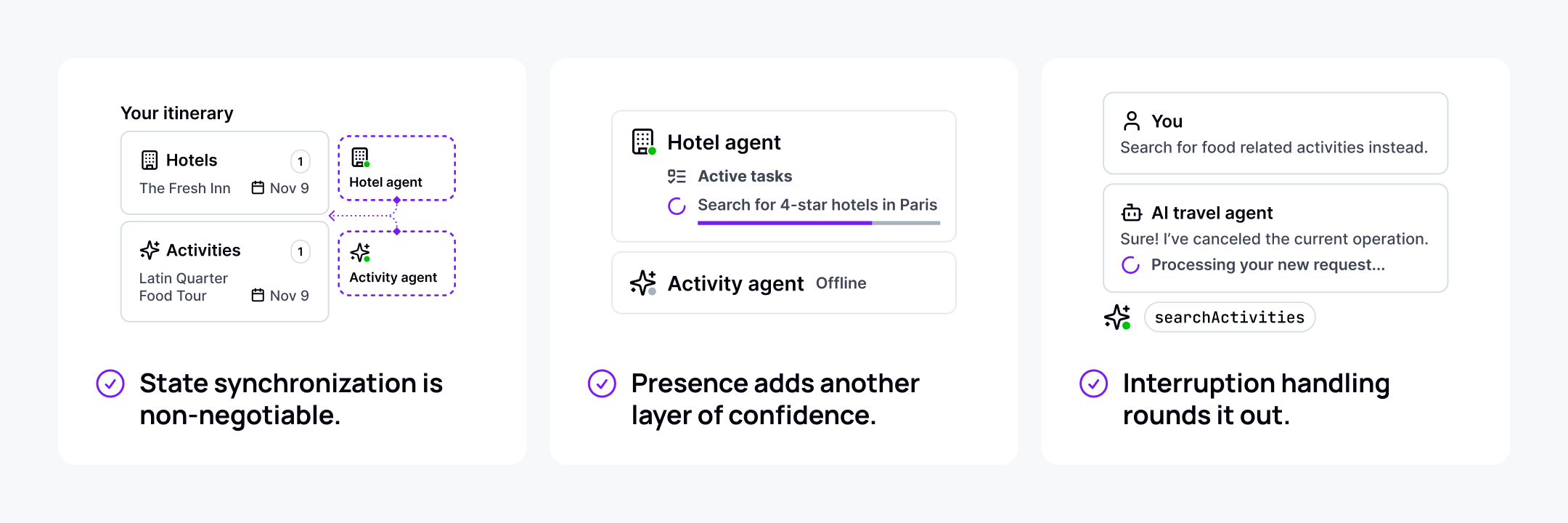

State synchronisation is non-negotiable

Structured data, like a list of selected hotels or the current trip itinerary, should live in a realtime session store that agents and UIs can both read from and write to. This creates a single source of truth, even when updates happen asynchronously, across devices, or outside the chat interface.
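One common way to keep a single source of truth when agents and the UI write concurrently is optimistic concurrency: every write states the version it was based on, and stale writes are rejected rather than silently applied. The sketch below assumes that approach for a single value, such as the selected hotels list. `SharedState` is hypothetical and is not presented as how Ably or any other session store works internally.

```typescript
// A value in the session store plus the version it is at. The version
// increments on every accepted write, whether an agent or the UI made it.
interface Versioned<T> {
  value: T;
  version: number;
  updatedBy: string;
}

interface WriteResult<T> {
  ok: boolean; // false means the write was based on a stale version
  current: Versioned<T>; // latest state, so the caller can merge and retry
}

// Hypothetical single-key store using optimistic concurrency (compare-and-set).
class SharedState<T> {
  private state: Versioned<T>;
  private watchers: Array<(s: Versioned<T>) => void> = [];

  constructor(initial: T) {
    this.state = { value: initial, version: 0, updatedBy: "init" };
  }

  read(): Versioned<T> {
    return this.state;
  }

  // Applies `next` only if the writer saw the latest version, so an agent
  // working from an old copy cannot silently overwrite a newer user edit.
  write(next: T, expectedVersion: number, writer: string): WriteResult<T> {
    if (expectedVersion !== this.state.version) {
      return { ok: false, current: this.state };
    }
    this.state = { value: next, version: this.state.version + 1, updatedBy: writer };
    for (const w of this.watchers) w(this.state);
    return { ok: true, current: this.state };
  }

  // Every UI and agent watching this key is notified of each accepted write.
  watch(fn: (s: Versioned<T>) => void): void {
    this.watchers.push(fn);
  }
}
```

If the user edits the hotel list in the UI while an agent is still working from an older copy, the agent’s write comes back with `ok: false` and the current value, so it can merge and retry instead of clobbering the user’s change.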

Presence adds another layer of confidence

When users see which agents are online and working, it sets expectations and builds trust. If an agent goes offline, the system should detect it, not leave the user guessing. This becomes even more important as these systems scale up in production environments where reliability is critical.
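Detecting that an agent has gone offline usually comes down to heartbeats and a timeout. This sketch assumes agents send periodic heartbeats and treats any agent silent for longer than `timeoutMs` as stale. `AgentPresence` is a hypothetical helper, not Ably’s presence API, and the clock is passed in explicitly so the logic is easy to test.

```typescript
// Hypothetical presence tracker: agents send heartbeats, and any agent
// silent for longer than `timeoutMs` is reported as stale.
class AgentPresence {
  private lastSeen = new Map<string, number>();
  private readonly timeoutMs: number;

  constructor(timeoutMs: number) {
    this.timeoutMs = timeoutMs;
  }

  // Called whenever an agent signals it is alive.
  heartbeat(agent: string, now: number): void {
    this.lastSeen.set(agent, now);
  }

  // Explicit leave, e.g. when an agent finishes and shuts down cleanly.
  leave(agent: string): void {
    this.lastSeen.delete(agent);
  }

  // Agents that have sent a heartbeat recently enough.
  online(now: number): string[] {
    return Array.from(this.lastSeen.entries())
      .filter(([, t]) => now - t <= this.timeoutMs)
      .map(([agent]) => agent);
  }

  // Agents that stopped heartbeating without leaving: likely crashed or stalled.
  stale(now: number): string[] {
    return Array.from(this.lastSeen.entries())
      .filter(([, t]) => now - t > this.timeoutMs)
      .map(([agent]) => agent);
  }
}
```

Separating `stale` from an explicit `leave` lets the UI distinguish an agent that finished cleanly from one that crashed or stalled, which is exactly the case where the user should not be left guessing.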

Interruption handling rounds it out

Users will change their minds mid-task. Your system needs to respond without the orchestrator agent tearing down and restarting everything. That means listening for user input while processing, cancelling or rerouting tasks, and updating the shared state cleanly so individual agents can pick up where they left off or switch strategies on the fly.
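A minimal sketch of cooperative cancellation, assuming each sub-task runs with its own `AbortController` so a user interruption cancels only the affected work. `InterruptibleTasks` and `runSteps` are hypothetical names; the worker checks the signal between steps and reports how far it got, so an agent can resume or switch strategy rather than restart from scratch.

```typescript
// Hypothetical task router: each running sub-task gets its own AbortController,
// so an interruption cancels only the work it affects.
class InterruptibleTasks {
  private running = new Map<string, AbortController>();

  // Start (or restart) a task. Any previous run of the same task is cancelled first.
  start(taskId: string): AbortSignal {
    this.cancel(taskId, "superseded");
    const controller = new AbortController();
    this.running.set(taskId, controller);
    return controller.signal;
  }

  // Cancel one task, e.g. because the user changed that part of the request.
  cancel(taskId: string, reason: string): boolean {
    const controller = this.running.get(taskId);
    if (!controller) return false;
    controller.abort(reason);
    this.running.delete(taskId);
    return true;
  }

  isRunning(taskId: string): boolean {
    return this.running.has(taskId);
  }
}

// Cooperative worker: runs steps in order, checking the signal before each one,
// and reports how many completed so the agent knows where it stopped.
function runSteps(
  steps: Array<() => void>,
  signal: AbortSignal
): { completed: number; cancelled: boolean } {
  for (let i = 0; i < steps.length; i++) {
    if (signal.aborted) return { completed: i, cancelled: true };
    steps[i]();
  }
  return { completed: steps.length, cancelled: false };
}
```

Restarting a task with the same id cancels its previous run first, which covers a user changing their mind twice in quick succession, while unrelated tasks such as the flight search keep running.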

How the experience maps to the transport layer

Making it work today

Multi-agent collaboration is already happening in planning tools, research systems, and internal automation workflows. The models are not the limiting factor. The hard part is the infrastructure that keeps agents in sync, shares state reliably, and exposes progress to users in real time.

Ably AI Transport gives you the infrastructure needed to support this pattern. Realtime channels, shared state objects, presence, and resilient connections provide the foundations for agents that coordinate reliably and surface their work as it happens. No rebuilds, no custom multiplexing, no home-grown state machinery.

Sign up for a free developer account and try it out.