Refresh the page, lose signal, switch tabs - the AI conversation just keeps going. That’s what reliable, resumable token streaming makes possible. No restarts, no lost context, just the same response picking up right where it left off. It keeps users in flow and builds trust, making conversations feel seamless. Even better, it unlocks things like switching devices mid-stream without missing a beat. This post explains why users expect it, why it’s hard to build, and what your infrastructure needs to make it work.

The new expectation for seamless AI interactions

As AI becomes woven into everyday apps, users have rising expectations for seamless interactions. An emerging baseline is that an AI conversation or generated answer should not be fragile. Reliable token streaming that survives crashes or reloads is quickly becoming expected behaviour. People now assume an ongoing AI response will continue uninterrupted despite a temporary failure. If your browser tab crashes or you hit refresh accidentally, you’d expect the AI to keep going in the background and resume when you return - just like a video resumes where you left off.

This expectation isn’t theoretical; it’s showing up as a real demand from users and developers. Many have noticed the annoyance of a chatty AI that goes silent after a network blip and forces you to retry the prompt from scratch. Forward-looking teams are already experimenting with ways to make AI streams more resilient. In short, the bar for a smooth AI experience is rising: reliable, resumable streaming is moving from a nice-to-have to a must-have.

What users want, and why this enhances the experience

Users want AI conversations that continue uninterrupted despite failures or reloads. They don’t want to babysit the AI or repeat themselves due to technical faults. Consider this scenario: You’re halfway through a detailed AI response when the page reloads or the network drops. When things come back, you expect the conversation to pick up right where it left off. The same question, same response, no rewind. That’s the baseline now: the AI should just handle it. In practice, this means users now expect a few key behaviours:

- Streamed responses resume instantly after a reload. The AI’s answer picks up exactly where it stopped when you refreshed.

- Incomplete prompts persist across failures. If you submit a question and the app crashes or you go offline, the AI still finishes the answer. You don’t lose your query or its partial response.

- Reconnection doesn’t trigger full re-generation. Coming back online or reopening the app doesn’t make the AI start the answer over from scratch; it continues as if nothing happened.

Under the hood, delivering these behaviours requires that the AI’s generation process not be tied to a single fragile connection. Even if the user disconnects, the system must carry on generating tokens so it can seamlessly resume later. In other words, the conversation’s state should survive independently of the user’s browser tab or device session. This greatly enhances the user experience by ensuring the AI is always “in sync” with the user, no matter the hiccups along the way.
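To make the idea concrete, here is a minimal sketch of generation that is decoupled from any client connection. It assumes a simulated token source in place of a real model call, and `GenerationJob` and `demo` are hypothetical names used only for illustration.

```python
import asyncio


class GenerationJob:
    """Runs a (simulated) generation independently of any client connection."""

    def __init__(self, tokens):
        self._source = list(tokens)  # stand-in for a real LLM token stream
        self.produced = []           # every token generated so far
        self.done = False

    async def run(self):
        for token in self._source:
            await asyncio.sleep(0)   # yield control, as a real model call would
            self.produced.append(token)
        self.done = True


async def demo():
    job = GenerationJob(["Resumable", " streams", " keep", " going", "."])
    task = asyncio.create_task(job.run())  # owned by the server, not by a client

    # A "client" watches the first two tokens arrive, then disconnects.
    while len(job.produced) < 2:
        await asyncio.sleep(0)
    seen = len(job.produced)

    # Generation carries on with no client attached.
    await task
    return seen, "".join(job.produced)


seen_before_disconnect, full_text = asyncio.run(demo())
```

Because the job owns its own output, a returning client only needs to ask for whatever it has not yet seen.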

Why this is proving challenging

Building seamless, resumable streaming sounds simple on the surface: keep the tokens flowing, even when something goes wrong. But under the hood, it’s anything but. Most web stacks were never designed for this kind of continuity, and the gaps show quickly when you try to implement it. There are a few core failure points that make reliable streaming difficult to get right:

Stateless protocols like HTTP drop the stream on failure

Most web interactions (think HTTP requests, REST APIs) are stateless and short-lived. If you’re streaming an AI response over a standard HTTP connection and it drops, that request is gone. HTTP range requests can resume the download of a static file, but there is no native way to resume a half-finished response that is still being generated. HTTP wasn’t designed for long-lived, continuous streams, which makes plain request-response HTTP a poor fit for delivering multi-turn, token-based output with realtime guarantees.

Streaming logic often lives only in the browser

Many apps place the responsibility for handling AI output in the client - usually the browser tab. If that tab crashes or is closed, any awareness of the current conversation state disappears. Unless the server is explicitly maintaining progress (e.g. buffering the partial response), the result is a hard reset. Even a minor network blip or page reload can cause the entire generation to be lost, forcing the user to re-issue the prompt and wait again. From the developer’s side, this means wasted tokens and potentially double the LLM costs for the same request.

Server infrastructure rarely stores stream state by default

Even when WebSockets or similar protocols are used, many backends treat streaming as fire-and-forget. Once tokens are emitted, they’re not stored. If a client reconnects and asks “what did I miss?”, the server has no answer unless a stateful resume mechanism is in place. That means tracking client progress, buffering streamed output, handling retries, and ensuring correct ordering, none of which is trivial to bolt on after the fact. Building this kind of infrastructure requires careful design, and is one reason robust streaming support remains rare despite user demand.

These three limitations add up to a brittle default behaviour: a typical implementation ends up tying AI generation directly to a single client connection. If that connection drops due to a refresh, crash, or network issue, the stream is lost. Undelivered tokens vanish, and the user is left with an incomplete response and no way to recover. They have to restart the prompt and wait all over again. The result is a jarring, inefficient experience that breaks user trust.

Why you need a drop-in AI transport layer

Making streaming resilient isn’t something you can bolt on later. It needs to be handled at the transport layer, by infrastructure that treats continuity as the default. This isn’t one feature; it’s a set of behaviours working together to keep streams intact even when clients disconnect, reload, or crash. At a minimum, the transport should handle:

Persistent streaming connections

Instead of one-request-per-response, the client should use a persistent connection (e.g. WebSocket) that stays open for streaming. A persistent channel enables realtime, bi-directional delivery and helps guarantee in-order arrival of tokens.

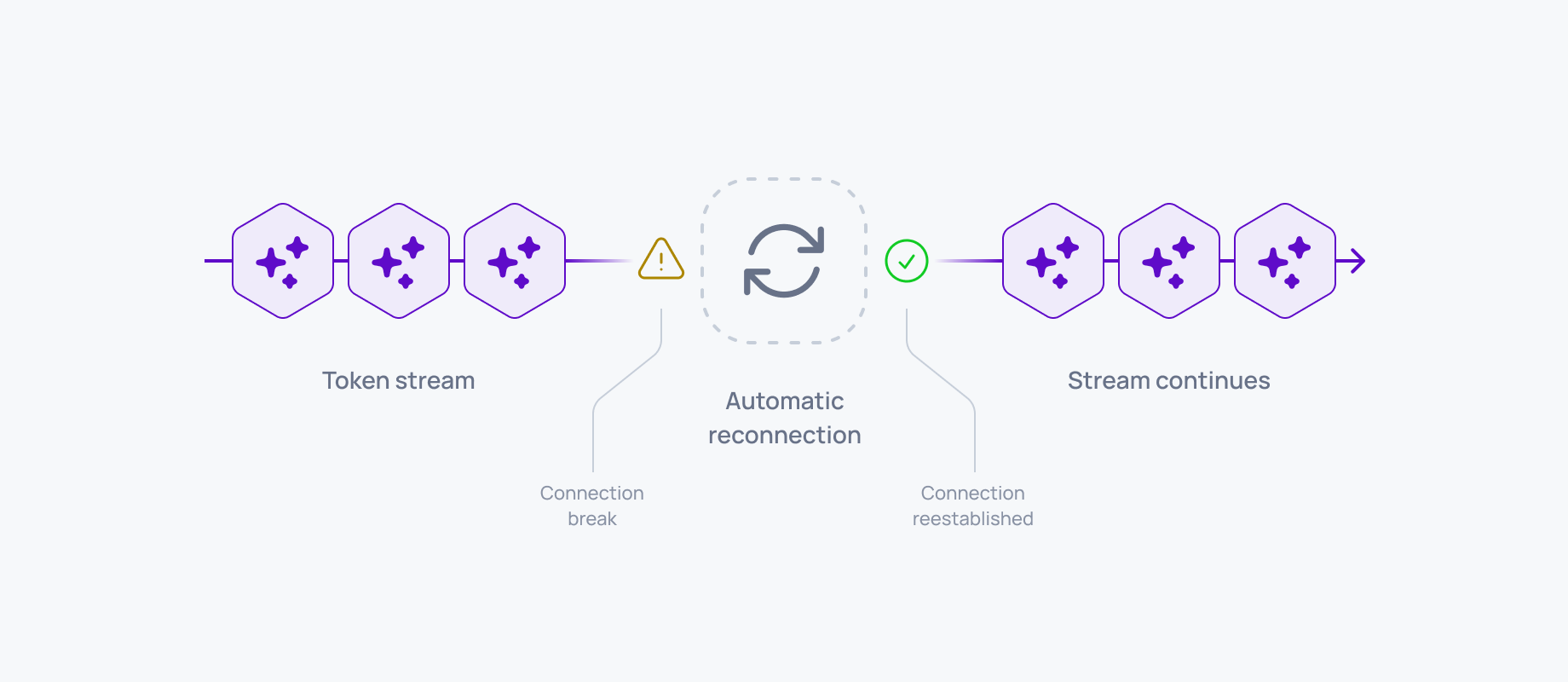

Unlike stateless HTTP calls, a WebSocket connection can continuously push data to the client without needing a new HTTP request for each chunk. With fewer connections to set up, there are fewer points at which the stream can be interrupted. And if the connection does break, the protocol can attempt automatic reconnection quickly, avoiding the overhead of starting a whole new session from scratch. In short, a long-lived connection is the foundation for uninterrupted token streams.
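Automatic reconnection is often implemented as a retry loop with growing delays. The sketch below is one common way to do that, not a description of any particular platform; `backoff_delays`, `connect_with_retry`, and `flaky_connect` are hypothetical helpers, and the delay values are illustrative assumptions.

```python
import random


def backoff_delays(base=0.5, factor=2.0, cap=30.0, attempts=6,
                   jitter=0.0, rng=random.random):
    """Yield the wait (in seconds) to apply after each failed attempt."""
    delay = base
    for _ in range(attempts):
        # Optional jitter spreads retries out so clients don't reconnect in lockstep.
        yield min(cap, delay) * (1 + jitter * rng())
        delay *= factor


def connect_with_retry(connect, delays, sleep):
    """Call connect() until it succeeds or the delays run out."""
    for attempt, delay in enumerate(delays, start=1):
        try:
            return connect(), attempt
        except ConnectionError:
            sleep(delay)
    raise ConnectionError("gave up reconnecting")


# Simulated transport that fails twice before the network recovers.
calls = {"n": 0}


def flaky_connect():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("network blip")
    return "connected"


waits = []  # record the waits instead of actually sleeping
result, attempts = connect_with_retry(flaky_connect, backoff_delays(), waits.append)
```

Passing `sleep` in as a parameter keeps the loop easy to test; a real client would pass `time.sleep` or an async equivalent.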

Server-side output buffering and replay

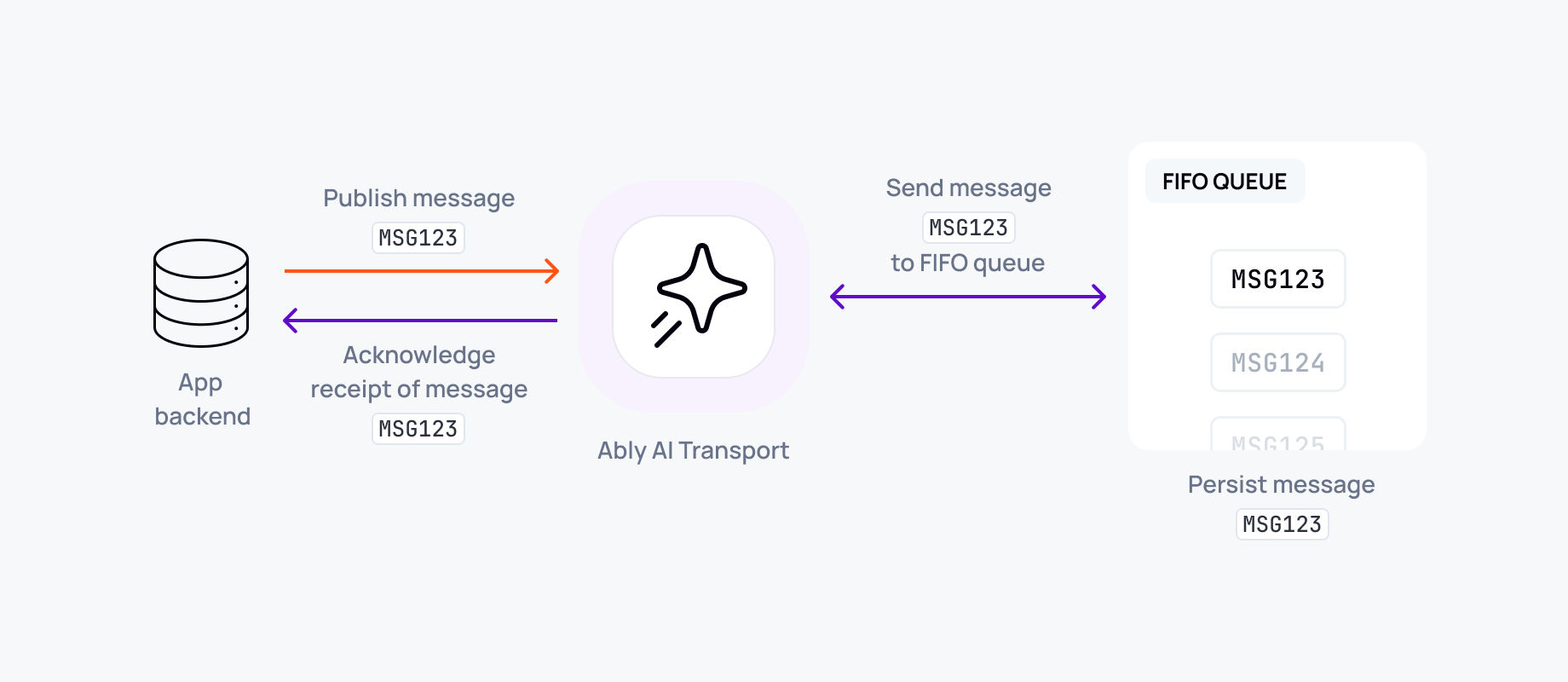

A resilient transport layer buffers the AI’s output on the server side as it’s being generated. Every token or chunk is stored (in memory or a fast store) at least until it’s safely delivered. Why? Because if the client disconnects momentarily, those tokens must still be available to send later. Ably’s messaging platform, for instance, persists messages while the client is reconnecting, guaranteeing no data is lost in transit. This buffered backlog enables catch-up: when a client reconnects, the transport can replay any missed tokens from the buffer before returning to live streaming.

The user doesn’t see gaps in the text, because the system fills in everything that was generated while they were away. Without server-side buffering, there’s simply no way to recover what was produced during a disconnect.
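A buffer like this can be sketched in a few lines. The example assumes an in-memory list, a single consumer, and 1-based sequence numbers; `TokenBuffer` and its methods are hypothetical names, not part of any real API.

```python
class TokenBuffer:
    """Keeps generated tokens until the client confirms it has them."""

    def __init__(self):
        self._tokens = []     # tokens not yet acknowledged
        self._first_seq = 1   # sequence number of self._tokens[0]
        self._next_seq = 1    # sequence number for the next appended token

    def append(self, token):
        """Store a freshly generated token and return its sequence number."""
        seq = self._next_seq
        self._tokens.append(token)
        self._next_seq += 1
        return seq

    def replay_after(self, last_seq):
        """Return (seq, token) pairs the client has not seen yet."""
        start = max(last_seq + 1, self._first_seq)
        offset = start - self._first_seq
        return list(enumerate(self._tokens[offset:], start=start))

    def ack(self, seq):
        """Drop tokens up to and including seq once delivery is confirmed."""
        drop = max(0, min(seq - self._first_seq + 1, len(self._tokens)))
        del self._tokens[:drop]
        self._first_seq += drop


buf = TokenBuffer()
for tok in ["a", "b", "c", "d", "e"]:
    buf.append(tok)

missed = buf.replay_after(2)   # client disconnected after token 2
buf.ack(3)                     # client later confirms it has tokens 1 to 3
remaining = buf.replay_after(3)
```

A production buffer would also need an eviction policy and, if several devices can read the same stream, per-consumer acknowledgements.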

Session tracking across client restarts

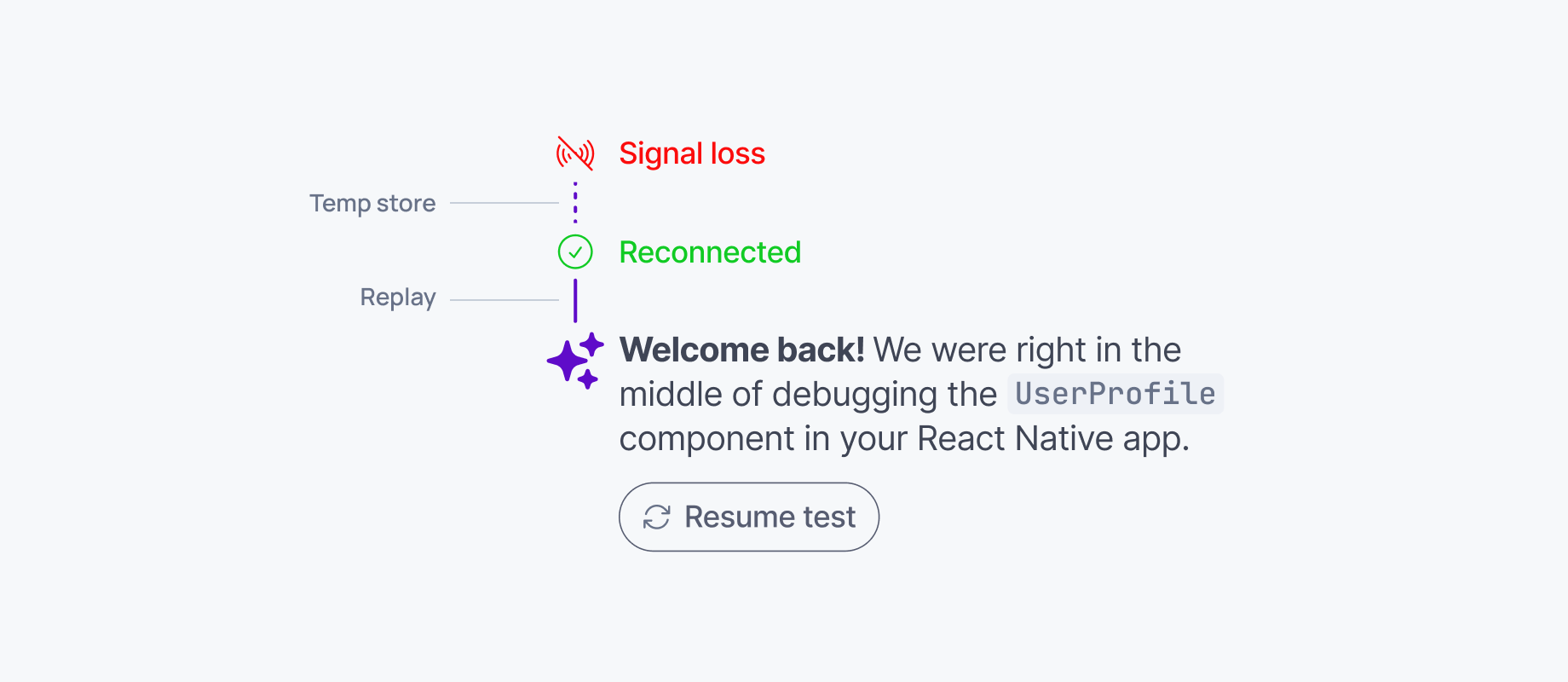

To resume a stream, the system needs to know who’s reconnecting and where to pick things up. That means tracking session state across connections, typically with a stable session or conversation ID that stays the same even if the page reloads or the device changes.

When the client reconnects, it should tell the server what it last received (for example, “I got up to token 123”). The server then uses that information to send only the tokens the client missed. This handshake (where the client shares its last-seen message ID) is what lets the stream continue cleanly, without starting over.

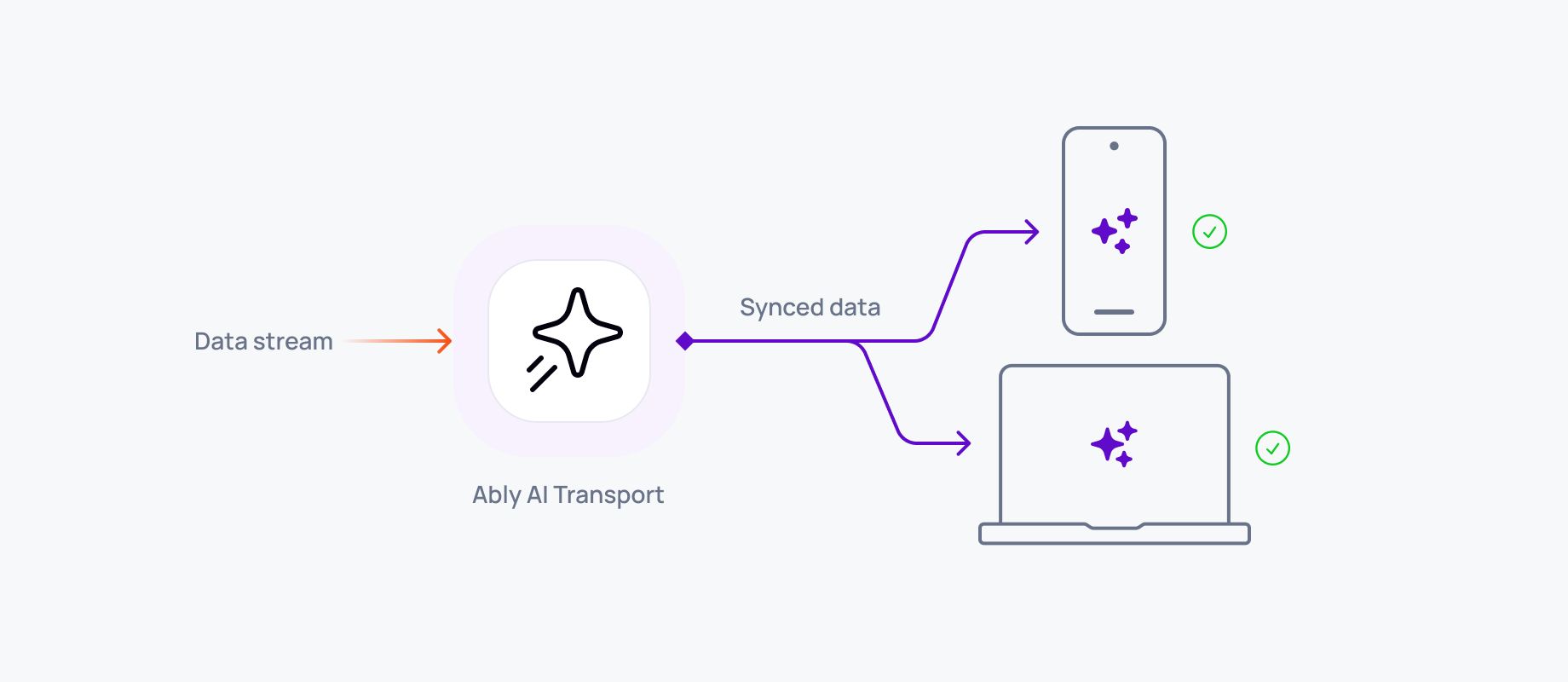

Platforms like Ably support this by using resume tokens or last-event IDs. The client includes that token on reconnect, and the stream resumes from exactly the right point. As a result, even after a crash, refresh, or switching devices, the AI response carries on with no manual sync needed.
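The resume handshake can be illustrated with a small sketch. The message shape, the `conv-42` ID, and the `STREAMS` store below are all assumptions made for the example; they do not reflect Ably’s wire protocol or any other real one.

```python
import json

# Hypothetical in-memory store: conversation ID -> tokens generated so far.
STREAMS = {"conv-42": ["The", " answer", " is", " 42", "."]}


def build_resume_request(conversation_id, last_seen):
    """Client side: say which conversation this is and the last token ID received."""
    return json.dumps({
        "type": "resume",
        "conversation_id": conversation_id,
        "last_seen": last_seen,
    })


def handle_resume(raw_message, streams=STREAMS):
    """Server side: reply with only the tokens the client missed."""
    msg = json.loads(raw_message)
    tokens = streams.get(msg["conversation_id"])
    if tokens is None:
        return {"type": "error", "reason": "unknown conversation"}
    last_seen = msg["last_seen"]
    missed = [
        {"id": i, "token": t}
        for i, t in enumerate(tokens[last_seen:], start=last_seen + 1)
    ]
    return {"type": "replay", "messages": missed}


# The client reloads after receiving token 2 and asks to resume.
reply = handle_resume(build_resume_request("conv-42", 2))
unknown = handle_resume(build_resume_request("conv-missing", 0))
```

The conversation ID is what lets a different tab or device pick up the same stream, as long as the client persists it somewhere that survives a reload.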

Ordered delivery guarantees and reconnection state

Maintaining the correct order of tokens is critical. We can’t have jumbled or duplicated text when a stream resumes. The transport layer must guarantee that messages (tokens) are delivered in order and processed exactly once. This involves assigning each chunk a unique sequence identifier and ensuring the client never processes the same chunk twice, even in the face of retries.

Upon reconnection, the system should replay missed tokens in the original order, with none missing and none repeated. Achieving this reliably often means the server needs to temporarily queue messages and only release them in sequence. Ably’s approach, for example, replays any messages the client missed during a disconnection and ensures no gaps or duplicates in the data. In practice, that means when your AI resumes its answer after a drop, the user sees a continuous, correctly ordered completion as if no disconnect ever happened.
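One way to get this behaviour on the receiving side is to hold back out-of-order chunks and ignore anything already applied. The sketch below assumes 1-based, gap-free sequence numbers; `OrderedReceiver` is a hypothetical name used for illustration.

```python
class OrderedReceiver:
    """Applies tokens strictly in sequence and ignores duplicates."""

    def __init__(self):
        self.next_seq = 1    # the sequence number we are waiting for
        self._pending = {}   # tokens that arrived ahead of their turn
        self.text = ""       # the response as shown to the user

    def receive(self, seq, token):
        # Anything below next_seq was already applied; a retry must not repeat it.
        if seq < self.next_seq or seq in self._pending:
            return
        self._pending[seq] = token
        # Release every token that is now contiguous with what we have.
        while self.next_seq in self._pending:
            self.text += self._pending.pop(self.next_seq)
            self.next_seq += 1


receiver = OrderedReceiver()
# Simulated delivery with reordering and retries.
for seq, token in [(1, "Hel"), (3, "wor"), (2, "lo "), (2, "lo "), (4, "ld"), (1, "Hel")]:
    receiver.receive(seq, token)
```

Deduplicating by sequence number is what turns at-least-once delivery with retries into text that appears exactly once on screen.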

All of this points to a deeper architectural issue. To make streaming reliable, you need to rethink where state lives and how streams are managed across connections.

Putting it all together

A drop-in transport layer for AI needs to manage these concerns transparently. It keeps a persistent pipe open, buffers the token stream, tracks session offsets, and enforces ordering and exactly-once semantics. For developers, this means you don’t have to build custom state management for every AI session - the transport layer provides the assurance that “your AI will keep streaming, no matter what.” Essentially, it’s infrastructure that transforms the unreliable web into a dependable conduit for AI data.

How the experience maps to the transport layer

To better illustrate, here’s how specific user expectations translate into transport-layer requirements and technical implementations:

Delivering reliable, resumable streaming today

Reliable, resumable token streaming isn’t theoretical anymore. You can ship it now. You don’t need to redesign your whole architecture or stitch together a fragile set of custom reconnection hacks.

Ably AI Transport gives you the infrastructure required to keep AI responses flowing, even when the client drops. Persistent connections, ordered replay, automatic resume, and delivery guarantees come as part of the platform. Your generation process keeps running, and users see the response continue exactly where it left off when they return.

If you’re building Gen-2 AI experiences and want streaming that survives reloads, outages, and device switches, we’d be happy to help.