If you’re building a realtime application, you’re likely already familiar with the benefits of WebSockets: persistent, bidirectional, low-overhead communication and near-universal availability.

But even if WebSocket is already on your radar, you might not yet have looked into WebSocket architecture best practices. If you’re more used to building request-response applications with HTTP, working with WebSocket requires some changes in your assumptions and in the tools you use.

The good news is that this change is fairly straightforward. In this guide, we’ll explore the key considerations for designing an application architecture with WebSocket. We’ll cover both architectural and operational best practices to ensure scalability and long-term reliability. And, crucially, we’ll look at how WebSocket’s architectural needs differ from system design for HTTP.

A brief overview of WebSocket vs HTTP

HTTP’s stateless, request-response model is effectively the default pattern for client-server and intra-application communication. And so much of the tooling we use to build applications is designed around those assumptions.

So, before we get into WebSocket architecture best practices, let’s put WebSocket and HTTP side by side to underscore how they differ.

| | WebSocket | HTTP |
| --- | --- | --- |
| Connection type | Persistent, full-duplex, bidirectional | Stateless, request-response, unidirectional |
| Communication model | Continuous, realtime communication between client and server | Client sends request, server responds with data |
| Overhead and latency | Low overhead after initial handshake. Lower latency due to open connection. | Each request carries its own headers and, without connection reuse, opens a new connection, adding overhead. Higher latency when connections have to be set up repeatedly. |
| Use cases | Realtime apps (chat, gaming, live notifications) | Traditional web apps, fetching resources, API calls |
| Scalability complexity | More complex, requires management of persistent connections | Easier to scale due to stateless, short-lived connections |
| Message delivery | Supports immediate message delivery both ways | Client initiates requests, server can only respond. Long polling offers a workaround. |
| State management | Requires server to manage connection state | No state management needed between requests |
| Resource usage | More resource-intensive due to long-lived connections | Less resource-intensive as connections are short-lived |

In summary, the key difference with WebSocket is that it provides persistent, stateful connections, allowing both the client and server to send messages at any time. This is what makes it great for realtime communication, but it also means we face new challenges.

Challenges of WebSocket architecture

Every protocol and design pattern comes with its own set of pros and cons. For instance, HTTP’s statelessness makes it easy to scale horizontally, but it also requires workarounds—such as long polling—to support the needs of web applications.

Similarly, WebSocket system design is all about understanding the protocol’s characteristics well enough to know both the challenges it presents and how to account for them in your application architecture.

Let’s look at three of the main challenges that WebSocket presents.

| Challenge | What it means | Why it matters |
| --- | --- | --- |
| Persistent connections | Connections stay open, consuming server, client, and network resources | Limits vertical scaling because server capacity is reached faster. Horizontal scaling (adding more servers) is necessary as user numbers grow. |
| Stateful connections | Each connection maintains state between client and server | Harder to load balance, as connections must persist to the same server, unlike stateless connections where clients can connect to any server. Requires more resources to track and maintain connection state. |
| Connection management | WebSocket doesn’t automatically reconnect if the connection drops | Requires manual reconnection management, such as heartbeats and pings. Need to manage potential data loss during disconnections and pay attention to factors such as message ordering. |

None of these characteristics makes WebSocket any less suitable for building realtime application architectures. In fact, persistent, stateful connections are the very thing that makes it so well suited. But the trade-off is that you need to account for them in your application architecture. And that’s especially true as your application scales.

How these challenges evolve with scale

As an industry, we have well-practiced ways of scaling HTTP connections. That’s because each HTTP request is independent. You can simply add more servers and use a load balancer to distribute incoming traffic.

WebSocket’s long-lived, stateful connections require more intentional planning. That’s not to say it’s impossible or even especially hard. But you do need to cater to the specifics of WebSocket’s architecture to scale as your system grows.

Here’s why scaling WebSocket requires a different type of planning than scaling HTTP traffic:

State: Each client must stay connected to the same server to maintain session state, which complicates load balancing. Unlike stateless HTTP requests, WebSocket connections can’t be freely distributed across servers. As your system scales, this can translate into challenges around:

Hot spots: Too much load may get directed to a few instances, leading to uneven resource usage and potential bottlenecks.

State synchronization: Redistributing the load when clients reconnect can ease hot spots, but it introduces the need to synchronize state across servers, adding complexity to your architecture.

Failover difficulties: One key benefit of distributed systems, redundancy, becomes harder to achieve with WebSocket. If a server fails, switching clients to a new server without losing session data requires inter-server data synchronization, further complicating your architecture.

Message integrity and ordering: As more servers and redundancy are introduced, keeping messages in the right order and ensuring they’re delivered without duplication becomes a challenge, especially for critical realtime applications. Many WebSocket-based systems accept that duplication will happen and handle it with deduplication. Others, such as Ably, implement strict processes to guarantee exactly-once delivery.

Backpressure management: With thousands or millions of connected clients, the flow of data around your WebSocket system can scale to billions or trillions of messages. To avoid overload, you’ll need to implement flow control systems such as queues and rate limits.

N-Squared problem: As the number of connections increases, the potential interactions between clients grow quadratically. For example, in a realtime chat system, if each user can message any other user, the number of potential message paths scales with the square of the number of users. With 100 users, you have roughly 10,000 possible interactions. With 1,000 users, that number jumps to roughly 1,000,000. Now, consider that each message could trigger multiple responses or reactions, each of which creates additional traffic. These interactions don’t just increase linearly. They grow dramatically. That creates significant strain on server resources and network bandwidth if left to grow without optimization.
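To make that arithmetic concrete, here is a minimal sketch that counts potential message paths. It assumes every user can message every other user directly, and the function name `potential_paths` is purely illustrative. Counting ordered sender-to-receiver pairs gives n × (n − 1), which is close to n² for large n, hence the round figures quoted above.

```python
def potential_paths(users: int) -> int:
    """Number of ordered sender -> receiver pairs among `users` clients."""
    return users * (users - 1)


# Ten times as many users means roughly a hundred times as many paths.
for n in (10, 100, 1_000):
    print(f"{n:>5} users -> {potential_paths(n):>9,} potential paths")
```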

There are WebSocket implementations handling billions of messages between millions of clients. So, how do they do it?

WebSocket architecture best practices

To overcome WebSocket’s challenges, there are a few architectural and operational best practices that can make a big difference. Each approach addresses specific issues, but they also come with trade-offs that you’ll need to factor in.

Let’s start with three architectural best practices for scaling WebSocket-based systems.

Sharding

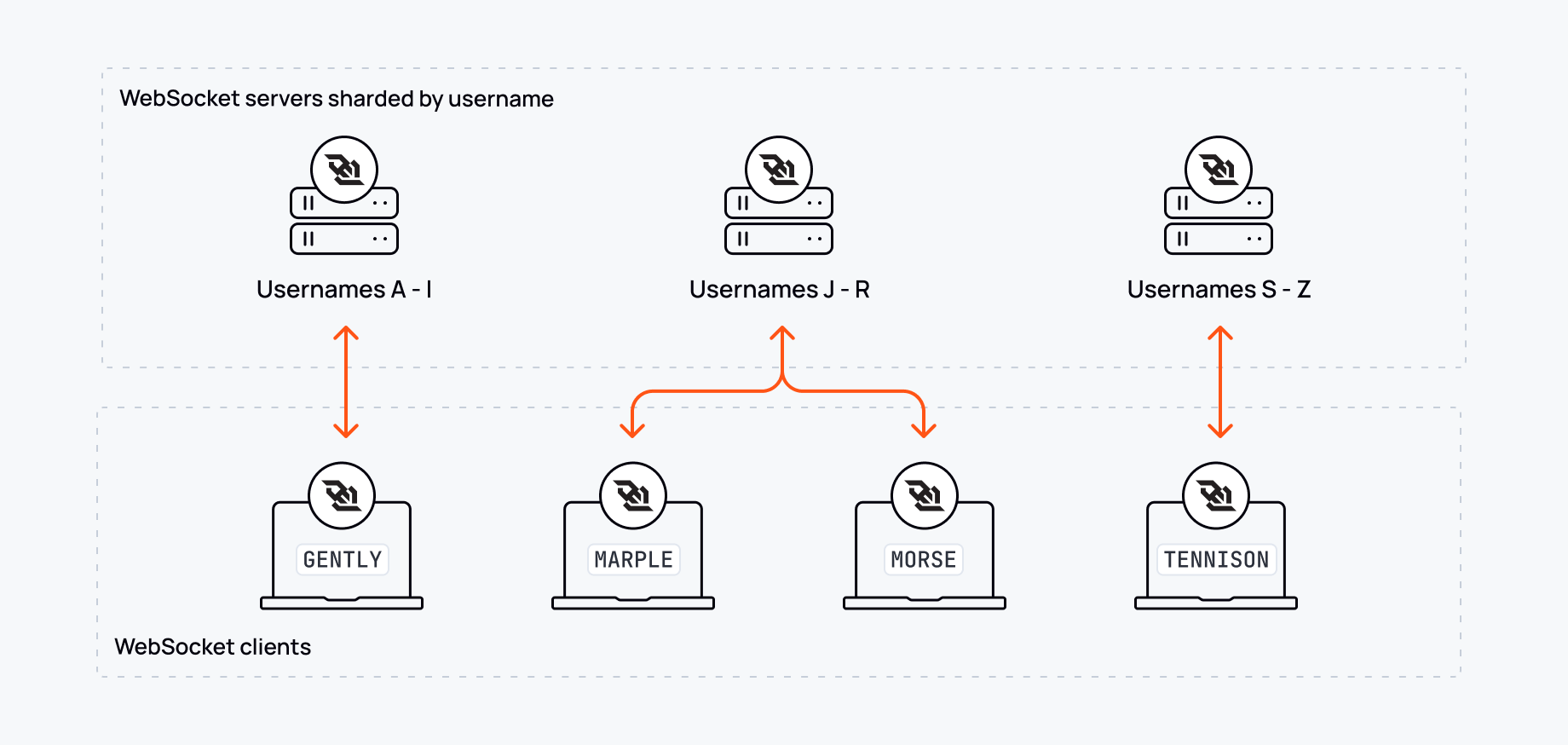

Sharding is an idea borrowed from databases, where it enables scaling by splitting large datasets into smaller pieces (or shards) and assigning each piece to a different server. For WebSocket, the idea is similar: sharding breaks the client namespace into smaller segments, with each server managing a portion of that namespace.

For example, you might assign all clients whose username starts with ‘A’ to one server, those starting with ‘B’ to another, and so on. However, this can lead to hot spots since certain letters are more common in some languages and regions than others.
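One common way to avoid the uneven split of a first-letter scheme is to hash the client identifier and take the result modulo the number of shards. The sketch below is only an illustration: `shard_for`, the `user-N` identifiers, and the shard count of 4 are assumptions, not part of any particular product.

```python
import hashlib
from collections import Counter


def shard_for(client_id: str, shard_count: int) -> int:
    """Map a client ID to a shard index using a stable hash."""
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer, then wrap into the shard range.
    return int.from_bytes(digest[:8], "big") % shard_count


# Spread 10,000 synthetic client IDs over 4 shards and count each shard.
counts = Counter(shard_for(f"user-{i}", 4) for i in range(10_000))
print(sorted(counts.items()))
```

Note that with plain modulo hashing, changing `shard_count` remaps most clients to a different shard. Consistent hashing is a common alternative when shards are added or removed often.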

So, how does sharding WebSocket shape up in practice?

Pros of sharding:

Load distribution: Balances load across multiple servers.

Horizontal scalability: It’s easy to add and remove shards as needs change.

Fault isolation: Failures in one shard shouldn’t impact others.

Cons of sharding:

Cross-shard complexity: Maintaining consistency between shards adds complexity.

Hot shards: Some shards may become overloaded and need rebalancing.

Shard redistribution: When you need to add or remove shards, the system needs to update the sharding scheme on the client side and then redistribute sessions across shards.

Siloed state: Harder for clients connected to different servers to communicate. Also harder to move clients between shards without losing state.

Sharding’s biggest issue is the risk of creating silos within your system. For example, in a chat application, if users from the US all connect to shard 1 and users from Japan connect to shard 2, what happens when someone from Tokyo wants to chat with someone in Chicago? In effect, the sharded system means that you have two or more entirely separate chat servers and there’s no way for those two people to chat with each other.

The solution is to share state updates between shards. That way, users shouldn’t ever notice that the sharding exists. But it introduces additional complexity. Sharing state across shards can lead to data integrity and ordering issues. And, of course, some part of each shard’s resources will be dedicated to backend synchronization, meaning you’ll need more shards than otherwise.

Any time you introduce more than one WebSocket server, you’ll need to consider state synchronization between those servers. But issues like hot shards and resharding are more likely to occur in sharded systems. Using a load balancer, instead of a sharding scheme, is one way to address that.

Sticky sessions with stateful load balancing

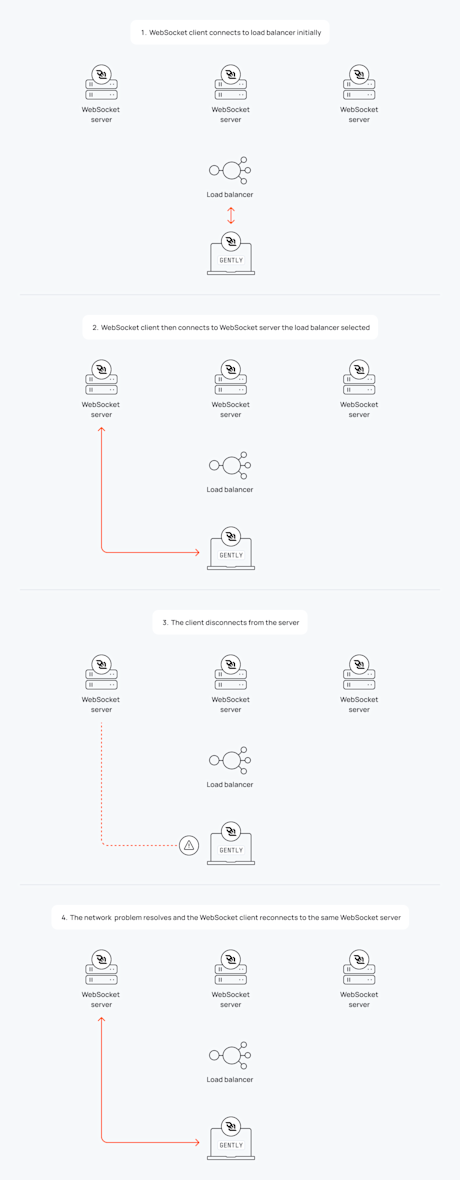

If traditional HTTP load balancing isn’t well-suited to WebSocket because it’s designed for short-lived, stateless connections, is there a way to adapt the approach to suit WebSocket’s persistent connections? One way is to make sure that specific clients connect to the same server every time they reconnect. These are sticky sessions.

Sticky sessions differ from shards because the stickiness is temporary, usually lasting only for the duration of a session. And because the load balancer makes the assignment, the client doesn’t need to know any sharding scheme, just where to find the load balancer.

Here’s how it works: once a client establishes a connection with a server, any subsequent reconnections are directed to the same server. This avoids the need to synchronize session state across multiple servers. While this helps maintain session continuity, it also introduces some challenges. The load balancer needs to track which client belongs to which server, which adds complexity and can make that load balancer into a single point of failure. And if the assigned “sticky” server goes down, there’s a chance of some data loss depending on how robust the inter-server data sharing is.
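The routing behavior described above can be sketched as a small in-memory affinity table. This is an assumption-laden illustration, not how any specific load balancer works: `StickyRouter`, the server names, and the “fewest assigned clients” placement rule are all hypothetical.

```python
class StickyRouter:
    """Hypothetical session-affinity table kept by a load balancer."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.assignments = {}                     # client_id -> server
        self.load = {s: 0 for s in self.servers}  # server -> assigned clients

    def route(self, client_id):
        server = self.assignments.get(client_id)
        # New client, or its sticky server is gone: pick the least-loaded server.
        if server is None or server not in self.load:
            server = min(self.servers, key=lambda s: self.load[s])
            self.assignments[client_id] = server
            self.load[server] += 1
        return server

    def remove_server(self, server):
        # Affected clients are reassigned lazily on their next route() call.
        self.servers.remove(server)
        del self.load[server]


router = StickyRouter(["ws-1", "ws-2"])
first = router.route("alice")
print(first, router.route("alice") == first)  # reconnects land on the same server
```

When the sticky server is removed, the client is moved elsewhere, and any session state that lived only on the old server is lost unless it was shared or stored externally.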

So, what are the pros and cons of sticky sessions?

Pros of sticky sessions:

Scalability: Easily scale horizontally by adding or removing servers.

Session affinity: Clients reconnect to the same server, keeping session state intact.

Greater flexibility: There’s no need to maintain a sharding scheme or to reshard when scaling.

Cons of sticky sessions:

Session persistence overhead: Managing sticky sessions requires an additional tool in the form of the load balancer.

State sharing: Like sharded systems, there’s an additional resource overhead to share data between server instances.

Single point of failure: If the load balancer fails, clients could be prevented from reconnecting and, where connections are proxied through the load balancer, open connections could drop too. You can add more load balancers to reduce this risk.

Load balancing inefficiency: Poor load distribution can lead to server overload.

Sticky sessions have a lot in common with sharding. However, the load balancer simplifies the architecture and makes it easier to scale up or down by shielding the clients from the precise make-up of the back-end.

Implementing a pub/sub architecture

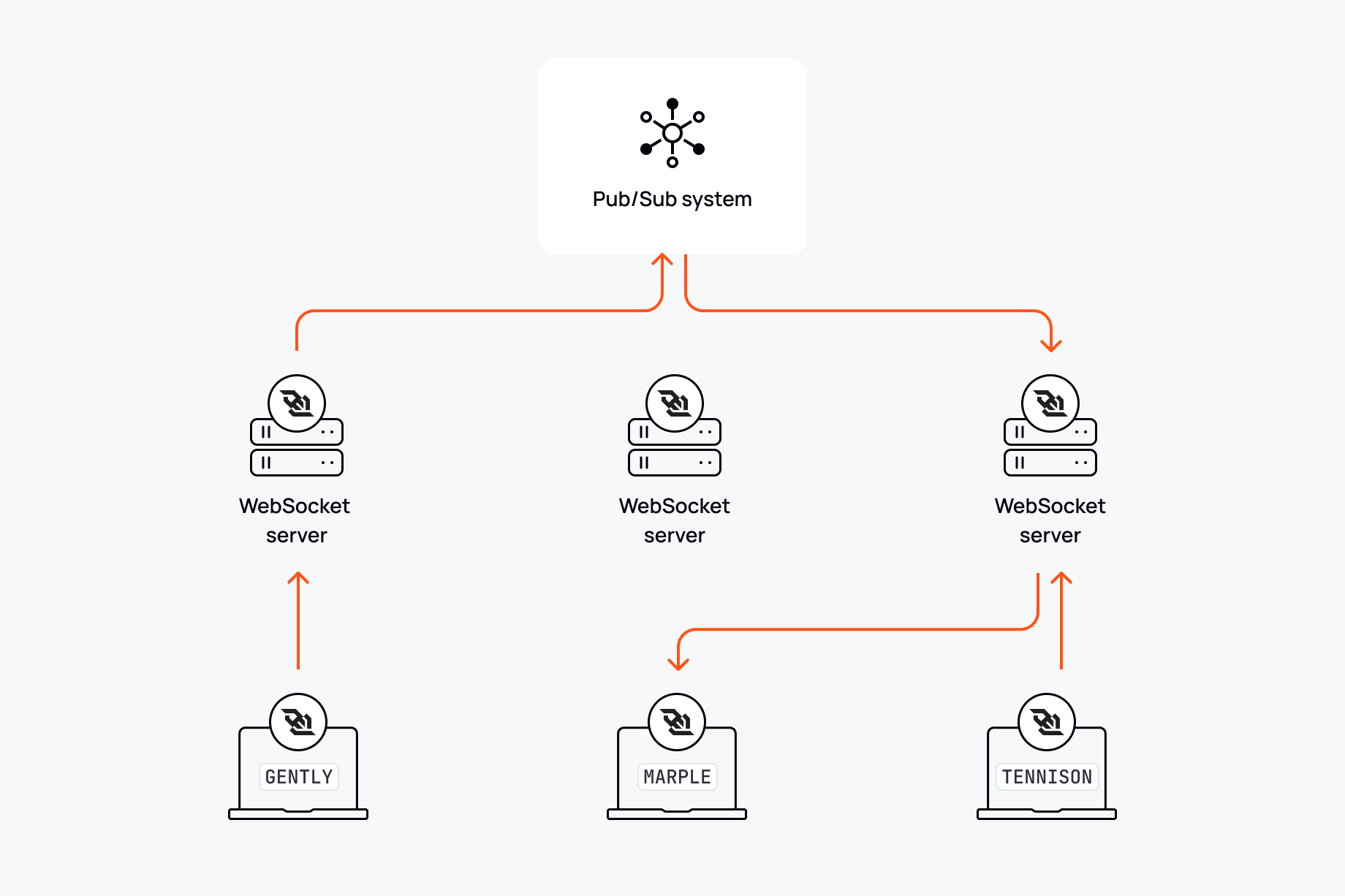

Pub/sub (publish/subscribe) helps scale WebSocket architectures by taking the problem of inter-server communication and making it the hero of the story. Rather than thinking of keeping servers in sync as a complication of scaling, pub/sub makes it a feature. In this approach, instead of each server needing to constantly share state with others, messages are published to a central system and then distributed to subscribers. This way, WebSocket servers focus on managing connections, while the pub/sub system takes care of ensuring that messages get to the right clients.

For example, in a realtime chat app, rather than having every WebSocket server manage both connections and message routing, the servers handle the connections and leave the pub/sub system to broadcast messages to all clients subscribed to a chat room or channel. By offloading this responsibility, pub/sub allows your architecture to scale without requiring every server to stay perfectly synchronized.
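As a rough illustration of that division of labor, the sketch below uses an in-memory broker in place of a real pub/sub system. Everything here is an assumption made for the example: the `Broker` and `ConnectionServer` classes, the channel names, and the use of a per-client `outbox` list to stand in for writing to a WebSocket.

```python
from collections import defaultdict


class Broker:
    """Toy in-process stand-in for a pub/sub system."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # channel -> callbacks

    def subscribe(self, channel, callback):
        self._subscribers[channel].append(callback)

    def publish(self, channel, message):
        for callback in list(self._subscribers[channel]):
            callback(message)


class ConnectionServer:
    """Stands in for one WebSocket server; it only manages its own clients."""

    def __init__(self, name, broker):
        self.name = name
        self.broker = broker
        self.outbox = defaultdict(list)  # client_id -> messages "sent"

    def join(self, client_id, channel):
        # Delivery to this client is driven by the broker, not by other servers.
        self.broker.subscribe(channel, lambda msg: self.outbox[client_id].append(msg))

    def client_sent(self, channel, message):
        # The server hands the message to the broker and does no routing itself.
        self.broker.publish(channel, message)


broker = Broker()
server_a = ConnectionServer("ws-a", broker)
server_b = ConnectionServer("ws-b", broker)
server_a.join("alice", "room:1")
server_b.join("bob", "room:1")
server_a.client_sent("room:1", "hello")
print(server_b.outbox["bob"])
```

Neither server knows the other exists. Both only talk to the broker, which is what lets you add connection servers without synchronizing them directly.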

Pros of pub/sub:

Decouples connections from messaging: WebSocket servers only handle connections, leaving messaging to the pub/sub system.

Scalability: Can scale to handle large message volumes.

Reliability: Pub/sub systems typically offer fault tolerance and message retries.

Cons of pub/sub:

Increased complexity: Adds an extra layer of infrastructure to manage.

Latency overhead: Pub/sub adds extra latency, especially if not optimized.

Bottlenecks: The pub/sub system itself can become a bottleneck if not well managed.

Pub/sub offers a scalable way to handle message distribution by turning inter-server communication into a strength rather than a complication. By decoupling message handling from connection management, it allows your WebSocket servers to focus on what they do best—managing connections—while the pub/sub layer ensures messages reach the right clients.

But architecture is only a part of the solution to scaling WebSocket-based systems. There are also several operational best practices to consider.

WebSocket operational best practices

With a solid architectural foundation in place, the next challenge is ensuring your WebSocket system runs smoothly in real-world conditions. Operational best practices are essential for optimizing performance, especially as traffic scales.

Optimize connections

Making the initial connection is just the first step. Throughout the duration of a connection, you need to make sure that it’s making good use of your system’s resources and delivering a smooth experience to end users, with techniques such as:

Keep-alive mechanisms: Implement pings or heartbeat messages to detect and prevent connection drops. WebSocket doesn’t natively handle reconnections, so you’ll need a method to re-establish connections once you’ve detected a problem.

Connection timeouts: Define idle timeouts to close inactive connections and free up resources. Choosing the right timeout will depend on your application.

Graceful degradation: Ensure the system reduces performance under high load rather than dropping connections abruptly.
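The keep-alive and timeout ideas above can be sketched as a small bookkeeping class. The WebSocket protocol defines ping and pong control frames, but how you schedule them is up to you, so the sketch assumes some other code sends pings and calls `record_pong` when a reply arrives. `HeartbeatMonitor`, the connection IDs, and the 30-second timeout are hypothetical, and time is passed in explicitly to keep the example deterministic.

```python
class HeartbeatMonitor:
    """Tracks when each connection last answered a ping."""

    def __init__(self, timeout_seconds: float):
        self.timeout = timeout_seconds
        self.last_seen = {}  # conn_id -> timestamp of last pong

    def record_pong(self, conn_id, now: float):
        self.last_seen[conn_id] = now

    def stale_connections(self, now: float):
        """Connections that have been silent for longer than the timeout."""
        return [c for c, seen in self.last_seen.items() if now - seen > self.timeout]

    def drop(self, conn_id):
        # Called after closing the socket so resources are freed.
        self.last_seen.pop(conn_id, None)


monitor = HeartbeatMonitor(timeout_seconds=30)
monitor.record_pong("conn-1", now=0)
monitor.record_pong("conn-2", now=20)
print(monitor.stale_connections(now=40))
```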

Implement backpressure

Not every component of your system will have the same resources or process data at the same speed. For instance, if you have a high-frequency sensor system that sends thousands of readings per second, the microservice that processes that data might only have the capacity to work at a slower pace. Without backpressure management, the processing service would get overwhelmed, potentially dropping data. By implementing techniques like throttling or buffering, you can regulate the data flow, ensuring the downstream components receive information at a rate they can handle without compromising the system's stability.

Throttle greedy clients: Limit the rate of messages from resource-hungry clients to maintain system balance.

Buffering and flow control: Handle sudden bursts of traffic with buffering and ensure data is only sent when the receiver can handle it.

Prioritize critical traffic: In realtime apps, prioritize essential messages like control data, while throttling less important traffic.
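One widely used way to throttle a greedy client is a token bucket, which allows short bursts but caps the sustained message rate. The sketch below is a minimal version under stated assumptions: the `TokenBucket` class, the rate of one message per second, and the burst capacity of two are illustrative values, and the caller supplies the current time.

```python
class TokenBucket:
    """Per-client rate limiter: refills at `rate_per_second` up to `capacity`."""

    def __init__(self, rate_per_second: float, capacity: int):
        self.rate = rate_per_second
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.updated_at = 0.0

    def allow(self, now: float) -> bool:
        # Refill based on elapsed time, never exceeding capacity.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # caller can drop, queue, or delay the message


bucket = TokenBucket(rate_per_second=1, capacity=2)
print([bucket.allow(now=t) for t in (0.0, 0.0, 0.0, 1.0, 1.5)])
```

What to do with a rejected message (drop it, buffer it, or slow the sender) is a separate design choice that depends on how critical the traffic is.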

Mitigate the N-Squared problem

As we touched on earlier, the number of messages in a system can increase quadratically with the number of clients. That can lead to enormous volumes of data. But there are easy-to-implement ways to bring the growth closer to linear.

Batching: Send multiple messages together in a single batch to reduce network overhead.

Aggregation: Effectively, this is a form of compression. Rather than sending a hundred separate thumbs-up reactions, send a single message such as: thumbs-up x 100.

Reduce cross-communication: Minimize the number of nodes or clients that communicate directly by using intermediary layers to distribute updates.
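Aggregation in particular is easy to sketch. The example below collapses a window of individual reaction events into one summary per message and reaction type. The event shape (`message_id`, `reaction`) and the function name `aggregate_reactions` are assumptions for illustration only.

```python
from collections import Counter


def aggregate_reactions(events):
    """Collapse individual reaction events into one count per (message, reaction)."""
    counts = Counter((e["message_id"], e["reaction"]) for e in events)
    return [
        {"message_id": message_id, "reaction": reaction, "count": count}
        for (message_id, reaction), count in sorted(counts.items())
    ]


# 100 thumbs-up events and 3 hearts become 2 outgoing messages instead of 103.
window = [{"message_id": "m1", "reaction": "thumbs-up"}] * 100
window += [{"message_id": "m1", "reaction": "heart"}] * 3
for summary in aggregate_reactions(window):
    print(summary)
```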

Ensure message integrity

As your application architecture grows, the question of whether messages have arrived becomes more complex. It’s no longer a simple yes or no—it’s about how many times they arrive, whether they’re in the correct order, or if they’ve disappeared into the ether.

Message guarantees come in three main forms:

At-most once: Fire-and-forget delivery that’s easy to implement but only suited to messages you can afford to lose.

At-least once: Your message will arrive but you might have to handle deduplication on the client.

Exactly once: Your message will arrive once and only once.

And there’s message ordering. If you have multiple servers for fault tolerance, there’s every chance that first-in first-out ordering gets mixed up and you could find that messages arrive out of order. That is unless you explicitly build ordering mechanisms into your system.

So, how might you do that? Some of the techniques involved are:

Acknowledge and retry: Build in mechanisms to acknowledge message receipt and retry any failed deliveries.

Use sequence numbers: Track message order using sequence numbers, ensuring correct processing.

Deduplication: Prevent duplicate messages by implementing deduplication logic on either the client or server.
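Sequence numbers and deduplication can be combined on the receiving side, as in the sketch below. It assumes the sender numbers messages 1, 2, 3 and so on per channel, and that redelivery (the retry part) happens elsewhere. `OrderedReceiver` is a hypothetical name, and the pending buffer is unbounded here, which a production system would need to cap.

```python
class OrderedReceiver:
    """Delivers messages in sequence order and ignores duplicates."""

    def __init__(self):
        self.next_seq = 1   # next sequence number the application expects
        self.pending = {}   # out-of-order messages waiting for a gap to fill

    def receive(self, seq: int, payload):
        """Return the payloads that are now ready for delivery, in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # already delivered or already buffered: a duplicate
        self.pending[seq] = payload
        ready = []
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready


receiver = OrderedReceiver()
for seq, payload in [(2, "b"), (1, "a"), (2, "b"), (3, "c")]:
    print(seq, receiver.receive(seq, payload))
```

Paired with at-least-once delivery from the sender, this gives the application each message once and in order, at the cost of holding back later messages while a gap is outstanding.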

Monitor and then scale in response to demand

As with any system, proactively observing the health of your WebSocket infrastructure allows you to identify problems before they impact users.

Realtime monitoring: Use tools to monitor latency, throughput, and connection health, setting up alerts for potential issues.

Autoscaling: Automatically adjust server capacity to handle fluctuations in traffic demand without manual intervention.
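At its simplest, an autoscaling rule is a calculation from a monitored metric to a target server count. The sketch below uses open connections as that metric. The per-server capacity, the 70% target utilization, and the minimum of two servers are made-up values for illustration, and real autoscalers typically add cooldowns and other safeguards.

```python
import math


def desired_servers(connections: int, per_server_capacity: int,
                    target_utilization: float = 0.7, minimum: int = 2) -> int:
    """Servers needed to keep each one at or below the target utilization."""
    usable = per_server_capacity * target_utilization
    return max(minimum, math.ceil(connections / usable))


for open_connections in (1_000, 50_000, 100_000):
    print(open_connections, "->", desired_servers(open_connections, 10_000))
```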

Design for fault tolerance

Building a fault-tolerant system is both an architectural and an operational issue. On the operational side, you need to consider:

Reconnection logic: Implement mechanisms to handle dropped connections without losing data, restoring state upon reconnection.

Session recovery: Store session data outside of the WebSocket server to ensure it can be restored in case of server failure.

Failover mechanisms: Plan for rerouting traffic to healthy servers in case of server or data center failures.
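For reconnection logic, one common technique (not specific to this guide) is exponential backoff with jitter, so that thousands of clients dropped by the same failure don’t all reconnect at the same instant. The sketch below only computes the wait times. The base delay, the 30-second cap, and the function name `backoff_delays` are assumptions, and the actual reconnect call is left to your WebSocket client.

```python
import random


def backoff_delays(attempts: int, base: float = 0.5, cap: float = 30.0, rng=None):
    """Randomized wait (in seconds) before each reconnection attempt."""
    rng = rng or random.Random()
    delays = []
    for attempt in range(attempts):
        # The ceiling doubles with each attempt, up to the cap.
        ceiling = min(cap, base * (2 ** attempt))
        # "Full jitter": pick a random delay between 0 and the ceiling.
        delays.append(rng.uniform(0, ceiling))
    return delays


for attempt, delay in enumerate(backoff_delays(6), start=1):
    print(f"attempt {attempt}: wait {delay:.2f}s")
```

On a successful reconnect, the client would typically tell the server the last message it saw so that anything missed during the outage can be replayed.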

Should you build or buy the functionality to scale WebSocket?

Creating and maintaining an application architecture that relies on realtime communication requires more planning and engineering effort than a traditional request-response model. As we’ve seen, maintaining state across multiple clients and servers consumes significant computing resources and places greater demands on your engineering team. As you scale, these challenges don’t just increase. They grow non-linearly. It’s not just the potential for a quadratic explosion in traffic due to the N-Squared problem, but also the need to maintain a responsive, fault-tolerant backend across multiple servers.

So the question becomes whether or not you should build your own solution in-house or, instead, take advantage of a ready-made platform that solves both the problems you have today and that you might have as your application grows.

The reality is:

Realtime communication is time-consuming: Building and maintaining a distributed, realtime system will suck up engineering resources and money that you could otherwise put into delivering the features your users need. Our research shows that projects like this rarely come in on time or under budget.

It’s also hard: Without extensive prior experience, even the best engineering teams can find it hard to build realtime communications platforms that scale. As we saw above, this isn’t just about implementing WebSocket. It could also mean building a pub/sub messaging system.

Control is an illusion: One reason for developing a system in-house might be to build precisely what you need. But time and resource constraints mean that teams often end up compromising and building a lesser solution. With a realtime platform-as-a-service (PaaS), you can have the best of both worlds: you build the solution you need using building blocks created and maintained by specialists.

Create scalable, realtime, WebSocket application architectures with Ably

At Ably, our realtime WebSocket-based solution can help you get to market faster, focus your resources on solving your users’ needs, and reduce your maintenance burden, all while delivering lower latencies and higher data integrity than most teams can build in-house.

Our global edge network gives you under 99ms round trip times, 8x9 message survivability, and 100% message delivery guarantees. Try it for yourself today.
