Instead of the browser continuously polling the server on the off chance there’s new information, Server-Sent Events (SSEs) enable the server to push data to the browser as soon as there’s new information available.

Examples include breaking news updates, the latest Bitcoin price, or your food delivery driver’s location on the map.

Breaking news, tickers, and location tracking - Server-Sent Events are ideal for these types of use cases

Why not use WebSockets?

Another way you might have achieved realtime updates like this in the past is with WebSockets.

WebSockets are great because they enable full-duplex bidirectional communication with low latency.

This makes them ideal for scenarios where information needs to flow simultaneously in both directions like a multiplayer game, multiplayer collaboration, or rich chat experience.

However, the work required to implement, manage, and scale the custom WebSocket protocol is non-trivial.

It’s helpful to remember that while some apps need bidirectional communication, many do not - most apps out there are mainly read apps.

Said another way, the server sends the majority of messages while the client listens and once in a while sends updates.

For situations like the ones above where you mostly need to send updates one-way from the server to the client only, SSEs provide a much more convenient (equally efficient) way to push updates based on HTTP.

For those times when the client needs to send data to the server, you can use a separate HTTP channel to POST data from the client (maybe using the fetch API):

While SSE opens a continuous connection so the server can send new data without the client needing to request it, a separate short-lived HTTP connection can be opened to occasionally POST data to the server without the need for WebSockets
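As a rough sketch, the client-to-server half of this pattern could look like the following. The `/api/location` endpoint, the payload shape, and the `sendUpdate` helper are hypothetical names chosen for illustration, not part of any SSE API.

```javascript
// Hypothetical helper: send an occasional update to the server over a
// short-lived HTTP request while the SSE stream stays open for incoming data.
// `fetchImpl` defaults to the global fetch (browsers, Node.js 18+).
async function sendUpdate(url, payload, fetchImpl = fetch) {
  const response = await fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  });
  if (!response.ok) {
    throw new Error(`POST ${url} failed with status ${response.status}`);
  }
  return response;
}
```

A call such as `sendUpdate("/api/location", { lat: 51.5, lng: -0.12 })` would then run alongside the open event stream without affecting it.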

Server-Sent events: Why they’re ready for prime time

Introduced in 2011, SSEs never really made it out from under WebSocket’s shadow and into the mainstream.

Why?

Historically, SSEs had some limitations holding them back. However, recent advancements to the web platform mean it’s time to take another look:

Browser support: Microsoft Edge lacked SSE support until January 2020. A few years later, SSEs are now available in ninety-eight percent of browsers.

Connection limit: HTTP/1 limited the number of concurrent HTTP connections per domain to six. HTTP/2 eliminates this connection limit and is available in more than ninety-six percent of browsers and all modern HTTP servers.

SSEs always had potential, but these limitations nudged developers to alternatives like WebSockets, even though they’re more work to implement when you only need one-way updates.

In light of the advancements above, enthusiasm for the technology is at an all-time high.

Companies like Shopify and Split use them in production to send millions of events.

And every time we see someone write about SSE, developers race to the comments to sing their praises, often explaining that they wish they had known about it sooner.

Maybe this is the first time you’re hearing about SSE and want to learn more, or perhaps you’ve encountered it before and are ready to give it another look.

No matter where you’re starting from, this page will teach you all about SSEs - what they are, where they excel, and the practical considerations you should be aware of around security, performance, and how smoothly they scale.

What are Server-Sent Events, exactly?

Server-Sent Events is a standard composed of two components:

An EventSource interface in the browser, which allows the client to subscribe to events; and,

the “event stream” data format, which is used to deliver the individual updates.

In the upcoming sections, let’s take a closer look at each.

EventSource interface

The EventSource interface is described by the WHATWG specification and implemented by all modern browsers.

It provides a convenient way to subscribe to a stream of events by abstracting the lower-level connection and message handling.

Here’s a Server-Sent events example:

```javascript
const eventSource = new EventSource("/event-stream")

eventSource.onmessage = event => {
  const li = document.createElement("li")
  const ul = document.getElementById("list")
  li.textContent = `message: ${event.data}`
  ul.appendChild(li)
}
```

Above, we call the EventSource constructor with a URL to the SSE endpoint before wiring up the event handlers.

There’s no need to worry about negotiating the connection, parsing the event stream, or deciding how to propagate the events. All the implementation logic is handled for us.

Apart from tucking away the underlying logic, EventSource has a couple of other handy features:

Automatic reconnection: Should the client disconnect unexpectedly (maybe the user went through a tunnel), EventSource will periodically try to reconnect.

Automatic stream resume: EventSource remembers the last received message ID and automatically sends a Last-Event-ID header when trying to reconnect.
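EventSource only sends the header. Deciding what to replay is up to the server. Here is a minimal sketch of that server-side step, assuming events carry increasing numeric IDs and recent events are kept in an in-memory array. Both are assumptions for illustration, and the `eventsAfter` helper is hypothetical.

```javascript
// Hypothetical in-memory history of events already sent, oldest first.
const history = [
  { id: 40, data: "btc 2002100" },
  { id: 41, data: "btc 2002500" },
  { id: 42, data: "btc 2002867" },
];

// Return the events a reconnecting client missed. `lastEventId` is the raw
// value of the Last-Event-ID request header (a string, or undefined on a
// first connection).
function eventsAfter(events, lastEventId) {
  const lastId = Number.parseInt(lastEventId, 10);
  if (Number.isNaN(lastId)) {
    return []; // first connection or unusable header: nothing to replay
  }
  return events.filter((event) => event.id > lastId);
}
```

In a Node.js request handler, the header value would typically be read from `req.headers["last-event-id"]` before any new events are written to the stream.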

Event stream protocol

The event stream protocol describes the standard plain-text format that events sent by the server must follow in order for the EventSource client to understand and propagate them.

According to the specification, events can carry arbitrary text data and an optional ID, and each event is terminated by a blank line.

Here’s an example event that contains information about Bitcoin’s price:

```
id: 42
event: btcTicker
data: btc 2002867
```

How do they work?

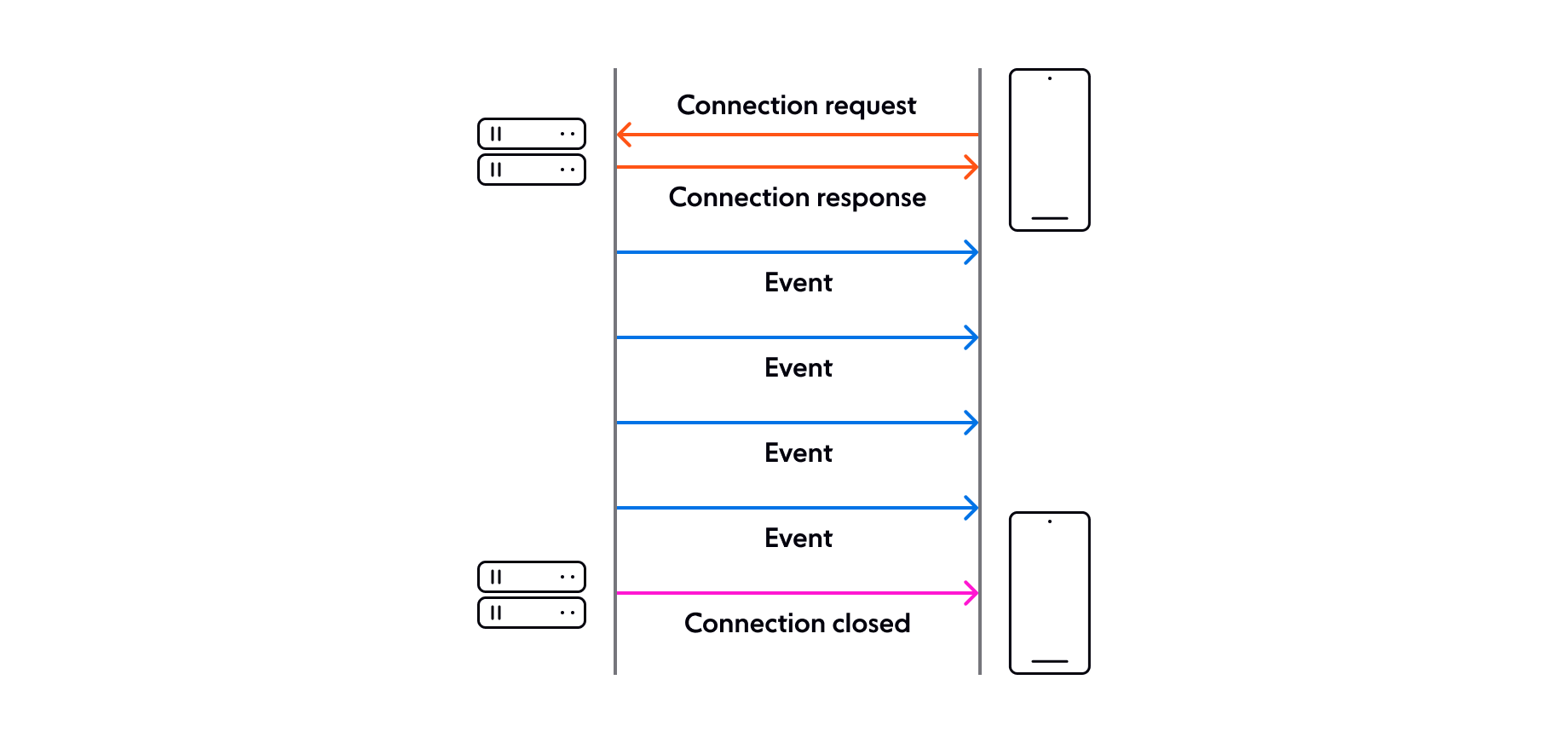

Every SSE connection begins by initiating the EventSource instance with a URL to the SSE stream.

Under the hood, EventSource initiates a regular HTTP request, to which the server responds with the official SSE "text/event-stream" Content-Type and a stream of event data.

This connection remains open until the server decides it has no more data to send, the client explicitly closes the connection by calling the EventSource.close method, or the connection becomes idle. To circumvent a timeout, you can send a keep-alive message every minute or so.
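To make the server side of this exchange concrete, here is a minimal sketch using Node.js's built-in `http` module. The `formatEvent` and `createSseServer` helpers, the timer intervals, and the hard-coded price are all assumptions for illustration. A real server would send events as they actually occur.

```javascript
const http = require("node:http");

// Serialise one event in the text/event-stream format.
// The trailing blank line marks the end of the event.
function formatEvent({ id, event, data }) {
  let text = "";
  if (id !== undefined) text += `id: ${id}\n`;
  if (event !== undefined) text += `event: ${event}\n`;
  // Multi-line payloads need one "data:" line per line of text.
  for (const line of String(data).split("\n")) {
    text += `data: ${line}\n`;
  }
  return text + "\n";
}

// Hypothetical server: every request gets an event stream with a price tick
// every `tickMs` and a comment line as a keep-alive every `keepAliveMs`.
function createSseServer({ tickMs = 1000, keepAliveMs = 30000 } = {}) {
  return http.createServer((req, res) => {
    res.writeHead(200, {
      "Content-Type": "text/event-stream",
      "Cache-Control": "no-cache",
    });

    let nextId = 1;
    const ticker = setInterval(() => {
      res.write(formatEvent({ id: nextId++, event: "btcTicker", data: "btc 2002867" }));
    }, tickMs);

    // Lines starting with ":" are comments, which EventSource ignores.
    const keepAlive = setInterval(() => res.write(": keep-alive\n\n"), keepAliveMs);

    // Stop the timers once the connection is closed.
    res.on("close", () => {
      clearInterval(ticker);
      clearInterval(keepAlive);
    });
  });
}
```

Calling `createSseServer().listen(3000)` (the port is arbitrary) would start the server. Because these events are named `btcTicker`, a browser client would subscribe with `eventSource.addEventListener("btcTicker", handler)` rather than `onmessage`.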

Server-Sent Events use cases

SSE is particularly useful when you need to subscribe to (often frequent) updates generated by the server.

Here are some scenarios where you might use SSE:

Realtime notifications: SSE allows the server to broadcast notifications as soon as they occur. This is handy for news and social feeds, live event broadcasting, or any app where users need to receive immediate notifications.

Live data updates: Whether we’re talking about stock and crypto tickers, sports scoreboards, realtime analytics, or your Instacart driver’s location on the map, SSE is well-suited for scenarios where users need to see the latest data as it becomes available.

Progress updates: SSE can be used to send periodic updates to the client about the status of a long-running process, allowing the user to monitor the state of the operation.

IoT: SSE can be used in IoT scenarios where devices need to receive realtime updates from the server. For example, in a home automation system, SSE can be employed to push updates about the status of sensors, alarms, or other IoT devices.

Browser and mobile compatibility

EventSource is available in more than ninety-six percent of web browsers. Chrome, Firefox, and Safari have had support since 2012, while Edge only caught up in January 2020. Internet Explorer never supported SSE, so a polyfill must be used.

Although EventSource is a browser API, because SSE is based on HTTP, it’s possible to consume an SSE stream on any device that can make an HTTP request. That includes Android and iOS, for which there are even open source libraries available that make consuming an event stream just as easy as EventSource.

For the icing on the cake, SSE supports a technology called connectionless push which allows clients connected on a mobile network to offload the management of the connection to the network carrier to conserve battery life. This makes SSE an all-around attractive option for mobile devices where battery life is a key concern. We wrote a bit more about this in our post comparing SSE, WebSockets, and HTTP streaming if you’d like to learn more.

Authentication and security

Like any web resource, an event stream might be private or tailored for a specific user.

Since EventSource initiates the connection with an HTTP request, the browser automatically sends the session cookies along with the request, making it possible for the server to authenticate and authorise the user.

A common problem developers run into with EventSource is that their apps use tokens instead of cookies and there isn’t an obvious way to pass this token along with the initial request.

One option would be to pass the token in the query string but sending sensitive data in a query string is not generally recommended due to security concerns.

The only accepted practices are to use a third-party library like EventSource by Yaffle or implement your own EventSource client. According to a developer from the Chrome team, unfortunately, EventSource is unlikely to ever support tokens natively.
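To give a feel for what implementing your own client involves, here is a simplified sketch that opens the stream with `fetch` so an `Authorization` header can be sent. The function names and the Bearer scheme are assumptions for illustration. The sketch also assumes the stream uses `\n` line endings, and it leaves out the reconnection and Last-Event-ID handling that EventSource gives you for free.

```javascript
// Parse complete text/event-stream data into event objects.
// Simplified: handles the id, event, and data fields and skips comments.
function parseEvents(text) {
  const events = [];
  for (const block of text.split("\n\n")) {
    const event = { id: undefined, event: "message", data: [] };
    for (const line of block.split("\n")) {
      if (line === "" || line.startsWith(":")) continue; // blank or comment
      const colon = line.indexOf(":");
      const field = colon === -1 ? line : line.slice(0, colon);
      // A single space after the colon is not part of the value.
      const value = colon === -1 ? "" : line.slice(colon + 1).replace(/^ /, "");
      if (field === "data") event.data.push(value);
      else if (field === "id") event.id = value;
      else if (field === "event") event.event = value;
    }
    // Blocks without any data (for example keep-alive comments) are dropped.
    if (event.data.length > 0) {
      events.push({ ...event, data: event.data.join("\n") });
    }
  }
  return events;
}

// Hypothetical client: read the response body incrementally and hand each
// parsed event to `onEvent`. `fetchImpl` defaults to the global fetch.
async function streamWithToken(url, token, onEvent, fetchImpl = fetch) {
  const response = await fetchImpl(url, {
    headers: { Accept: "text/event-stream", Authorization: `Bearer ${token}` },
  });
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let buffer = "";
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    buffer += decoder.decode(value, { stream: true });
    // Only parse up to the last complete event and keep the rest buffered.
    const end = buffer.lastIndexOf("\n\n");
    if (end === -1) continue;
    parseEvents(buffer.slice(0, end)).forEach(onEvent);
    buffer = buffer.slice(end + 2);
  }
}
```

The amount of code needed just to cover the basics is a good argument for reaching for an existing library unless you have unusual requirements.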

Server-Sent Events performance

If high throughput and low latency are your primary performance considerations, SSE holds its own against any option out there.

To give you some idea, Shopify uses SSE to power their realtime data visualisation product. While they don’t reveal the specific throughput, they explain they ingested 323 billion rows of data in a four day window.

Our customer Split uses SSEs with our realtime platform to send more than one trillion events per month with an average global latency of less than 300 milliseconds.

We mention this only to give you an idea of what’s possible with the right platform.

Scaling Server-Sent Events

SSE can theoretically scale to an infinite number of connections. However, the scalability of SSE in practice depends on several factors including the hardware resources available, efficiency of your server implementation, and the wider system design and architecture.

A Principal Staff Software Engineer at LinkedIn wrote about their team’s experience scaling SSE. After seriously beefing up their server hardware and tinkering with kernel parameters, they managed to support hundreds of thousands of persistent connections on one machine.

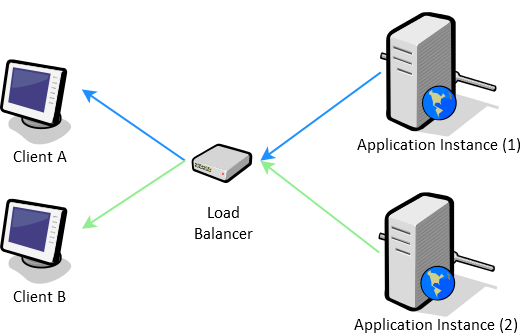

Regardless of the extent to which you optimise your code, eventually you’ll reach the limit of any one server and arrive at the need to scale out horizontally.

Using load balancing techniques with a proxy like Nginx, you can add more servers and distribute the load across them to ensure new connections are handled efficiently.

Scaling SSEs through load balancing is a more manageable option compared to scaling WebSockets. However, it’s important to note that this still requires careful planning.

In particular, maintaining data consistency will always become more complex when multiple server instances are involved.

Tomasz Pęczek gives a good example.

Imagine, for instance, an event resulting from an operation on instance 1 needs to be broadcast to all clients (so also client B):

Illustration by Tomasz Pęczek

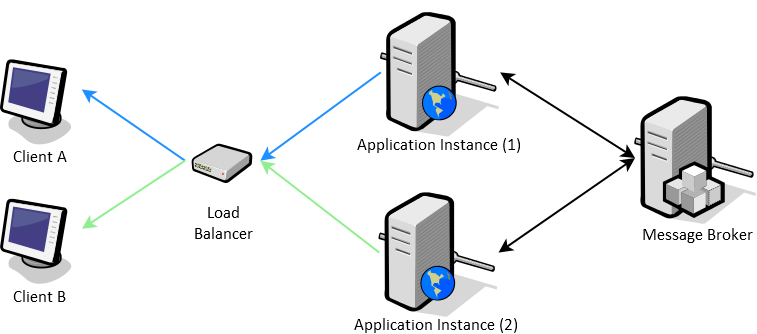

To solve this problem, you need to introduce an additional component: A shared resource like a message broker to send the message to all subscribers.

Illustration by Tomasz Pęczek

Using a broker like Redis, each instance can subscribe to the broker. The broker sends the message to all subscribers and they send it to one, some, or all clients.
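The fan-out described above can be sketched as follows. The `Broker` class is an in-memory stand-in for a real broker such as Redis pub/sub, and both class names are hypothetical. A production setup would use a broker client library and real SSE connections instead of plain functions.

```javascript
// Stand-in for a message broker such as Redis pub/sub (an assumption for
// illustration, since everything here lives in one process).
class Broker {
  constructor() {
    this.subscribers = new Set();
  }
  subscribe(handler) {
    this.subscribers.add(handler);
  }
  publish(message) {
    for (const handler of this.subscribers) handler(message);
  }
}

// One server instance: tracks its own SSE clients and relays every broker
// message to them.
class Instance {
  constructor(broker) {
    this.broker = broker;
    this.clients = new Set(); // each client is a function that writes to its stream
    broker.subscribe((message) => {
      for (const send of this.clients) send(message);
    });
  }
  connect(send) {
    this.clients.add(send);
  }
  // An operation on this instance publishes to the broker rather than writing
  // to its own clients directly, so clients on other instances receive it too.
  broadcast(message) {
    this.broker.publish(message);
  }
}
```

With two instances sharing one broker, a `broadcast` call on instance 1 reaches client B on instance 2, which is exactly the case that fails without the shared component.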

What started as a simple one-way stream has now evolved into a more complicated overall system architecture that requires careful design and documentation to ensure scalability without compromising on availability, latency, or maintainability. And that is before we get to the challenges of achieving message durability while scaling out.

Server-Sent Events and Ably

Ably (hello!) is a platform used by companies like HubSpot and Split to reliably deliver realtime updates with the minimum possible latency.

While many of our customers use the official Ably SDK based on WebSockets, we built an SSE adapter for anyone seeking a lightweight method of streaming events using the open SSE standard.

Our experience building the adapter inspired this post, and since you’re interested in efficient realtime updates as well, we thought you might like to know a bit more about it in just a few lines.

All you need to do is plug the Ably adapter into the EventSource client like so:

```javascript
const apiKey = 'xVLyHw.U_-pxQ:iPETgh3Vm16oUms5JgYXCYgPV8naidwIWwdhwBfI3zM'
const url = 'https://realtime.ably.io/event-stream?channels=myChannel&v=1.2&key=' + apiKey
const eventSource = new EventSource(url)

eventSource.onmessage = event => {
  const message = JSON.parse(event.data)
  console.log('Message: ' + message.name + ' - ' + message.data)
}
```

On the server, you can now multicast or broadcast events by publishing an event through Ably.

Essentially what you’re doing here is publishing events through the Ably infrastructure and subscribing to them on the other side. In between, we make sure the message takes the most efficient route through the network to minimise latency.

Should the client disconnect, Ably will work in tandem with EventSource to read the automatically-sent Last-Event-ID header and resume the stream where the user left off. And since Ably replicates the event data in at least three different data centres, you can be sure the messages will get where they’re going, even in the face of unexpected failures or outages.

You can check out the documentation, tutorials, or read more about how our customers use SSE and Ably to deliver event-driven streams to millions of users.

Server-Sent Events FAQ

When researching this article, we saw some of the same questions about SSE keep popping up. Some questions had incomplete answers while others lacked an answer at all. That’s why we’re finishing this post off strong with a list of commonly-asked questions and answers.

What is the difference between SSE and Server Push?

Since SSEs are a means to push data from the server to the client, server push sounds like it might do the same thing.

This is not the case.

While SSE provides a way for the server to send events and arbitrary data to the client when it chooses, Server Push is a feature to allow the server to proactively send (preload) resources like images, CSS files, and other dependencies needed by the page instead of waiting for them to be requested.

Said another way, SSE is a way to send realtime updates whereas Server Push is a resource loading optimisation technique that serves a similar purpose to inlining.

You can read more about server push here.

Are SSEs a replacement for HTTP streaming?

HTTP streaming and SSE are both realtime update methods. They even follow the same paradigm where the response is delivered incrementally over a long-lived connection.

The key difference here is that HTTP streaming does not follow an open standard, nor does it have a built-in library. Therefore, it’s up to you to negotiate the connection, read the incoming binary stream, and decode the data in a consistent manner across implementations.

Free from worrying about all the low-level details, applications using SSE get to focus on building features which is generally a big productivity plus.

That being said, HTTP streaming is more flexible.

If our previous discussion about the challenges of using an authentication token with EventSource resonated with you, HTTP streaming might be of interest (we have an adapter for that too).

With HTTP streaming, you forgo the convenient stream resumption features of SSE, but you get more control if you want to, for example, send a token with your opening request. In fact, this is exactly what open source libraries like EventSource by Yaffle and fetch-event-source by Azure are doing under the hood while also following the SSE standard.

Is Server-Sent Events suitable for chat?

Because we’re the team behind Ably, we’ve probably thought more deeply about what it takes to build chat than anyone else.

Long story short, we do not recommend that you use SSE for your chat experience.

This could be a little bit surprising since, earlier in this post, we referenced a retrospective by LinkedIn where the team describes adapting SSE for chat. It’s worth pointing out that, in the same post, they say:

“WebSockets is a much more powerful technology to perform bi-directional, full-duplex communication, and we will be upgrading to that as the protocol of choice when possible.”

Implementing a basic chat experience with SSE is not inconceivable, but it’s not recommended either.

To power features like typing indicators and online presence, information really has to flow simultaneously in both directions.

As the requirements become more complex and reactions start flying in (edited messages too), read receipts tick over, and bots join the party, all of a sudden there’s a lot going on and the overhead of using separate channels to subscribe and post data will definitely become noticeable to the user.

If chat is on your mind, we’ve written about how to build a chat app then scale it elsewhere on the Ably blog.
