Scaling SignalR: Strategies, challenges, and scalable alternatives

SignalR simplifies realtime communication in .NET applications — but scaling SignalR is a different story. If you’re building for a global audience, supporting thousands of concurrent users, or facing performance issues, it’s essential to understand how SignalR scales, where its limits are, and what your options are going forward.

This guide explores SignalR’s scaleout architecture, common scalability bottlenecks, and where cloud-native alternatives can offer greater reliability and flexibility.

How SignalR scales today

Out of the box, SignalR is designed for simple, server-bound deployments. Each server tracks its own connections in memory. To scale out across multiple servers (e.g. behind a load balancer), you must coordinate connection state and message delivery, typically using one of the following:

Redis backplane: Synchronizes messages across SignalR servers when using ASP.NET Core SignalR (the open-source library)

Azure SignalR Service: Offloads infrastructure and state-sharing to Microsoft’s managed platform

Without this coordination, messages sent from one instance will not reach clients connected to another — breaking the realtime experience.

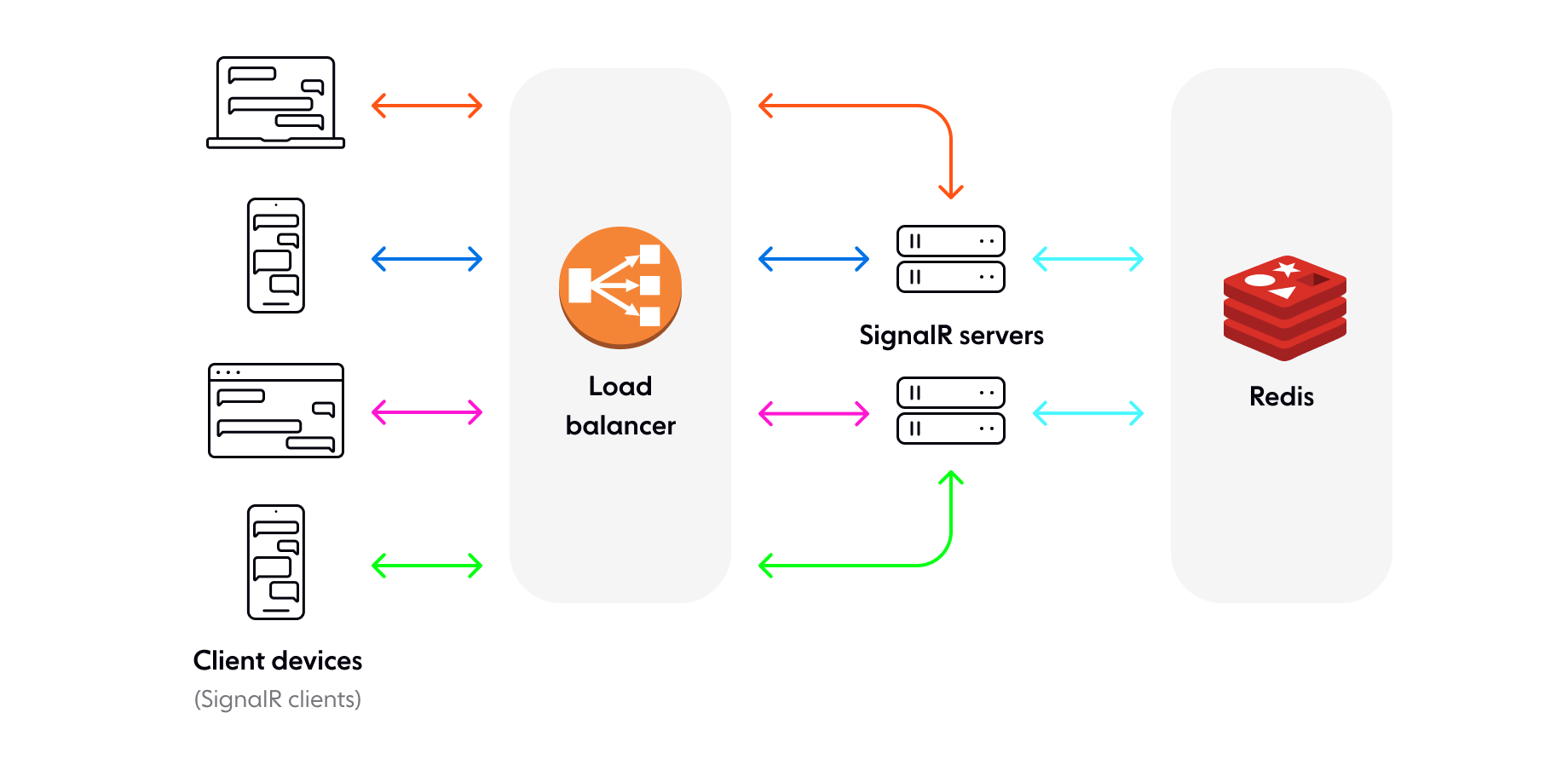

Scaling with Redis backplane

When paired with a load balancer (or multiple load balancers, depending on the size and complexity of your system), a Redis backplane allows multiple SignalR servers to publish and receive messages through a shared Redis channel. This enables message broadcast across all connected clients, regardless of which server they’re on.
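To make the fan-out concrete, here is a minimal in-process simulation. It is not how the real Redis backplane is implemented: `Backplane` and `HubServer` are hypothetical stand-ins, with `Backplane` playing the role of a Redis pub/sub channel and each `HubServer` only knowing about its own clients. (In an actual ASP.NET Core app, the Redis backplane is typically enabled by calling `AddStackExchangeRedis` on the builder returned by `AddSignalR`.)

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable


class Backplane:
    """Hypothetical in-memory stand-in for a Redis pub/sub channel."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Callable[[str], None]) -> None:
        self._subscribers[channel].append(handler)

    def publish(self, channel: str, message: str) -> None:
        # Every subscribed server receives the message, like a Redis PUBLISH.
        for handler in self._subscribers[channel]:
            handler(message)


class HubServer:
    """A SignalR-like server that only knows about its own connected clients."""

    def __init__(self, name: str, backplane: Backplane | None = None) -> None:
        self.name = name
        self.clients: dict[str, list[str]] = {}  # client id -> messages received
        self.backplane = backplane
        if backplane is not None:
            backplane.subscribe("broadcast", self._deliver_locally)

    def connect(self, client_id: str) -> None:
        self.clients[client_id] = []

    def _deliver_locally(self, message: str) -> None:
        for inbox in self.clients.values():
            inbox.append(message)

    def broadcast(self, message: str) -> None:
        if self.backplane is None:
            # No backplane: only clients on *this* server get the message.
            self._deliver_locally(message)
        else:
            # Backplane: publish once; every server (including this one) fans out locally.
            self.backplane.publish("broadcast", message)


# Two servers sharing a backplane: clients on both receive the broadcast.
shared = Backplane()
server_a, server_b = HubServer("a", shared), HubServer("b", shared)
server_a.connect("alice")
server_b.connect("bob")
server_a.broadcast("hello")
print(server_b.clients["bob"])  # ['hello']

# Two isolated servers: the broadcast never reaches the other server's client.
isolated_x, isolated_y = HubServer("x"), HubServer("y")
isolated_x.connect("carol")
isolated_y.connect("dave")
isolated_x.broadcast("hello")
print(isolated_y.clients["dave"])  # []
```

The second pair of servers reproduces the failure mode described earlier: without a shared channel, a broadcast never leaves the server that sent it.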

⚠️ Challenges with Redis scaleout:

Redis is a central bottleneck: limited throughput under high fan-out can increase latency

No message durability or retries: Redis pub/sub doesn't store or resend messages, so you will have to decide how to handle durability and retries in-house

Redis acts as a single point of failure: Any maintenance window or unexpected downtime will result in lost messages, because your servers can no longer stay in sync

Requires Redis high availability and monitoring: Related to the above, you need to deploy Redis in a highly available configuration and continuously monitor its health, making it a critical part of your realtime infrastructure (rather than a plug-and-play solution for SignalR scaleout)
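The durability problem can be sketched the same way. Redis pub/sub only delivers to subscribers that are connected at the moment of publishing and keeps no backlog, so anything published during an outage simply disappears. The `FireAndForgetChannel` class below is a hypothetical model of those semantics, not a Redis client.

```python
from __future__ import annotations


class FireAndForgetChannel:
    """Hypothetical model of Redis pub/sub semantics: no persistence, no replay."""

    def __init__(self) -> None:
        self.available = True
        self.inboxes: dict[str, list[str]] = {}  # server name -> messages received

    def subscribe(self, server: str) -> None:
        # A late subscriber starts with an empty inbox: earlier messages are not replayed.
        self.inboxes.setdefault(server, [])

    def publish(self, message: str) -> bool:
        if not self.available:
            # Redis is unreachable: the publish fails and nothing is queued for later.
            return False
        for inbox in self.inboxes.values():
            inbox.append(message)
        return True


channel = FireAndForgetChannel()
channel.subscribe("server-a")
channel.subscribe("server-b")
channel.publish("m1")
channel.available = False          # simulated maintenance window or outage
lost = not channel.publish("m2")   # True: "m2" is gone for good
channel.available = True
channel.subscribe("server-c")      # joins after "m1", so it will never see it
channel.publish("m3")
print(channel.inboxes)
# {'server-a': ['m1', 'm3'], 'server-b': ['m1', 'm3'], 'server-c': ['m3']}
```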

Redis works well for internal tools or modest scale, but often struggles with global delivery or large fan-out patterns (e.g. chat, gaming, live dashboards).

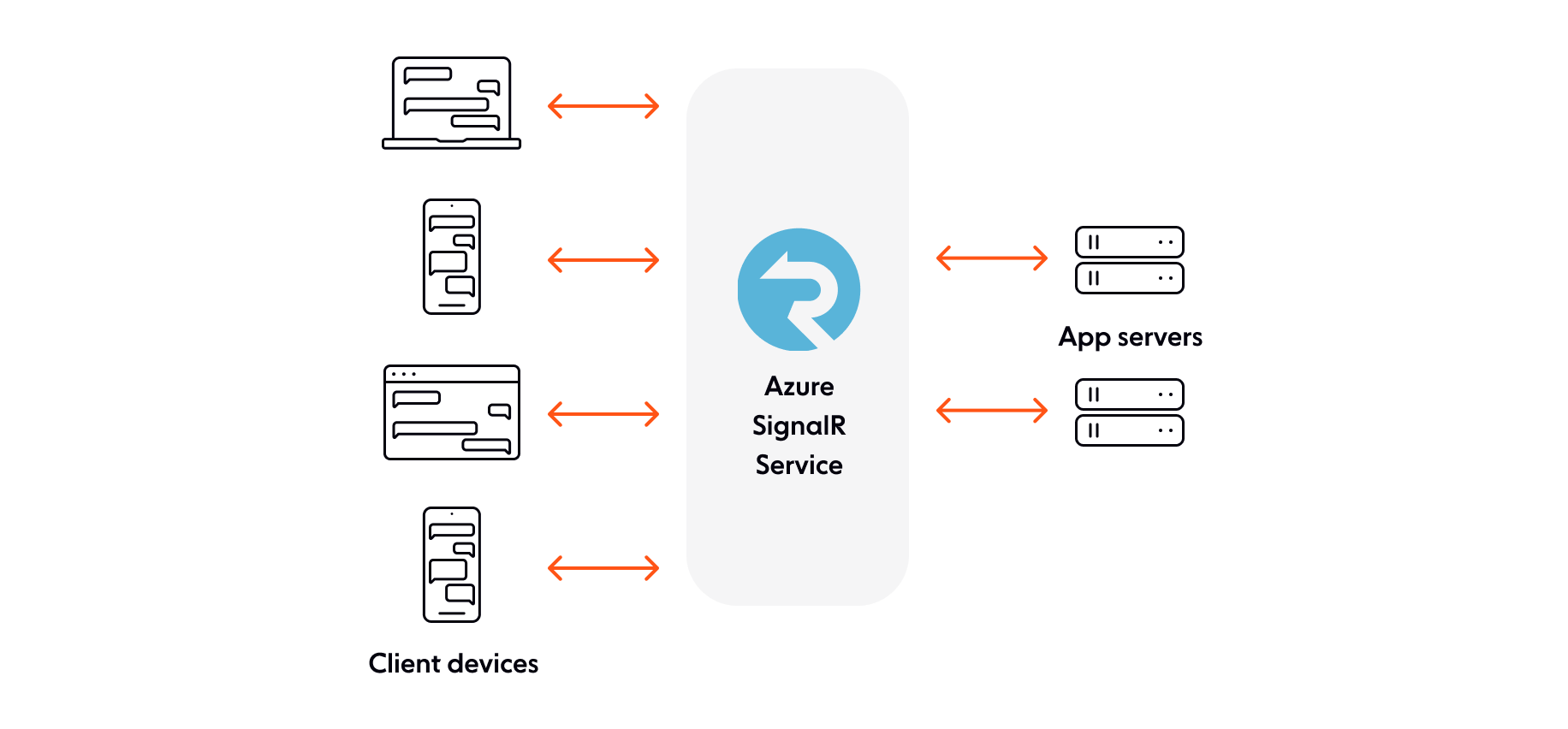

Scaling with Azure SignalR Service

Azure SignalR Service offers a managed way to scale real-time functionality in .NET applications without hosting and maintaining your own infrastructure. It eliminates the need to manually configure Redis backplanes or provision dedicated SignalR hubs, and it’s tightly integrated with the Azure ecosystem.

Azure SignalR Service is a proxy for realtime traffic and also acts as a backplane when you scale out to multiple servers. Each time a client initiates a connection to a SignalR server, the client is redirected to connect to the Service instead. Azure SignalR Service then manages all these client connections and automatically distributes them across the server farm, so each app server only has to deal with a limited number of connections.
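One way to picture the proxy model is a router that owns every client connection and spreads the clients over the app servers. The sketch assumes a least-connections policy purely for illustration; `ServiceProxy` is hypothetical and is not a description of how Azure SignalR Service actually routes traffic internally.

```python
from __future__ import annotations


class ServiceProxy:
    """Hypothetical proxy that owns client connections and spreads them over app servers."""

    def __init__(self, app_servers: list[str]) -> None:
        self.load: dict[str, int] = {name: 0 for name in app_servers}
        self.routes: dict[str, str] = {}  # client id -> app server handling it

    def connect(self, client_id: str) -> str:
        # Least-connections is an assumption for illustration only;
        # ties go to the first server in the list.
        server = min(self.load, key=self.load.__getitem__)
        self.load[server] += 1
        self.routes[client_id] = server
        return server

    def disconnect(self, client_id: str) -> None:
        server = self.routes.pop(client_id)
        self.load[server] -= 1


proxy = ServiceProxy(["app-1", "app-2", "app-3"])
for i in range(10):
    proxy.connect(f"client-{i}")
print(proxy.load)  # {'app-1': 4, 'app-2': 3, 'app-3': 3}
```

Adding another app server to the list spreads the same clients more thinly, and the app servers never hold the raw client sockets themselves, which is the property the managed service is offering.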

Key features include:

No Redis backplane required: Azure SignalR Service replaces the need for a Redis backplane by managing connection state and message routing internally, across multiple servers.

Automatic scaling and load balancing: Azure handles horizontal scaling and load balancing behind the scenes. You don’t need to provision or manage servers — infrastructure scales with usage.

Supports “millions of client connections”: According to Microsoft’s official documentation, Azure SignalR Service is capable of scaling to millions of simultaneous client connections, making it attractive for high-concurrency use cases.

Azure-grade compliance and security: As part of the Azure platform, the service inherits Azure’s compliance standards (such as ISO and SOC certifications and HIPAA compliance) and integrates with Azure Active Directory, managed identities, and role-based access control.

Multi-region support for disaster recovery: While the service runs in a single region at a time, Azure supports deploying instances in multiple regions. This enables disaster recovery and manual or programmatic failover across regions.

Serverless bindings with Azure Functions: Developers can broadcast messages or negotiate connections using serverless Azure Functions, enabling event-driven messaging without managing persistent hubs or hosts.

Integrated monitoring: Basic observability is available via Azure Monitor and App Insights, allowing teams to track connection metrics and function invocations.

While these features make Azure SignalR Service appealing for teams already building within Azure, it’s important to look beyond surface-level scalability and evaluate how it performs under real-world conditions, especially at enterprise scale.

Azure SignalR Service limitations

Despite its promise of scalability, Azure SignalR Service has several important limitations that can impact performance, reliability, and architecture — especially for mission-critical or globally distributed applications.

1. Single-region architecture: Azure SignalR Service is a regional service - meaning your instance runs in a single Azure region.

⚠️ Impact:

Increased latency for global users: If your service is deployed in Europe, but your users are in Australia or Southeast Asia, every message must cross the globe and back. This can create significant delays in time-sensitive use cases like gaming, live collaboration, or financial alerts.

Resiliency is manual: While Azure provides tools to fail over between regions, it doesn’t happen automatically. You’ll need to design and maintain a multi-region topology yourself — including health checks, load balancing, and fallback logic.

Higher cost: You’ll need to pay for idle standby instances in secondary regions to achieve geographic failover, which increases your operational overhead.
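The health-check and fallback logic mentioned above might start out like this minimal sketch. `RegionalEndpoint`, `pick_endpoint`, and the example URLs are hypothetical; in practice the health check would probe each regional instance, and the result would feed your load balancer or client configuration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class RegionalEndpoint:
    name: str      # hypothetical region label
    url: str       # hypothetical endpoint URL
    priority: int  # lower value = preferred


def pick_endpoint(
    endpoints: list[RegionalEndpoint],
    is_healthy: Callable[[RegionalEndpoint], bool],
) -> RegionalEndpoint | None:
    """Return the highest-priority endpoint that passes its health check, or None."""
    for endpoint in sorted(endpoints, key=lambda e: e.priority):
        if is_healthy(endpoint):
            return endpoint
    return None  # every region failed its check: treat this as a full outage


endpoints = [
    RegionalEndpoint("westeurope", "https://weu.example.com", priority=0),
    RegionalEndpoint("southeastasia", "https://sea.example.com", priority=1),
]
regions_down = {"westeurope"}  # pretend the primary region is failing its health checks
chosen = pick_endpoint(endpoints, lambda e: e.name not in regions_down)
print(chosen.name if chosen else "no healthy region")  # southeastasia
```

Everything around this function (probing, caching health results, moving existing connections, failing back) is also yours to build and operate.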

2. Service uptime and SLA limitations: Azure SignalR Service offers an SLA of 99.95% uptime for premium accounts (about 4.4 hours of downtime per year), or 99.9% uptime for standard accounts (about 8.8 hours of downtime per year).
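To sanity-check those downtime figures, the arithmetic is simply the unavailable fraction multiplied by the length of the period. The snippet assumes a 365-day year and an averaged 730-hour month; Azure SLAs are generally measured per month, so the monthly figure is arguably the more useful one when planning.

```python
HOURS_PER_YEAR = 365 * 24              # 8,760 hours (ignores leap years)
HOURS_PER_MONTH = HOURS_PER_YEAR / 12  # 730 hours in an averaged month


def allowed_downtime_hours(sla_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Maximum downtime an uptime SLA permits over the given period."""
    return (1 - sla_percent / 100) * period_hours


for tier, sla in [("Premium", 99.95), ("Standard", 99.9)]:
    yearly_hours = allowed_downtime_hours(sla)
    monthly_minutes = allowed_downtime_hours(sla, HOURS_PER_MONTH) * 60
    print(f"{tier} ({sla}%): {yearly_hours:.2f} h/year, {monthly_minutes:.1f} min/month")
# Premium (99.95%): 4.38 h/year, 21.9 min/month
# Standard (99.9%): 8.76 h/year, 43.8 min/month
```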

⚠️ Impact:

These SLAs may be insufficient for mission-critical systems, especially in healthcare, finance, or emergency response.

There is no built-in redundancy beyond what you architect manually.

Outages can lead to total connection loss and broken real-time functionality for all users until failover is completed.

3. No message delivery guarantees: Azure SignalR Service does not guarantee message delivery. There is no message acknowledgement, no retry mechanism, and no built-in persistence or queueing.

⚠️ Impact:

In high-volume or unstable network conditions, messages can be dropped without notice.

This makes it risky for any use case where reliable event delivery is crucial — such as fraud alerts, trade confirmations, or IoT telemetry.

To mitigate this, you’d need to layer in your own delivery guarantees — for example, by building message queues, using retries, or implementing event logging and reconciliation.
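As a rough illustration of what layering in your own delivery guarantees involves, the sketch below combines acknowledgements, retries, and idempotent (deduplicating) processing. `send_reliably`, `Receiver`, and the scripted `flaky_transport` are hypothetical; a real system would also persist pending messages and back off between attempts.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Receiver:
    seen_ids: set[str] = field(default_factory=set)
    applied: list[str] = field(default_factory=list)

    def handle(self, msg_id: str, payload: str) -> str:
        # Idempotent processing: a retried duplicate is acknowledged again but applied only once.
        if msg_id not in self.seen_ids:
            self.seen_ids.add(msg_id)
            self.applied.append(payload)
        return msg_id  # the acknowledgement


def send_reliably(
    messages: dict[str, str],
    transport: Callable[[str, str], Optional[str]],
    max_attempts: int = 5,
) -> set[str]:
    """Resend unacknowledged messages until each is acked or attempts run out.

    `transport` returns the ack (the message id), or None if the message or its
    ack was lost. Returns the ids that were never acknowledged.
    """
    pending = dict(messages)
    for _ in range(max_attempts):
        if not pending:
            break
        for msg_id, payload in list(pending.items()):
            if transport(msg_id, payload) == msg_id:
                del pending[msg_id]
        # A real sender would wait here, with exponential backoff, before retrying.
    return set(pending)


# Scripted, deterministic "network": m1 is lost once; m2 arrives but its ack is lost once.
receiver = Receiver()
attempts: dict[str, int] = {}


def flaky_transport(msg_id: str, payload: str) -> Optional[str]:
    attempts[msg_id] = attempts.get(msg_id, 0) + 1
    if msg_id == "m1" and attempts[msg_id] == 1:
        return None  # message dropped in transit
    ack = receiver.handle(msg_id, payload)
    if msg_id == "m2" and attempts[msg_id] == 1:
        return None  # delivered, but the ack never made it back
    return ack


unacked = send_reliably({"m1": "debit", "m2": "credit", "m3": "notify"}, flaky_transport)
print(unacked, receiver.applied)  # set() ['credit', 'notify', 'debit']
```

Every message is applied exactly once, but the retried one arrives last, so guaranteed delivery alone does not give you ordering, which is the next limitation.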

4. No message ordering guarantees: Message ordering is not enforced by Azure SignalR Service. If two messages are sent in quick succession, there's no guarantee they’ll arrive in the correct order.

⚠️ Impact:

This can be frustrating for users in chat or collaboration tools, where context depends on sequence.

In transactional or stateful systems, out-of-order messages can cause inconsistencies or even system errors.

Developers would need to add sequencing metadata to each message and handle reordering on the client — a non-trivial task.
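A minimal version of that client-side reordering might look like this. It assumes the sender attaches a hypothetical per-stream sequence number starting at 0 (SignalR does not add one for you), and it holds back any message that arrives before its predecessors.

```python
from __future__ import annotations


class ReorderBuffer:
    """Releases messages strictly in sequence-number order.

    Assumes the sender stamps each message with a per-stream `seq` starting at 0.
    """

    def __init__(self) -> None:
        self.next_seq = 0
        self.held: dict[int, str] = {}  # early arrivals waiting for a gap to fill

    def receive(self, seq: int, payload: str) -> list[str]:
        """Accept one message and return everything that is now deliverable, in order."""
        if seq < self.next_seq or seq in self.held:
            return []  # duplicate of a message already delivered or held: drop it
        self.held[seq] = payload
        ready: list[str] = []
        while self.next_seq in self.held:
            ready.append(self.held.pop(self.next_seq))
            self.next_seq += 1
        return ready


buffer = ReorderBuffer()
print(buffer.receive(1, "world"))  # [] (still waiting for seq 0)
print(buffer.receive(0, "hello"))  # ['hello', 'world']
print(buffer.receive(1, "world"))  # [] (duplicate)
print(buffer.receive(2, "!"))      # ['!']
```

A production version would also need a timeout for gaps that never fill (a lost message would otherwise stall the stream indefinitely) and a cap on how many messages it holds.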

5. Limited quality-of-service controls: Because Azure SignalR Service focuses on raw connection and broadcast capabilities, it doesn’t offer:

Message history

Retry logic

Idempotency

Fine-grained delivery status or control over client-side message behavior

⚠️ Impact: These quality-of-service (QoS) limitations make it harder to build reliable real-time systems where delivery guarantees, integrity, and client control matter.
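Message history is one of the missing pieces that is easy to underestimate. The sketch below shows the basic idea behind a bounded replay buffer that lets a reconnecting client ask for everything after the last message it saw. `ChannelHistory` and its id scheme are hypothetical, and a real implementation would need to store history outside any single server's memory to survive restarts and scale-out.

```python
from __future__ import annotations

from collections import deque


class ChannelHistory:
    """Bounded per-channel history so a reconnecting client can catch up."""

    def __init__(self, capacity: int = 100) -> None:
        self.next_id = 0
        # Only the most recent `capacity` (id, payload) pairs are kept.
        self.messages: deque[tuple[int, str]] = deque(maxlen=capacity)

    def append(self, payload: str) -> int:
        msg_id = self.next_id
        self.messages.append((msg_id, payload))
        self.next_id += 1
        return msg_id

    def since(self, last_seen_id: int) -> list[str] | None:
        """Messages after `last_seen_id` (-1 if none seen), or None if some were evicted."""
        oldest_kept = self.messages[0][0] if self.messages else self.next_id
        if last_seen_id + 1 < oldest_kept:
            return None  # gap too large: the client must fall back to a full resync
        return [payload for msg_id, payload in self.messages if msg_id > last_seen_id]


history = ChannelHistory(capacity=3)
for text in ["a", "b", "c", "d"]:
    history.append(text)   # "a" (id 0) is evicted once "d" arrives
print(history.since(1))    # ['c', 'd']
print(history.since(-1))   # None: the client missed "a", which is gone
```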

Common SignalR scalability issues

Even when SignalR is deployed successfully in a single-server environment, teams often encounter new challenges as they try to scale to support more users, more regions, or higher message throughput.

Below are the most common issues that arise when scaling SignalR - especially in production or realtime customer-facing systems.

🔁 Messages don’t reach all clients

What’s happening: SignalR connections are stateful and bound to the server that accepted them. In a scaled-out environment (e.g. multiple app instances behind a load balancer), each server manages only its own connections. If one server broadcasts a message, clients connected to other servers won't receive it - unless a Redis backplane or Azure SignalR Service is used.

Why this matters: Developers often discover this only after scaling up, leading to message loss and broken user experiences.

What to watch for:

Users missing updates in chats, dashboards, or live feeds

Inconsistent behavior between users in the same session or room

Debug logs showing successful broadcasts, but no delivery

📉 Performance degrades under load

What’s happening: SignalR uses server memory and threads to maintain persistent connections. Under high concurrency - especially without WebSockets - the server can become overloaded with long polling or SSE connections.

Why this matters: Unlike stateless HTTP requests, SignalR requires keeping connections open, which limits scalability without infrastructure tuning.

What to watch for:

Increased latency or CPU/memory usage as concurrent users grow

Application pool recycling or crashes under load

Transport fallbacks to long polling increasing server strain

🌍 High latency for global users

What’s happening: SignalR does not offer built-in edge acceleration or global distribution. All traffic flows through the region where your servers (or Azure SignalR Service) are deployed.

Why this matters: In time-sensitive applications - games, trading platforms, collaborative tools - even a few hundred milliseconds of latency can ruin the experience.

What to watch for:

Lag or message delays reported by users far from your data center

Need for multi-region support but no native failover or routing

Poor real-time UX despite low server load
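Physics alone sets a floor on that latency. The sketch below estimates the great-circle distance between two cities and the minimum round trip through optical fiber, assuming a rule-of-thumb signal speed of about 200,000 km/s. The coordinates are approximate and real network paths are longer, so actual latency will be higher.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_SPEED_KM_PER_S = 200_000  # rule of thumb: roughly 2/3 of the speed of light in vacuum


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over a straight fiber path of this length."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Approximate coordinates: a user in Sydney, a deployment in Frankfurt.
distance = great_circle_km(-33.87, 151.21, 50.11, 8.68)
print(f"{distance:,.0f} km, at least {min_round_trip_ms(distance):.0f} ms round trip")
```

For this pair of cities the estimate comes out at roughly 16,500 km and a floor of about 165 ms per round trip, before any routing detours, server processing, or retransmissions.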

🧪 Transport fallback and silent degradation

What’s happening: SignalR attempts to use WebSockets but silently falls back to Server-Sent Events or long polling if WebSockets aren’t supported by the client or infrastructure.

Why this matters: These fallback transports are less efficient and more resource-intensive - but developers often don’t realize a fallback has occurred until performance issues arise.

What to watch for:

Unexplained latency or server load spikes

Users behind corporate proxies or restrictive networks experiencing degraded performance

Poor performance on mobile or older browsers

🔐 Limited visibility into connection health

What’s happening: SignalR provides minimal built-in observability. You don’t get native metrics for connection duration, disconnect reasons, dropped messages, or transport type usage.

Why this matters: Troubleshooting issues at scale becomes reactive and inefficient, and it’s hard to prove service reliability or diagnose regional behavior differences.

What to watch for:

Difficulty answering questions like “How many clients are using long polling?”

Gaps in your monitoring dashboard for real-time behavior

No way to proactively detect dropped or stalled connections
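Filling these gaps usually means building some tracking yourself. The class below is a hypothetical in-memory sketch: the transport names mirror ASP.NET Core's `HttpTransportType` values, and timestamps are passed in explicitly so the logic is easy to test. In a real deployment you might call these methods from your hub's `OnConnectedAsync` and `OnDisconnectedAsync` overrides plus a heartbeat message, and export the numbers to your monitoring system rather than keep them in process.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class ConnectionRecord:
    transport: str  # e.g. "WebSockets", "ServerSentEvents", "LongPolling"
    connected_at: float
    last_seen: float


class ConnectionMetrics:
    """Hypothetical tracker for transport usage, durations, disconnects, and stalls."""

    def __init__(self, stall_after_s: float = 30.0) -> None:
        self.stall_after_s = stall_after_s
        self.active: dict[str, ConnectionRecord] = {}
        self.disconnect_reasons: Counter[str] = Counter()
        self.durations_s: list[float] = []

    def on_connected(self, conn_id: str, transport: str, now: float) -> None:
        self.active[conn_id] = ConnectionRecord(transport, now, now)

    def on_heartbeat(self, conn_id: str, now: float) -> None:
        if conn_id in self.active:
            self.active[conn_id].last_seen = now

    def on_disconnected(self, conn_id: str, reason: str, now: float) -> None:
        record = self.active.pop(conn_id, None)
        if record is not None:
            self.durations_s.append(now - record.connected_at)
            self.disconnect_reasons[reason] += 1

    def transport_counts(self) -> Counter[str]:
        """Answers: how many clients are using long polling right now?"""
        return Counter(r.transport for r in self.active.values())

    def stalled(self, now: float) -> list[str]:
        """Connections with no heartbeat inside the stall window."""
        return [cid for cid, r in self.active.items() if now - r.last_seen > self.stall_after_s]


metrics = ConnectionMetrics(stall_after_s=30)
metrics.on_connected("c1", "WebSockets", now=0)
metrics.on_connected("c2", "LongPolling", now=0)
metrics.on_connected("c3", "LongPolling", now=5)
metrics.on_heartbeat("c1", now=40)
metrics.on_disconnected("c3", reason="client_closed", now=20)
print(metrics.transport_counts())  # one WebSockets and one LongPolling connection remain
print(metrics.stalled(now=45))     # ['c2']
```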

When SignalR stops scaling - what are your options?

If you’ve hit a wall with SignalR’s architecture, or your team is spending more time maintaining infrastructure than building features, it may be time to consider alternatives.

Scalable pub/sub systems like Ably offer:

Global edge-accelerated WebSocket infrastructure

Delivery guarantees, ordering, and retries

Built-in observability and monitoring

SDKs for 25+ languages and platforms

They’re designed to support high-concurrency use cases with minimal operational effort.

Wrapping it up

We hope this article helps you understand how to scale SignalR, and sheds light on some of the key related challenges. As we have seen, scaling the open-source version, ASP.NET Core SignalR, involves managing a complex infrastructure, with many moving parts. The managed cloud version, Azure SignalR Service, removes the burden of hosting and scaling SignalR yourself, but it comes with its own limitations, which we discussed in the previous sections.

If you’re planning to build and deliver realtime features at scale, it is ultimately up to you to assess whether SignalR is the best choice for your specific use case, or whether a SignalR alternative is a better fit.
