What is Event Streaming? A Deep Dive

Event streaming is widespread and growing by the day. Engineers, architects, and executives use the term when talking about their systems. There are many conference talks, blog posts, and books on the topic. But like many things in our industry, it's an overloaded term. What does it mean? When should I use it? What are its trade-offs?

This article is an event streaming deep dive. We start from its elemental components, describe its use cases and alternatives, and review some open-source and commercial implementations.

An overview of event streaming

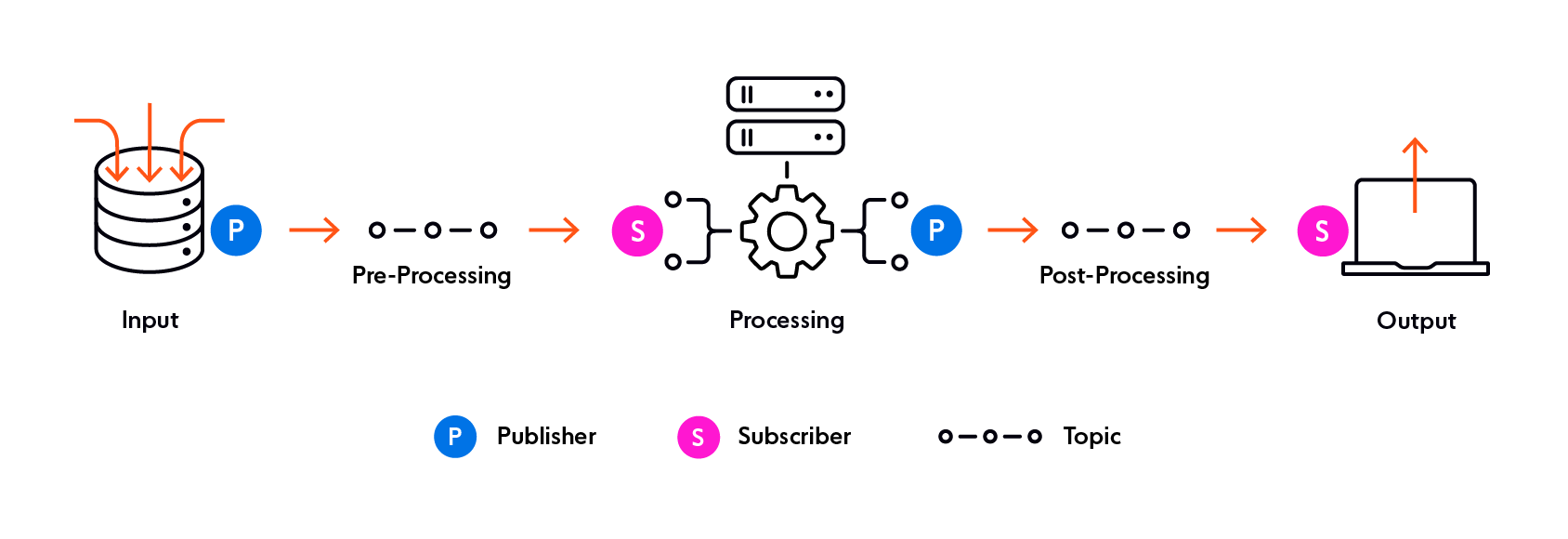

Event streaming is an implementation of the publish/subscribe architecture pattern (pub/sub) with certain specific characteristics. The pub/sub pattern involves the following elements:

Message: discrete data a publisher wishes to communicate to a subscriber.

Publisher: puts messages in a message broker in a particular topic.

Subscriber: reads messages from a message broker's particular topic.

Message broker: a system with the ability to store messages from publishers and make them available to subscribers.

Channel or Topic: a subset of events that share a category. The terms topic and channel are used interchangeably in the industry. In this blog post, we'll refer to these as topics.

What makes event streaming particular as an implementation of pub/sub is that:

Messages are events.

Messages (within a topic) are ordered, typically based on a relative or absolute notion of time.

Subscribers/Consumers can subscribe to read events from a particular point in the topic.

The messaging framework supports temporal durability. Events are not removed once they are "processed". Instead, the removal happens after a certain amount of time, such as "after 1 hour".
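The characteristics above can be sketched with a small in-memory topic. This is only an illustration, not how any particular broker is implemented: the `TopicLog` class, its method names, and the use of integer offsets as the "point in the topic" are all assumptions made for the example.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TopicLog:
    """Hypothetical in-memory topic: an append-only, time-stamped event log."""
    retention_seconds: float
    _events: list = field(default_factory=list)  # (offset, timestamp, event)
    _next_offset: int = 0

    def publish(self, event, now=None):
        """Append an event and return the offset it was assigned."""
        now = time.time() if now is None else now
        offset = self._next_offset
        self._events.append((offset, now, event))
        self._next_offset += 1
        return offset

    def read_from(self, offset):
        """Return events at or after `offset`, in publication order."""
        return [e for (o, _, e) in self._events if o >= offset]

    def expire(self, now=None):
        """Drop events older than the retention period, processed or not."""
        now = time.time() if now is None else now
        cutoff = now - self.retention_seconds
        self._events = [rec for rec in self._events if rec[1] >= cutoff]


log = TopicLog(retention_seconds=3600)
log.publish({"message_type": "user_signup"}, now=0)
log.publish({"message_type": "user_login"}, now=1800)
log.publish({"message_type": "user_logout"}, now=3000)

late_reader = log.read_from(1)   # a subscriber that starts at offset 1
log.expire(now=4000)             # events older than one hour are removed
remaining = log.read_from(0)
```

Reading does not remove anything from the log. Only `expire` does, and it is driven by time rather than by whether a subscriber has processed the event.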

What is an event?

An event stores information about a state change in the system that has "business meaning".

An event does not itself describe what processing must take place as part of a subscription. Below, we have two examples of messages that might be added to a message broker:

1. Not an event

{
  "message_type": "send_welcome_email",
  "to": "[email protected]",
  "content": "Hi, thanks for..."
}

2. Event

{
  "message_type": "user_signup",
  "email": "[email protected]",
  "content": "Hi, thanks for..."
}

The difference is that the first message specifically describes what should happen. It is reasonable to conclude an email needs to be sent by the subscriber to "process" the message.

The second message is subscriber agnostic. Any number of subscribers could read that message and take different actions. One subscriber could send a welcome email, and another subscriber might add [email protected] to a CRM system.

Events represent a change of state, but they do not specify how that state change should modify the system. Instead, that is up to consumers to implement.
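As a rough sketch of that idea, the two subscribers below react to the same signup event in different ways. The function names, the `outbox` list, the `crm_contacts` set, and the `user@example.com` address are hypothetical stand-ins for a real mail service, CRM, and user.

```python
# A single event describing what happened, not what to do about it.
signup_event = {"message_type": "user_signup", "email": "user@example.com"}


def welcome_email_subscriber(event, outbox):
    """Reacts to a signup by queuing a welcome email."""
    if event["message_type"] == "user_signup":
        outbox.append({"to": event["email"], "content": "Hi, thanks for..."})


def crm_subscriber(event, crm_contacts):
    """Reacts to the same signup by recording the contact in a CRM."""
    if event["message_type"] == "user_signup":
        crm_contacts.add(event["email"])


outbox, crm_contacts = [], set()
welcome_email_subscriber(signup_event, outbox)
crm_subscriber(signup_event, crm_contacts)
```

Neither subscriber needs to change if a third one is added later, because the event itself carries no processing instructions.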

What is an event stream?

An event stream is a sequence of events, meaning order between events matters. Events flow through the system in a specific direction: from producers to consumers.

As events occur in producers, they are published to a topic. A topic is typically a categorization for a particular type of event. Subscribers interested in events of a particular category subscribe to that topic.

As events are published to the topic, the broker identifies the subscribers for the topic and makes the events available to them. Publishers and subscribers need not know about each other.

Protocols and delivery models

The set of rules that components of a system follow to communicate with each other is known as the protocol. In our industry, there are many choices for implementing event-driven architectures.

An event is always published by a single publisher, but it might need to be processed by multiple subscribers. Handling subscriber failures and determining which subscriber to deliver messages to is a big part of the protocol implementation. Because of that, one of the most important factors of these protocols is how they handle broker/subscriber communication.

Connection initiation

The connection between broker and subscriber can be either subscriber initiated or broker initiated.

Broker initiated models require the broker to know about existing subscribers. This can be achieved either through static registration (e.g., Webhooks) or a service discovery mechanism.

In subscriber initiated models, subscribers need to be aware of the broker's address. These models are more convenient when there are many dynamic subscribers, especially in environments where service discovery is not feasible (e.g., a WAN).

Push vs. pull model

In the pull model, subscribers send a message to the broker when they want to receive events, and only then does the broker send them data. Subscribers need to maintain state to know what topics exist and what data to ask for (for example, where to read from in a particular topic). Kafka Consumers, for instance, pull data from brokers.

In the push model, the broker determines when to send data to subscribers based on its own logic. Subscribers do not need to maintain state or implement special logic to coordinate amongst themselves. For example, SSE and Webhooks use a push model.

Some platforms like Google Pub/Sub and Amazon Kinesis Data Streams support both models.
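The difference between the two models can be sketched as follows. The `Broker` and `PullConsumer` classes and their methods are hypothetical and do not mirror the API of Kafka or any other product. The point is only where the read position lives and who decides when data moves.

```python
class Broker:
    """Hypothetical broker holding one ordered list of events per topic."""

    def __init__(self):
        self.topics = {}            # topic name -> list of events
        self.push_subscribers = {}  # topic name -> list of callbacks

    def publish(self, topic, event):
        self.topics.setdefault(topic, []).append(event)
        # Push model: the broker decides when to deliver (here: immediately).
        for callback in self.push_subscribers.get(topic, []):
            callback(event)

    def subscribe_push(self, topic, callback):
        self.push_subscribers.setdefault(topic, []).append(callback)

    def fetch(self, topic, offset, max_events):
        """Pull model: return up to `max_events` events starting at `offset`."""
        return self.topics.get(topic, [])[offset:offset + max_events]


class PullConsumer:
    """Keeps its own read position, as pull-based subscribers must."""

    def __init__(self, broker, topic):
        self.broker, self.topic, self.offset = broker, topic, 0

    def poll(self, max_events=10):
        events = self.broker.fetch(self.topic, self.offset, max_events)
        self.offset += len(events)
        return events


broker = Broker()
pushed = []
broker.subscribe_push("signups", pushed.append)
puller = PullConsumer(broker, "signups")

broker.publish("signups", "event-1")
broker.publish("signups", "event-2")
first_poll = puller.poll(max_events=1)
second_poll = puller.poll()
```

The push subscriber holds no state at all, while the pull consumer controls its own pace through `max_events` and remembers its offset between polls.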

Backpressure

Whenever a streaming pattern is used, events can be published faster than subscribers can process them. If that occurs, newly arrived events are typically added to a buffer, which can be in memory or durable. If the buffer's capacity is exhausted, events are dropped using a predefined policy (FIFO, LIFO, etc.).

Regardless of the action taken, the system needs a mechanism to let the broker know that consumers cannot currently process more events. Otherwise, either consumers or downstream services would suffer from resource exhaustion. This mechanism is commonly known as backpressure.

When consumers use a pull-based mechanism, the most common backpressure approach is pulling for events only when no events are actively being processed. This ensures systems downstream from the broker are not accumulating a backlog.

Push-based systems need a mechanism for subscribers to let the broker know that they cannot handle new events, such as sending an error instead of a normal ACK or temporarily pausing delivery.

In addition to applying backpressure, many systems are also architected to increase their consumption and processing capacity when resource exhaustion is near. This ability to scale dynamically, combined with backpressure, is fundamental. It prevents the system from suffering issues and/or outages and gives it time to provision capacity to handle the increased publishing rate.
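A minimal sketch of a bounded buffer with two of the behaviors described in this section: rejecting new events (a stand-in for returning an error instead of an ACK) and dropping the oldest event once capacity is exhausted. The `BoundedBuffer` class and the policy names are assumptions made for this example.

```python
from collections import deque


class BoundedBuffer:
    """Hypothetical subscriber-side buffer with a fixed capacity."""

    def __init__(self, capacity, drop_policy="reject"):
        self.capacity = capacity
        self.drop_policy = drop_policy  # "reject" or "drop_oldest"
        self.events = deque()
        self.dropped = 0

    def offer(self, event):
        """Accept an event; return False to signal 'slow down' to the broker."""
        if len(self.events) < self.capacity:
            self.events.append(event)
            return True
        if self.drop_policy == "drop_oldest":
            self.events.popleft()
            self.events.append(event)
            self.dropped += 1
            return True
        return False  # stands in for an error response instead of an ACK

    def take(self):
        """Consumer side: remove and return the oldest buffered event."""
        return self.events.popleft()


buffer = BoundedBuffer(capacity=2)
acks = [buffer.offer(n) for n in (1, 2, 3)]  # third offer exceeds capacity
buffer.take()                                # consumer frees one slot
retry_ack = buffer.offer(3)                  # broker retries successfully

lossy = BoundedBuffer(capacity=2, drop_policy="drop_oldest")
for n in (1, 2, 3):
    lossy.offer(n)
```

With the "reject" policy no event is lost, but the broker has to hold and retry it. With "drop_oldest" the broker is never slowed down, at the cost of losing data.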

Message delivery

Another essential aspect of technologies implementing the pub/sub pattern is determining which subscribers get each event. The options are:

All subscribers to a topic get all events for the topic, which is useful for use cases where there is no shared state across consumers. Chat applications running on client devices are good fits for this model. Event processing requires updating different states (that of the chat app in client devices) whenever a new chat message is sent. For example, MQTT uses this model.

Only one of the topic subscribers gets an event: This model is commonly used for cases when event processing involves shared state, as multiple subscribers processing an event would result in inconsistencies. A common example would be subscribers that send an email when an event occurs (like a new user sign up). If multiple subscribers were running and all subscribers processed the event, multiple emails would be sent.

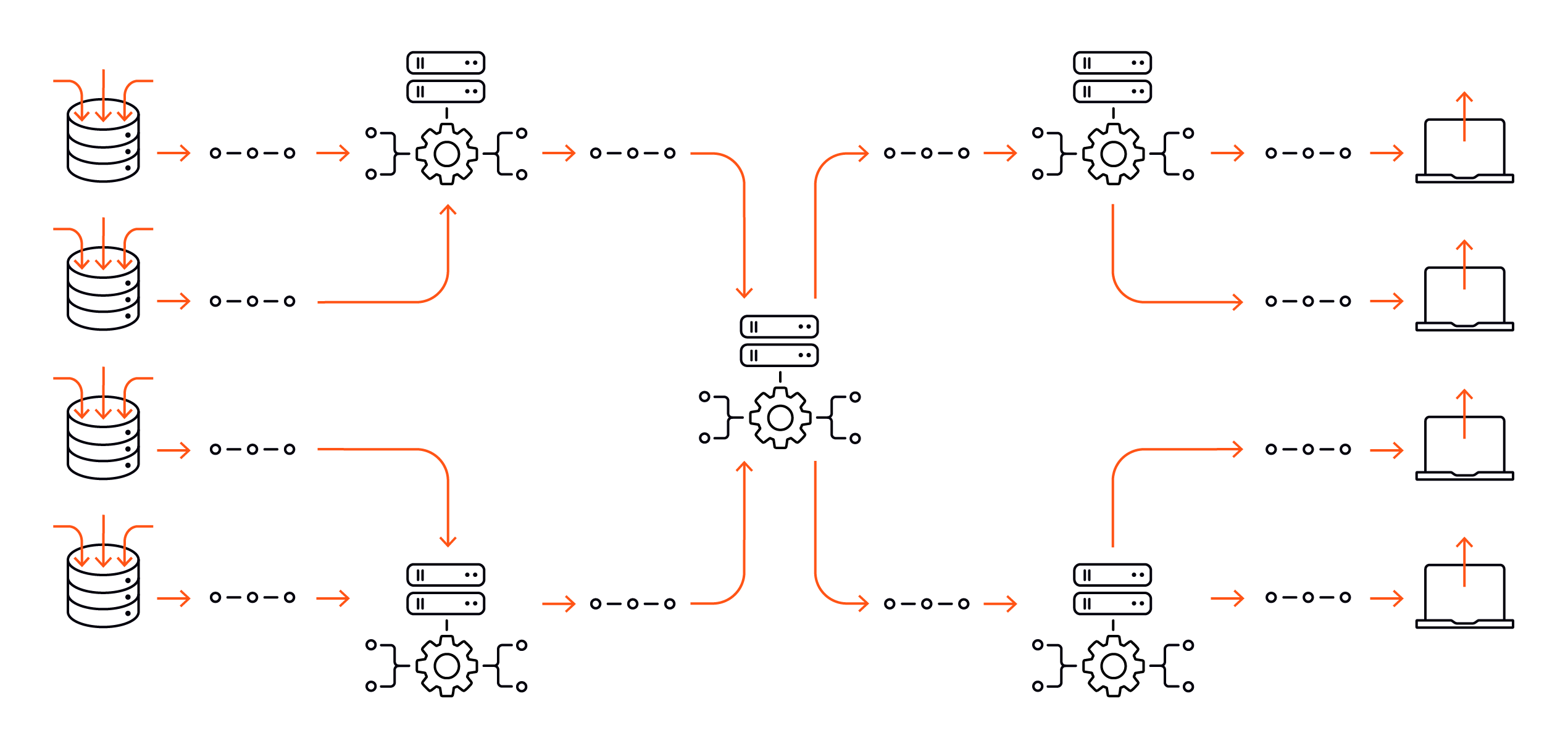

Subscribers are organized into groups: every group gets each event, but only one subscriber within each group receives it. The benefit is that multiple processing actions can occur at different points in time, and each group can run many instances of its subscriber type to scale processing capacity. A drawback compared to the previous models is that it requires additional coordination mechanisms between brokers and consumers for group membership and group message processing. Kafka Consumer Groups support this model.
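The group-based model can be sketched as below. The `GroupedTopic` class is hypothetical, and the round-robin choice of group member is an assumption for the example. Real brokers typically use other assignment strategies, such as partition ownership.

```python
class GroupedTopic:
    """Hypothetical topic that delivers each event once per subscriber group."""

    def __init__(self):
        self.groups = {}    # group name -> list of subscriber callbacks
        self.counters = {}  # group name -> number of events delivered so far

    def subscribe(self, group, callback):
        self.groups.setdefault(group, []).append(callback)
        self.counters.setdefault(group, 0)

    def publish(self, event):
        # Every group sees the event, but only one member of each group does.
        for group, members in self.groups.items():
            member = members[self.counters[group] % len(members)]
            self.counters[group] += 1
            member(event)


email_a, email_b, crm = [], [], []
topic = GroupedTopic()
topic.subscribe("email", email_a.append)  # two instances share the email work
topic.subscribe("email", email_b.append)
topic.subscribe("crm", crm.append)        # a single CRM instance

for event in ("signup-1", "signup-2", "signup-3"):
    topic.publish(event)
```

The other two models fall out as special cases: putting every subscriber in its own group delivers all events to all subscribers, while putting every subscriber in one group delivers each event to exactly one of them.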

What are the advantages of event streaming?

Decoupling

As an implementation of the pub/sub pattern, event streaming decouples publishers from subscribers. There's no need for publishers and subscribers to know about each other, keep up-to-date membership lists, etc.

Decoupling of events and processing actions. Because events are not "removed from the broker", and because events do not specify their processing actions, multiple subscribers could get the same event and perform different actions, as explained before.

Team independence

Decoupling brings team independence. Different teams can work on subscribers for the same type of events without needing to coordinate. Keeping coordination costs down is extremely valuable as companies grow. Many service-oriented/microservices architectures share an event streaming broker as a communication mechanism instead of having services communicate directly.

Reliability

Event streams are durable. If consumers go down, events are not lost. You might still incur processing delays if you lack consumer (or downstream) capacity.

The best Event Streaming platforms will automatically deal with subscribers' failure and help you implement exactly-once processing semantics, which is fundamental when building reliable systems. Building those capabilities requires a careful architecture of all components, including the message broker, protocol, and publisher and consumer libraries.

Real-time feedback

Continuously applying system state changes (events) to an entity's state allows for real-time experiences. Users don't have to wait minutes or hours to see their operations take place. See the stream vs batch section below for more on this.

Stream vs. batch

Historically, many systems used a technique known as "batch processing", which is still commonly used nowadays due to resource constraints. Batch processing involves picking a time batch window (typically of "low" system activity) to process data of a batch size (typically large). For example, at the end of the day, banks typically processed all transactions from the past 24 hours and updated accounts with the appropriate balance.

As time went by, computers and storage resources became cheaper. Users also started valuing and expecting "real-time" feedback in their applications. Thus, batch processing is no longer the answer for many applications. After all, think about it: isn't it a lot better to see your balance update 1 minute after you've made a transaction instead of after 24 hours?

Running batch processes more often, e.g., every hour instead of every day, would reduce the time it takes for the system to reflect the right balances. If we are looking to provide "real-time" feedback, one could conceptually think of consuming an event stream as making the batch process run whenever there is an event, i.e., the batch process becomes a stream consumer. The batch size becomes 1, and the batch window becomes "all the time".
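To make the "batch size becomes 1" idea concrete, here is a small sketch based on the bank example above. The account names, amounts, and function names are made up for illustration.

```python
def apply_transaction(balances, tx):
    """Apply one transaction (an event) to the account balances in place."""
    balances[tx["account"]] = balances.get(tx["account"], 0) + tx["amount"]


def run_batch(balances, transactions):
    """End-of-day job: process the whole window of transactions at once."""
    for tx in transactions:
        apply_transaction(balances, tx)


transactions = [
    {"account": "alice", "amount": 100},
    {"account": "bob", "amount": 50},
    {"account": "alice", "amount": -30},
]

batch_balances = {}
run_batch(batch_balances, transactions)  # balances change once, after the window

stream_balances = {}
snapshots = []
for tx in transactions:                  # batch size 1, window "all the time"
    apply_transaction(stream_balances, tx)
    snapshots.append(dict(stream_balances))
```

Both approaches arrive at the same final balances. The streaming version simply makes every intermediate state visible as soon as the corresponding transaction occurs.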

In the case mentioned above, each transaction can be thought of as an event, so transactions from the previous 24 hours can be read in order and their operations applied to the original balances. However, there are cases where a batch process has to reconcile or sync two or more data sources.

Imagine a scenario where an offline data warehouse (DWH) used for business analytics needs to be updated based on data from a production database that stores Users. If the production database stores each user as a separate row, and the goal is to have a table in the DWH with a row per user, then the batch process:

Either needs to

only read users that were updated since the last batch run

diff the DWH and DB users to figure out what has changed

and figure out how to handle any errors that occur while a batch is processed, as those users need to be re-synced

Or needs to recreate a whole new table in the DWH by reading all users from scratch and discard the old one.

Both of those approaches are workable and they might do fine for a while, but they are less than practical if the dataset becomes too big, increasing both errors and batch job processing time.
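The two batch strategies can be sketched as follows. The row shape (`id`, `name`, `updated_at`) and both function names are assumptions for the example. Error handling and deleted users, which are the hard parts in practice, are deliberately left out.

```python
def incremental_sync(db_users, dwh_users, last_run):
    """Copy only rows changed since `last_run`; return the new high-water mark."""
    newest = last_run
    for user in db_users:
        if user["updated_at"] > last_run:
            dwh_users[user["id"]] = dict(user)
            newest = max(newest, user["updated_at"])
    return newest


def full_rebuild(db_users):
    """Recreate the warehouse table from scratch by reading every user."""
    return {user["id"]: dict(user) for user in db_users}


db_users = [
    {"id": 1, "name": "Ada", "updated_at": 100},
    {"id": 2, "name": "Grace", "updated_at": 250},
]
dwh_users = {1: {"id": 1, "name": "Ada", "updated_at": 100}}

new_mark = incremental_sync(db_users, dwh_users, last_run=200)
rebuilt = full_rebuild(db_users)
```

The incremental version touches one row here but depends on a trustworthy `updated_at` column, while the rebuild is simpler and reads the entire table on every run.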

Like everything in software, the decision of whether to use batch jobs or event streams is based on tradeoffs. Acceptable processing delay, initial investment, operational cost, complexity, throughput, and other factors need to be considered when deciding which approach to use.

Event streaming platforms

There are a number of event streaming platforms. Apache Kafka is probably one of the better-known ones. It is open source, and you can choose to run it yourself. It is also offered as a service by most cloud providers and many vendors, so it is useful to avoid vendor lock-in from a business perspective.

Cloud providers have their own event streaming platforms and surrounding ecosystems: Amazon Kinesis, Google Cloud Pub/Sub, and Azure Event Hubs. These allow you to dynamically configure (and pay for) capacity, support both push and pull models, and integrate easily with other cloud provider products, e.g., to use serverless functions for event processing.

Ably is an event streaming platform for far-edge event streaming, which we describe in more detail here.

Event stream processing

As its name states, Event Stream Processing involves processing a sequence of events. The key here is operating on the sequence of events and not just one event at a time. Examples of Event Stream Processing are:

Joining two streams of events, transforming a field value, and filtering a subset of the join

Performing an aggregate calculation (max, avg, etc.) over a moving time window

Event Stream Processing platforms are useful as they allow you to work with event streams, transformations, filters, and joins directly. For example, you could write the following short and legible code snippet to count how many Twitter mentions Ably had in a sliding window:

// Count tweets that mention @ablyrealtime over a 5-minute window
TwitterUtils.createStream(...)
  .filter(_.getText.contains("@ablyrealtime"))
  .countByWindow(Minutes(5))

The example above was inspired by a sample from https://spark.apache.org/streaming/

Without Stream Processing technologies, you would have to:

Consume events from the event stream

Store them in a database (or in memory)

Run batches every N minutes to process the last M minutes of events

Publish the results to another event stream
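A rough sketch of what those manual steps look like for the mention-counting example, written in plain Python with no stream processing library. The `SlidingWindowCounter` class is hypothetical, the `results` list stands in for the output event stream, and events are assumed to arrive in timestamp order.

```python
from collections import deque


class SlidingWindowCounter:
    """Counts matching events seen in the last `window_seconds`."""

    def __init__(self, window_seconds, predicate):
        self.window_seconds = window_seconds
        self.predicate = predicate
        self.timestamps = deque()  # in-memory store of matching event times

    def consume(self, event, timestamp):
        """Keep the timestamp of every event that passes the filter."""
        if self.predicate(event):
            self.timestamps.append(timestamp)

    def count(self, now):
        """Evict entries older than the window, then report what is left."""
        while self.timestamps and self.timestamps[0] <= now - self.window_seconds:
            self.timestamps.popleft()
        return len(self.timestamps)


counter = SlidingWindowCounter(300, lambda tweet: "@ablyrealtime" in tweet)
counter.consume("hello @ablyrealtime", timestamp=0)
counter.consume("unrelated tweet", timestamp=10)
counter.consume("thanks @ablyrealtime!", timestamp=200)

results = []  # stands in for the output event stream
results.append(counter.count(now=250))  # both mentions are inside the window
results.append(counter.count(now=400))  # the mention at t=0 has expired
```

Even this toy version has to manage storage, eviction, and scheduling by hand, which is the work a stream processing platform takes over.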

Apache Flink, Apache Spark (Streaming), Apache Beam, and Apache Kafka Streams are some of the open-source platforms you can use for stream processing. Cloud providers offer these capabilities as a service, some of the options being Amazon Kinesis Data Analytics, Google Cloud Dataflow, and Azure Stream Analytics. Here is an analysis of the pros and cons of those and more stream processing platforms.

Ably and event streaming

Ably is an enterprise far-edge event streaming solution.

What does that mean? Let's dissect it:

Far-edge: Unlike solutions like Apache Kafka, Amazon Kinesis, etc., Ably runs outside your network perimeter. By operating on the edge and receiving traffic before your infrastructure does, Ably can handle traffic spikes by scaling its infrastructure and guarantee delivery to edge consumers, so you don't have to deal with it.

Enterprise: enterprises want vendors they can depend on. For an event streaming platform, this means predictable and low latency delivery. If Ably confirms it received a message, it will be delivered, and fast! Ably is a pub/sub platform that is engineered around four pillars of dependability and provides:

Reliability through infrastructure redundant at the regional and global level, and a 99.999% uptime SLA.

Predictable latency even when operating under unreliable network conditions, such as those of a WAN. The round-trip latency from any of its 700+ PoPs globally that receive at least 1% of its global traffic is < 65ms at the 99th percentile.

Integrity for message order and delivery, including exactly-once processing.

Scalability for traffic variations, including 50% global capacity margin for surge and the ability to double capacity every 5 minutes.

Event Streaming, as stated in the introduction, requires order, the ability to read from a point in time, and durability. Ably supports this out of the box. All you have to do is use one of the many Ably Client Library SDKs.

Ably has many integrations that allow you to integrate with third-party services, including queuing technologies, serverless functions, and others. Ably Reactor Firehose seamlessly integrates with some of the best existing Event Streaming providers to get data to them and implement Event Stream Processing.

In essence, Ably enables you to implement event streaming architectures in scenarios where you have millions of devices generating millions of events. To learn more, get in touch with Ably's technical experts.
