Every business, regardless of industry, and every software developer has latency on their radar. Yet it's typically not at the top of the priority list, and generally that's just fine. But in the world of realtime updates, this casual attitude can trip you up.

When you're piecing together a system for realtime updates, low latency jumps from being an afterthought to a critical, front-and-center requirement. Engineers who've wrestled with building realtime infrastructure themselves probably know this conundrum all too well.

Knowing that low latency is important isn’t enough, however. Even when engineers apply performance techniques that have worked in other contexts, those tactics often fall short in a realtime setting.

In a previous blog post, we peeled back the layers on why realtime updates are so crucial and gave a broad-brush picture of what goes into building a realtime update infrastructure in practice. Here, we’re going to dive into latency, a make-or-break metric for delivering a successful realtime experience.

What do we mean by low latency and what are the parameters for realtime updates?

Low latency is well understood as a concept, but it is not always recognized as the foundation on which successful realtime updates are built.

Latency, in short, is the time it takes for data to travel from the backend (such as a data center or public cloud) to the end-user’s device. Reducing latency times has been an important part of software infrastructure since the beginning of the Internet. Faster delivery of data has never been anything but a good thing.

The trouble is that, in the context of realtime updates, the value of a “live” update drops rapidly based on latency alone.

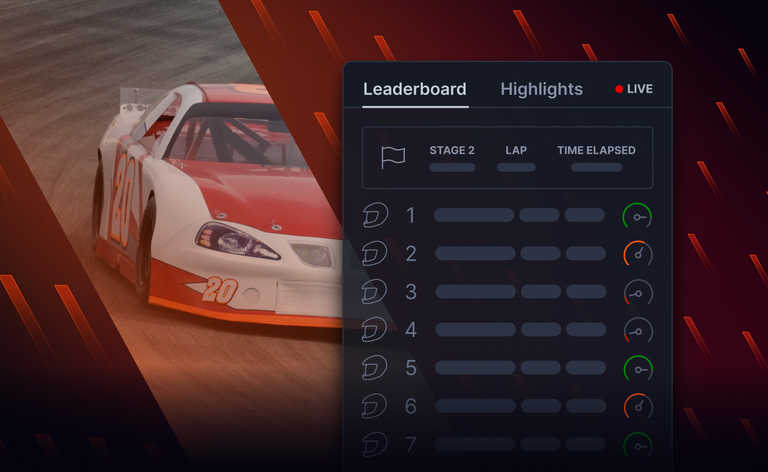

Latencies need to be in the low hundreds of milliseconds for end users to feel that updates are “live” or instantaneous. In almost every other context, a 100ms lag means little, but for companies offering realtime updates, that gap can mean the difference between a happy user and a frustrated one who refreshes the application or quits altogether.

Two reasons why low latency is essential for delivering realtime updates at scale

Latency of 100ms or less is hard to achieve in most contexts, but it is an even bigger challenge for realtime updates because latency not only has to be low; it has to be predictably, reliably low.
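To make “predictably, reliably low” concrete: an average can hide the slow deliveries that users actually notice, which is why latency is commonly judged by tail percentiles such as p95 or p99. The sketch below uses made-up latency samples (hypothetical numbers, not measurements from any real system) and a simple nearest-rank percentile to show how a healthy-looking mean and median can coexist with a tail that misses a 100ms target.

```python
import math


def percentile(samples_ms, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    # Integer product first, so the 1-based rank avoids float rounding surprises.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical end-to-end delivery latencies in milliseconds:
# 95 fast deliveries plus 5 slow outliers (e.g. a congested route).
samples = [40] * 95 + [450] * 5

TARGET_MS = 100  # illustrative target taken from the text above

for pct in (50, 95, 99):
    value = percentile(samples, pct)
    status = "OK" if value <= TARGET_MS else "MISSES TARGET"
    print(f"p{pct}: {value} ms ({status})")

mean = sum(samples) / len(samples)
print(f"mean: {mean:.1f} ms")
```

With these samples, the mean (60.5 ms), p50 and p95 (both 40 ms) all look comfortable, yet one delivery in twenty takes 450 ms, and the p99 value exposes it. Those are exactly the updates that make a “live” feed feel broken.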

And that difficulty points to two major reasons why low latency is so important for delivering realtime updates at scale: The brand promise of realtime and the user’s expectations of scalability.

1. The brand promise of realtime

Many products promise experiences to users that the companies behind them are not entirely sure they can deliver.

When a productivity app claims users will feel “empowered,” for example, that feeling is both hard to measure and hard to deliver. But the app can get away with the claim as long as users feel something akin to empowerment most of the time.

Realtime applications, though, promise a feeling of instantaneousness, and that feeling, despite seeming similarly fuzzy, has clear metrics. As we said above, latency needs to be in the low hundreds of milliseconds.

But as companies aim for that number, they need to recognize that promising a “live” or realtime experience makes a claim users will expect to be fulfilled. There’s very little wiggle room.

Ultimately, even if everything else is working perfectly, a realtime updates system that doesn’t have low enough latency will be perceived by most users as not working well enough to deliver on the promise of being “live.”

2. Expectations of scalability

If your product promises a live experience, users need to be able to log in at any time and from any location and experience latency levels that feel instantaneous.

Even if an event is disproportionately big and draws a huge spike in users (the Super Bowl, for example), users will still expect the experience to feel “live.”

Users rarely appreciate the technical difficulty of what they’re asking for when, for example, they refresh a realtime feed on their mobile phone over a flaky network, but they still have strict expectations of what a realtime experience needs to be.

How to guarantee low latency delivery at scale

The primary decision companies face here is whether to build or buy a realtime update system. That decision needs to be informed by a range of factors, including:

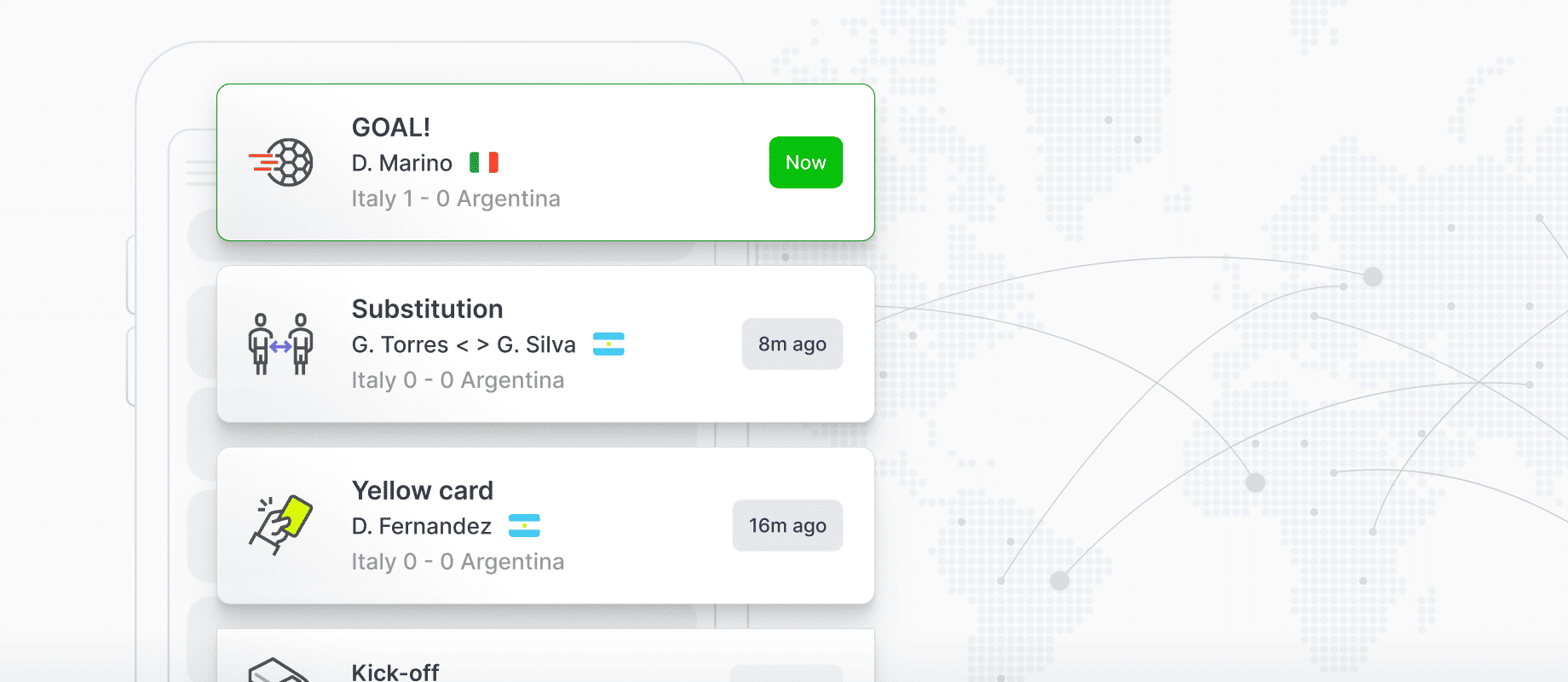

- Ensuring regional and global coverage: Latency needs to be low globally and across the full round trip, which often requires distributed core data centers and edge acceleration points of presence (PoPs).

- Message routing: Messages need to be routed intelligently so that they can be delivered with the fewest possible network hops.

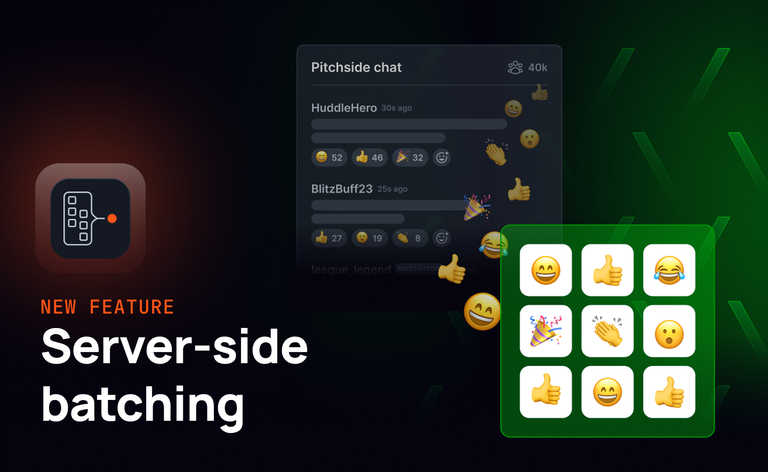

- Message payloads: The size of the messages sent to end users can balloon if, for example, a new score during a soccer game triggers an update that includes everything else that’s happened in the game so far.

- Encoding: Inefficient encoding processes will increase latency by slowing down the system’s ability to translate data into a transmittable format and back again.
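To illustrate the payload point: instead of resending the full match state on every change, a publisher can send only the fields that changed (a delta) and let the client merge it into its local copy. The sketch below is a generic, hypothetical illustration using plain dictionaries and JSON. The field names and helper functions are invented for the example, and it is not how any particular product, Ably included, implements deltas.

```python
import json


def make_delta(old, new):
    """Return only the top-level keys whose values changed or were added.
    Deleted keys are not handled in this minimal sketch."""
    return {k: v for k, v in new.items() if old.get(k) != v}


def apply_delta(state, delta):
    """Merge a delta into the client's copy of the state."""
    merged = dict(state)
    merged.update(delta)
    return merged


# Hypothetical match state: a long event log plus the current score.
before = {
    "match_id": "example-match",
    "score": {"home": 1, "away": 0},
    "minute": 63,
    "events": [f"event {i}" for i in range(60)],
}
# In this update only the score and the clock change; the event log is untouched.
after = dict(before, score={"home": 2, "away": 0}, minute=64)

full_payload = json.dumps(after).encode("utf-8")
delta_payload = json.dumps(make_delta(before, after)).encode("utf-8")

print(f"full state: {len(full_payload)} bytes")
print(f"delta:      {len(delta_payload)} bytes")

# The client rebuilds the same state from its old copy plus the delta.
assert apply_delta(before, json.loads(delta_payload)) == after
```

The trade-off is that the client must already hold a correct copy of the previous state, so a real system also needs a way to resynchronize clients that miss an update.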

For many companies, building a system like this is impractical. Building one from the ground up requires a lot of time and skill, and most companies either can’t afford the investment or would prefer to put it toward other features. The investment also tends to be more costly than anticipated, because ongoing maintenance adds cost and other challenges, such as scalability and data integrity, make it even harder to keep latency low enough.

The Ably team is intimately familiar with all the challenges and edge cases. We believe in the value of realtime updates, but we also believe building a system to support them is too costly and too difficult for most companies. That’s why we’ve done it for you.

Read about how you can use Ably’s APIs and SDKs to broadcast realtime data with predictable low latency or start building today with a free developer account.

This is the second in a series of four blog posts that look at what it takes to deliver realtime updates to end users. In other posts, we look at why data integrity and elasticity are so important when you're trying to deliver realtime updates to end users at scale.