In any chat experience, creating a safe and welcoming environment is just as important as delivering messages in realtime. As online interactions scale, effective moderation becomes essential, not only to protect users but also to maintain trust, foster community, and support healthy growth.

That’s why today, we’re excited to announce Moderation for Ably Chat, a flexible and powerful way to filter inappropriate content and protect your community - with minimal overhead and maximum control.

Whether you’re building realtime chat for a live stream, game, or online community, moderation helps ensure your users have a safe, respectful space to interact. With this release, Ably makes moderation simple to adopt, adaptable to your policies, and scalable to meet the needs of fast-moving apps.

Built for realtime: How moderation works

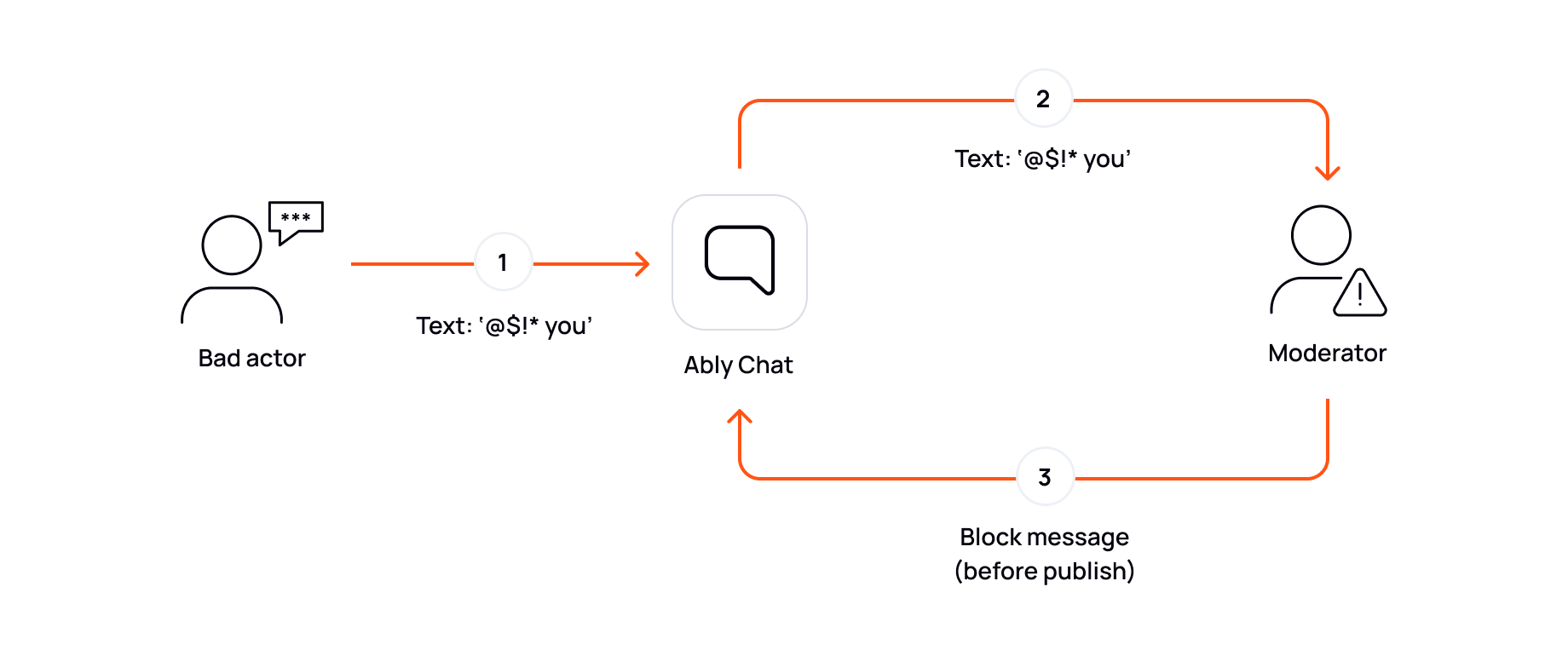

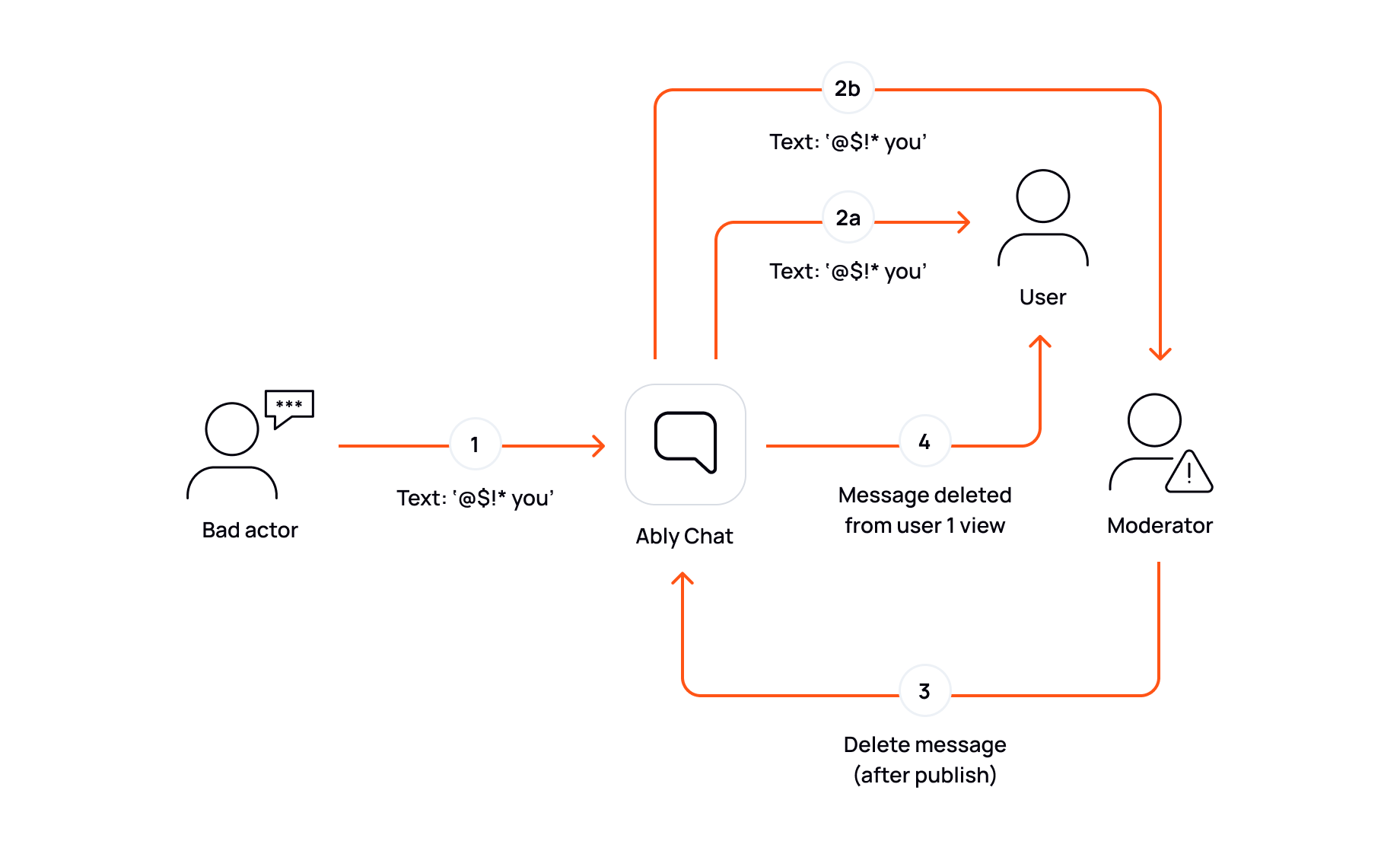

Moderation is available today for Ably Chat and comes in two modes: before-publish and after-publish.

In the before-publish mode, messages are passed through a moderation engine before they are allowed into a room. This ensures that any inappropriate content is filtered before it ever reaches other users. It’s ideal for sensitive use cases such as education or financial services, where content must be strictly controlled.

The after-publish mode allows messages to be delivered immediately, while moderation runs in parallel. If a message is later flagged, it can be edited or deleted using Ably’s edit or delete methods. This approach has near-zero impact on latency and is suited to high-velocity chats where brief visibility of inappropriate content is acceptable.
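To make the difference between the two modes concrete, here is a minimal in-memory sketch. It is a conceptual model only: `SketchRoom`, `send`, and `runPendingModeration` are hypothetical names invented for illustration, not part of the Ably Chat SDK, and the moderation check is a stand-in for a real engine.

```typescript
// Conceptual model only: every name here is hypothetical, not an Ably API.
type Mode = "before-publish" | "after-publish";
type Check = (text: string) => boolean; // true when the text is acceptable

interface ChatMessage {
  id: number;
  text: string;
}

class SketchRoom {
  visible: ChatMessage[] = []; // what other users can currently see
  private pending: ChatMessage[] = []; // delivered but not yet moderated
  private nextId = 1;
  private readonly mode: Mode;
  private readonly isAcceptable: Check;

  constructor(mode: Mode, isAcceptable: Check) {
    this.mode = mode;
    this.isAcceptable = isAcceptable;
  }

  // Returns true if the message was delivered to the room.
  send(text: string): boolean {
    const message: ChatMessage = { id: this.nextId++, text };
    if (this.mode === "before-publish") {
      // Moderate first: a rejected message never becomes visible.
      if (!this.isAcceptable(text)) return false;
      this.visible.push(message);
      return true;
    }
    // after-publish: deliver immediately and queue the message for review.
    this.visible.push(message);
    this.pending.push(message);
    return true;
  }

  // Reviews queued messages and removes flagged ones; returns how many were removed.
  runPendingModeration(): number {
    const flaggedIds = new Set(
      this.pending.filter((m) => !this.isAcceptable(m.text)).map((m) => m.id)
    );
    this.pending = [];
    this.visible = this.visible.filter((m) => !flaggedIds.has(m.id));
    return flaggedIds.size;
  }
}
```

In the after-publish room, a flagged message is briefly present in `visible` until `runPendingModeration` removes it, which is the trade-off described above: lower latency in exchange for short-lived exposure.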

Both modes are managed using integration rules in the Ably dashboard, and you can configure moderation at the chat room level using filters.
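As a rough illustration of room-level filtering, the sketch below assumes a rule's room filter behaves like a regular expression tested against the room name. That behavior, the `ModerationRule` shape, and the rule names are all assumptions made for the example; check the Ably dashboard and docs for the actual filter syntax.

```typescript
// Assumption: a room filter acts like a regular expression matched against
// the room name. Rule names and patterns below are made up for illustration.
interface ModerationRule {
  name: string;
  roomFilter: string;
}

// Returns the names of all rules whose filter matches the given room.
function rulesForRoom(roomName: string, rules: ModerationRule[]): string[] {
  return rules
    .filter((rule) => new RegExp(rule.roomFilter).test(roomName))
    .map((rule) => rule.name);
}

const exampleRules: ModerationRule[] = [
  { name: "strict-before-publish", roomFilter: "^classroom:" },
  { name: "light-after-publish", roomFilter: "^livestream:" },
];

// Only the first rule applies to a room named "classroom:maths-101".
const applied = rulesForRoom("classroom:maths-101", exampleRules);
```

Scoping rules this way lets a single app run strict moderation in some rooms and lighter moderation in others.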

Moderation powered by Hive

To get started quickly, Ably offers a native integration with Hive, a leading moderation provider specializing in AI-based content review. You can choose from two Hive-based options:

Hive model-only (before-publish)

This option uses Hive’s moderation model to score messages in realtime, flagging potentially harmful content such as hate speech, abuse, or explicit language. You configure which scores are acceptable for each moderation category, and Ably will accept or reject messages based on your thresholds.
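The threshold check can be pictured roughly as follows. This is a sketch under stated assumptions: the category names, the numeric severity scale, and the `decide` function are illustrative and do not describe Hive's response format or Ably's internal logic.

```typescript
// Illustrative only: category names and the severity scale are assumptions.
type Scores = Record<string, number>;

interface Decision {
  action: "accept" | "reject";
  violations: string[]; // categories whose score exceeded the configured limit
}

// Rejects a message when any category scores above its configured maximum.
// A category missing from the scores is treated as 0 (nothing detected).
function decide(scores: Scores, maxAllowed: Scores): Decision {
  const violations = Object.entries(maxAllowed)
    .filter(([category, limit]) => (scores[category] ?? 0) > limit)
    .map(([category]) => category);
  return { action: violations.length === 0 ? "accept" : "reject", violations };
}

// Hypothetical policy: zero tolerance for hate speech, mild tolerance elsewhere.
const thresholds: Scores = { hate: 0, sexual: 1, bullying: 1 };
const result = decide({ hate: 0, sexual: 0, bullying: 2 }, thresholds);
// result.action is "reject" and result.violations is ["bullying"]
```

Keeping a separate limit per category lets you be strict where your policy demands it without rejecting borderline content everywhere.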

All moderation happens before messages are published. The setup is fast - just add your Hive API key, define your thresholds, and choose your fallback behavior (e.g., reject or allow messages if we’re unable to reach Hive). This flow gives you high confidence that offensive content never reaches your users.

Hive Dashboard (after-publish)

Hive also offers a full moderation dashboard, combining AI and human review. With this setup, messages are sent to Hive after they’re published, where they appear grouped by room ID and can be reviewed by moderators. Human moderators can delete or edit messages directly, using custom post actions configured to call Ably’s moderation API.

Setup is simple: you create a Hive account, generate an API key, and configure a moderation rule in the Ably dashboard. You’ll also get $50+ in free credits after adding a payment method to your Hive account. Moderators can take action using Hive’s UI, and Ably automatically applies those changes in your chat room.

For full instructions, including how to configure the callback action, see Hive Dashboard integration setup.

Bring your own moderation engine with AWS Lambda

For teams that want full control - or need to integrate with a moderation provider not natively supported - we’ve built support for custom moderation using AWS Lambda.

This rule allows you to run your own moderation logic before a message is published, using an Ably-defined API contract. Your Lambda function receives message data in a standard format, and responds with either an accept or reject decision. If rejected, the message will never be delivered to the room.
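A custom moderation function might look something like the sketch below. The request and response shapes (`ModerationRequest`, `ModerationResponse`, and their fields) are hypothetical placeholders rather than the Ably-defined contract, which is specified in the Lambda integration documentation. The blocked-term list is a deliberately simple stand-in for real moderation logic.

```typescript
// Hypothetical shapes: the real request/response contract is defined by Ably.
interface ModerationRequest {
  roomId: string;
  message: { clientId: string; text: string };
}

interface ModerationResponse {
  action: "accept" | "reject";
  reason?: string;
}

// Stand-in for your own policy, such as a domain-specific term list.
const BLOCKED_TERMS = ["badword", "scam-link.example"];

// Pure decision function, kept separate so it can be unit tested on its own.
function moderate(request: ModerationRequest): ModerationResponse {
  const text = request.message.text.toLowerCase();
  const hit = BLOCKED_TERMS.find((term) => text.includes(term));
  return hit === undefined
    ? { action: "accept" }
    : { action: "reject", reason: `blocked term: ${hit}` };
}

// Lambda entry point. In a real deployment this would be exported as `handler`.
const handler = async (event: ModerationRequest): Promise<ModerationResponse> =>
  moderate(event);
```

Separating the decision logic from the handler keeps the policy easy to test locally before wiring it into a moderation rule.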

You can configure retry behavior, timeouts, and error handling, and even choose whether to allow messages through when moderation fails. This flexibility makes the AWS Lambda rule a powerful option for customers with strict compliance needs or domain-specific rules.
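The interplay between retries and the failure policy can be sketched like this. It is a simplified, synchronous model with hypothetical names (`FailurePolicy`, `moderateWithRetries`), not Ably's configuration schema. A real moderation call would be asynchronous and bounded by a timeout, which would surface here as a thrown error.

```typescript
// Hypothetical names throughout; this is not Ably's configuration schema.
type Verdict = "accept" | "reject";

interface FailurePolicy {
  maxAttempts: number; // total tries before giving up
  onFailure: "allow" | "reject"; // what to do when every attempt fails
}

// Calls the moderator up to maxAttempts times, then applies the failure policy.
function moderateWithRetries(
  callModerator: () => Verdict,
  policy: FailurePolicy
): Verdict {
  for (let attempt = 1; attempt <= policy.maxAttempts; attempt++) {
    try {
      // A successful call returns the moderator's own decision unchanged.
      return callModerator();
    } catch {
      // Timeout or transport error: fall through and try again.
    }
  }
  // Retry budget exhausted: fail open ("allow") or fail closed ("reject").
  return policy.onFailure === "allow" ? "accept" : "reject";
}
```

Failing closed suits strict compliance needs, since nothing unmoderated gets through. Failing open favors availability when a moderation outage should not silence the chat.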

For full details, see the Lambda integration documentation.

Designed for control and flexibility

Moderation is fully integrated into Ably’s realtime pipeline and dashboard. You can:

- Apply moderation rules to specific rooms using filters

- Control retry and fallback policies for moderation failures

- Track moderation errors through the Ably log meta channel

- Combine automation with human review for nuanced control

- Use mutable messages for after-the-fact edits or deletions

All moderation actions - including edits and deletions - are billed as standard Ably messages and appear in your usage stats. You can retrieve the latest message state through history APIs, thanks to Ably’s support for mutable messages.

Try moderation today

Moderation is now available in Ably Chat. Whether you need strict controls, light-touch filtering, or custom workflows, you can keep your rooms safer - without slowing your app down.

To get started:

- Sign up for Ably Chat

- Enable moderation via the Ably dashboard or API

- Use Hive or bring your own moderation logic via AWS Lambda

- Explore thresholds, retries, and fallback options

- Combine with Ably’s mutable messages for post-publish moderation

Not using Ably Chat yet? Sign up today or explore our Moderation docs to build a safer, smarter chat experience.