Updated Friday 27th September 2019

The Ably team lives and breathes realtime messaging. Our mantra has always been to solve the tough problems so developers don’t need to. This approach has allowed us to solve not only the obvious problems but also the difficult edge cases others have shied away from.

In this article I describe how Ably's engineering team overcame a key challenge associated with realtime messaging: connection state and stream continuity.

Connection state and stream continuity

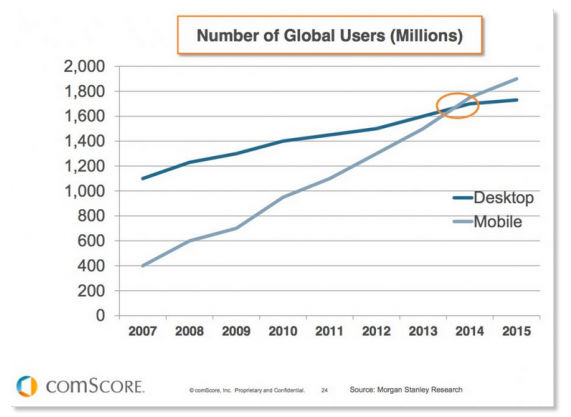

Significant and ongoing improvements in the bandwidth and quality of network infrastructure have led to the illusion that an internet connection is universally and continuously available. However, mobile devices have displaced the desktop as the principal consumption device. This means we no longer have a reliable, consistent transport to work with. Mobile devices are on the move: they change networks frequently from Wi-Fi to 3G to 4G to 5G, they become disconnected for periods of time as they move around, and particular transports such as WebSockets may suddenly become unavailable as a user joins a corporate network.

With the proliferation of mobile, a realtime messaging system that allows messages to be lost whilst disconnected is simply not good enough. A rigorous and robust approach to handling changing connectivity state is now a necessity if you want to rely on realtime messaging in your apps.

The challenge: pub/sub is loosely coupled

Most realtime messaging services implement a publish/subscribe pattern which, by design, decouples publishers from subscribers. This is a good thing: it allows a publisher to broadcast data to any number of subscribers listening for data on that channel.

However, this decoupling is slightly at odds with the need for subscribers to have continuity of messages throughout brief periods of disconnection. If you want guaranteed message delivery to all subscribers, then you typically need to remove that decoupling and know, at the time of publishing, who the subscribers are so that you can confirm which of them have received the message.

Yet if you take this approach, you lose most of the benefits of the decoupled pub/sub model. Namely, the publisher becomes responsible for keeping track of message deliverability and, most importantly, is blocked (or at least must retain state) until all subscribers have confirmed receipt.

Where does connection state matter?

Chat functionality is now a common requirement and a good example of where connection state is essential. While seemingly simple to build, many realtime messaging platforms with a pub/sub model simply don't work reliably enough for chat. See the example in the diagram below:

Without connection state, Kat, whilst moving through a tunnel, is disconnected from the service until she comes out the other end. In the intervening time, all messages published are ephemeral and, from Kat's perspective, no longer exist. The problem with this is twofold:

- Kat loses any messages published whilst disconnected

- Worse, Kat has no way of knowing that she missed any messages whilst disconnected

A better way: connection state stored in the realtime service

At Ably we've approached the problem of ensuring messages are delivered to temporarily disconnected clients in a different way. Publishers, or components of the infrastructure representing the publisher, aren't required to know which clients have or have not received messages. Instead we retain the state of each client that is, or was recently, connected to Ably's frontend servers.

Because the frontend servers retain connection state when clients are disconnected, the server effectively continues to receive and store messages on behalf of the disconnected client as if it were still connected. When the client does eventually reconnect (by presenting the private connection key it was issued the first time it connected), our system is able to replay what happened whilst it was disconnected.
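To make the idea concrete, here is a deliberately naive sketch of a frontend that retains state for disconnected clients. It is not Ably's implementation: the `Frontend` class, its method names, and the in-memory buffering are all assumptions made for illustration, and it ignores expiry of old connection state entirely.

```python
import secrets
from collections import defaultdict


class Frontend:
    """Hypothetical frontend that keeps per-connection state across disconnections."""

    def __init__(self):
        self.connected = {}               # connection key -> currently online?
        self.pending = defaultdict(list)  # connection key -> messages missed whilst offline

    def connect(self):
        key = secrets.token_hex(8)        # private connection key issued on first connect
        self.connected[key] = True
        return key

    def disconnect(self, key):
        self.connected[key] = False       # state is retained rather than discarded

    def publish(self, message):
        """Deliver to online clients; hold the message for disconnected ones."""
        delivered = []
        for key, online in self.connected.items():
            if online:
                delivered.append((key, message))
            else:
                self.pending[key].append(message)
        return delivered

    def resume(self, key):
        """Reconnect with a previously issued key and replay what was missed."""
        if key not in self.connected:
            raise KeyError("unknown connection key")
        self.connected[key] = True
        return self.pending.pop(key, [])
```

A client that disconnects, misses two messages, and then calls `resume` with its key gets both messages back in publish order. Note that the publisher never learns who was or was not connected.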

A simple group chat app with a realtime service like Ably that provides connection state looks as follows:

Why is it hard to maintain connection state for disconnected clients?

Much like all the problems we've solved, the challenges are overwhelmingly more complicated when you have to think about the problem at scale, without any disruption, and with guarantees about continuity of service.

Resilient storage

Data is susceptible to loss if connection state is stored only in volatile memory on the server that the client originally connected to. For example, during scale-down events, deployments, or recycling, the server process will be terminated. If the data exists only in memory, these routine day-to-day operations will result in data loss for clients.

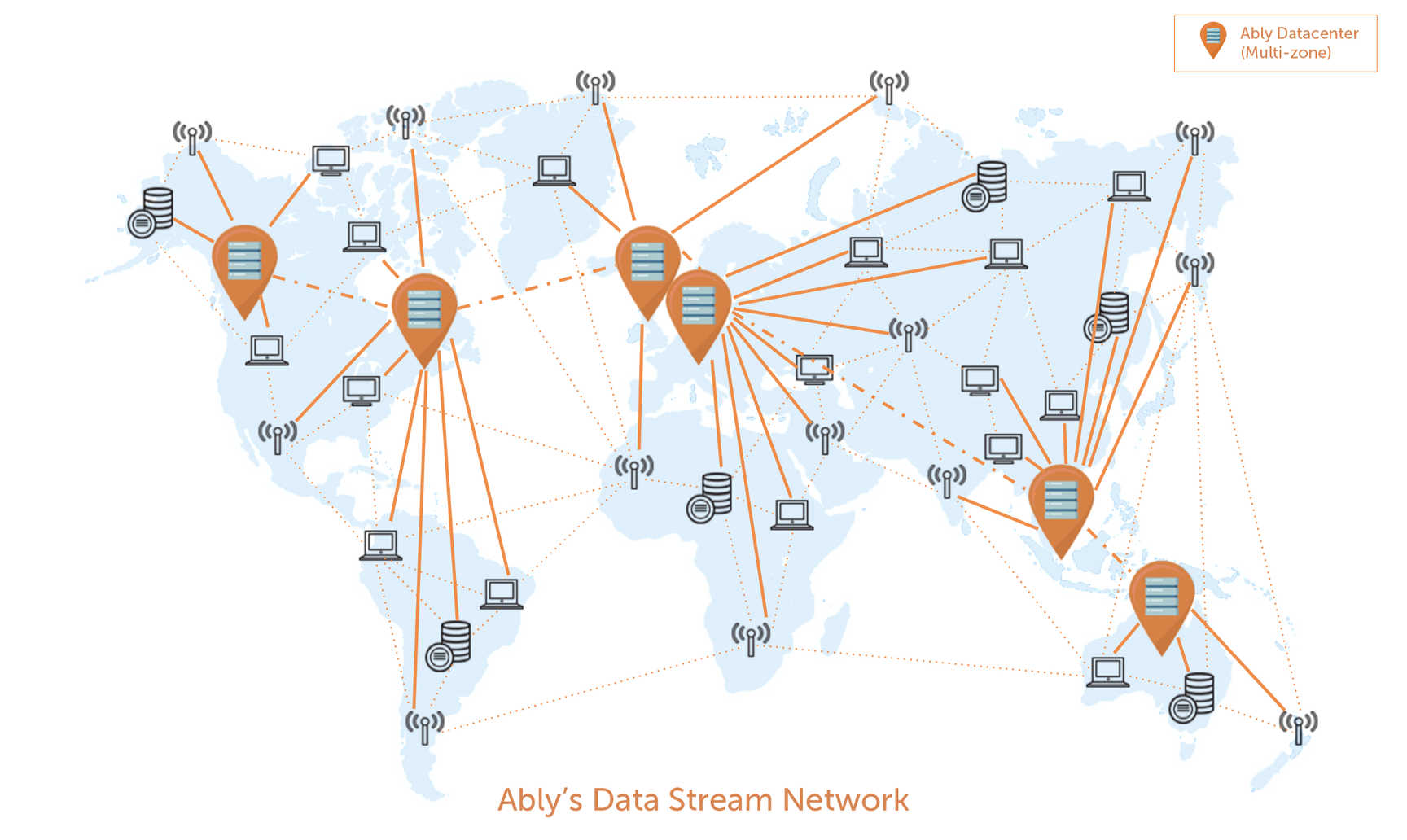

We’ve solved this at Ably by storing all connection states in more than one location across at least two data centres.

Connection state migration

If a client connects to one of your frontend servers, the connection state will persist on the server they connected to. However, following an abrupt disconnection, a subsequently established connection could land on a different server and attempt to resume connection state that is stored elsewhere. A simple way to solve this is to use a shared key/value data store such as Redis. However, this is not an entirely robust solution. Redis may be recycled, overloaded, or unresponsive, yet service continuity must remain unaffected.

We’ve solved this at Ably in a number of ways:

- First, we have a routing layer that monitors the cluster state via gossip and routes connections being resumed or recovered to the frontend server that holds the state, if available.

- Second, if the frontend server or underlying cache for that connection has gone away in the intervening time, we're able to rebuild the state from the secondary fail-over cache on a new frontend server. When a new server rebuilds the state, it will also persist the state in a secondary location to ensure a subsequent fail-over will succeed.

- Finally, if the router is unable to determine which frontend holds the connection state, any frontend can effectively take over the connection state, notifying the old frontend to discard its copy.
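The three cases above can be sketched as a single routing decision. This is a simplified model under stated assumptions, not Ably's router: the `Cluster` structure, the `route_resume` function, and the action labels are hypothetical, and gossip is reduced to a set of frontends believed to be alive.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    live: set                                      # frontends believed alive (e.g. learned via gossip)
    primary: dict = field(default_factory=dict)    # connection id -> frontend holding the state
    secondary: dict = field(default_factory=dict)  # connection id -> fail-over cache location


def route_resume(cluster, conn_id, candidate):
    """Decide where a resuming connection should go.

    `candidate` is the frontend that would otherwise serve the request.
    Returns (frontend, action).
    """
    owner = cluster.primary.get(conn_id)
    if owner is None:
        # Router cannot tell who holds the state: the candidate takes over
        # and the previous holder, if any, would be told to discard its copy.
        cluster.primary[conn_id] = candidate
        return candidate, "take-over"
    if owner in cluster.live:
        # The frontend holding the state is still available.
        return owner, "route-to-owner"
    if conn_id in cluster.secondary:
        # Owner has gone away: rebuild on the candidate from the fail-over cache.
        cluster.primary[conn_id] = candidate
        return candidate, "rebuild-from-secondary"
    # No surviving copy of the state; the client cannot resume.
    return candidate, "state-lost"
```

In this model, a connection whose owner is alive is always routed back to it, and the candidate frontend only becomes the new owner when the original has gone away or cannot be identified.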

Fallback transports

Some clients cannot use WebSockets and will need to rely on fallback transports such as Comet. Comet will quite likely result in frequent HTTP requests being made to different frontend servers. It's impractical to keep migrating the connection state for each request because Comet, by its very nature, generates frequent new requests. It is imperative that a routing layer exists to route all Comet requests to the frontend that is retaining the active connection state, so that it can wrap all Comet requests and treat them as a single connection.

We’ve solved this at Ably by building our own routing layer that is aware of the cluster, can determine where a connection currently resides, and can route all Comet requests to the server that is currently maintaining the connection state for that client.
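As a rough illustration of why the routing layer matters for Comet, the sketch below pins every request that carries the same connection key to one frontend. The `Router` class, the round-robin placement of new connections, and the frontend names are assumptions for the example; a real routing layer would also have to track cluster membership.

```python
class Router:
    """Hypothetical sticky router: one connection key always maps to one frontend."""

    def __init__(self, frontends):
        self.frontends = list(frontends)
        self.owner = {}   # connection key -> frontend holding that connection's state
        self._next = 0    # round-robin position for placing new connections

    def route(self, connection_key=None):
        # A request for a known connection goes to the frontend that owns it.
        if connection_key in self.owner:
            return self.owner[connection_key]
        # Otherwise place the connection on the next frontend in rotation.
        frontend = self.frontends[self._next % len(self.frontends)]
        self._next += 1
        if connection_key is not None:
            self.owner[connection_key] = frontend
        return frontend


router = Router(["fe1", "fe2", "fe3"])
first = router.route("kat-key")
# Every subsequent Comet request for the same key lands on the same frontend.
repeats = [router.route("kat-key") for _ in range(5)]
```

Without the `owner` lookup, each of those five requests could land on a different frontend and the connection state would have to migrate every time.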

Store cursors, not data

If the process that is spawned to retain connection state simply keeps a copy of all data published whilst the client is disconnected, this very soon becomes impractical. For example, assume a single frontend has 10,000 clients connected and each client is receiving 5 messages per second of 10 KiB each. In just 2 minutes, the frontend would need to store 57 GiB of data. Added to this, if a client reconnects and has no concept of a serial number or cursor position, the frontend can never be sure which messages the client did or did not receive, so it could inadvertently send duplicate messages.
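The storage figure follows directly from the numbers in the example, taking each message to be 10 × 1024 bytes:

```python
clients = 10_000
messages_per_second = 5        # per client
message_size_bytes = 10 * 1024
seconds = 2 * 60

# Every client's messages are copied and held for the full two minutes.
total_bytes = clients * messages_per_second * message_size_bytes * seconds
total_gib = total_bytes / 1024**3

print(f"{total_gib:.1f} GiB")  # prints "57.2 GiB"
```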

We’ve solved this at Ably by providing deterministic message ordering, which in turn allows us to assign a serially-numbered id for each message. As such, instead of keeping a copy of all messages published for every subscriber, we simply keep track of a serial number for each channel for each disconnected client. When the client then reconnects, it announces what the last serial was that it received before it was disconnected, and the frontends can then use that serial to determine exactly which messages need to be replayed without having to store them locally.
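A minimal sketch of cursor-based replay, assuming each channel keeps one ordered log that all subscribers share. The `Channel` class and its methods are hypothetical names for illustration and say nothing about how Ably actually stores channel history.

```python
class Channel:
    """Hypothetical channel with a single ordered log shared by all subscribers."""

    def __init__(self):
        self.log = []  # message at index i has serial i + 1

    def publish(self, data):
        self.log.append(data)
        return len(self.log)  # serial assigned to this message

    def replay_after(self, last_serial):
        """Return (serial, message) pairs the client has not yet seen."""
        return list(enumerate(self.log[last_serial:], start=last_serial + 1))


chat = Channel()
for text in ["a", "b", "c", "d", "e"]:
    chat.publish(text)

# The client reconnects and announces that the last serial it received was 2.
missed = chat.replay_after(2)  # [(3, "c"), (4, "d"), (5, "e")]
```

The only per-client state here is one integer per channel, and because the client states exactly where it left off, nothing is replayed twice.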

In summary

After three and a half years in development, we learnt the hard way that the devil is in the detail when it comes to dependable, reliable realtime messaging at scale.

If you're considering building your own realtime messaging service or another realtime platform, a simple reason to choose Ably instead is that we invested three years and over 50,000 hours solving these problems before even launching to the public. All so developers don't need to waste time finding and solving the same problems we've already overcome, and that we spend all day thinking about. In the years since, we've developed our Realtime Messaging fabric and global cloud network so much that Ably is now considered the serious provider for companies with demanding realtime needs.

If you have any questions, feedback or corrections for this article, please do get in touch.