Start a conversation on your laptop, finish it on your phone. The context just follows you. That’s what cross-device AI sync delivers. No reloading history, no reintroducing yourself, just one continuous thread across every screen. It builds trust, reduces friction, and makes the assistant feel like a single, persistent presence. This post unpacks why users expect it, what makes it technically tricky, and what your system needs to get it right.

AI conversations must survive the device switch

Modern users have grown to expect realtime, seamless experiences from their apps and AI tools. They want instantaneous responses, continuous interactions, and no interruptions as they move between devices. This expectation extends to AI-powered experiences: if you start a conversation with an AI assistant on your laptop, you should be able to pick it up on your phone or in another tab without missing a beat. Equally, if you have started a long-running asynchronous task, you want to be notified once it’s completed, no matter which device you’re using at the time.

What users want, and why this enhances the experience

Users want continuous, identity-aware AI conversations that follow them across devices. In practice, this means an AI chat session linked to their identity rather than a single tab or device. The conversation should feel like a single thread they can return to at any time.

That continuity builds trust. The AI isn’t “forgetting” just because you switched devices. A remembered history signals reliability and intent, helping users feel the assistant is genuinely useful. Multi-turn conversations flow naturally, and users avoid repeating themselves or reconstructing context.

This matters even more once AI systems move beyond simple chat. When an LLM is running long-lived, asynchronous work such as multi-step research, tool calls, or background analysis, users expect to see progress and results wherever they happen to be at the time. You might start a task on your desktop, step away while the model works, and then pick up your tablet to see the output appear as soon as it’s ready.

Real-world usage makes multi-device continuity unavoidable. Every switch between devices must be frictionless and reinforce the sense that the AI is persistent, reliable, and working on your behalf rather than being tied to a fragile client session.

Why this is proving challenging

HTTP is fundamentally stateless. Each request stands alone, meaning conversation context has to be manually preserved and restored for both the client and the server. This makes cross-device AI session continuity complex.

Having clients poll for updates is inefficient and adds latency. Long-polling and server-sent events help, but only partially: server-sent events flow one way, from server to client, and long-polling has to reopen a request for each update. Neither provides the simultaneous bi-directional, low-latency messaging that smooth AI conversations require.

Handling reconnections, preserving message order, and managing updates across multiple active clients requires considerable infrastructure. Doing this reliably, at scale, across networks and devices, is beyond what the typical product team can or should build from scratch.

Why you need a drop-in AI transport

Given the challenges described, building a robust system for realtime synchronisation from scratch can significantly drain engineering resources and slow product velocity. This is where a drop-in AI transport layer becomes essential.

Persistent, bi-directional messaging

To support conversations that stay in sync across devices, such a layer must offer persistent, bi-directional messaging using protocols like WebSockets for AI streaming. This allows for continuous, low-latency communication in both directions, enabling the AI to push updates and the client to send input without waiting for discrete request/response cycles.

Identity-aware fan-out

Equally important is identity-aware fan-out. The transport system needs to recognize all active sessions associated with a single user and ensure that every message or state update is sent to all of those endpoints. That means when a user sends a message on one device, every other device they’re signed in on should immediately reflect the change.

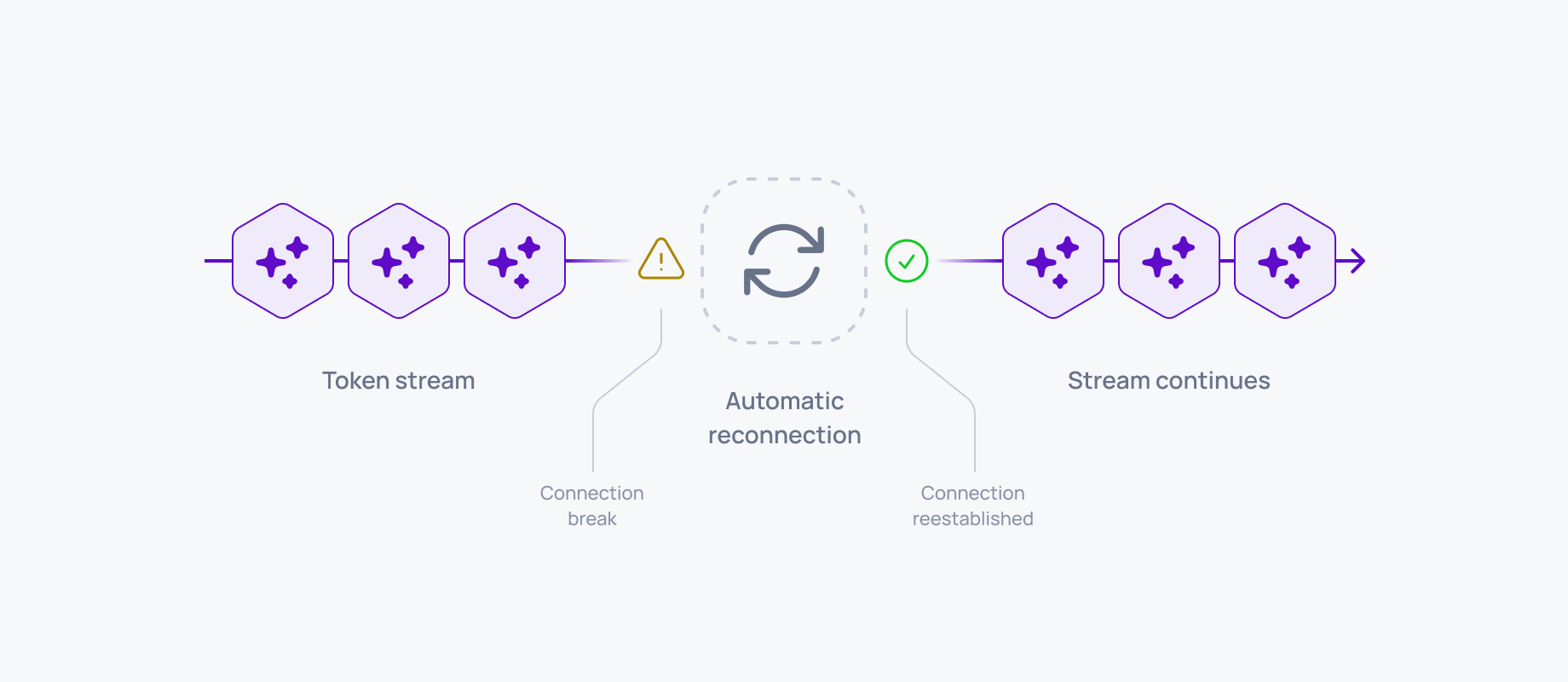
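To make identity-aware fan-out concrete, here is a minimal in-memory sketch. It assumes each connection is represented by a simple delivery callback; `FanOutHub`, its method names, and the `user-42` identifier are hypothetical names chosen for illustration and are not part of any real transport API. A production system would sit on top of real sockets and authentication.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict

# Hypothetical per-connection send callback (stands in for a real socket).
Deliver = Callable[[dict], None]


class FanOutHub:
    """Routes each message to every connection registered under a user ID."""

    def __init__(self) -> None:
        # user_id -> {connection_id -> deliver callback}
        self._connections: DefaultDict[str, Dict[str, Deliver]] = defaultdict(dict)

    def connect(self, user_id: str, connection_id: str, deliver: Deliver) -> None:
        self._connections[user_id][connection_id] = deliver

    def disconnect(self, user_id: str, connection_id: str) -> None:
        self._connections[user_id].pop(connection_id, None)

    def publish(self, user_id: str, message: dict) -> int:
        """Send the message to all of the user's connections.

        Returns the number of connections that received it.
        """
        targets = list(self._connections[user_id].values())
        for deliver in targets:
            deliver(message)
        return len(targets)


# Two devices signed in as the same user both receive the message.
hub = FanOutHub()
laptop_inbox: list = []
phone_inbox: list = []
hub.connect("user-42", "laptop", laptop_inbox.append)
hub.connect("user-42", "phone", phone_inbox.append)
delivered = hub.publish("user-42", {"role": "user", "text": "Summarise my notes"})
```

The key design choice is that messages are addressed to the user's identity, not to a connection, so a device that joins later only needs to register under the same user ID.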

Ordering and session recovery

The system also needs to preserve message ordering and support reliable session recovery. If the connection drops momentarily, say from a device switch or network disruption, the user shouldn’t lose messages or see them out of order. A well-designed transport layer offers mechanisms to replay missed events and keep message sequences intact, ensuring consistency in the conversation.
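One common way to get both ordering and recovery is to give every message a monotonically increasing serial number and let a reconnecting client ask for everything after the last serial it saw. The sketch below assumes a single in-memory log per conversation; `ConversationLog` and `replay_after` are illustrative names, not a real library's API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ConversationLog:
    """Append-only log that assigns each message an increasing serial."""

    messages: List[dict] = field(default_factory=list)

    def append(self, text: str) -> int:
        serial = len(self.messages) + 1
        self.messages.append({"serial": serial, "text": text})
        return serial

    def replay_after(self, last_seen_serial: int) -> List[dict]:
        """Return, in order, every message the client has not yet seen."""
        return [m for m in self.messages if m["serial"] > last_seen_serial]


log = ConversationLog()
for text in ["Hi", "Hello! How can I help?", "Plan my trip", "Sure, where to?"]:
    log.append(text)

# The phone saw serials 1 and 2 before its connection dropped,
# so on reconnect it resumes from serial 2.
missed = log.replay_after(last_seen_serial=2)
```

Because the client only has to remember one number, the same mechanism covers a brief network drop and a full switch to another device.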

Presence tracking

Presence tracking lets the backend know which devices are currently online and active. It helps coordinate updates, prevents redundant notifications, and can power features such as realtime typing indicators or collaborative editing across devices.
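As a rough illustration of how the backend might decide which devices are online, the sketch below uses heartbeats with a timeout. The `PresenceRegistry` class, the 30-second timeout, and the explicit `now` parameter (included so the example is deterministic) are all assumptions made for this example, not a description of any particular product.

```python
import time
from typing import Dict, List, Optional, Tuple


class PresenceRegistry:
    """Tracks which devices are online using heartbeats with a timeout."""

    def __init__(self, timeout_seconds: float = 30.0) -> None:
        self._timeout = timeout_seconds
        # (user_id, device_id) -> time of the most recent heartbeat
        self._last_seen: Dict[Tuple[str, str], float] = {}

    def heartbeat(self, user_id: str, device_id: str,
                  now: Optional[float] = None) -> None:
        stamp = time.monotonic() if now is None else now
        self._last_seen[(user_id, device_id)] = stamp

    def online_devices(self, user_id: str,
                       now: Optional[float] = None) -> List[str]:
        """Return the user's devices whose last heartbeat is within the timeout."""
        current = time.monotonic() if now is None else now
        return sorted(
            device
            for (uid, device), seen in self._last_seen.items()
            if uid == user_id and current - seen <= self._timeout
        )


registry = PresenceRegistry(timeout_seconds=30)
registry.heartbeat("user-42", "laptop", now=0.0)
registry.heartbeat("user-42", "phone", now=25.0)

# At t=40 the laptop's heartbeat has expired; the phone's has not.
online_at_40 = registry.online_devices("user-42", now=40.0)

# Fall back to a push notification only when no device is online.
should_push = not registry.online_devices("user-42", now=100.0)
```

With this information the backend can, for example, skip a push notification when the user is already looking at the conversation on another screen.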

Streaming support

To maintain a high-quality conversational UX, the transport layer must support LLM token streaming. This includes delivering partial, realtime updates from the AI model as it generates responses. That stream must arrive quickly, in order, and appear simultaneously on any active device.
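On the receiving side, each device has to turn a stream of chunks into the same text, even if chunks arrive duplicated or out of order. The sketch below assumes every chunk carries a zero-based index; `StreamAssembler` is a hypothetical helper written for this example, and real transports may already guarantee ordering and make this step unnecessary.

```python
from typing import Dict, List


class StreamAssembler:
    """Rebuilds a streamed response from indexed token chunks."""

    def __init__(self) -> None:
        self._chunks: Dict[int, str] = {}

    def receive(self, index: int, token: str) -> None:
        # Keep the first copy of each index so duplicates are ignored.
        self._chunks.setdefault(index, token)

    def text(self) -> str:
        """Return the contiguous prefix received so far, starting at index 0."""
        parts: List[str] = []
        index = 0
        while index in self._chunks:
            parts.append(self._chunks[index])
            index += 1
        return "".join(parts)


tokens = ["Cross", "-device", " sync", " works"]
laptop, phone = StreamAssembler(), StreamAssembler()

# The laptop receives the stream in order.
for i, token in enumerate(tokens):
    laptop.receive(i, token)

# The phone receives chunks out of order, with one duplicate and one gap.
for i in [1, 0, 0, 3]:
    phone.receive(i, tokens[i])
partial = phone.text()  # index 2 is missing, so index 3 is held back

# Once the missing chunk arrives, both devices show the same text.
phone.receive(2, tokens[2])
```

Rendering only the contiguous prefix means a device never shows text with a hole in the middle, at the cost of briefly holding back chunks that arrive early.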

Ably’s infrastructure supports all of these capabilities as part of its realtime platform. It eliminates the need to custom-build low-level transport solutions, allowing engineering teams to focus on building intelligent, agentic features instead of protocol logic.

How the desired experience maps to the transport layer

The table below breaks down what users expect from cross-device AI conversations, what your transport layer must support to deliver those experiences, and the technical mechanics that make it all work.

Cross-device AI? You can ship it now.

Seamless cross-device conversations aren’t futuristic; they’re achievable today. You don’t need to rebuild your stack to make it happen.

Ably AI Transport provides all you need to support persistent, identity-aware, streaming AI experiences across multiple clients. If you’re working on Gen‑2 AI products and want to get this right, we’d love to talk.