When was the last time you used a chat service and noticed messages arriving out of order? Probably never, because consistent message ordering is one of the core expectations users have when chatting in real time.

Sounds simple. But scale this to millions of users across a global network, and message ordering quickly turns into a complex distributed computing problem. Even at smaller scales, ordering isn’t guaranteed once you move beyond a direct connection between two users. As soon as you have more than a handful of clients and servers, preserving order becomes a serious engineering challenge.

That’s why we built Ably Chat, our dedicated chat SDK. It’s designed to handle challenges like message ordering, delivery guarantees, and scale, so you can focus on building the chat experience, not the infrastructure.

In this article, we’ll explore why message ordering is harder than it seems, how scale complicates the problem, and practical ways to solve it, whether you’re building your own platform or want to rely on a proven solution like Ably Chat.

Why message ordering is a challenge

Why is it so hard to keep chat messages in order? There are two main issues:

- Coordinating multiple chat users: Direct chats between two people are relatively easy because there’s not much to go wrong. But once you connect multiple people through a backend, keeping messages in the right order becomes a lot more challenging.

- Scale and complexity: The more users, traffic, and backend servers you have, the more opportunities there are for messages to arrive out of order.

So, let’s start by looking at three basic chat architectures and then what happens when your chat application scales.

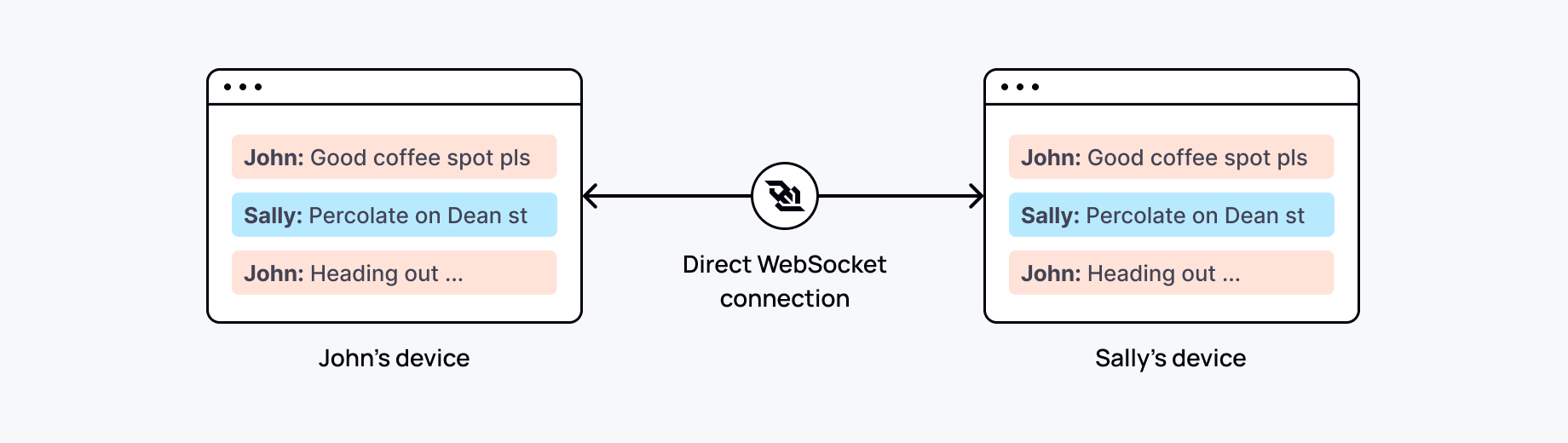

Direct chat between two people

Think of a simple chat box on a website. Both sides of the conversation connect directly to one another, perhaps using a WebSocket connection.

What could prevent the chat messages arriving in the right order?

- Simultaneous messages: If both users send messages at the same time, network delays may cause one message to arrive before the other, even if it was sent slightly later.

- Network interruptions: A temporary connection drop can lead to one user’s messages being resent, causing them to arrive out of sequence.

- Retries and timeouts: If a message doesn’t go through on the first try, it may be retried and delivered out of order or multiple times.

In each of these scenarios, the solution is relatively simple. By adding timestamps or sequence numbers to each message—and assuming that two people sending a message at the exact same microsecond is rare—the chat windows can establish a consistent order without needing a backend.
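To make that concrete, here is a minimal TypeScript sketch of how each chat window could order messages without a backend. It assumes every message carries the sender’s local timestamp, a unique sender ID, and a per-sender sequence number (the field names are hypothetical), and that no sender’s clock runs backwards. Ties on timestamp are broken by sender ID so both windows settle on the same order, and the sequence number doubles as a way to drop retried duplicates.

```typescript
// Sketch: ordering messages in a direct two-person chat, with no backend.
// Assumption: each message carries these (hypothetical) fields.
interface ChatMessage {
  senderId: string;  // unique per participant
  seq: number;       // per-sender counter: 1, 2, 3, ...
  timestamp: number; // sender's local clock, in milliseconds
  text: string;
}

// Total order: timestamp first, then sender ID, then sequence number.
// Because the tie-break is deterministic, both chat windows agree on the order.
function compareMessages(a: ChatMessage, b: ChatMessage): number {
  if (a.timestamp !== b.timestamp) return a.timestamp - b.timestamp;
  if (a.senderId !== b.senderId) return a.senderId < b.senderId ? -1 : 1;
  return a.seq - b.seq;
}

// Add an incoming message, ignoring retries of a message already shown
// (same sender and sequence number), and keep the transcript sorted.
function addMessage(transcript: ChatMessage[], msg: ChatMessage): ChatMessage[] {
  const duplicate = transcript.some(
    (m) => m.senderId === msg.senderId && m.seq === msg.seq,
  );
  return duplicate ? transcript : [...transcript, msg].sort(compareMessages);
}

// Usage: two messages with identical timestamps, plus a retried duplicate.
let transcript: ChatMessage[] = [];
transcript = addMessage(transcript, { senderId: "bob", seq: 1, timestamp: 1000, text: "Hi" });
transcript = addMessage(transcript, { senderId: "alice", seq: 1, timestamp: 1000, text: "Hello" });
transcript = addMessage(transcript, { senderId: "bob", seq: 1, timestamp: 1000, text: "Hi" });
console.log(transcript.map((m) => m.text).join(", ")); // Hello, Hi
```

The tie-break rule is arbitrary (alphabetical by sender ID here); what matters is that both sides apply the same rule.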

Realistically, though, how many chat applications work this way? Connecting two people directly means you miss out on richer functionality such as message persistence, centralized auth, and moderation.

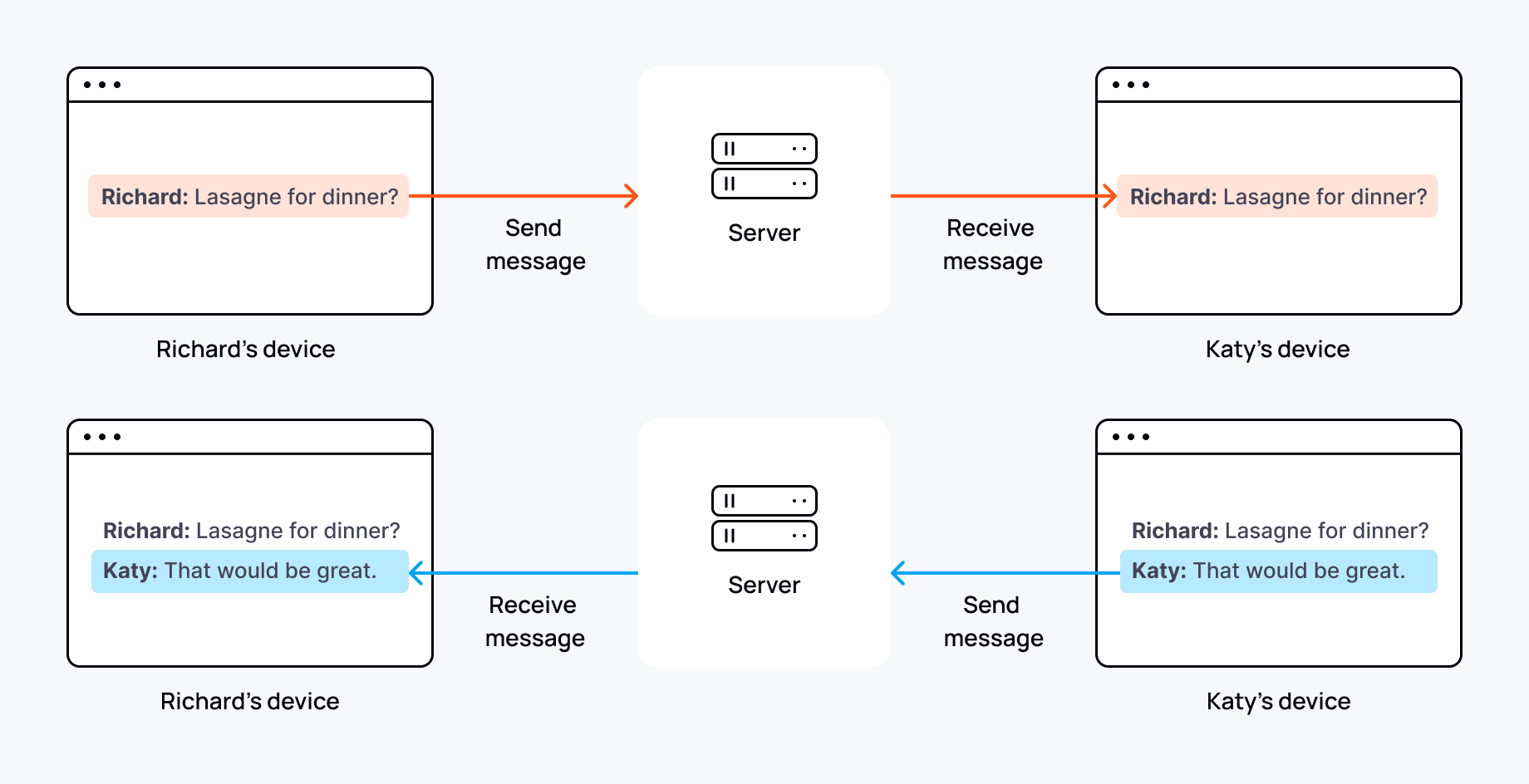

Two people chatting via a backend server

So, if you do want to log conversations, enforce language filters, or integrate with other systems, then you need an intermediary backend server. This provides more flexibility, but adding even one extra system between chat participants increases the chances of messages arriving out of order.

So, what issues might arise?

- Multi-hop network delays: Messages now travel two hops—from the sender to the server, and then from the server to the recipient. This additional step introduces latency on its own, while also increasing the chance of network interruptions, such as temporary congestion on the open internet.

- Processing lag on the server: At times of high load, the server might receive messages faster than it can process them. This can happen in bursty traffic scenarios or when other services are also competing for resources. As a result, a message sent later might be processed and sent out before an earlier one, purely because it reaches an idle processor first.

- Asynchronous handling: One way to handle load more efficiently is to introduce multiple parallel systems and have them handle messages asynchronously. In this model, messages are queued and handled by multiple parallel processes, which can lead to out-of-order delivery if processes complete at different speeds.

Even with just two people chatting, introducing an intermediary backend increases the likelihood of messages arriving out of order. However, the flexibility and control it provides often outweigh the added complexity. For multi-user chats, though, there’s no practical alternative: a backend is essential to manage and coordinate messages reliably across participants.

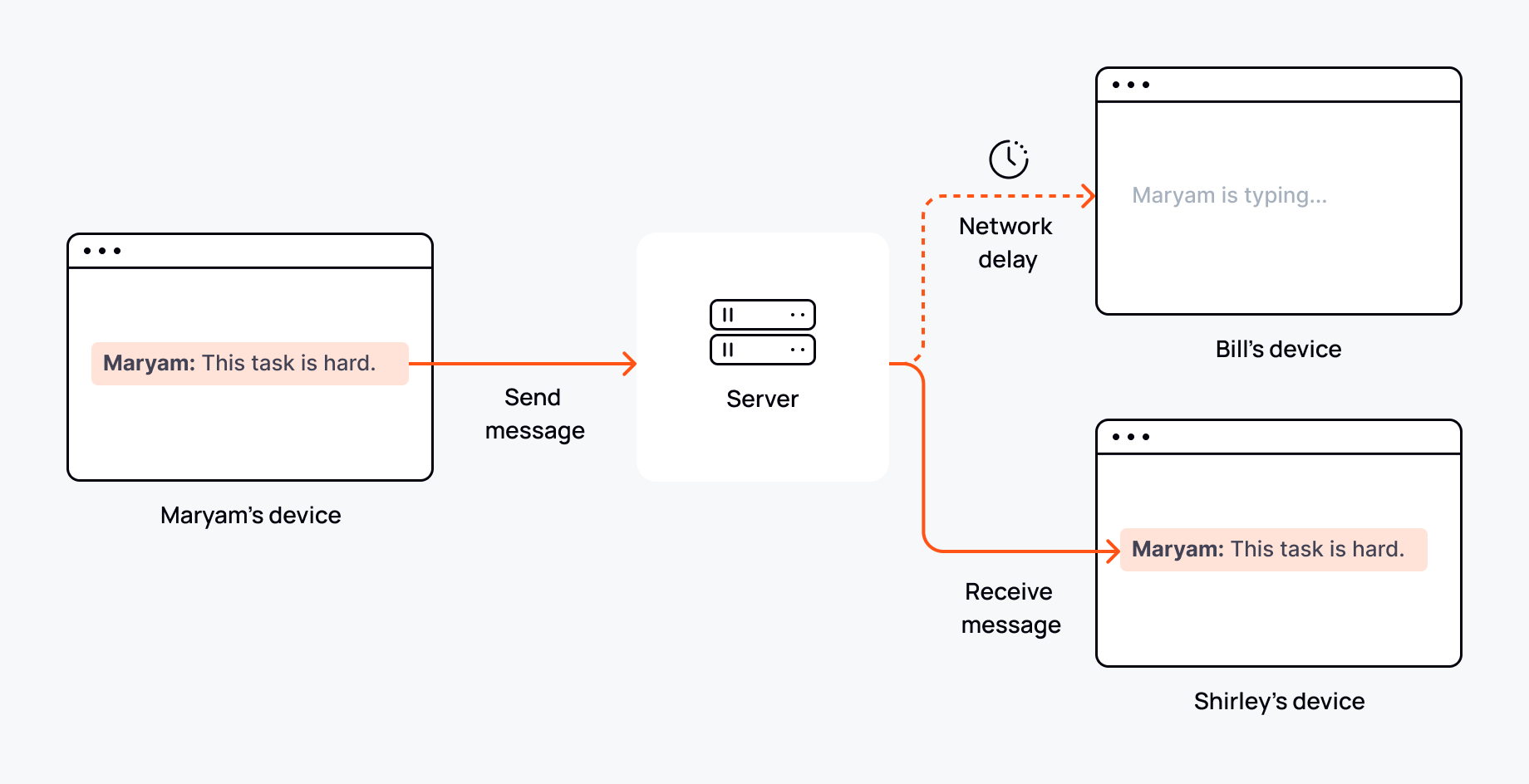

Multi-user conversations

Most chat applications enable multiple people to converse either in one-on-one chats or larger group (multi-user) conversations. In these setups, ensuring that messages arrive in the right order becomes a major challenge. The system isn’t just managing the order between two people; it now has to coordinate messages across multiple participants, each with unique network conditions and potential delays.

Managing ordering across multiple participants adds significant complexity and requires careful engineering to ensure consistency. In particular, you’re likely to come across challenges such as:

- Different network latencies: Each participant’s connection to the backend varies. For example, one person might be located near the cloud data center, while another is on the other side of the world. This difference can mean that a nearby user’s message is received, processed, and delivered in the time it takes a more distant user’s message even to reach the backend.

- Backend processing and load balancing: As with a two-person chat, message ordering depends on the backend’s ability to process messages efficiently. Scaling out to multiple servers adds capacity but also increases complexity. During peak load times, different nodes may process and forward messages at varying speeds, making delivery order inconsistent without additional measures.

- Concurrent messaging and timestamp conflicts: When several users send messages simultaneously, determining the exact order of those messages becomes difficult. Timestamping might seem like an obvious solution, but slight clock differences between devices can lead to noticeable inconsistencies. If two messages arrive at almost the same time, the system must also prioritize one, which requires a clear rule or ordering mechanism to maintain a coherent sequence.

Each time your chat application becomes more complex, you introduce opportunities for messages to arrive out of order.

Realistically, though, just about any chat functionality you build will need to support multi-user conversations.

Now, let’s scale multi-user chat to add more complexity

Handling the ingestion, processing, routing, and delivery of messages for a few hundred users on a chat platform is already a complex task. But as the user base expands to thousands or even millions of concurrent users, the challenges don’t just grow larger; they compound.

This is particularly relevant to preserving message ordering. Let’s look at why.

In a small chat setup with a few hundred users, a single server can typically handle message ingestion, processing, routing, and delivery. That makes it much easier to process messages sequentially because all messages pass through a single point, where they can be ordered and routed without the complexity of cross-server communication or synchronization.

There are limits to how “big” an individual server or virtual machine can become. Once you reach thousands or millions of users, you might find that adding more resources to a single server instance brings diminishing returns. At that point, you need to scale out rather than scale up.

In essence, scaling up is a continuation of what you’ve almost certainly already been doing: adding more chat processes on a single server to create multiple, parallel message queues. That in itself opens the door to messages arriving in the wrong order, because one queue might process faster than another. But the real complexity starts when you have message queues running across multiple backend servers.

There are a couple of ways to do this. One is to have dedicated servers for ingestion, processing, and delivery. However, a more common horizontal scaling model would be to have multiple servers with identical capabilities. This way, you can spin up new instances of the chat backend when demand increases. And when traffic slows, removing instances will save you from paying for capacity you don’t need at that moment.

The trade-off is that this greatly increases the chance of messages arriving out of order.

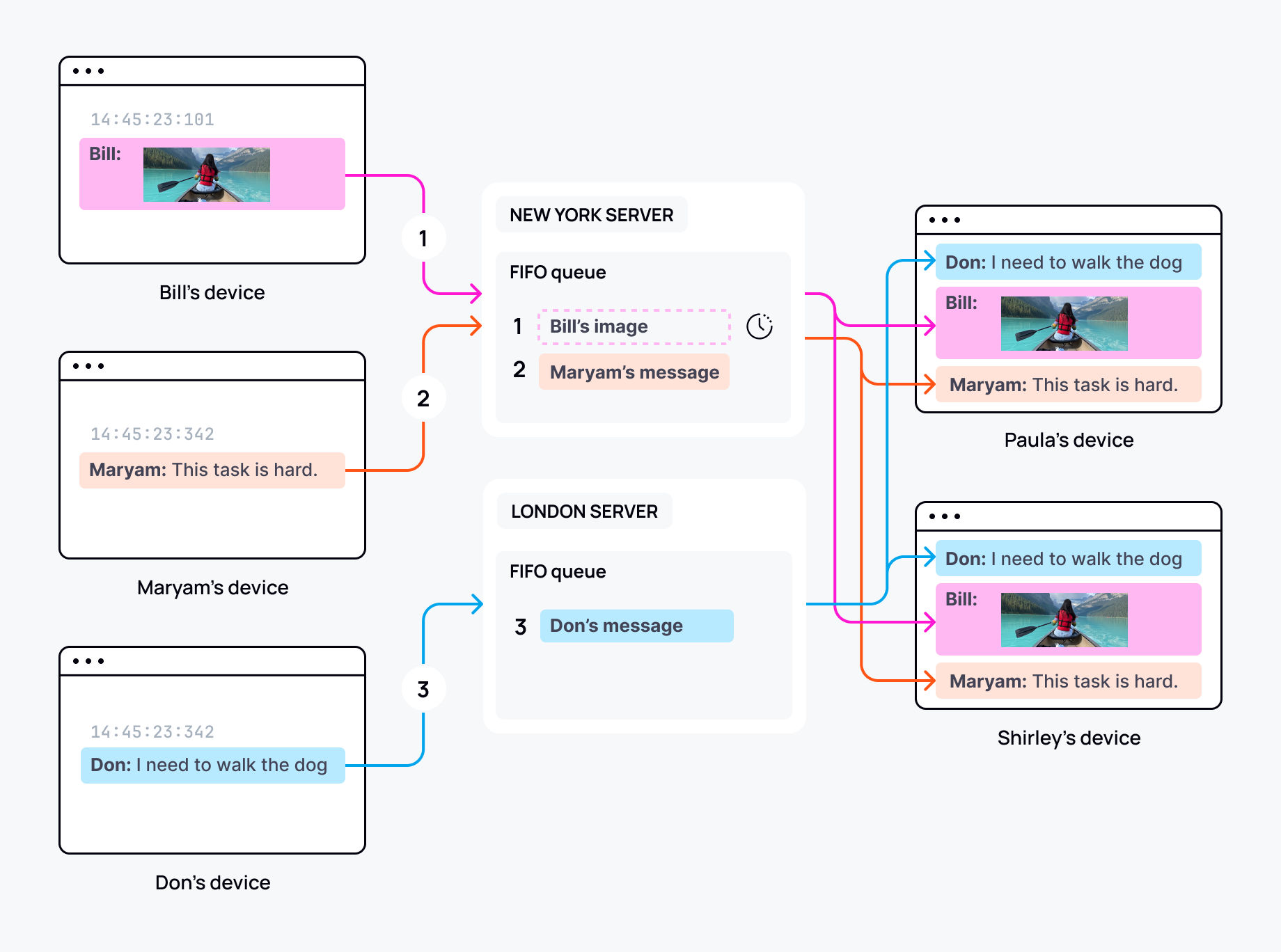

Imagine you have five people in a group chat for work. Bill, Maryam, and Paula are working in an office on the US east coast. Their chat clients connect to a backend server in New York. Don and Shirley are working from home in Europe and their chat clients connect to a server in London.

Coordinating messages across those servers might look something like this:

- Bill’s chat client is connected to the New York server. He sends a message with a large image attached. The New York server processes the message.

- Maryam’s chat client is also connected to the New York server. She sends a message to the group chat and the New York server adds it to the queue behind Bill’s large message.

- Don is connected to the London server. He sends a message at the same time as Maryam. But because Maryam’s message is stuck behind Bill’s on the New York server, and Bill’s message is still processing, Don’s message reaches the other chat participants before both Bill’s and Maryam’s, even though Bill sent his first.

Summarizing the architectural challenge of scaling and maintaining message order

When multiple servers process messages simultaneously, it’s easy to see how messages could arrive out of order. As we saw earlier, if one server is under heavier load, its messages may be delayed, causing an earlier message to arrive after others that were processed more quickly on less busy servers.

But that’s only the surface-level story. Let’s look in more depth at the architectural challenges, how they evolve as you scale, and what the impact is on your chat service’s users.

Maintaining order despite network conditions

Perhaps the most obvious challenge is that chat messages travel across the open internet, where message speed is limited by the distance between users and servers.

While the internet is generally reliable, fluctuations in traffic, varying routes, and occasional network issues mean that latencies and reliability can still vary widely.

Let’s look at what some of the challenges are, how they change with scale, and what impact they might have on your chat users.

- Challenges: Network delays, dropped connections, and retries can disrupt the flow of messages, causing them to arrive out of order.

- Scaling impact: As the number of concurrent users and messages increases, network congestion and packet loss become more frequent, increasing the chance that messages will need to be re-sent or will arrive at varying speeds.

- User impact: Without an effective reordering mechanism, users could see messages arrive out of sequence, breaking the flow of conversation. For example, messages may appear to “jump” backward or forward, especially in poor network conditions, leading to a confusing and fragmented experience.

Synchronizing across devices and users

In group chats, keeping messages synchronized across devices and users is inherently complex. Messages often arrive simultaneously from different sources, requiring careful handling to preserve order.

Here’s how this synchronization challenge scales and affects the user experience:

- Challenge: In group chats, messages from multiple users and devices arrive simultaneously from different sources, creating concurrency challenges that require careful synchronization.

- Scaling impact: As more participants join, messages sent at similar times are often handled by different servers, each with its own queue. This can break the natural first-in, first-out (FIFO) sequence, leading to messages arriving out of order.

- User impact: Without synchronization across devices, users may see messages appear in different orders on different devices or across group members. This inconsistency can make group discussions feel disjointed, as participants may miss crucial context or respond to messages in an unintended sequence.

Managing retries, failures, and duplicates

This is where things become especially tricky. When a message times out or seems to fail, most chat systems will automatically retry. However, with multiple servers and clients involved, these retries can lead to duplicate messages and out-of-order delivery.

- Challenge: Failures, retries, and redundant processing can disrupt message order. When a message fails and is retried, duplicates can occur if the original message eventually succeeds. At scale, fallback nodes and parallel processing add further complexity, with some messages arriving faster or being duplicated.

- Scaling impact: As more nodes handle messages, error management becomes complex. For example, if a message fails to send, another server might retry it, but if the original server recovers and sends it as well, the same message could be delivered twice. With more nodes, the chance of unintentional duplication increases.

- User impact: Users may see duplicate messages or out-of-order deliveries, especially during network interruptions or server failovers. For instance, they might receive the same message multiple times or see a previous message pop up unexpectedly, disrupting the natural conversation flow.

Balancing speed and ordering

As with everything in engineering, there’s a trade-off here. Here’s how the balance between speed of delivery and strict message ordering changes as your system scales, and what it means for the user experience:

- Challenge: Fast message delivery is critical for chat systems, but enforcing strict message order across distributed servers can introduce latency, especially in high-traffic environments.

- Scaling impact: At scale, achieving strict order requires coordination across multiple servers, which can be particularly challenging when servers are distributed across regions. Synchronizing message order across regions increases the need for network hops and consensus, which may slow delivery times. Techniques like sequence numbers or logical clocks can enforce ordering but require careful orchestration to minimize latency impacts.

- User impact: In applications where realtime delivery is crucial, users may notice slight reordering as a trade-off for speed, particularly in high-traffic situations. This balance may be necessary in scenarios like live events or fast-paced group chats, where strict ordering could introduce delays and impact responsiveness.

Building a chat architecture that preserves message order as you scale

Maintaining message order at scale is a game of cat and mouse: as user numbers grow and demands on resources increase, new challenges keep emerging. Each time you solve one issue, rising traffic and added complexity push new problems to the surface, requiring you to adapt your approach. From the protocol you choose to connect clients to the way you handle retries, every decision shapes your system’s ability to preserve message order reliably.

Let’s look at some of the decisions you can make and the impact they have on your ability to preserve message order.

Choosing the right protocol

The protocol you choose plays a fundamental role in maintaining message order at scale. Some protocols provide in-order delivery at the protocol level itself, while others push ordering to the application layer. For chat, there are only two realistic options: MQTT and WebSocket. While there is a limited JavaScript implementation of gRPC, the protocol is effectively unavailable in web browsers for most practical applications. And WebRTC’s focus is on streamed data, where the velocity and continuity of data flow is more important than the integrity of individual messages.

Choosing between MQTT and WebSocket comes down to the type of devices your chat participants are likely to be using. MQTT is a publish/subscribe-based protocol whose origins in IoT make it very power-efficient, but it doesn’t offer two-way communication at the protocol level. And, although publish/subscribe is useful at the application level, it lacks the simplicity and directness of WebSocket’s true bidirectional communication. With the advent of low-cost yet powerful Android devices, there’s less need for MQTT’s power saving in chat.

So, WebSocket is most likely the better choice of protocol for chat. Its persistent, low-latency, bidirectional connections suit chat well and it is available in all browsers and major development languages. However, it doesn’t do the full job of guaranteeing message ordering.

What does that mean?

WebSocket guarantees ordered delivery only for messages sent and received over the same, uninterrupted connection. If you have multiple connections (due to scale, reconnections, or multi-device usage), you need to ensure global message order at the application level. The alternative is to choose a realtime or chat platform that handles message ordering on your behalf.
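One common application-level approach, sketched here under stated assumptions, is to have a single sequencer per conversation assign each message a strictly increasing serial, and have clients remember the last serial they displayed. When a new connection opens, or a live message reveals a gap, the client asks for everything after that serial. `RoomLog` and `ChatClient` are hypothetical names, and the in-memory log stands in for whatever durable store and WebSocket transport you actually use.

```typescript
// Sketch: resuming in order after a WebSocket reconnection.
// Assumption: one sequencer per room assigns strictly increasing serials.
// RoomLog and ChatClient are hypothetical; transport code is omitted.
interface SequencedMessage {
  serial: number;
  text: string;
}

class RoomLog {
  private messages: SequencedMessage[] = [];

  // The single point that accepts messages also assigns their serials.
  append(text: string): SequencedMessage {
    const msg: SequencedMessage = { serial: this.messages.length + 1, text };
    this.messages.push(msg);
    return msg;
  }

  // Everything after the given serial, in serial order.
  since(lastSerial: number): SequencedMessage[] {
    return this.messages.filter((m) => m.serial > lastSerial);
  }
}

class ChatClient {
  lastSerial = 0;
  shown: string[] = [];

  // Handle a live message. Returns false if a gap is detected, meaning the
  // client should call resume() instead of displaying out of order.
  receive(msg: SequencedMessage): boolean {
    if (msg.serial <= this.lastSerial) return true; // already shown
    if (msg.serial !== this.lastSerial + 1) return false; // gap
    this.shown.push(msg.text);
    this.lastSerial = msg.serial;
    return true;
  }

  // On a new connection (or after a gap), replay what was missed.
  resume(log: RoomLog): void {
    for (const msg of log.since(this.lastSerial)) this.receive(msg);
  }
}

// Usage: "two" is published while the client is disconnected.
const log = new RoomLog();
const client = new ChatClient();
client.receive(log.append("one"));
log.append("two");
const live = log.append("three"); // first message on the new connection
if (!client.receive(live)) client.resume(log);
console.log(client.shown.join(", ")); // one, two, three
```

Because ordering is decided by the serial rather than by which connection delivered the message, the same logic covers reconnections and, with a shared log, multiple devices.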

Adding unique identifiers and sequencing

Unique identifiers, like sequence numbers or timestamps, are critical for keeping messages in order, but they’re tricky to manage across distributed servers at scale. A centralized sequence generator can become a bottleneck, adding latency as nodes wait for the latest sequence number.

In a fully distributed setup, logical clocks or decentralized sequencing bring their own risks, especially during network partitions: any scheme that relies on physical timestamps is exposed to clock drift, and nodes can end up with inconsistent views of the order. To maintain order, you’ll often need consensus mechanisms, such as Raft or Paxos, to keep all nodes aligned, but this adds complexity and coordination overhead.

To address these challenges, there are a few practical approaches for managing unique identifiers at scale. Each has its trade-offs in terms of latency, complexity, and consistency, but they can help ensure ordered message delivery across distributed systems:

- System-wide sequence numbers: Using a global sequence number enforces order but can introduce latency, as servers must sync. Logical clocks or a centralized sequence generator (e.g., with Raft for leader election) can mitigate risks but add complexity.

- Timestamps with client-side IDs: Timestamps, combined with a unique sender ID, provide order but need buffer adjustments to account for clock drift. Synchronizing clocks using NTP or setting buffers can help avoid minor inconsistencies.

- Combined sequencing: Using both sequence numbers and timestamps provides a more precise order, though it requires complex coordination, especially under high traffic.
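Logical clocks are the usual way to order messages without trusting wall clocks. The sketch below is a textbook Lamport clock, not a description of any particular product’s implementation: each node increments a counter for every message it sends and, on receiving a message, advances its counter past the sender’s. If a message was sent after its sender had seen another, it always sorts later; genuinely concurrent messages are ordered arbitrarily but identically on every node, using the node ID as a tie-breaker. The node names are illustrative.

```typescript
// Sketch: a textbook Lamport clock giving a total order across nodes.
interface Stamp {
  counter: number;
  nodeId: string;
}

class LamportClock {
  private counter = 0;
  readonly nodeId: string;

  constructor(nodeId: string) {
    this.nodeId = nodeId;
  }

  // Sending a message is a local event: advance the counter and stamp it.
  tick(): Stamp {
    this.counter += 1;
    return { counter: this.counter, nodeId: this.nodeId };
  }

  // Receiving a message: move past the sender's counter, so anything this
  // node sends next sorts after what it has already seen.
  observe(remote: Stamp): void {
    this.counter = Math.max(this.counter, remote.counter) + 1;
  }
}

// Compare counters; break ties by node ID so every node agrees.
function compareStamps(a: Stamp, b: Stamp): number {
  if (a.counter !== b.counter) return a.counter - b.counter;
  if (a.nodeId === b.nodeId) return 0;
  return a.nodeId < b.nodeId ? -1 : 1;
}

// Usage: London replies after seeing New York's message.
const ny = new LamportClock("new-york");
const ldn = new LamportClock("london");
const a = ny.tick();  // new-york:1
ldn.observe(a);       // London's counter jumps to 2
const b = ldn.tick(); // london:3, always after a
const c = ny.tick();  // new-york:2, concurrent with b
const order = [b, c, a].sort(compareStamps).map((s) => `${s.nodeId}:${s.counter}`);
console.log(order.join(" ")); // new-york:1 new-york:2 london:3
```

Lamport clocks do not capture real time: `c` sorts before `b` here only because of its lower counter, which is acceptable because neither message could have depended on the other.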

Buffering, windowing, and watermarking

To keep messages ordered without adding noticeable lag, large-scale chat systems often borrow techniques from streaming data: buffering, windowing, and watermarking. Here’s how each approach helps manage the challenges of maintaining order as the system scales:

- Buffering and reordering: Incoming messages are temporarily stored on the client or server until they can be shown in sequence. Sequence numbers help detect gaps. If a message arrives out of order, later messages are buffered until the gap is filled. This preserves order but can cause delays if not tuned carefully for high traffic, where buffering needs to scale dynamically with load.

- Windowing: Windowing holds messages within defined time intervals, reordering them before display. Once the window closes, messages are released together, which reduces the risk of out-of-order display. At scale, adjusting window size based on traffic conditions is essential to keep the right balance between responsiveness and message order.

- Watermarking: Watermarking sets a time limit for holding messages in the buffer. Once that limit is reached, all buffered messages are displayed—even if slightly out of order—keeping chat responsive and preventing long delays that result from waiting on older messages.

Together, these techniques help manage reordering and timing constraints, balancing speed and accuracy in message delivery as the system scales. By fine-tuning these approaches, you can maintain a responsive, realtime experience for users, even under high traffic and fluctuating loads.
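As an illustration of buffering combined with watermarking (windowing is not shown), here is a minimal client-side reorder buffer. It assumes each message carries a consecutive per-conversation sequence number starting at 1, and that the caller passes in the current time so the behaviour is deterministic. `ReorderBuffer` and `maxWaitMs` are hypothetical names, and the wait limit would need tuning for real traffic.

```typescript
// Sketch: client-side reorder buffer with a watermark (maximum wait).
// Assumptions: consecutive per-room sequence numbers starting at 1; the
// caller supplies the current time in milliseconds.
interface Incoming {
  seq: number;
  text: string;
}

class ReorderBuffer {
  private nextSeq = 1;                           // next number we expect to show
  private pending = new Map<number, Incoming>(); // early arrivals held back
  private skipped = new Set<number>();           // numbers we stopped waiting for
  private gapSince: number | null = null;        // when the current gap was noticed
  private maxWaitMs: number;

  constructor(maxWaitMs: number) {
    this.maxWaitMs = maxWaitMs;
  }

  // Accept a message and return whatever can now be displayed, in order.
  push(msg: Incoming, now: number): Incoming[] {
    if (this.skipped.delete(msg.seq)) {
      // It arrived after the watermark expired: show it late, not never.
      return [msg, ...this.flush(now)];
    }
    if (msg.seq >= this.nextSeq && !this.pending.has(msg.seq)) {
      this.pending.set(msg.seq, msg);
    } // anything else is a duplicate of a message already shown
    return this.flush(now);
  }

  // Also call on a timer, so a gap is released even if nothing new arrives.
  flush(now: number): Incoming[] {
    const ready: Incoming[] = [];
    while (this.pending.size > 0) {
      const next = this.pending.get(this.nextSeq);
      if (next !== undefined) {
        ready.push(next);
        this.pending.delete(this.nextSeq);
        this.nextSeq += 1;
        this.gapSince = null;
        continue;
      }
      if (this.gapSince === null) this.gapSince = now;
      if (now - this.gapSince < this.maxWaitMs) break; // keep waiting
      // Watermark reached: give up on the missing numbers and move on.
      const lowest = Math.min(...Array.from(this.pending.keys()));
      for (let s = this.nextSeq; s < lowest; s++) this.skipped.add(s);
      this.nextSeq = lowest;
      this.gapSince = null;
    }
    return ready;
  }
}

// Usage: message 3 overtakes message 2 and is held until 2 arrives.
const buffer = new ReorderBuffer(100);
const seqs = (msgs: Incoming[]): string => msgs.map((m) => m.seq).join(",");
console.log(seqs(buffer.push({ seq: 1, text: "a" }, 0)));  // 1
console.log(seqs(buffer.push({ seq: 3, text: "c" }, 10))); // (empty: waiting for 2)
console.log(seqs(buffer.push({ seq: 2, text: "b" }, 50))); // 2,3
```

While a gap is short, holding messages keeps the display in order. Once `maxWaitMs` passes, the buffer stops waiting and shows what it has; if the missing message turns up later, it is displayed late rather than dropped, which is the "slightly out of order" trade-off described above.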

Concurrency and load distribution

Concurrency is both an asset and a challenge in a large-scale chat system. While it boosts capacity and resilience, it also increases the risk of messages arriving out of order. To tackle this, there are a couple of core techniques to consider:

- Consistent routing: Direct all messages from a user or group to a specific processing node or queue, minimizing the chance of out-of-sequence processing even during peak loads. As the system scales, consistent routing keeps ordering predictable, though it requires careful management to prevent bottlenecks at individual nodes.

- Partitioning strategies: Techniques like consistent hashing distribute load across nodes while keeping message order intact. By routing messages from the same user or group to the same partition, consistent hashing helps prevent overloading any one node, maintaining order across distributed systems. At scale, though, partitions need tuning to adapt to shifting loads without sacrificing order.

These methods draw on advanced distributed computing techniques. As concurrency grows, maintaining message order requires effective load distribution, consistent state management, and fault tolerance across nodes. While consistent routing and partitioning help streamline ordering, scaling out demands careful coordination to avoid reordering issues.
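To show how consistent hashing keeps a conversation on one node, here is a minimal hash ring. It assumes room IDs are the routing key, uses a simple 32-bit FNV-1a-style hash over UTF-16 code units, and gives each node 64 virtual points to spread load; all of these are illustrative choices rather than recommendations. A production ring would use binary search and track node health, both omitted here.

```typescript
// Sketch: consistent hashing so every message for a room hits the same node.
// Assumptions: room IDs are the routing key; FNV-1a-style 32-bit hash over
// UTF-16 code units; 64 virtual points per node. All illustrative choices.
function hash32(input: string): number {
  let h = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    h ^= input.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0; // multiply by the FNV prime, mod 2^32
  }
  return h;
}

interface RingPoint {
  hash: number;
  node: string;
}

class HashRing {
  private points: RingPoint[] = [];
  private replicas: number;

  constructor(nodes: string[], replicas = 64) {
    this.replicas = replicas;
    for (const node of nodes) this.addNode(node);
  }

  addNode(node: string): void {
    for (let i = 0; i < this.replicas; i++) {
      this.points.push({ hash: hash32(`${node}#${i}`), node });
    }
    this.points.sort((a, b) => a.hash - b.hash); // keep the ring ordered
  }

  removeNode(node: string): void {
    this.points = this.points.filter((p) => p.node !== node);
  }

  // Owner = first point at or after the key's hash, wrapping to the start.
  // (A linear scan keeps the sketch short; real rings use binary search.)
  nodeFor(key: string): string {
    if (this.points.length === 0) throw new Error("ring has no nodes");
    const h = hash32(key);
    const owner = this.points.find((p) => p.hash >= h) ?? this.points[0];
    return owner.node;
  }
}

// Usage: route rooms, then add a node and count how many rooms move.
const ring = new HashRing(["node-a", "node-b", "node-c"]);
const rooms = Array.from({ length: 200 }, (_, i) => `room-${i}`);
const before = rooms.map((r) => ring.nodeFor(r));
ring.addNode("node-d");
const after = rooms.map((r) => ring.nodeFor(r));
const moved = rooms.filter((_, i) => before[i] !== after[i]);
console.log(`${moved.length} of ${rooms.length} rooms moved`);
```

Because a room’s hash never changes, every message for that room reaches the same node, which can then order it locally. When a node joins, only the rooms that now land on one of its points move, and they all move to the new node; that limited reshuffling is what makes this approach attractive for scaling out.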

Handling retries and deduplication

While ordering is crucial, handling duplicate messages is equally important, especially where you have multiple concurrent processes. Deduplication logic ensures that each message is processed only once, even if it’s received multiple times due to retries.

- Deduplication logic: Using unique identifiers for each message helps prevent duplicates from being processed out of sequence. By tracking these IDs across distributed nodes, the system can detect and ignore duplicates, ensuring retried messages don’t break the intended order or result in multiple displays of the same message.

- Idempotent message processing: Idempotency ensures that processing the same message multiple times has the same effect as processing it once. For example, if a message updates a chat or changes a user’s status, the logic checks whether the update has already been applied before proceeding. This approach prevents duplicate messages from causing unintended changes or misordering.

Together, deduplication and idempotent processing ensure that messages are handled reliably and in order, even under high traffic and frequent retries. These techniques help maintain consistency across the system, keeping the chat experience smooth and predictable as demand scales.
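The two techniques fit together as in this minimal sketch. It assumes every message carries a sender-generated ID that stays the same across retries, and that status updates carry a per-user version number; `Deduplicator`, `applyStatus`, and the field names are hypothetical. The ID set is bounded so memory stays flat, which means a duplicate arriving after its ID has been evicted would slip through. One way to avoid a shared, cluster-wide set is to route each conversation to a single node, as described under consistent routing.

```typescript
// Sketch: deduplicating retried messages and applying updates idempotently.
// Assumptions: IDs are generated by the sender and reused on every retry;
// status updates carry a per-user version. All names are hypothetical.
class Deduplicator {
  private seen = new Set<string>();
  private order: string[] = []; // insertion order, used to evict the oldest IDs
  private capacity: number;

  constructor(capacity: number) {
    this.capacity = capacity;
  }

  // True the first time an ID is seen, false for any repeat still remembered.
  firstSeen(id: string): boolean {
    if (this.seen.has(id)) return false;
    this.seen.add(id);
    this.order.push(id);
    if (this.order.length > this.capacity) {
      const oldest = this.order.shift();
      if (oldest !== undefined) this.seen.delete(oldest);
    }
    return true;
  }
}

interface StatusUpdate {
  userId: string;
  status: string;
  version: number;
}

const statuses = new Map<string, StatusUpdate>();

// Idempotent: re-applying the same update, or an older one, changes nothing.
function applyStatus(update: StatusUpdate): void {
  const current = statuses.get(update.userId);
  if (current !== undefined && current.version >= update.version) return;
  statuses.set(update.userId, update);
}

// Usage: a chat message retried once, and status updates replayed out of order.
const dedup = new Deduplicator(10_000);
const shown: string[] = [];
const attempts: [string, string][] = [["m1", "Hi"], ["m1", "Hi"], ["m2", "Hello"]];
for (const [id, text] of attempts) {
  if (dedup.firstSeen(id)) shown.push(text);
}
applyStatus({ userId: "u1", status: "away", version: 2 });
applyStatus({ userId: "u1", status: "online", version: 1 }); // stale: ignored
applyStatus({ userId: "u1", status: "away", version: 2 });   // repeat: no effect
console.log(shown.join(", "), statuses.get("u1")?.status); // Hi, Hello away
```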

Other architectural considerations

Beyond ordering and concurrency, your chat system’s architecture will also need to handle:

- Delivery semantics at scale: Deciding between at-most-once, at-least-once, and exactly-once delivery becomes more complex as you handle more users and messages. Exactly-once delivery ensures each message is delivered once and only once, even during retries. Achieving this at scale requires idempotent processing and stateful components, adding complexity and resource overhead. As your system grows, you need to balance avoiding duplicates against increased latency and processing costs.

- Message durability under heavy load: Durability in case of failures is straightforward with low traffic. Scale up, though, and you could find persistent storage becomes a bottleneck. Your system must efficiently write to durable storage and recover messages after failures, all while maintaining realtime performance for millions of users.
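On the sending side, at-least-once delivery usually means keeping every message until the server acknowledges it and resending on timeout or reconnect. This sketch assumes the server acknowledges by message ID and that `send` is a hypothetical transport callback (for example, one wrapping a WebSocket); `Outbox` is likewise an illustrative name. Because a resend reuses the original ID, a receiver that discards repeats by ID, as described in the deduplication section, can turn at-least-once delivery into effectively exactly-once processing.

```typescript
// Sketch: at-least-once sending with stable message IDs.
// Assumptions: the server acks each message by ID; `send` is a hypothetical
// transport callback (for example, one wrapping a WebSocket).
interface OutgoingMessage {
  id: string;
  text: string;
}

class Outbox {
  private unacked = new Map<string, OutgoingMessage>(); // keeps insertion order
  private counter = 0;
  private clientId: string;
  private send: (msg: OutgoingMessage) => void;

  constructor(clientId: string, send: (msg: OutgoingMessage) => void) {
    this.clientId = clientId;
    this.send = send;
  }

  // Assign the ID once, at creation, so every retry carries the same ID.
  publish(text: string): string {
    this.counter += 1;
    const msg: OutgoingMessage = { id: `${this.clientId}-${this.counter}`, text };
    this.unacked.set(msg.id, msg);
    this.send(msg);
    return msg.id;
  }

  ack(id: string): void {
    this.unacked.delete(id);
  }

  // After a timeout or reconnect, resend everything unacknowledged, oldest first.
  retryAll(): void {
    for (const msg of this.unacked.values()) this.send(msg);
  }
}

// Usage: the second message's ack is lost, so it is sent again with its original ID.
const wire: string[] = [];
const outbox = new Outbox("client-42", (msg) => wire.push(msg.id));
const first = outbox.publish("hello");
outbox.publish("anyone there?");
outbox.ack(first);
outbox.retryAll();
console.log(wire.join(" ")); // client-42-1 client-42-2 client-42-2
```

Persisting the outbox (rather than holding it in memory as here) is what would let unacknowledged messages survive an app restart, which ties back to the durability concern above.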

Creating a resilient, responsive chat architecture that performs well at scale involves significant architectural and engineering effort. The challenges evolve and multiply as your traffic and user base grow, raising the question: Is building and maintaining such a complex system in-house the best use of your resources?

Should you build or buy to guarantee message ordering?

Building a chat system that reliably maintains message order at scale is no small feat. And, like other parts of your application infrastructure, it doesn’t have to be built from scratch.

Instead, you essentially have three main options. Each comes with different characteristics in terms of time, resources, and control. Let’s explore them so you can evaluate which approach aligns best with your goals and capabilities.

- Build in-house: Building a chat system in-house requires a significant, ongoing commitment. It’s resource-intensive and demands specialized expertise for design, implementation, and maintenance. While building in-house offers maximum control and customization, it often diverts resources from your core application needs. Even if chat is central to your application, consider whether you can deliver features beyond what existing platforms offer. This option can make sense if you need highly specialized features or tight control, but be prepared for the dedicated team and budget required to support it long-term.

- Use a chat service: Using a dedicated chat service can save your team’s time and resources, letting you focus on core functionality rather than chat-specific challenges. But the flipside is that an out-of-the-box solution might require that you modify your requirements to meet the service’s approach. And you could find that customization or advanced features are harder to achieve. Crucially, though, the biggest downside of most chat services is that they don’t guarantee message ordering. As a result, you'll need a plan to handle out-of-order messages when they arrive on the client.

- Build with a realtime platform: Working with a realtime platform-as-a-service (PaaS), like Ably, lets you offload the complexities of scaling, message ordering, and maintaining low latency while retaining the flexibility to build exactly the solution you need. This reduces the long-term load both on your team and infrastructure, helping you get features to users faster. Beware that, unlike Ably, not all realtime PaaS providers guarantee message ordering. When selecting a provider, consider whether you'd need to take on the engineering and maintenance costs of handling message ordering yourself.

Ultimately, each option requires balancing control and customization against resource limitations. The best approach comes down to weighing what you can manage in-house over the long term against freeing up your team’s time by using an external service. If you’re still not sure which is the right approach for you, take a look at our “Build vs Buy” guide for live chat.

How Ably can help with your chat architecture

For most teams, using existing platforms and libraries is the most efficient way to build reliable realtime features without starting from scratch. While chat may be your immediate priority, it’s part of a broader category of realtime functionality that depends on low-latency data transport, guaranteed delivery, and correct ordering.

Whether you’re connecting microservices, integrating external data sources, distributing realtime updates to end-users, or enabling realtime collaboration, they all rely on the same foundational infrastructure.

Ably is a realtime PaaS that thousands of companies trust to power realtime experiences for billions of users. With Ably, you can either build your own custom chat using Ably Pub/Sub or rapidly launch a fully-featured chat service with Ably Chat. Whichever you choose, you can serve massive numbers of users reliably and smoothly thanks to:

- Global edge infrastructure: Keeps realtime functionality close to users, ensuring low-latency delivery with a global median latency of 6.5ms.

- 99.999% uptime SLA: Gives confidence that your application will consistently serve users without interruption.

- Strong data integrity: Guarantees message ordering and exactly-once delivery, even in unreliable network conditions.

- Developer-friendly SDKs: Provides support for all major languages and frameworks, ensuring a seamless developer experience.

Whether you’re building a chat application or a suite of realtime features, Ably’s global infrastructure and realtime expertise give you the flexibility and power to create a seamless user experience. Try it free today.