Today we’re launching Ably AI Transport: a drop-in realtime delivery and session layer that sits between agents and devices, so AI experiences stay continuous across refreshes, reconnects, and device switches, without an architecture rewrite.

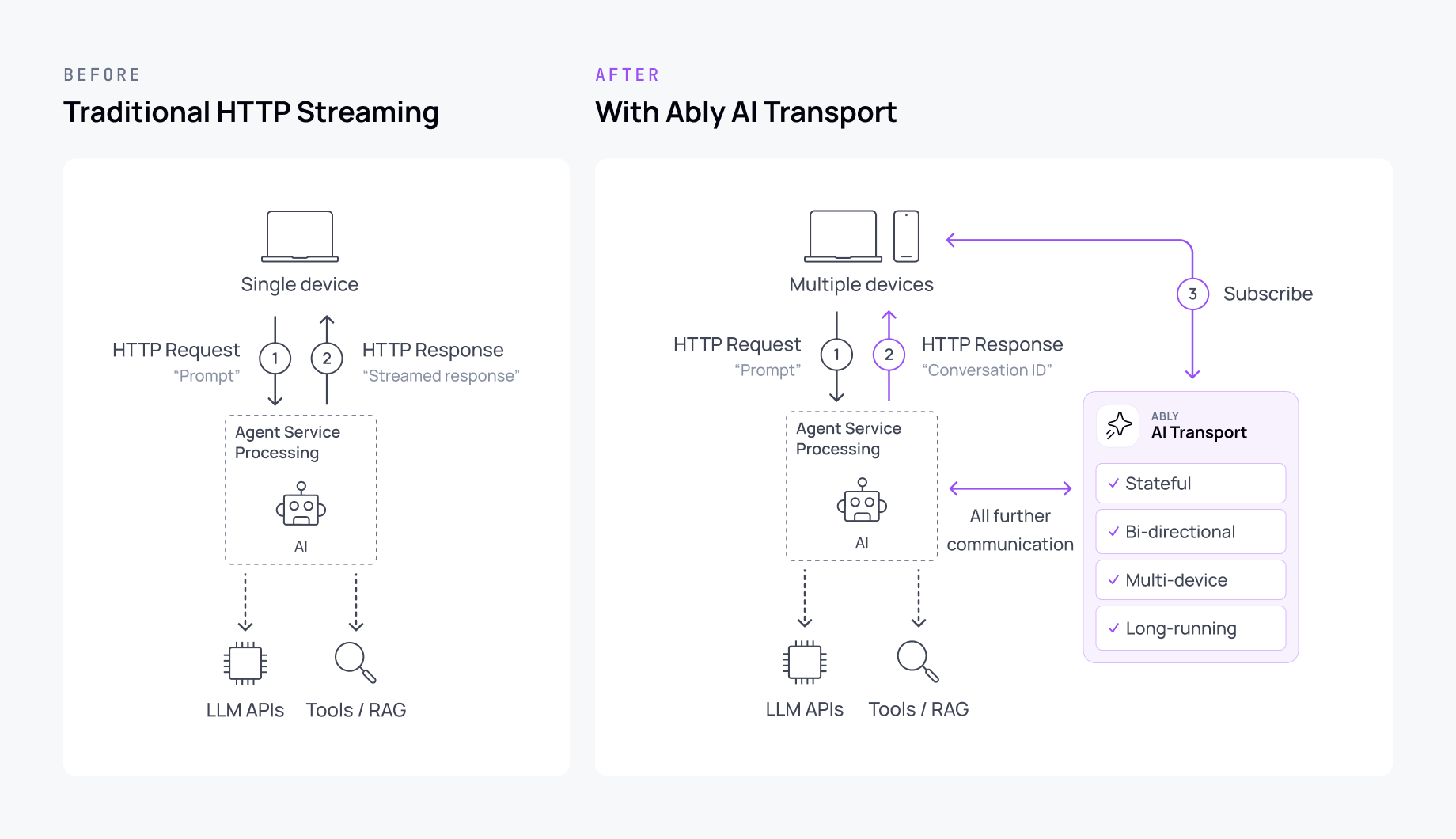

The gap: HTTP streaming breaks down for stateful AI UX

AI has moved from “type and wait” requests to experiences that are long-running and stateful: responses stream, users steer mid-flight, and work needs to carry across tabs and devices. That shift changes what “working” means in production. It’s not just whether the model can generate tokens; it’s whether the experience stays continuous when real users behave like real users do.

Most AI apps still start with a connection-oriented setup: the client opens a streaming connection (SSE, fetch streaming, sometimes WebSockets), the agent generates tokens, and the UI renders them as they arrive. It’s low friction and demos well.

But HTTP streaming only solves the first part of the problem, and it falls short in two ways.

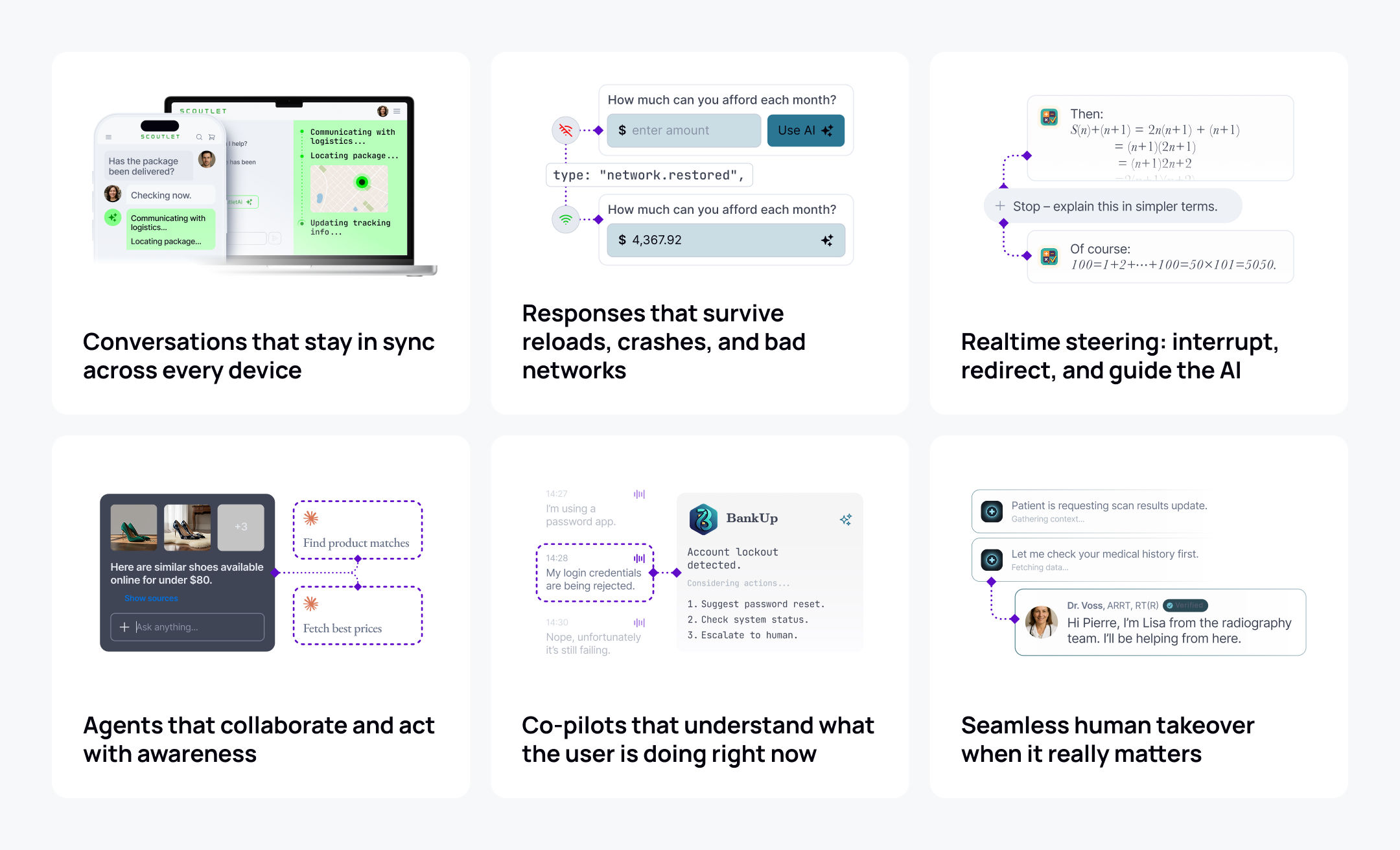

First: continuity. When output is tied to a specific connection, the experience becomes fragile by default. Refreshes, network changes, backgrounding, multiple tabs, device switches, agent handovers (even agent crashes) are normal behaviour. And they’re exactly where teams see partial output, missing tokens, duplicated messages, drifting state, and “start again” recovery paths. That’s where user trust gets lost.

Second: capability. A connection-first transport layer doesn’t just make UX fragile. It limits what you can build. Once you want true collaborative patterns like barge-in, live steering, copilot-style bidirectional exchange, multi-agent coordination, or a seamless human takeover with full context, you need more than “a stream.” You need a stateful conversation layer that can support multiple participants, resumable delivery, and shared session state.

So teams patch it: buffering, replay, offsets, reconnection logic, session IDs, routing rules for interrupts and tool results, multi-subscriber consistency, and observability once production incidents start. It’s critical work, but it’s not differentiation.

What Ably AI Transport does

AI Transport gives each AI conversation a durable, bidirectional session that isn’t tied to one tab, connection, or agent. Agents publish output into a session channel, clients subscribe from any device, and Ably handles the delivery guarantees you’d otherwise rebuild yourself: ordered delivery, recovery after reconnects, and fan-out to multiple subscribers.
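To make the model concrete, here is a minimal sketch assuming a hypothetical convention of one channel per conversation under a `session:` namespace. `sessionChannelName` and `joinSession` are illustrative helpers, not AI Transport APIs; the only Ably calls used are `realtime.channels.get()` and `channel.subscribe()`.

```javascript
// Hypothetical convention: one channel per conversation, under a "session:" namespace.
function sessionChannelName(conversationId) {
  if (!conversationId) throw new Error('conversationId is required');
  return `session:${conversationId}`;
}

// Any participant (a browser tab, a phone, an agent worker) joins the same session
// by subscribing to the same channel; Ably fans each message out to all of them.
// `realtime` is an Ably.Realtime instance (or anything with the same channels.get shape).
async function joinSession(realtime, conversationId, onMessage) {
  const channel = realtime.channels.get(sessionChannelName(conversationId));
  // subscribe() implicitly attaches the channel and resolves once it is attached.
  await channel.subscribe(onMessage);
  return channel;
}
```

A desktop tab and a phone that both call `joinSession(realtime, 'conv-123', render)` land on the same channel, so fan-out to both is handled by Ably rather than by your own routing.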

It’s deliberately model- and framework-agnostic. You keep your agent runtime and orchestration. AI Transport handles the delivery and session layer underneath.

The key shift: sessions become channels

In a connection-oriented setup, the “session” effectively lives inside the streaming pipe. When the pipe breaks, the session breaks with it, and restoring continuity falls to custom application code.

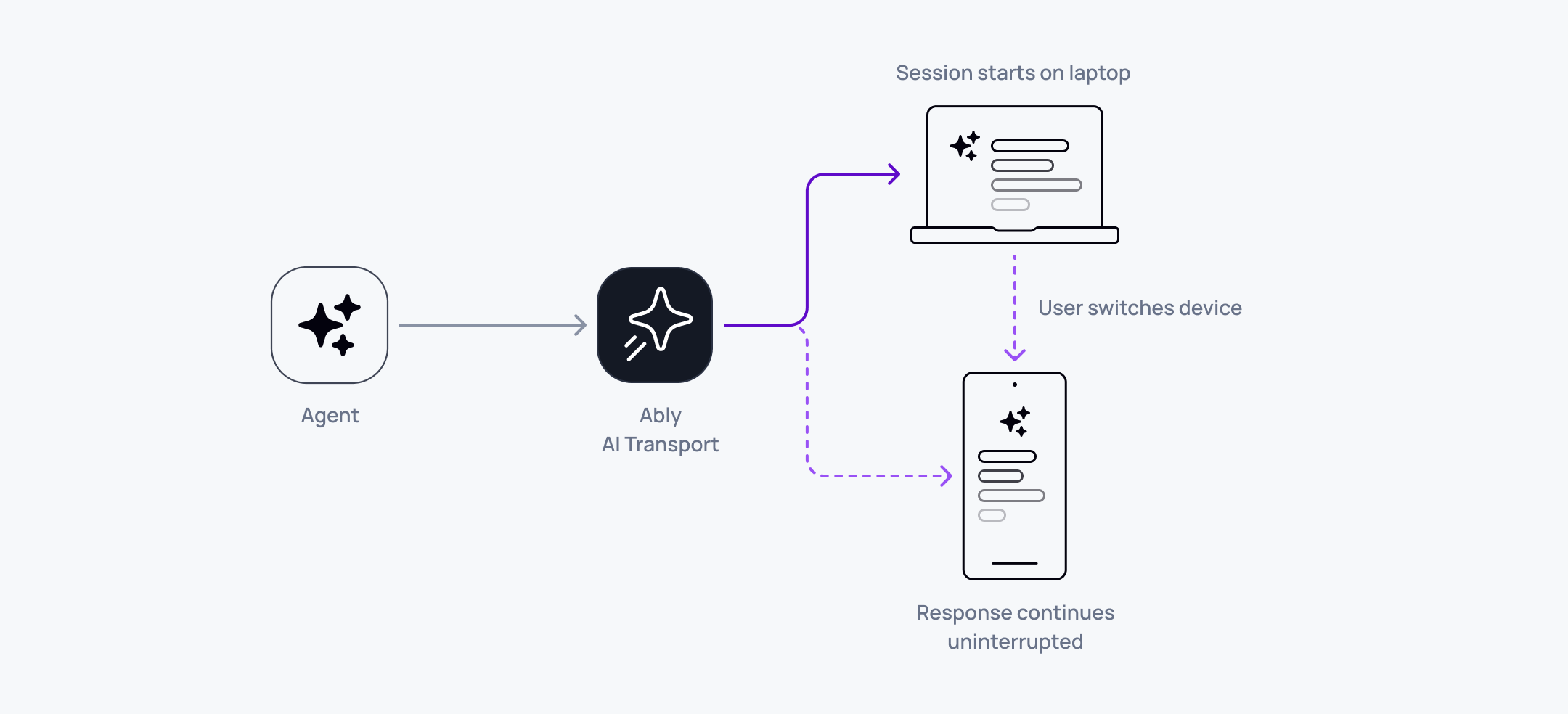

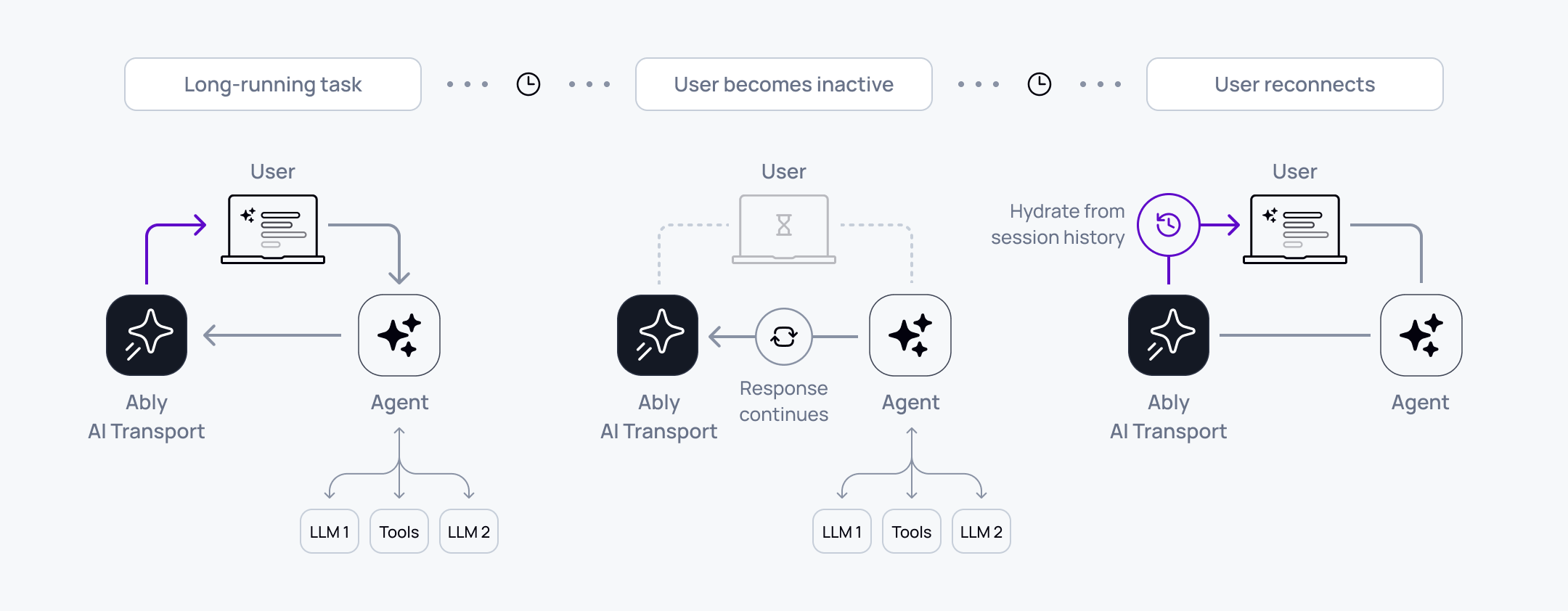

With AI Transport, the session is created once and represented as a durable channel. Agents and clients can join independently. Refresh becomes reattach and hydrate. Device switching becomes another subscriber joining the same session. Multi-device behaviour becomes fan-out rather than custom routing. Agents and humans stay connected over a transport designed for low-latency, bidirectional AI conversations.
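“Reattach and hydrate” can be sketched with standard Ably channel calls: subscribe first, then load history up to the attach point using the `untilAttach` option, buffering live messages until the backlog has rendered. `attachAndHydrate` and the `render` callback are hypothetical names, and the sketch assumes the session history is small enough to load in full.

```javascript
// Sketch of "refresh becomes reattach and hydrate".
// `channel` is an Ably RealtimeChannel; render(message) is your UI callback.
async function attachAndHydrate(channel, render) {
  let hydrated = false;
  const buffered = [];

  // 1. Subscribe first so nothing published after the attach is missed.
  //    Until history has rendered, live messages are held in a buffer.
  await channel.subscribe((message) => {
    if (hydrated) render(message);
    else buffered.push(message);
  });

  // 2. Fetch what was published before the attach point. untilAttach bounds
  //    history at the point where the live subscription begins.
  const past = [];
  let page = await channel.history({ untilAttach: true });
  while (page) {
    past.push(...page.items);
    page = page.hasNext() ? await page.next() : null;
  }

  // History is returned newest-first by default, so reverse it before rendering.
  past.reverse().forEach(render);

  // 3. Flush live messages that arrived during the fetch, then render directly.
  buffered.forEach(render);
  hydrated = true;
}
```

For very long sessions you would likely cap the number of pages (or pass a `limit`) rather than loading everything on every refresh.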

How Ably AI Transport ensures a resilient, stateful AI UX

Resumable, ordered token streaming: A great AI UX depends on durable streaming. Output is treated as session data, so clients can catch up cleanly after refreshes, brief dropouts, and network handoffs.
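Ably handles ordering and recovery on the wire. On the client it can still help to make rendering idempotent, so that seeing a token twice (for example, if your app replays a window of history it has partly rendered already) never duplicates text. The sketch below assumes a hypothetical token event shape `{ responseId, seq, text }` in which the agent numbers tokens within each response; this is not an AI Transport message schema.

```javascript
// Hypothetical token event shape: { responseId: string, seq: number, text: string },
// where the agent numbers tokens 0, 1, 2, ... within each response.
class ResponseAssembler {
  constructor() {
    this.responses = new Map(); // responseId -> Map(seq -> text)
  }

  // Apply a token event. A seq that has already been seen is ignored,
  // so replayed tokens cannot duplicate text.
  apply({ responseId, seq, text }) {
    let tokens = this.responses.get(responseId);
    if (!tokens) {
      tokens = new Map();
      this.responses.set(responseId, tokens);
    }
    if (!tokens.has(seq)) tokens.set(seq, text);
  }

  // Return the contiguous prefix starting at seq 0, stopping at the first gap,
  // so the UI never shows tokens out of order.
  text(responseId) {
    const tokens = this.responses.get(responseId);
    if (!tokens) return '';
    let out = '';
    for (let seq = 0; tokens.has(seq); seq++) out += tokens.get(seq);
    return out;
  }
}
```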

Multi-device continuity: Conversations are user-scoped, not tab-scoped. Multiple clients can join the same session without split threads, duplication, or drifting state.

Live steering and interruption: Modern AI UX needs control, not just output. Interrupts, redirects, and approvals route through the same bidirectional session fabric as the response stream, so steering works even across reconnects and devices.
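One way to route interrupts through the session is for the agent to subscribe to a control message on the same channel and abort the matching generation with an `AbortController`. The `'interrupt'` message name, its `{ responseId }` payload, and `listenForInterrupts` are hypothetical conventions for illustration; `channel` is any Ably `RealtimeChannel`.

```javascript
// Agent-side sketch: cancel in-flight generations when an interrupt arrives on the
// session channel. The 'interrupt' name and { responseId } payload are hypothetical.
// `channel` is an Ably RealtimeChannel (anything with subscribe(name, listener)).
async function listenForInterrupts(channel) {
  const inFlight = new Map(); // responseId -> AbortController

  // Resolves once the channel is attached; interrupts from any device arrive here.
  await channel.subscribe('interrupt', (message) => {
    const controller = inFlight.get(message.data?.responseId);
    if (controller) controller.abort();
  });

  return {
    // Call when a response starts; the signal aborts if that response is interrupted.
    start(responseId) {
      const controller = new AbortController();
      inFlight.set(responseId, controller);
      return controller.signal;
    },
    // Call when a response ends, whether it completed or was aborted.
    finish(responseId) {
      inFlight.delete(responseId);
    },
  };
}
```

A client on any device steers by publishing to the session, e.g. `channel.publish('interrupt', { responseId: 'r1' })`. Because every subscriber to the channel receives that message, it does not matter which device sent it. Pass the returned `signal` to your model SDK if it accepts one, or check `signal.aborted` inside your streaming loop.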

Presence-aware sessions: Once agents do real work, wasted compute becomes a serious cost problem. Presence provides a reliable signal for whether the user is currently connected (or fully offline across devices), so you can throttle, defer, or resume work accordingly.
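A minimal presence check might look like the following, assuming each of the user’s devices enters presence on the session channel under the user’s `clientId`. `channel.presence.get()` is a standard Ably call; the `'run'`/`'defer'` policy and the helper names are hypothetical.

```javascript
// Sketch: gate expensive agent work on whether the user is present in the session.
// Assumes user devices enter presence with the user's clientId.
// The 'run' / 'defer' policy is a hypothetical example.
function decideWork(members, userClientId) {
  // The user counts as online if any of their devices is present.
  const online = members.some((member) => member.clientId === userClientId);
  return online ? 'run' : 'defer';
}

async function checkUserPresence(channel, userClientId) {
  // presence.get() resolves to the current set of present members.
  const members = await channel.presence.get();
  return decideWork(members, userClientId);
}
```

To react as devices come and go rather than polling, the same check can be re-run from a `channel.presence.subscribe(['enter', 'leave'], ...)` listener.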

Agents that collaborate and act with awareness: As soon as you have more than one agent (or an agent plus tools/workers), coordination becomes the product. Shared session state and routing prevent clashing replies, duplicated context, and “two brains answering at once,” so multiple agents can communicate with users directly and coherently.

Seamless human takeover when it really matters: When an agent hits a boundary (risk, uncertainty, or policy), a human should be able to step in with full context and continue the session immediately. The handoff keeps the same session history and controls, so there are no repeated questions, no “start again,” and no losing track of what happened mid-flight.
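Who should answer next can be modelled as a small piece of shared session state. The sketch assumes hypothetical `'handoff'` and `'release'` control messages published on the session channel; the human joins by reading the existing history (for example with `channel.history()`) and then publishes on the same channel, so the transcript is never restarted.

```javascript
// Sketch: derive the current responder from control events on the session channel.
// Hypothetical control messages:
//   { name: 'handoff', data: { to: 'human:alice' } }  -> a human takes over
//   { name: 'release' }                               -> control returns to the agent
// Any other message leaves the controller unchanged.
function reduceController(controller, event) {
  switch (event.name) {
    case 'handoff':
      return event.data?.to ?? controller;
    case 'release':
      return 'agent';
    default:
      return controller;
  }
}

// The agent checks this before generating and stays quiet while a human has control.
function agentShouldReply(controlEvents) {
  return controlEvents.reduce(reduceController, 'agent') === 'agent';
}
```

Given the same sequence of control events, the agent and the human reach the same answer about who holds the conversation, while the session history itself is left untouched.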

Identity and access control: Beyond toy demos, you need to know who can read, write, steer, or approve actions. Verified identity plus fine-grained permissions let multi-party sessions stay secure without inventing a bespoke access model.
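As a sketch of per-role access, the helper below maps hypothetical roles to Ably capabilities for one session channel. Ably capabilities are granted per channel and per operation (`publish`, `subscribe`, `presence`, `history`), so at this level the sketch only separates readers from writers; finer rules such as who may approve an action would need additional design, for example a separate control channel.

```javascript
// Sketch: hypothetical session roles mapped to Ably capability operations.
const ROLE_OPERATIONS = {
  viewer: ['subscribe', 'history'],
  user: ['subscribe', 'publish', 'presence', 'history'],
  agent: ['subscribe', 'publish', 'presence', 'history'],
};

// Build a capability object scoped to a single session channel.
function capabilityFor(role, channelName) {
  const operations = ROLE_OPERATIONS[role];
  if (!operations) throw new Error(`unknown role: ${role}`);
  // Copy the array so callers cannot mutate the shared role table.
  return { [channelName]: [...operations] };
}
```

On your server, the result can be passed to a token request bound to a verified `clientId`, for example `rest.auth.createTokenRequest({ clientId: 'user-1', capability: JSON.stringify(capabilityFor('user', 'session:conv-123')) })`, so the client can act on that session only with the rights its role allows.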

Observability and governance: When AI UX breaks in production, it’s rarely obvious where. Built-in visibility into session delivery and continuity makes failures diagnosable and auditable instead of “black box streaming incidents.”

Concrete examples

Multi-device copilots: A user starts a long-running answer on desktop, switches to mobile mid-response, and the session continues without restarting. Steering and approvals apply to the same session regardless of device.

Long-running agents: A research agent runs multi-step tool work for minutes. If the user disconnects, the work continues; when the user returns, the client hydrates from session history instead of resetting.

Getting started (low friction)

You can get a basic session running in minutes. On the agent side, publishing a streamed response into a channel looks like this:

```javascript
import Ably from 'ably';

// Initialize Ably Realtime client
const realtime = new Ably.Realtime({ key: 'API_KEY' });

// Create a channel for publishing streamed AI responses
const channel = realtime.channels.get('my-channel');

// Publish initial message and capture the serial for appending tokens
const { serials: [msgSerial] } = await channel.publish({ name: 'response', data: '' });

// `stream` is your model's streaming response; this example assumes
// it yields events like { type: 'token', text: 'Hello' }
for await (const event of stream) {
  // Append each token to the initial message as it arrives
  if (event.type === 'token') {
    channel.appendMessage({ serial: msgSerial, data: event.text });
  }
}
```

What to do next

Sign up for a free developer account and follow our guides to set up a publisher and subscriber locally, then test refresh, reconnect, and device-switch behaviour. That’s where you’ll feel the value immediately.